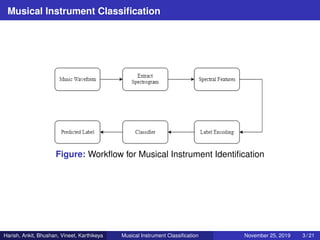

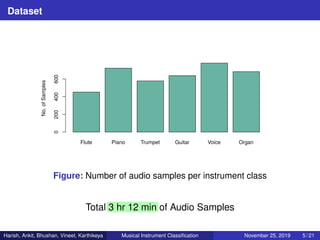

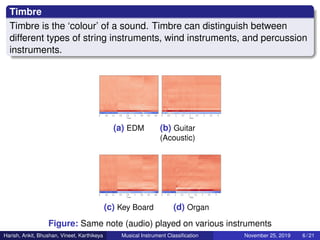

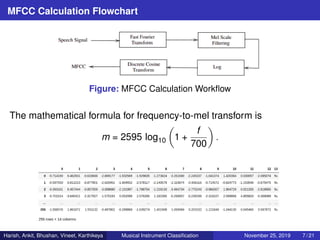

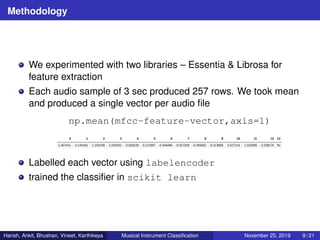

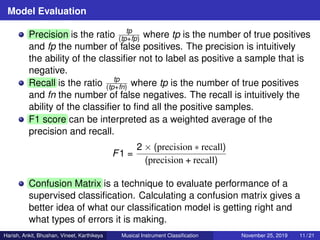

The document describes a study on classifying musical instruments from spectral features extracted from audio samples. Several supervised machine learning classifiers (logistic regression, decision trees, LightGBM, XGBoost, random forest, and SVM) were tested on an annotated dataset containing samples of 11 instruments. Evaluated with precision, recall, F1 score, and confusion matrices, LightGBM, XGBoost, and SVM performed best, achieving F1 scores above 0.7 for most instruments. Unsupervised clustering with K-means was not effective for this task.
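
To make the evaluation metrics concrete, the following is a minimal sketch of how per-instrument precision, recall, and F1 can be derived from a confusion matrix. It is not the study's code: the instrument names, the labels, the predictions, and the helper names `confusion_matrix` and `per_class_scores` are all hypothetical and chosen only for illustration.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Build a matrix whose rows are true labels and columns are predicted labels."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for true_label, predicted_label in zip(y_true, y_pred):
        matrix[index[true_label]][index[predicted_label]] += 1
    return matrix


def per_class_scores(matrix, labels):
    """Compute precision, recall, and F1 for each class from the confusion matrix."""
    scores = {}
    for i, label in enumerate(labels):
        true_positives = matrix[i][i]
        predicted_total = sum(row[i] for row in matrix)  # column sum
        actual_total = sum(matrix[i])                    # row sum
        precision = true_positives / predicted_total if predicted_total else 0.0
        recall = true_positives / actual_total if actual_total else 0.0
        denominator = precision + recall
        f1 = 2 * precision * recall / denominator if denominator else 0.0
        scores[label] = {"precision": precision, "recall": recall, "f1": f1}
    return scores


# Hypothetical ground truth and classifier output (not data from the study).
labels = ["flute", "guitar", "piano"]
y_true = ["flute", "flute", "guitar", "guitar", "guitar", "piano", "piano", "piano"]
y_pred = ["flute", "guitar", "guitar", "guitar", "piano", "piano", "piano", "flute"]

matrix = confusion_matrix(y_true, y_pred, labels)
scores = per_class_scores(matrix, labels)

for label in labels:
    s = scores[label]
    print(f"{label}: precision={s['precision']:.2f} recall={s['recall']:.2f} f1={s['f1']:.2f}")
```

Reading the matrix row by row shows which instruments are confused with each other, which a single aggregate score would hide. A per-class F1 threshold such as 0.7 can then be checked instrument by instrument.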