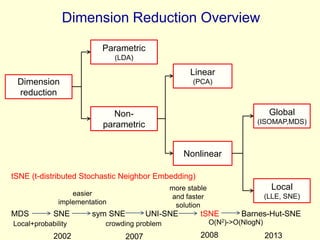

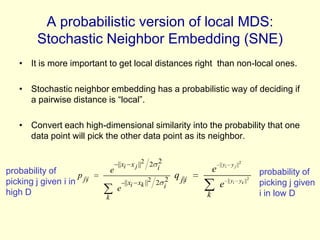

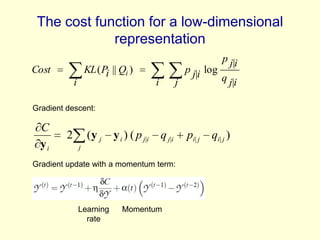

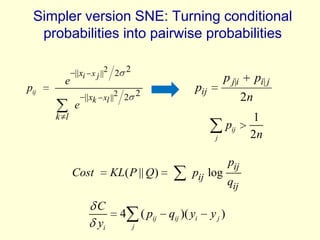

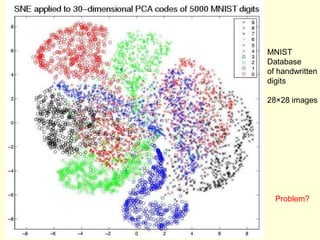

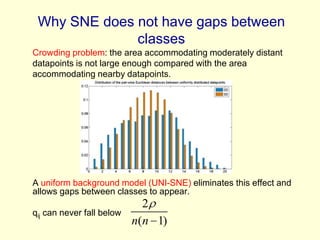

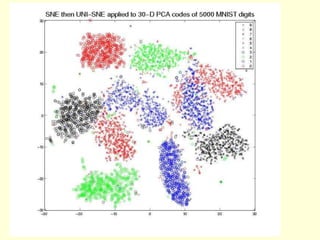

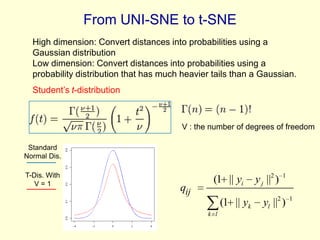

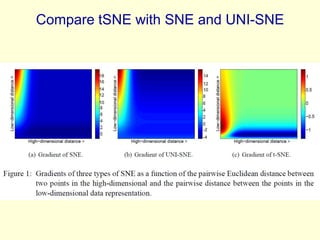

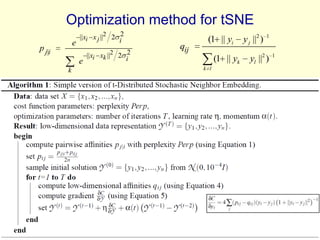

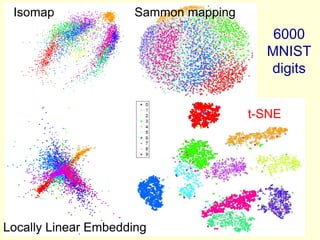

This document discusses t-Distributed Stochastic Neighbor Embedding (t-SNE), a technique for visualizing high-dimensional data. It begins with an overview of dimension reduction techniques before focusing on t-SNE. t-SNE improves on Stochastic Neighbor Embedding (SNE): it converts pairwise similarities between data points into joint probabilities and minimizes the Kullback-Leibler divergence between the joint distribution in the high-dimensional space and the corresponding distribution in the low-dimensional embedding. The document explains how t-SNE addresses the "crowding problem" by using a heavy-tailed Student's t-distribution for similarities in the low-dimensional space, which lets dissimilar points be placed farther apart and so separates clusters more clearly. Optimization methods for t-SNE are also covered.
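The pipeline summarized above can be sketched in a few lines of NumPy. This is a minimal, non-authoritative sketch, not the method from the slides. It assumes Gaussian conditional similarities calibrated to a target perplexity by binary search, symmetrized as p_ij = (p_{j|i} + p_{i|j}) / 2n, a Student-t kernel with one degree of freedom for q_ij, and plain gradient descent with momentum on KL(P || Q). All function names (`high_dim_affinities`, `low_dim_affinities`, `kl_divergence`, `kl_gradient`, `tsne`) and the default `perplexity`, `learning_rate` and `momentum` values are hypothetical choices for small toy data. Practical implementations add further optimization tricks (for example early exaggeration) that are omitted here.

```python
import numpy as np


def _sq_dists(Z):
    """Pairwise squared Euclidean distances between the rows of Z."""
    sq = np.sum(Z ** 2, axis=1)
    return np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)


def high_dim_affinities(X, perplexity=5.0, tol=1e-5, max_iter=50):
    """Joint probabilities p_ij built from Gaussian conditionals p_{j|i}.

    Hypothetical helper: beta_i = 1 / (2 sigma_i^2) is found per point by
    binary search so that exp(entropy of p_{.|i}) matches `perplexity`.
    """
    n = X.shape[0]
    D = _sq_dists(X)
    P_cond = np.zeros((n, n))
    target = np.log(perplexity)  # entropy target in nats
    for i in range(n):
        d = np.delete(D[i], i)  # squared distances to the other n-1 points
        beta, lo, hi = 1.0, 0.0, np.inf
        for _ in range(max_iter):
            w = np.exp(-(d - d.min()) * beta)  # shift by min for stability
            p = w / w.sum()
            H = -np.sum(p * np.log(np.maximum(p, 1e-12)))
            if abs(H - target) < tol:
                break
            if H > target:  # distribution too flat: shrink sigma_i
                lo = beta
                beta = beta * 2.0 if hi == np.inf else (beta + hi) / 2.0
            else:  # distribution too peaked: widen sigma_i
                hi = beta
                beta = (beta + lo) / 2.0
        P_cond[i, np.arange(n) != i] = p
    # Symmetrize: p_ij = (p_{j|i} + p_{i|j}) / (2n), so P sums to 1.
    return (P_cond + P_cond.T) / (2.0 * n)


def low_dim_affinities(Y):
    """Student-t (one degree of freedom) joint probabilities q_ij."""
    num = 1.0 / (1.0 + _sq_dists(Y))  # heavy-tailed kernel eases crowding
    np.fill_diagonal(num, 0.0)
    return num / num.sum(), num


def kl_divergence(P, Q, eps=1e-12):
    """KL(P || Q), summed over pairs with p_ij > 0."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / np.maximum(Q[mask], eps))))


def kl_gradient(P, Q, num, Y):
    """dC/dy_i = 4 * sum_j (p_ij - q_ij) (y_i - y_j) / (1 + ||y_i - y_j||^2)."""
    W = (P - Q) * num
    return 4.0 * ((np.diag(W.sum(axis=1)) - W) @ Y)


def tsne(X, n_components=2, perplexity=5.0, n_iter=300,
         learning_rate=10.0, momentum=0.8, seed=0):
    """Gradient descent with momentum on KL(P || Q); no early exaggeration."""
    rng = np.random.default_rng(seed)
    P = high_dim_affinities(X, perplexity)
    Y = 1e-4 * rng.standard_normal((X.shape[0], n_components))  # tiny random init
    velocity = np.zeros_like(Y)
    for _ in range(n_iter):
        Q, num = low_dim_affinities(Y)
        velocity = momentum * velocity - learning_rate * kl_gradient(P, Q, num, Y)
        Y = Y + velocity
    Q, _ = low_dim_affinities(Y)
    return Y, kl_divergence(P, Q)
```

For instance, calling `Y, kl = tsne(X)` on a small array `X` of shape `(n, d)` returns an `(n, 2)` embedding and the final KL divergence. The only change from a Gaussian low-dimensional kernel is the `1 / (1 + distance²)` term in `low_dim_affinities`: its heavy tail makes it cheap to place moderately dissimilar pairs far apart, which is the mechanism t-SNE uses against the crowding problem.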