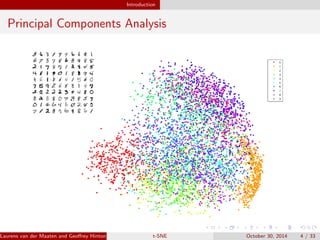

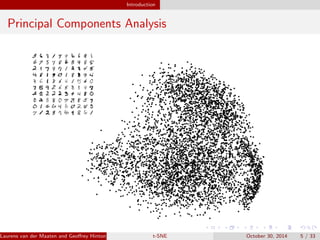

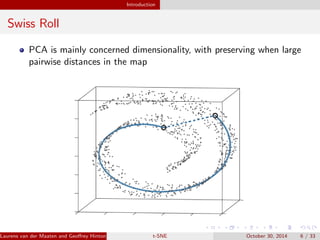

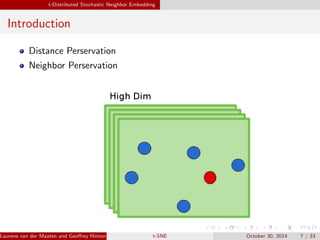

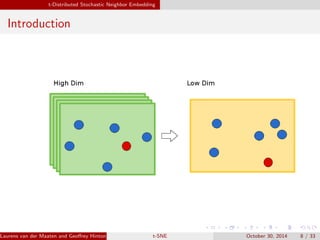

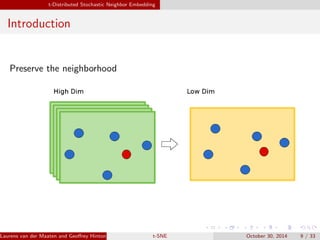

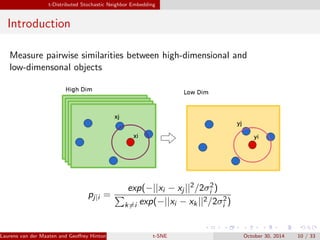

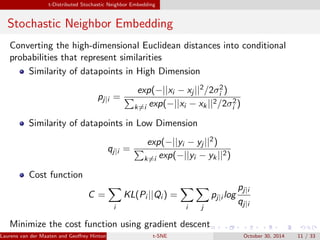

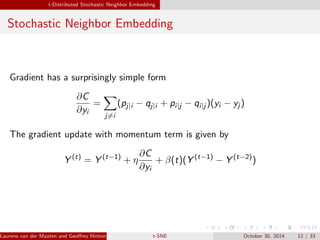

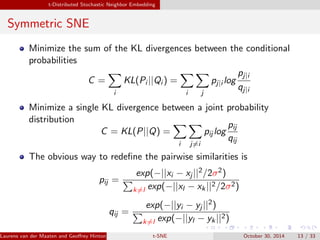

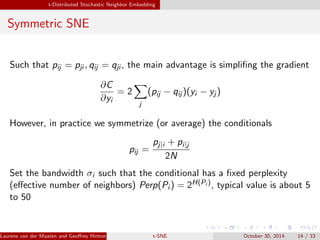

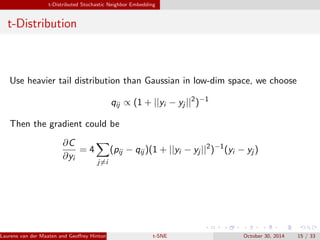

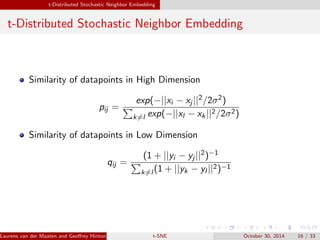

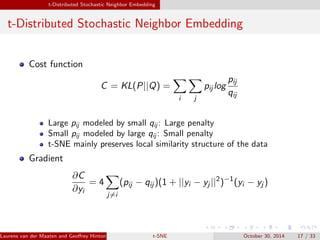

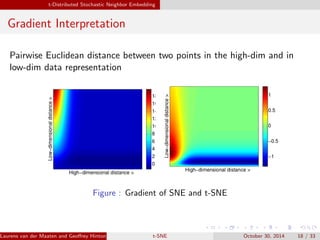

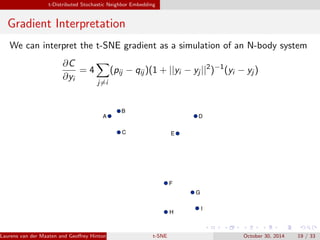

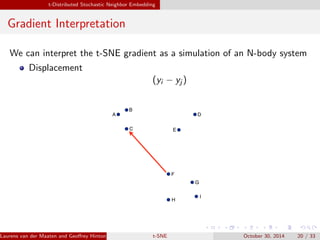

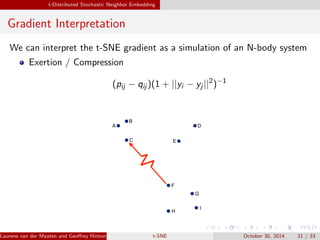

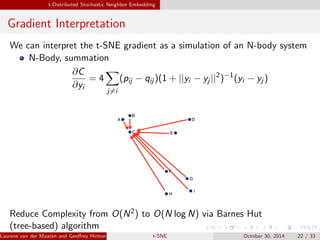

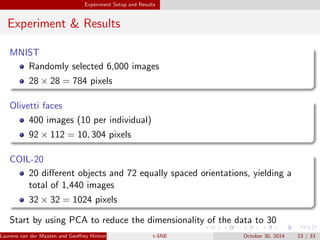

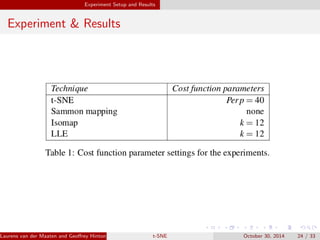

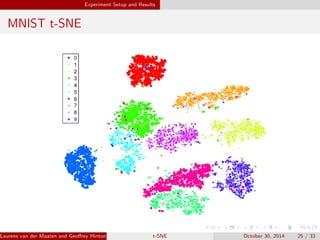

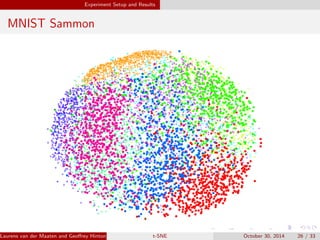

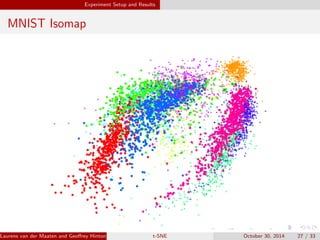

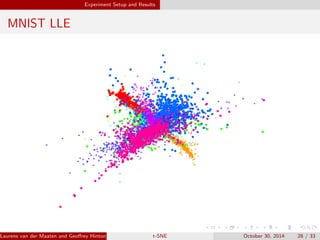

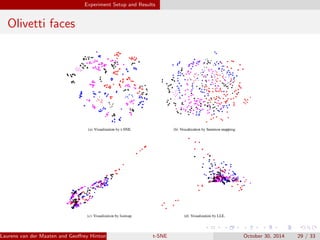

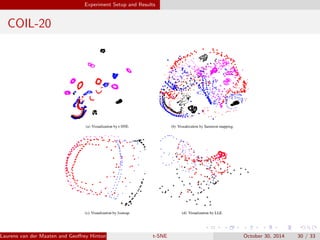

The document presents an overview of t-distributed Stochastic Neighbor Embedding (t-SNE), a technique for visualizing high-dimensional data in a low-dimensional space, typically two or three dimensions. It describes the method, which preserves local similarities between data points by minimizing a cost function through gradient descent. The document also includes experimental results and resources for implementing t-SNE in various programming environments.
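To make the summary concrete, the following is a minimal pure-Python sketch of the idea, not the implementation the slides refer to. It assumes the standard t-SNE formulation: Gaussian similarities in the input space, Student-t similarities in the embedding, and the Kullback-Leibler divergence as the cost. As a simplification it uses one fixed bandwidth `sigma` instead of a per-point perplexity search, and plain gradient descent without momentum or early exaggeration. All function names and parameters (`tsne_sketch`, `sigma`, `lr`, `steps`) are hypothetical and chosen only for illustration.

```python
import math
import random


def pairwise_sq_dists(points):
    """Squared Euclidean distance between every pair of points."""
    n = len(points)
    return [[sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
             for j in range(n)] for i in range(n)]


def high_dim_affinities(X, sigma=1.0):
    """Symmetric joint probabilities P from Gaussian conditionals.

    Assumption: one fixed sigma for all points (no perplexity search).
    """
    n = len(X)
    D = pairwise_sq_dists(X)
    cond = []
    for i in range(n):
        row = [math.exp(-D[i][j] / (2.0 * sigma ** 2)) if j != i else 0.0
               for j in range(n)]
        total = sum(row)
        cond.append([v / total for v in row])
    return [[(cond[i][j] + cond[j][i]) / (2.0 * n) for j in range(n)]
            for i in range(n)]


def low_dim_affinities(Y):
    """Joint probabilities Q from a Student-t kernel, plus the raw kernel W."""
    n = len(Y)
    D = pairwise_sq_dists(Y)
    W = [[1.0 / (1.0 + D[i][j]) if i != j else 0.0 for j in range(n)]
         for i in range(n)]
    total = sum(sum(row) for row in W)
    Q = [[w / total for w in row] for row in W]
    return Q, W


def kl_cost(P, Q, eps=1e-12):
    """Kullback-Leibler divergence KL(P || Q), skipping zero entries of P."""
    return sum(p * math.log((p + eps) / (q + eps))
               for prow, qrow in zip(P, Q)
               for p, q in zip(prow, qrow) if p > 0)


def gradient(P, Q, W, Y):
    """Gradient of the KL cost with respect to each embedded point."""
    n, d = len(Y), len(Y[0])
    G = [[0.0] * d for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            coeff = 4.0 * (P[i][j] - Q[i][j]) * W[i][j]
            for k in range(d):
                G[i][k] += coeff * (Y[i][k] - Y[j][k])
    return G


def tsne_sketch(X, dim=2, steps=200, lr=1.0, seed=0):
    """Embed X into `dim` dimensions with plain gradient descent.

    Returns the embedding and the cost recorded before each update.
    """
    rng = random.Random(seed)
    Y = [[rng.gauss(0.0, 1e-2) for _ in range(dim)] for _ in X]
    P = high_dim_affinities(X)
    costs = []
    for _ in range(steps):
        Q, W = low_dim_affinities(Y)
        costs.append(kl_cost(P, Q))
        G = gradient(P, Q, W, Y)
        Y = [[y - lr * g for y, g in zip(yrow, grow)]
             for yrow, grow in zip(Y, G)]
    return Y, costs


# Toy input: two small, well-separated clusters in three dimensions.
X = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, 0.5, 0.0),
     (5.0, 5.0, 5.0), (5.5, 5.0, 5.0), (5.0, 5.5, 5.0)]
Y, costs = tsne_sketch(X)
print("first cost:", costs[0], "last cost:", costs[-1])
```

On this toy input the recorded cost should fall as the two clusters move apart in the embedding. For real data, an established implementation (for example the `TSNE` class in scikit-learn) is the better choice, since it handles perplexity calibration and the optimization details that this sketch leaves out.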