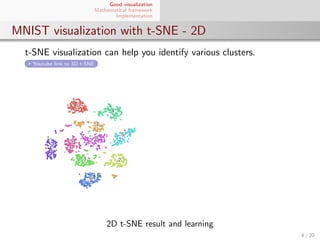

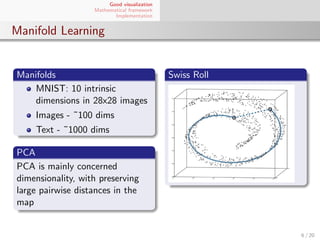

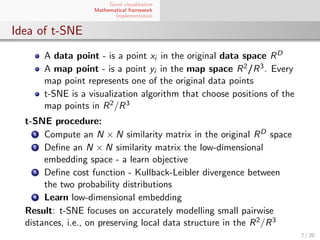

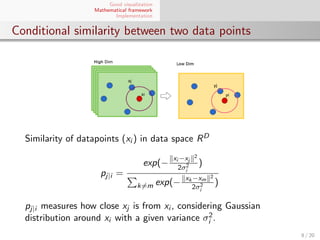

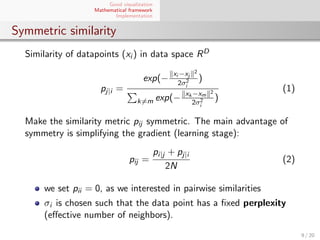

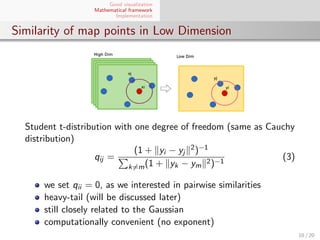

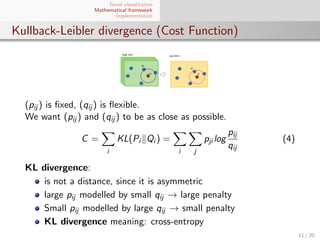

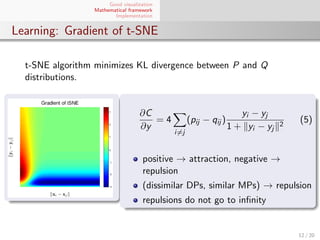

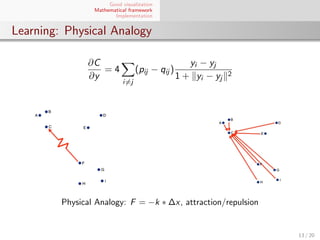

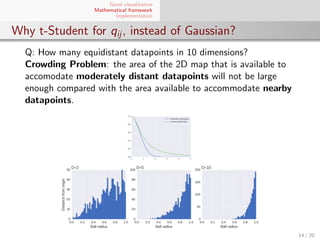

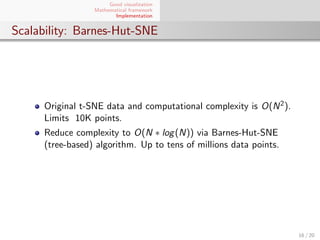

The document presents a mathematical framework for data visualization with the t-SNE algorithm, with emphasis on its application to high-dimensional datasets. It explains how t-SNE preserves the local structure of the data while reducing dimensionality, and it discusses implementations of the algorithm and how they scale to large datasets. It also covers convergence and learning, which proceed by minimizing the Kullback-Leibler divergence between the similarity distribution of the original data and that of the low-dimensional embedding.
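
To make the objective concrete, here is a minimal sketch of the cost that t-SNE minimizes, written in plain Python. It is an illustration under stated assumptions, not the document's implementation: the function names are hypothetical, a single fixed `sigma` stands in for the per-point bandwidths that t-SNE normally tunes to a target perplexity, and the toy points and embeddings are made up for the example.

```python
import math

def squared_distances(points):
    """Pairwise squared Euclidean distances as a nested list."""
    return [[sum((a - b) ** 2 for a, b in zip(p, q)) for q in points]
            for p in points]

def high_dim_affinities(points, sigma=1.0):
    """Symmetrized Gaussian affinities P in the original space.

    Assumption: one fixed sigma for every point. t-SNE proper searches
    for a per-point sigma that matches a chosen perplexity.
    """
    n = len(points)
    d2 = squared_distances(points)
    cond = []
    for i in range(n):
        row = [math.exp(-d2[i][j] / (2 * sigma ** 2)) if i != j else 0.0
               for j in range(n)]
        total = sum(row)
        cond.append([v / total for v in row])  # conditional p(j | i)
    # Symmetrize so that P sums to 1 over all ordered pairs.
    return [[(cond[i][j] + cond[j][i]) / (2 * n) for j in range(n)]
            for i in range(n)]

def low_dim_affinities(embedding):
    """Student-t (one degree of freedom) affinities Q in the embedding."""
    n = len(embedding)
    d2 = squared_distances(embedding)
    w = [[1.0 / (1.0 + d2[i][j]) if i != j else 0.0 for j in range(n)]
         for i in range(n)]
    total = sum(sum(row) for row in w)
    return [[v / total for v in row] for row in w]

def kl_divergence(p, q, eps=1e-12):
    """KL(P || Q) over off-diagonal pairs; eps guards against log(0)."""
    n = len(p)
    return sum(p[i][j] * math.log((p[i][j] + eps) / (q[i][j] + eps))
               for i in range(n) for j in range(n) if i != j)

# Toy data (made up): two tight pairs of points, far apart in 3-D.
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 5.0, 5.0), (5.1, 5.0, 5.0)]
good = [(0.0, 0.0), (0.1, 0.0), (3.0, 3.0), (3.1, 3.0)]  # neighbors stay together
bad = [(0.0, 0.0), (3.0, 3.0), (0.1, 0.0), (3.1, 3.0)]   # neighbors are split

P = high_dim_affinities(points)
kl_good = kl_divergence(P, low_dim_affinities(good))
kl_bad = kl_divergence(P, low_dim_affinities(bad))
print(kl_good < kl_bad)
```

The embedding that keeps each point next to its original neighbor yields the lower cost, which is the sense in which minimizing this divergence preserves local structure. A full t-SNE run would additionally move the embedding points by gradient descent on this cost; that step is omitted here.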