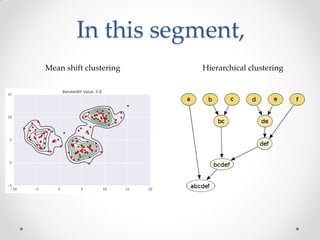

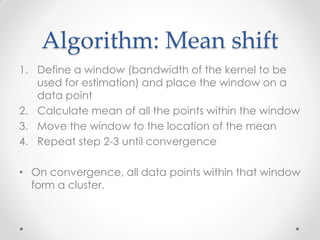

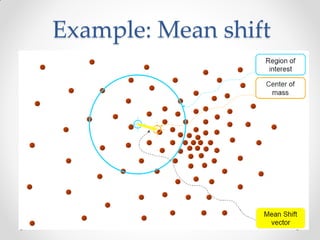

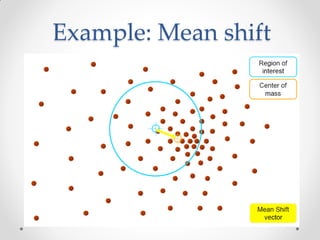

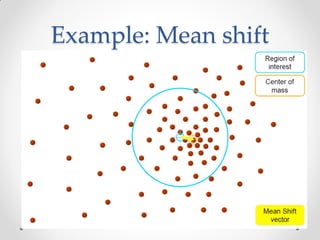

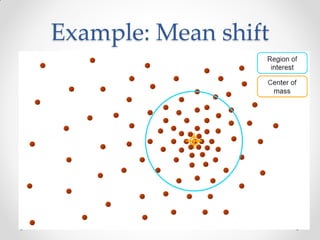

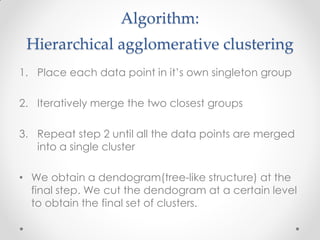

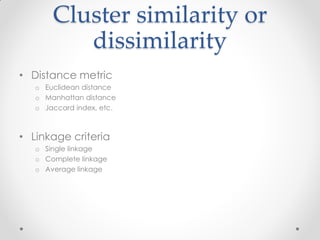

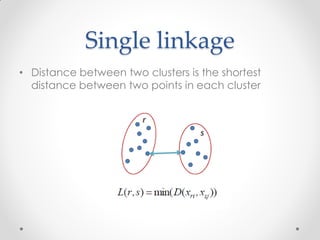

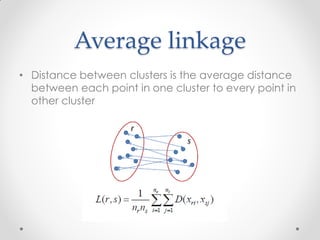

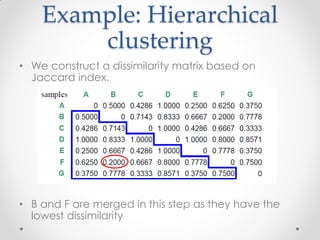

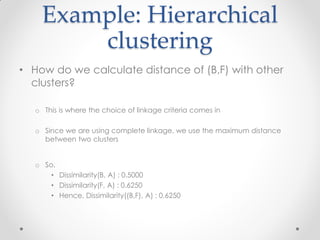

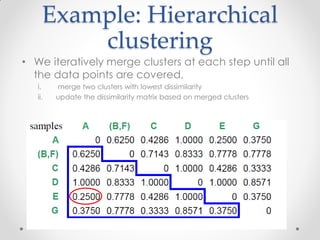

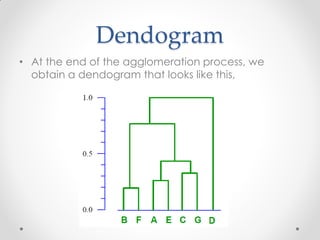

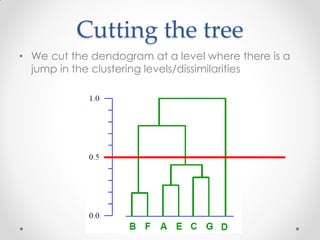

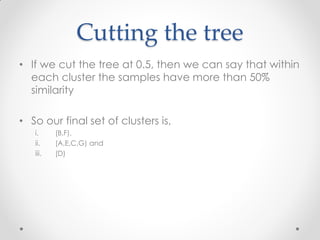

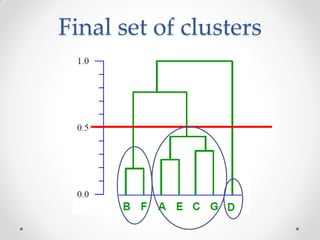

Mean shift clustering finds clusters by locating the peaks (modes) of the estimated probability density function of the data. It iteratively shifts each point toward the mean of the points in its neighborhood until the shifts converge; points that converge to the same peak form one cluster. Hierarchical clustering builds a hierarchy of clusters step by step, by either merging or splitting clusters at each step. There are two types: divisive, which starts with all points in one cluster and splits it, and agglomerative, which merges clusters. Agglomerative clustering starts with each point as its own cluster and repeatedly merges the closest pair of clusters until all points belong to a single cluster, where the distance between two clusters is defined by a chosen linkage method such as complete or average linkage. The choice of distance metric and linkage method affects the resulting clusters.
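The two procedures described here can be illustrated with a minimal pure-Python sketch. It is not taken from the slides. It assumes Euclidean distance, a flat (uniform) kernel for mean shift, and a naive search for the closest pair in the agglomerative step. The names `mean_shift`, `agglomerative`, `bandwidth`, and `n_clusters` are hypothetical, chosen only for illustration, and the agglomerative function stops at a requested number of clusters rather than merging all the way down to one.

```python
import math


def mean_shift(points, bandwidth, max_iter=100, tol=1e-6):
    """Flat-kernel mean shift (illustrative sketch).

    Each point is shifted repeatedly to the mean of the original points
    lying within `bandwidth` of its current position.
    """
    modes = [tuple(p) for p in points]
    for _ in range(max_iter):
        largest_shift = 0.0
        shifted = []
        for m in modes:
            neighbors = [p for p in points if math.dist(m, p) <= bandwidth]
            if not neighbors:  # defensive guard; keep the position unchanged
                shifted.append(m)
                continue
            mean = tuple(sum(axis) / len(neighbors) for axis in zip(*neighbors))
            largest_shift = max(largest_shift, math.dist(m, mean))
            shifted.append(mean)
        modes = shifted
        if largest_shift < tol:
            break

    # Points whose converged positions nearly coincide share a cluster.
    # The bandwidth / 2 merge threshold is an assumption of this sketch.
    centers, labels = [], []
    for m in modes:
        for i, c in enumerate(centers):
            if math.dist(m, c) < bandwidth / 2:
                labels.append(i)
                break
        else:
            centers.append(m)
            labels.append(len(centers) - 1)
    return labels, centers


def agglomerative(points, n_clusters, linkage="average"):
    """Naive agglomerative clustering with complete or average linkage.

    Returns a list of clusters, each a list of indices into `points`.
    """
    if linkage not in ("complete", "average"):
        raise ValueError("linkage must be 'complete' or 'average'")

    def cluster_distance(a, b):
        dists = [math.dist(points[i], points[j]) for i in a for j in b]
        if linkage == "complete":
            return max(dists)          # farthest pair across the two clusters
        return sum(dists) / len(dists)  # mean over all cross-cluster pairs

    clusters = [[i] for i in range(len(points))]  # every point starts alone
    while len(clusters) > n_clusters:
        # Find the closest pair of clusters and merge them.
        _, a, b = min(
            (cluster_distance(clusters[a], clusters[b]), a, b)
            for a in range(len(clusters))
            for b in range(a + 1, len(clusters))
        )
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters


if __name__ == "__main__":
    data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(mean_shift(data, bandwidth=3.0))
    print(agglomerative(data, n_clusters=2, linkage="complete"))
```

In this sketch, `bandwidth` controls how many peaks mean shift finds, while switching `linkage` between `"complete"` and `"average"` changes which pair of clusters counts as closest, which is how the choice of linkage can alter the final grouping.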