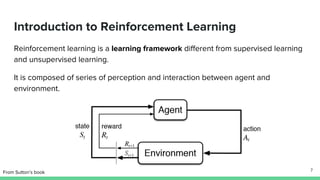

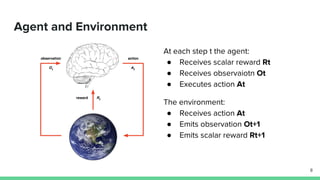

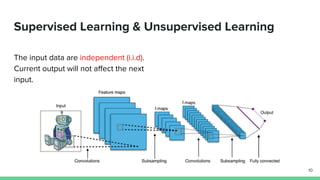

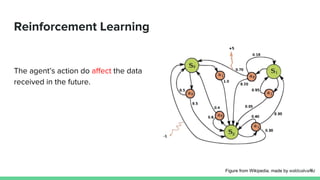

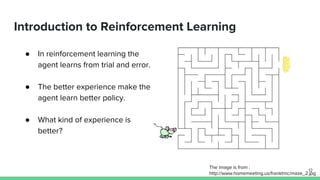

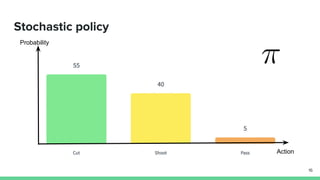

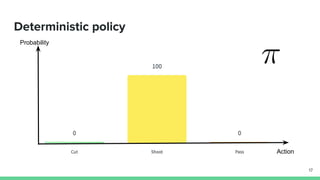

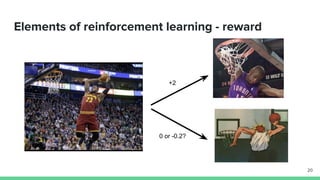

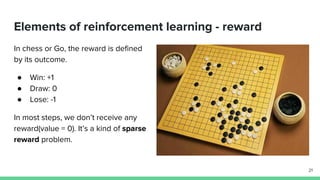

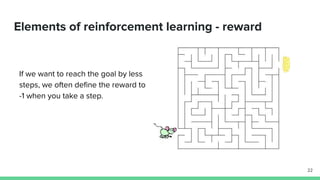

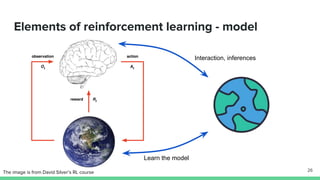

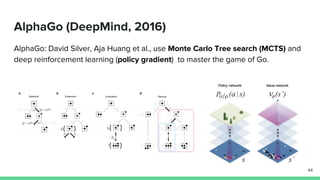

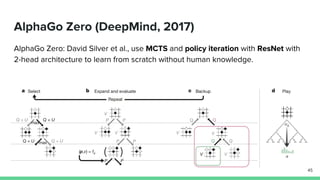

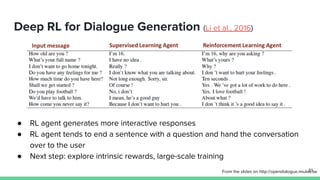

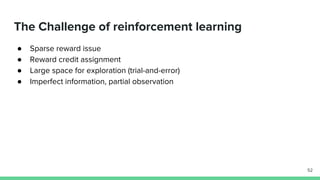

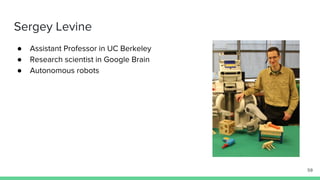

This document introduces reinforcement learning. It opens with a syllabus covering Markov decision processes, dynamic programming, Monte Carlo methods, temporal-difference learning, deep reinforcement learning, and active research areas. It then defines the key elements of reinforcement learning: policies, reward signals, value functions, and models of the environment. The document also surveys the history and applications of the field, highlighting seminal work in backgammon, helicopter control, Atari games, Go, and dialogue generation. It concludes by noting open challenges and the prominent researchers working on them.