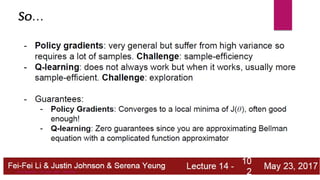

This document is a presentation on reinforcement learning by Niloofar Sedighian of the CS Department at SBU University in Tehran, Iran, dated June 2020. It covers key topics in reinforcement learning, including the agent-environment framework, Markov decision processes, Q-learning, policy gradients, deep Q-networks, experience replay, and actor-critic methods. Examples throughout illustrate these concepts, such as Google DeepMind's use of reinforcement learning to train agents that learn to walk, run, and jump without being explicitly programmed to do so.