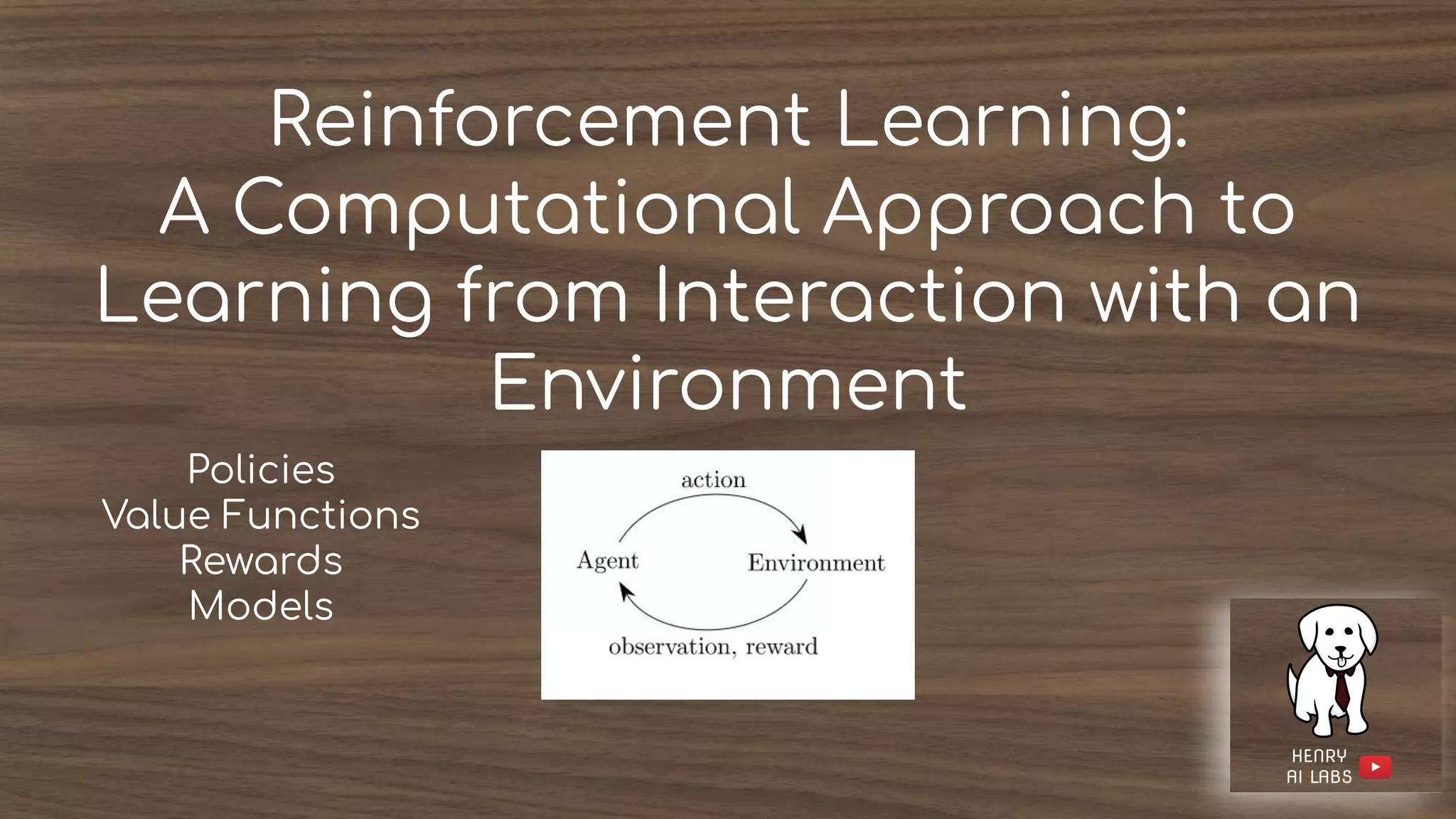

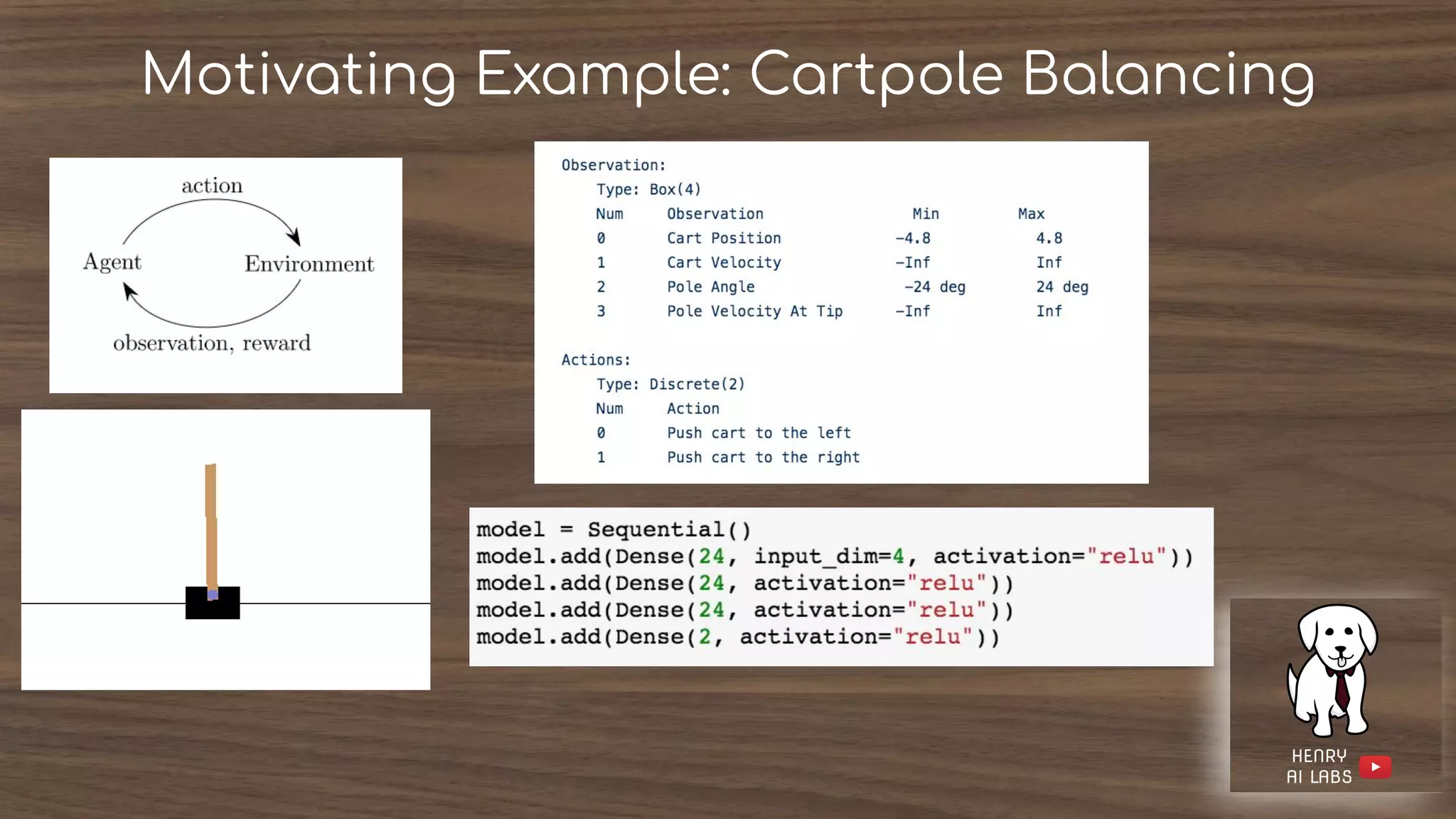

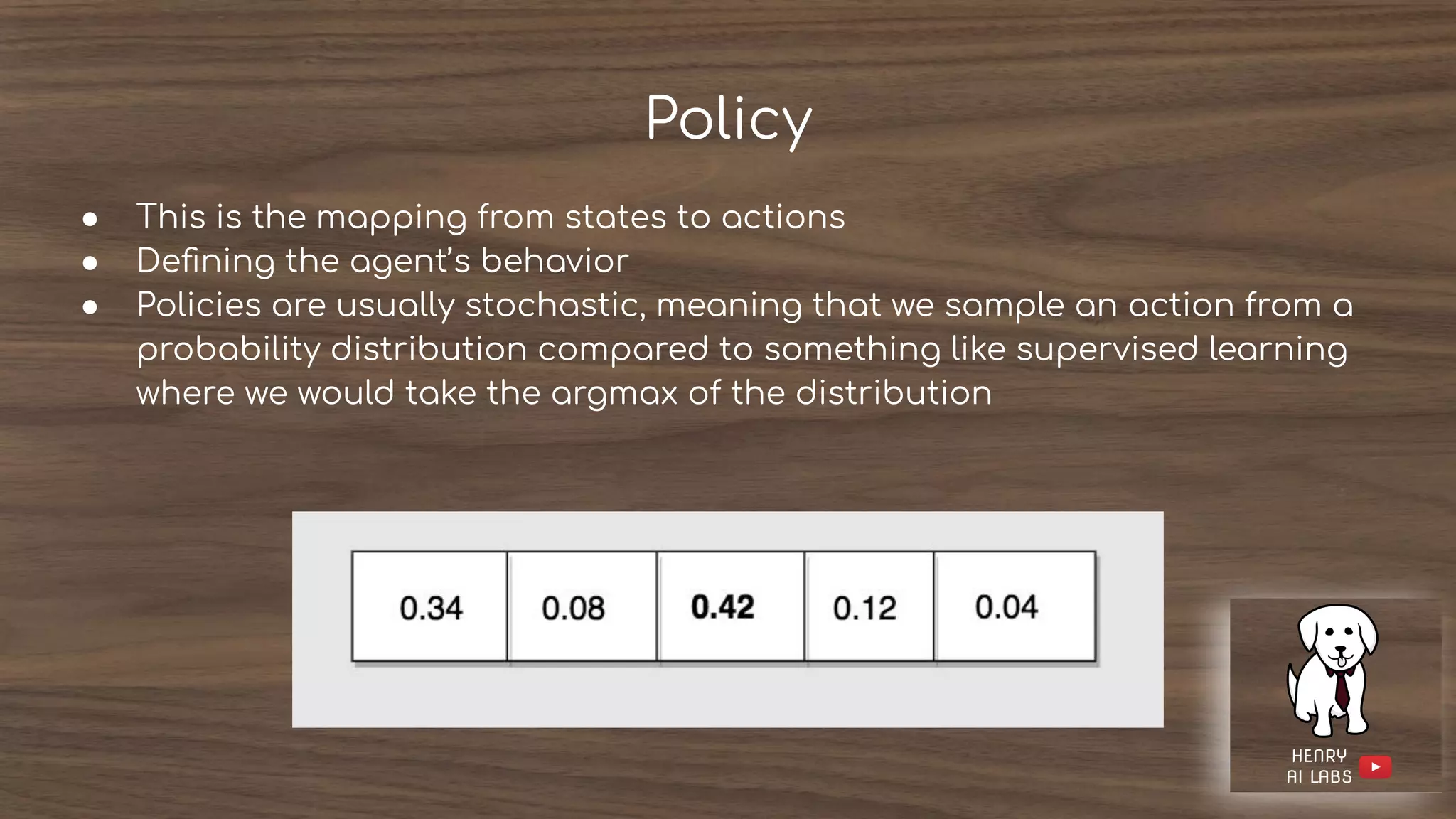

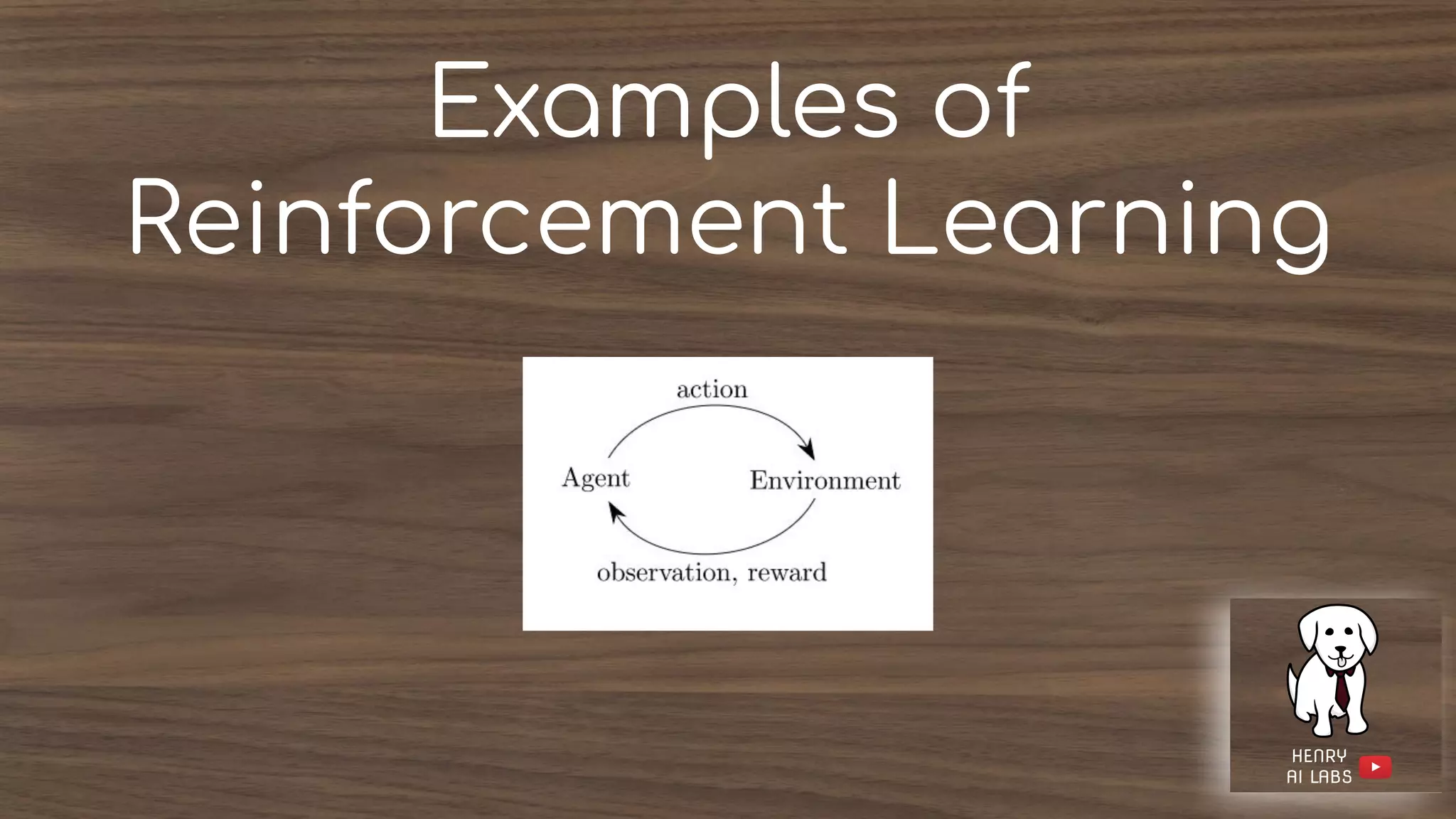

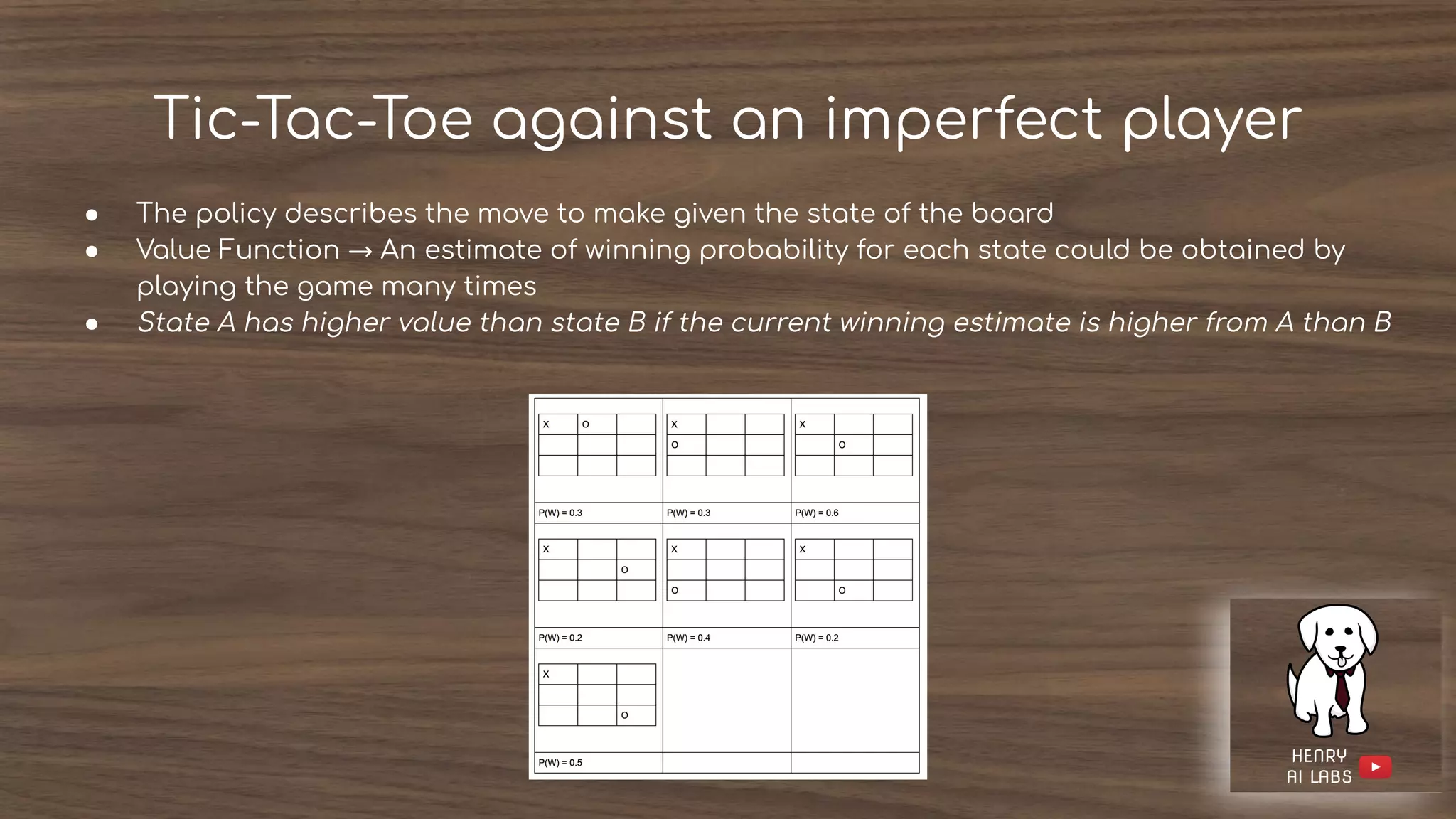

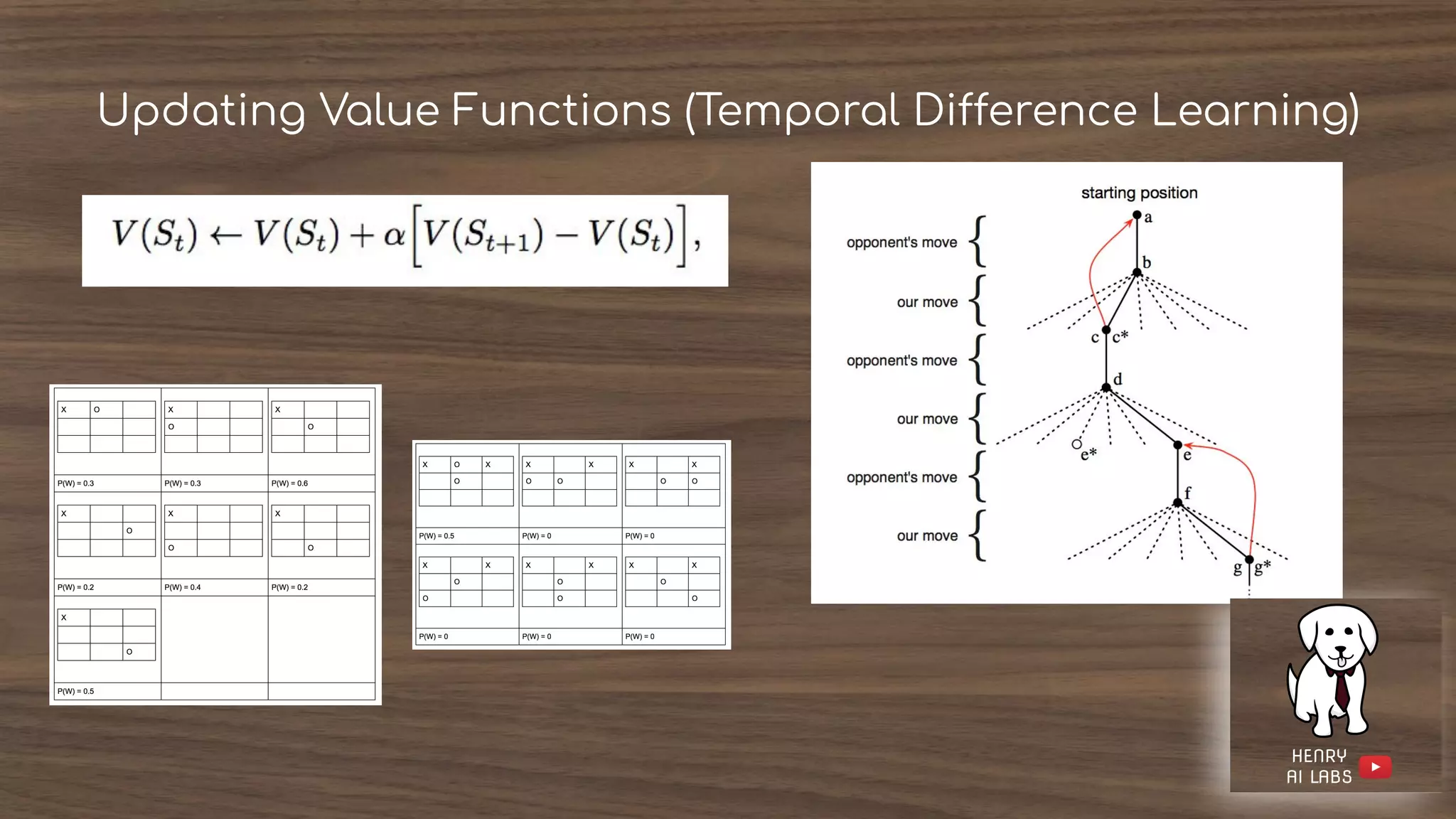

The document introduces reinforcement learning (RL) through its core components: a policy, a reward signal, a value function, and, optionally, a model of the environment. It highlights key challenges such as the trade-off between exploration and exploitation, and explains why estimating values is central to effective decision-making. Examples of RL applications, including games and adaptive systems, illustrate both the complexity and the practical utility of RL in real-world scenarios.
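To make the interplay of these components concrete, here is a minimal sketch. It assumes a simple k-armed bandit setting, which is not taken from the figures above. An epsilon-greedy policy chooses actions. Rewards are noisy samples around hypothetical per-arm means, passed in as `true_means`. The value function is a table of sample-average estimates that is updated incrementally. The parameter `epsilon` controls how often the agent explores instead of exploiting its current best estimate. The function name `epsilon_greedy_bandit` and all of its parameters are illustrative assumptions.

```python
import random


def epsilon_greedy_bandit(true_means, steps=1000, epsilon=0.1, seed=0):
    """Estimate action values on a k-armed bandit with an epsilon-greedy policy.

    Illustrative sketch only: `true_means` are hypothetical expected rewards
    per arm, hidden from the agent, which only observes sampled rewards.
    Returns (estimates, counts, total_reward).
    """
    rng = random.Random(seed)
    k = len(true_means)
    estimates = [0.0] * k  # value function: estimated Q(a) for each action
    counts = [0] * k       # number of times each action has been taken
    total_reward = 0.0

    for _ in range(steps):
        # Policy: explore uniformly with probability epsilon, else exploit.
        if rng.random() < epsilon:
            action = rng.randrange(k)
        else:
            best = max(estimates)
            # Break ties randomly among the actions with the highest estimate.
            action = rng.choice([a for a in range(k) if estimates[a] == best])

        # Reward signal: a Gaussian sample (std 1.0) around the arm's true mean.
        reward = rng.gauss(true_means[action], 1.0)
        total_reward += reward

        # Incremental sample-average update: Q <- Q + (R - Q) / n
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts, total_reward


if __name__ == "__main__":
    estimates, counts, total = epsilon_greedy_bandit([0.2, 1.0, 0.5], steps=5000)
    print("estimates:", [round(q, 2) for q in estimates])
    print("counts:", counts)
```

Setting `epsilon=0` makes the agent purely greedy. It can then lock onto whichever arm looked best early on, which shows why some exploration is needed for the value estimates to become accurate.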