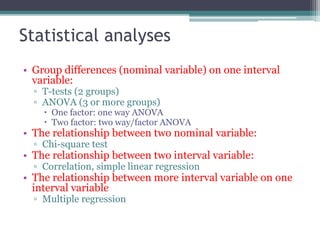

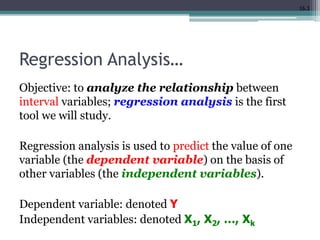

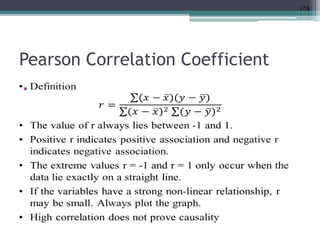

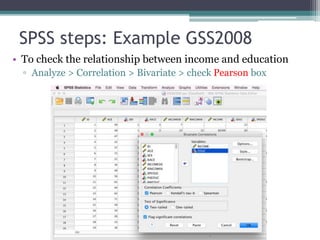

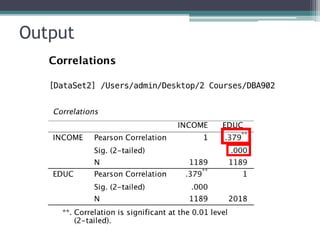

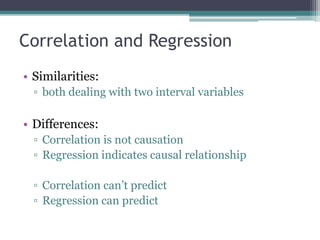

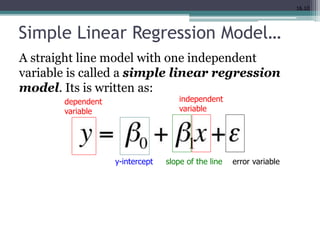

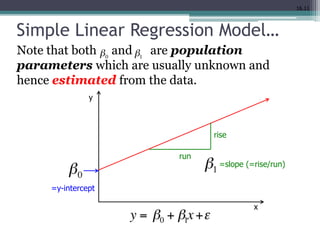

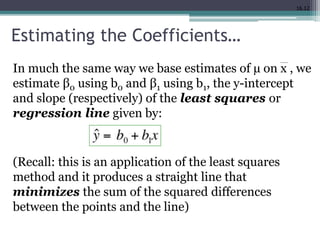

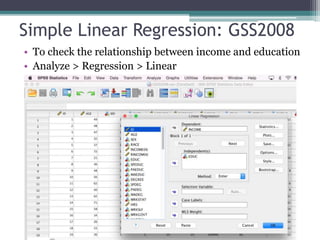

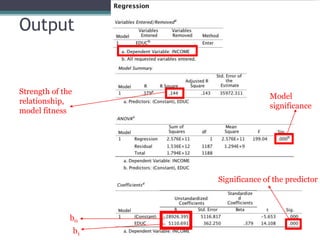

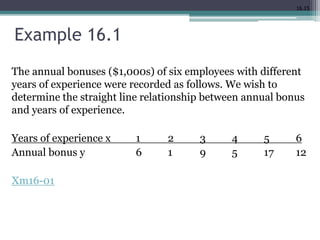

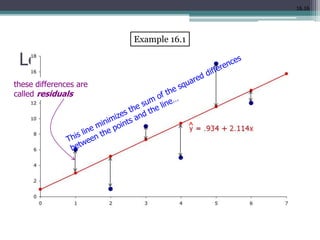

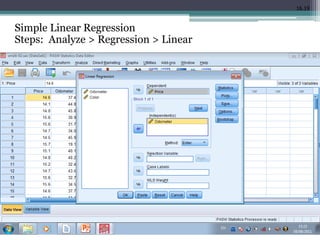

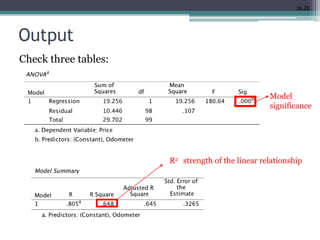

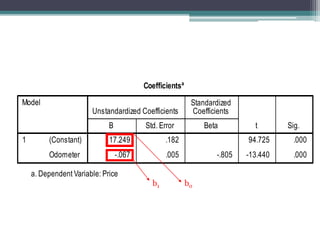

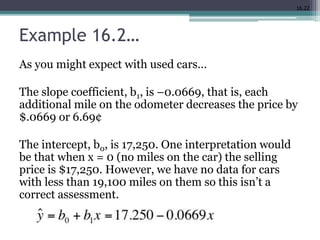

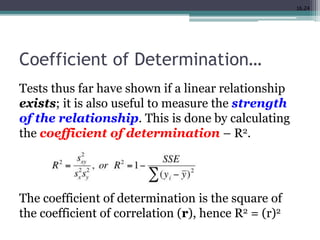

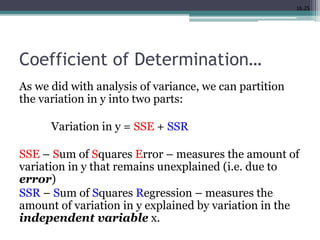

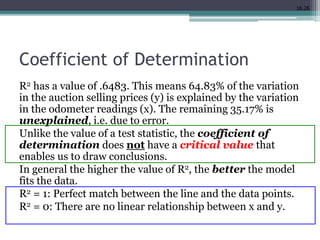

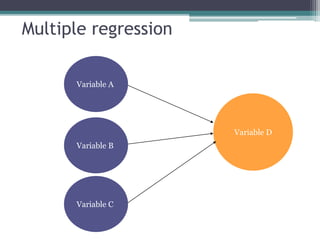

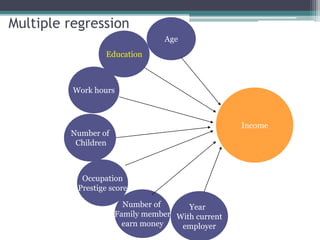

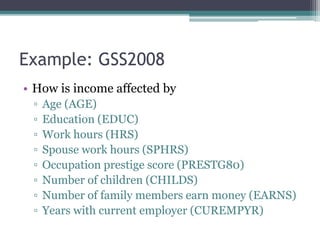

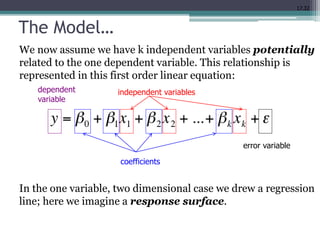

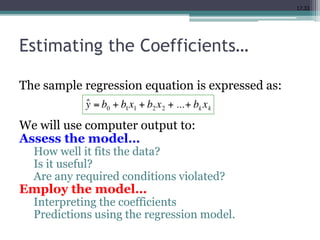

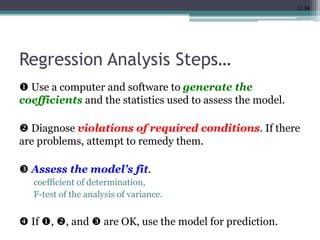

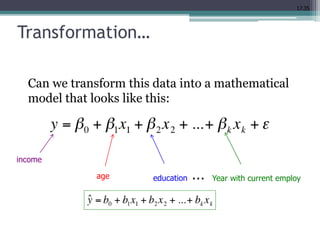

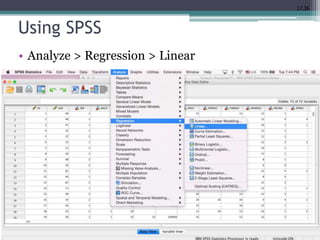

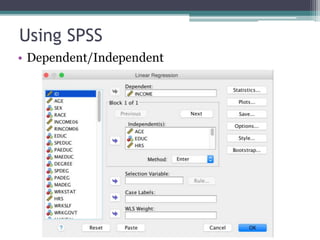

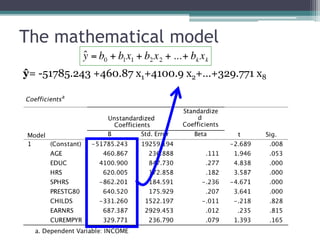

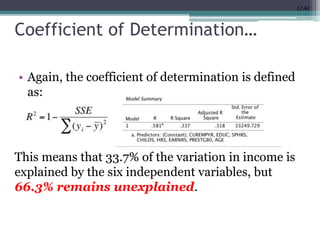

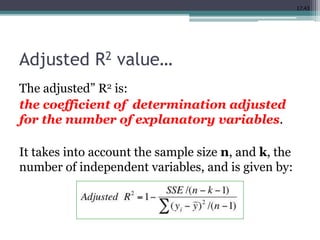

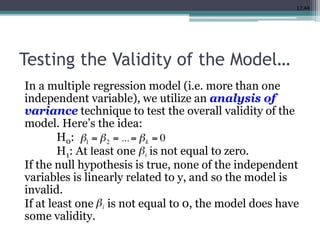

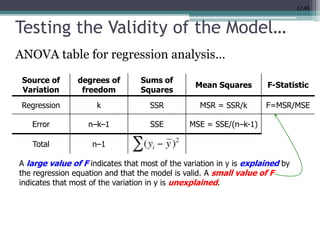

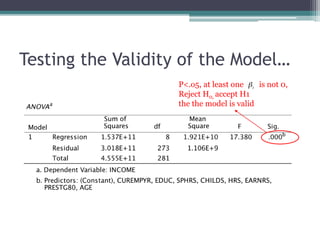

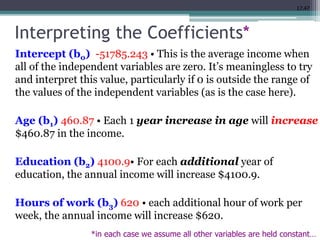

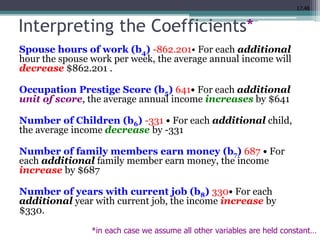

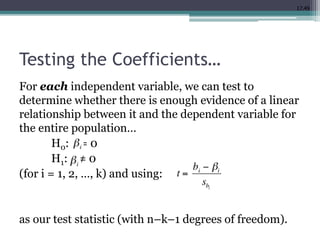

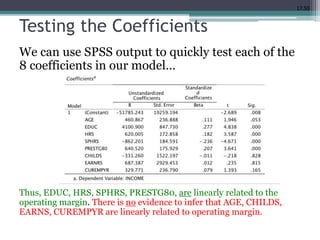

This document covers quantitative research methods: correlation, simple linear regression, and multiple linear regression. It shows how to run a simple linear regression in SPSS to analyze the relationship between two variables and to predict the dependent variable from the independent variable. It then extends the discussion to multiple linear regression, using SPSS to analyze the relationship between several independent variables and one dependent variable. It also covers key steps in assessing the model, such as the coefficient of determination and the ANOVA F-test.
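
The slides work in SPSS, but the quantities named above can be sketched by hand to show what the software reports. The following is a minimal illustration in plain Python, not the document's own procedure. The function name `simple_linear_regression` and the five data points are made up for the example. It fits a least-squares line with one predictor, then computes the coefficient of determination (R²) and the ANOVA F statistic from the sums of squares.

```python
def simple_linear_regression(x, y):
    """Fit y = intercept + slope * x by ordinary least squares.

    Hypothetical helper for illustration; returns the fitted
    coefficients plus R^2 and the ANOVA F statistic.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n

    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    predicted = [intercept + slope * xi for xi in x]

    # Partition total variation into explained and residual parts.
    ss_total = sum((yi - mean_y) ** 2 for yi in y)
    ss_residual = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted))
    ss_regression = ss_total - ss_residual

    # R^2: share of the variation in y explained by the model.
    r_squared = ss_regression / ss_total

    # F: mean square regression (1 df) over mean square residual (n - 2 df).
    f_statistic = (ss_regression / 1) / (ss_residual / (n - 2))

    return {
        "slope": slope,
        "intercept": intercept,
        "r_squared": r_squared,
        "f_statistic": f_statistic,
    }


# Made-up sample data, used only to exercise the function.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
result = simple_linear_regression(x, y)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

With a single predictor, the F statistic has 1 and n - 2 degrees of freedom. Multiple regression follows the same partition of variation, with k predictors giving k and n - k - 1 degrees of freedom.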