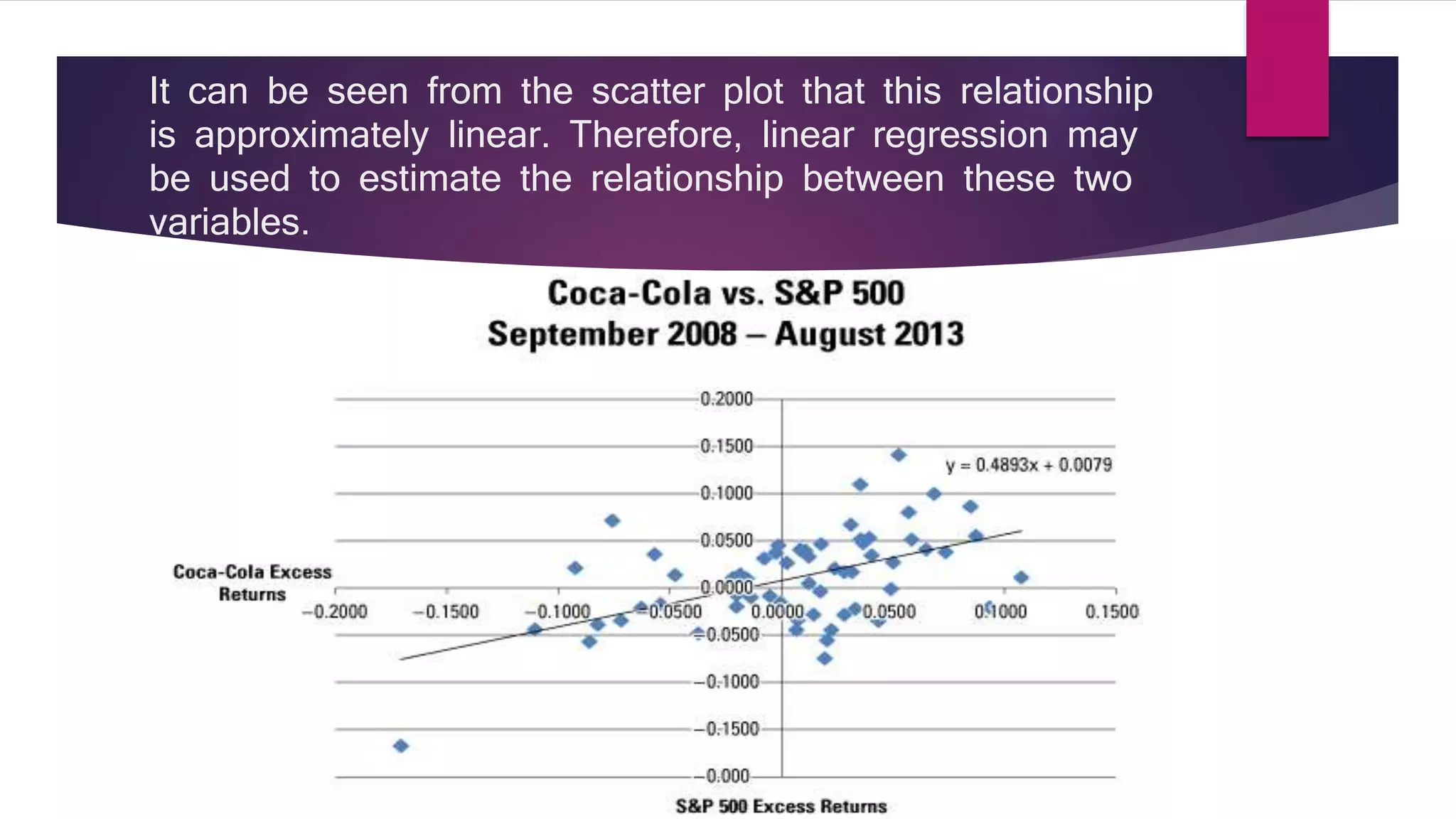

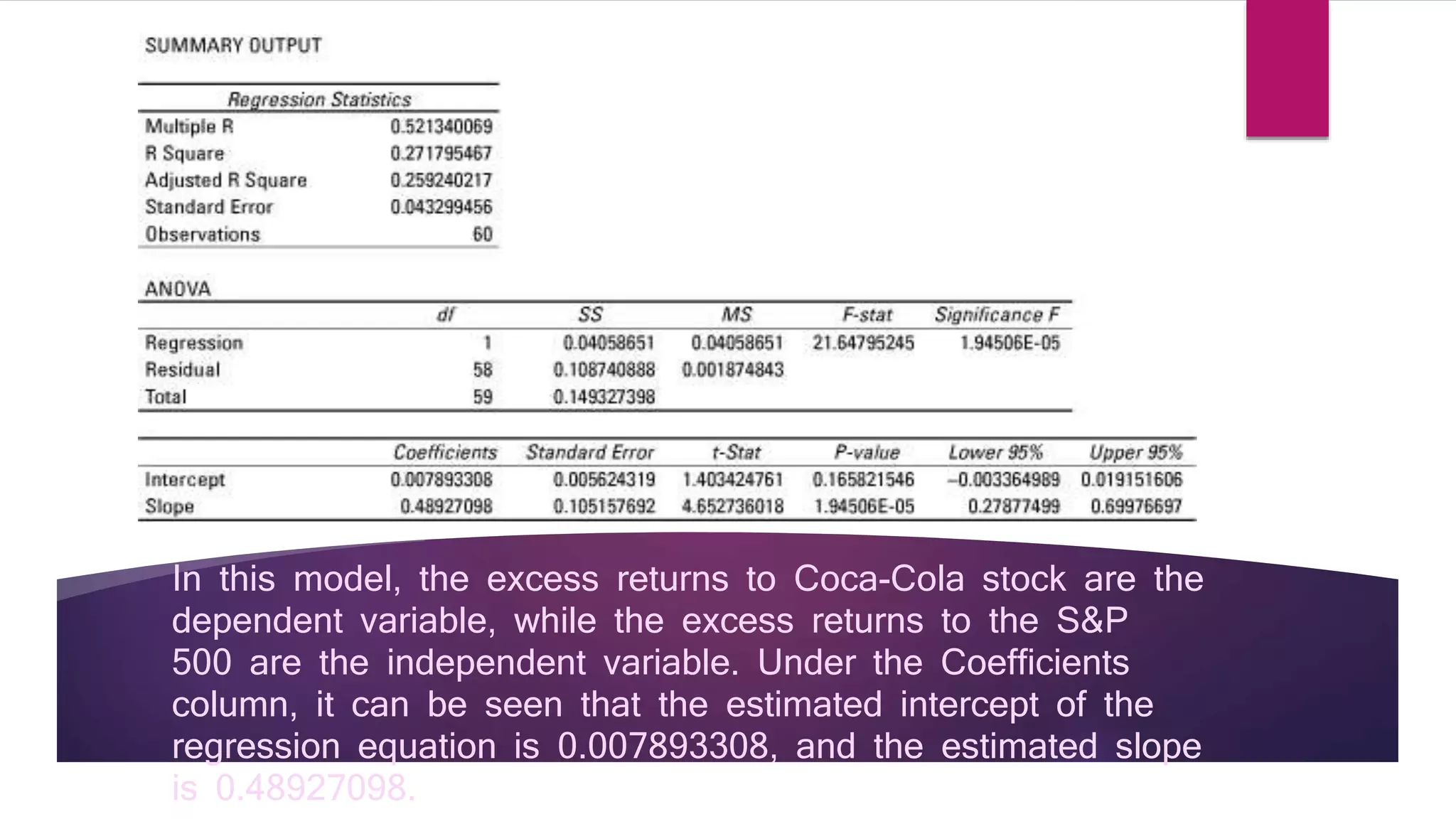

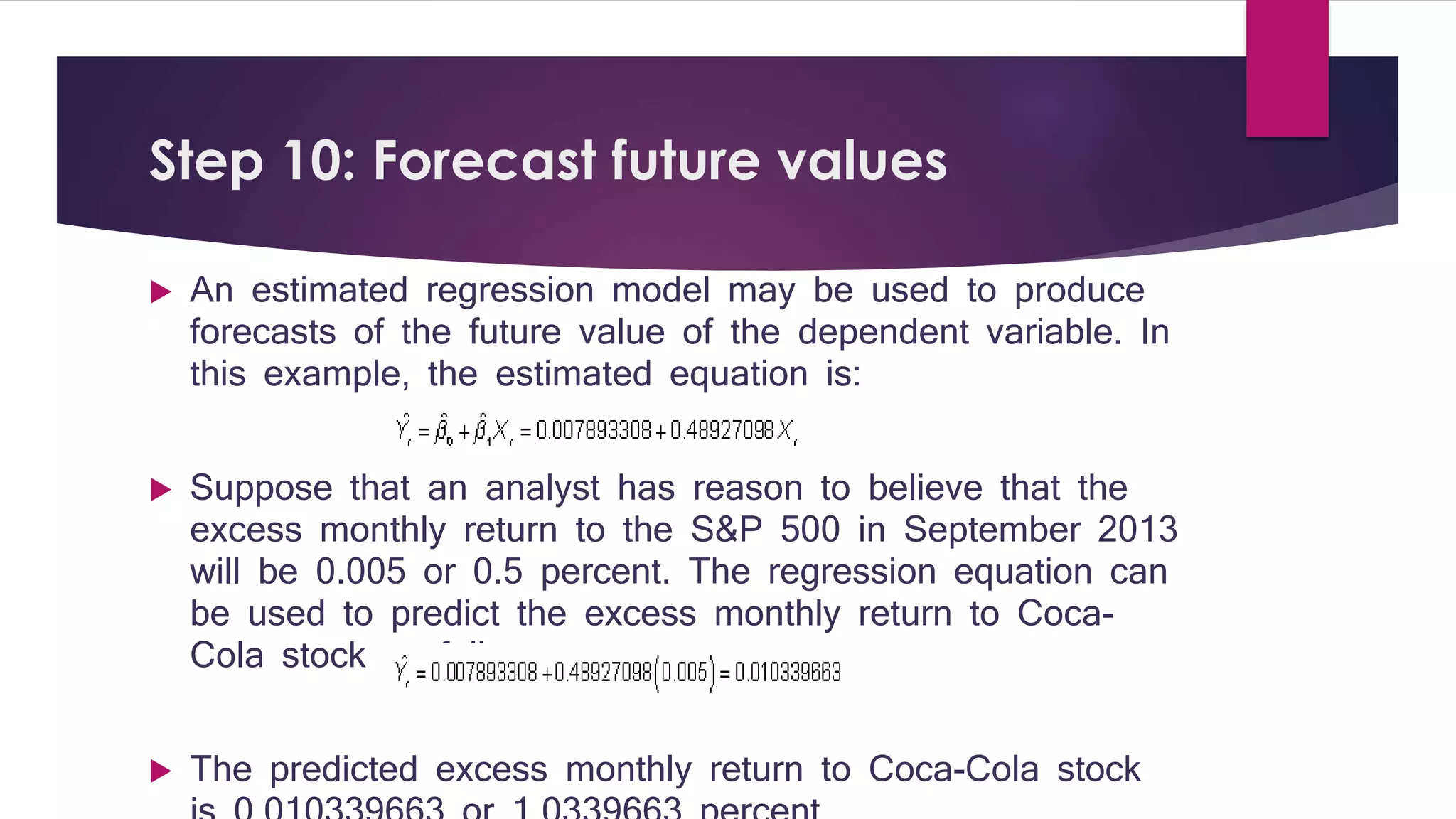

This document summarizes regression analysis in nine steps:

1. Specify the dependent and independent variables.
2. Check for linearity with scatter plots.
3. Transform variables if the relationship is nonlinear.
4. Estimate the regression model.
5. Assess the model fit with R².
6. Perform a joint hypothesis test of the coefficients.
7. Test the individual coefficients.
8. Check for violations of assumptions such as autocorrelation and heteroscedasticity.
9. Interpret the intercept and slope coefficients.

Regression analysis is used to determine relationships between variables and to estimate how changes in the independent variables affect the dependent variable.
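The estimation and testing steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method of the summarized document: the data are synthetic, the function name `ols_summary` and its return keys are hypothetical, and only NumPy is used. It fits the model by ordinary least squares, then computes R², the joint F statistic, the per-coefficient t statistics, and the Durbin-Watson statistic as one common autocorrelation check. Turning the F and t statistics into p-values would need a statistics library and is left out here.

```python
import numpy as np


def ols_summary(x, y):
    """Fit y = b0 + b1*x (+ ...) by ordinary least squares and return diagnostics.

    Hypothetical helper for illustration; x may be 1-D or a 2-D array of regressors.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)

    # Design matrix with a leading column of ones for the intercept.
    X = np.column_stack([np.ones(n), x])
    k = X.shape[1] - 1  # number of slope coefficients

    # Estimate the coefficients.
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta

    # Model fit: R^2 = 1 - SS_res / SS_tot.
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot

    # Joint test: F statistic for "all slopes are zero".
    dof = n - k - 1
    f_stat = (r2 / k) / ((1.0 - r2) / dof)

    # Individual tests: t statistic = coefficient / standard error.
    sigma2 = ss_res / dof
    cov = sigma2 * np.linalg.inv(X.T @ X)
    t_stats = beta / np.sqrt(np.diag(cov))

    # Autocorrelation check: Durbin-Watson statistic
    # (values near 2 suggest little first-order autocorrelation).
    dw = float((np.diff(resid) ** 2).sum() / ss_res)

    return {"beta": beta, "r2": r2, "f_stat": f_stat,
            "t_stats": t_stats, "durbin_watson": dw}


# Synthetic data (an assumption for this sketch): true intercept 2, true slope 3.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 50)
y = 2.0 + 3.0 * x + rng.normal(0.0, 1.0, size=x.size)

result = ols_summary(x, y)
print(result)
```

Interpreting the coefficients then reads directly off `result["beta"]`: the first entry is the estimated value of the dependent variable when the independent variable is zero, and the second is the estimated change in the dependent variable per one-unit change in the independent variable. Scatter plots, variable transformations, and a heteroscedasticity check are not covered by this sketch.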