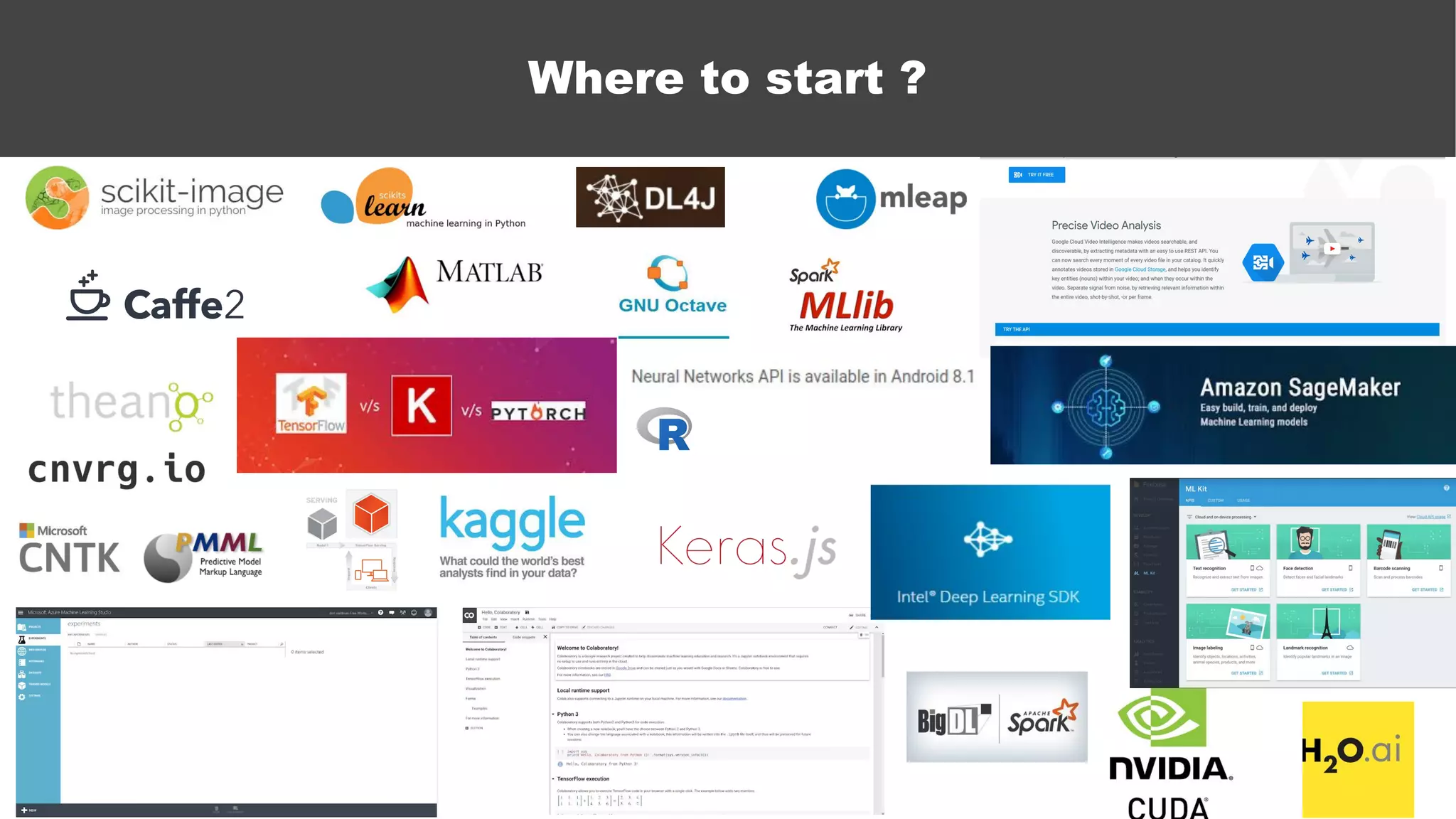

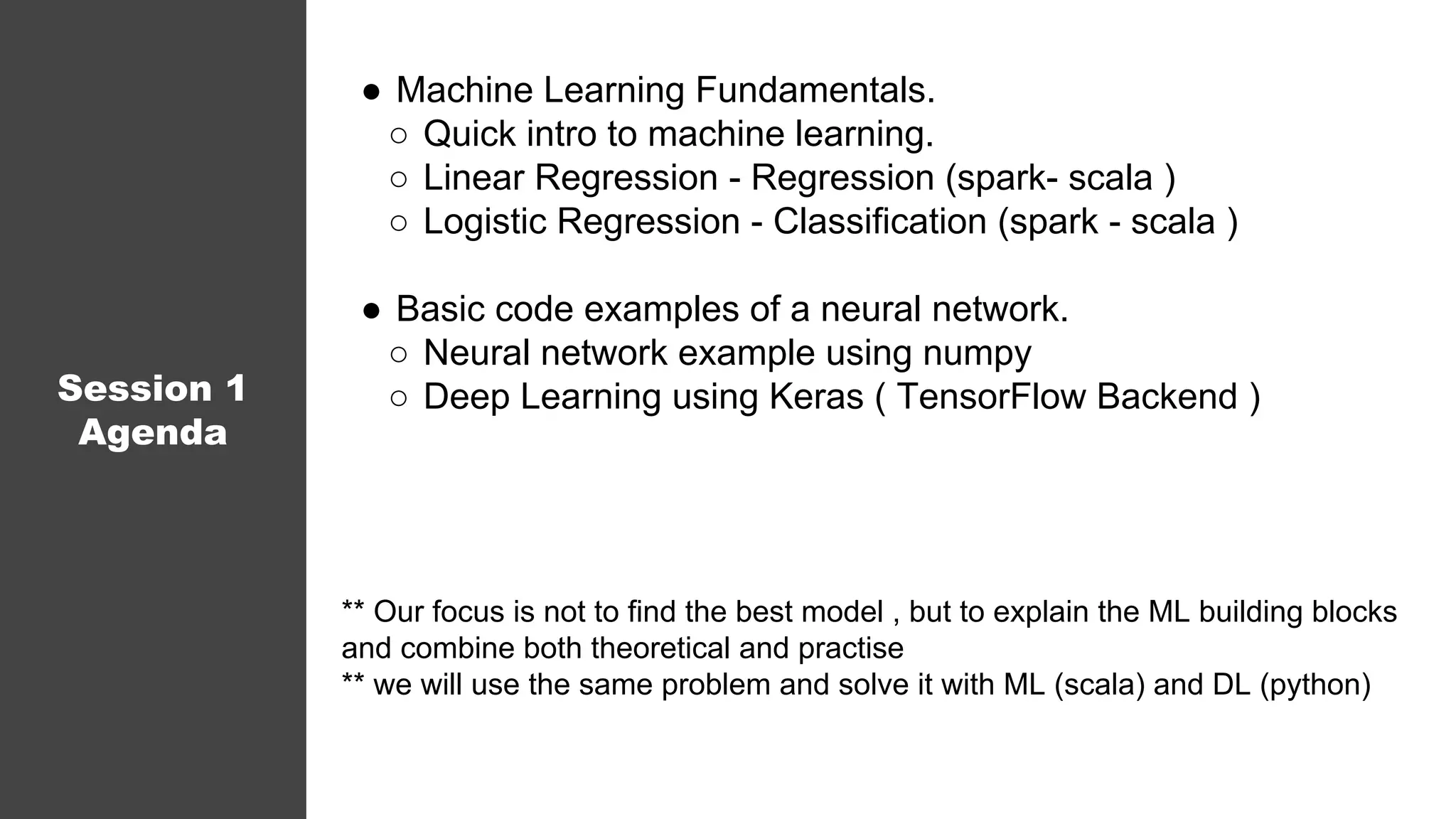

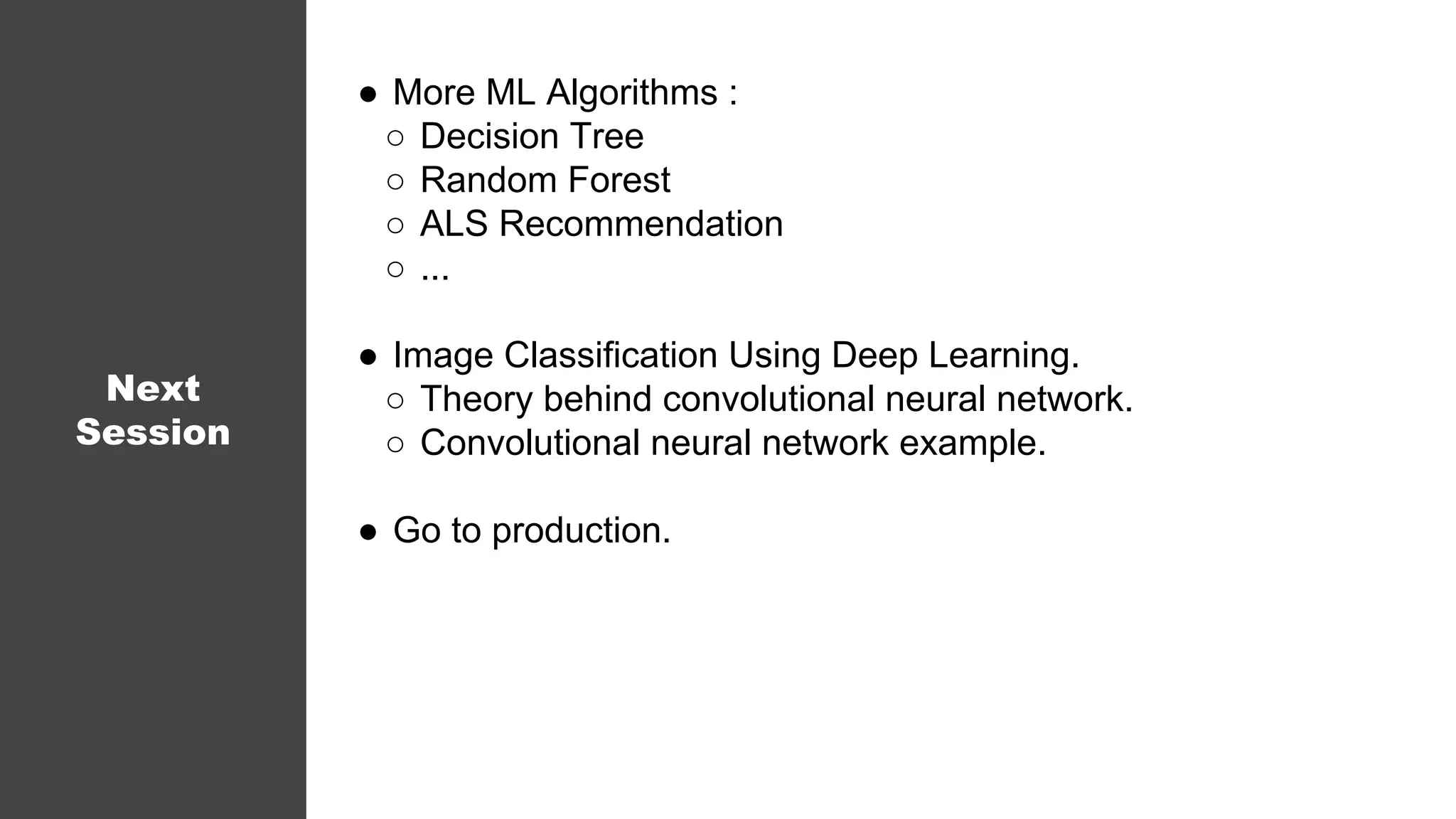

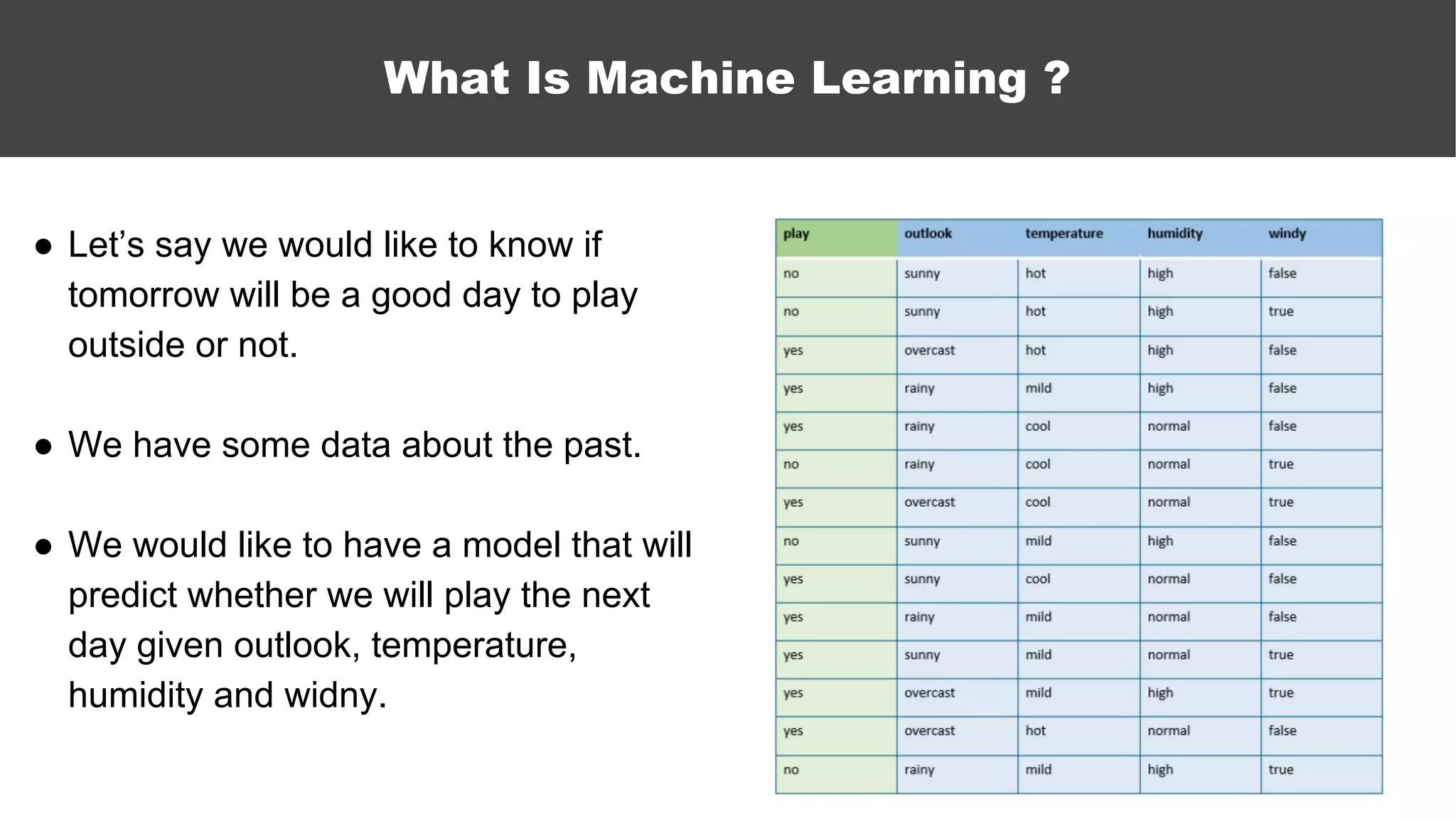

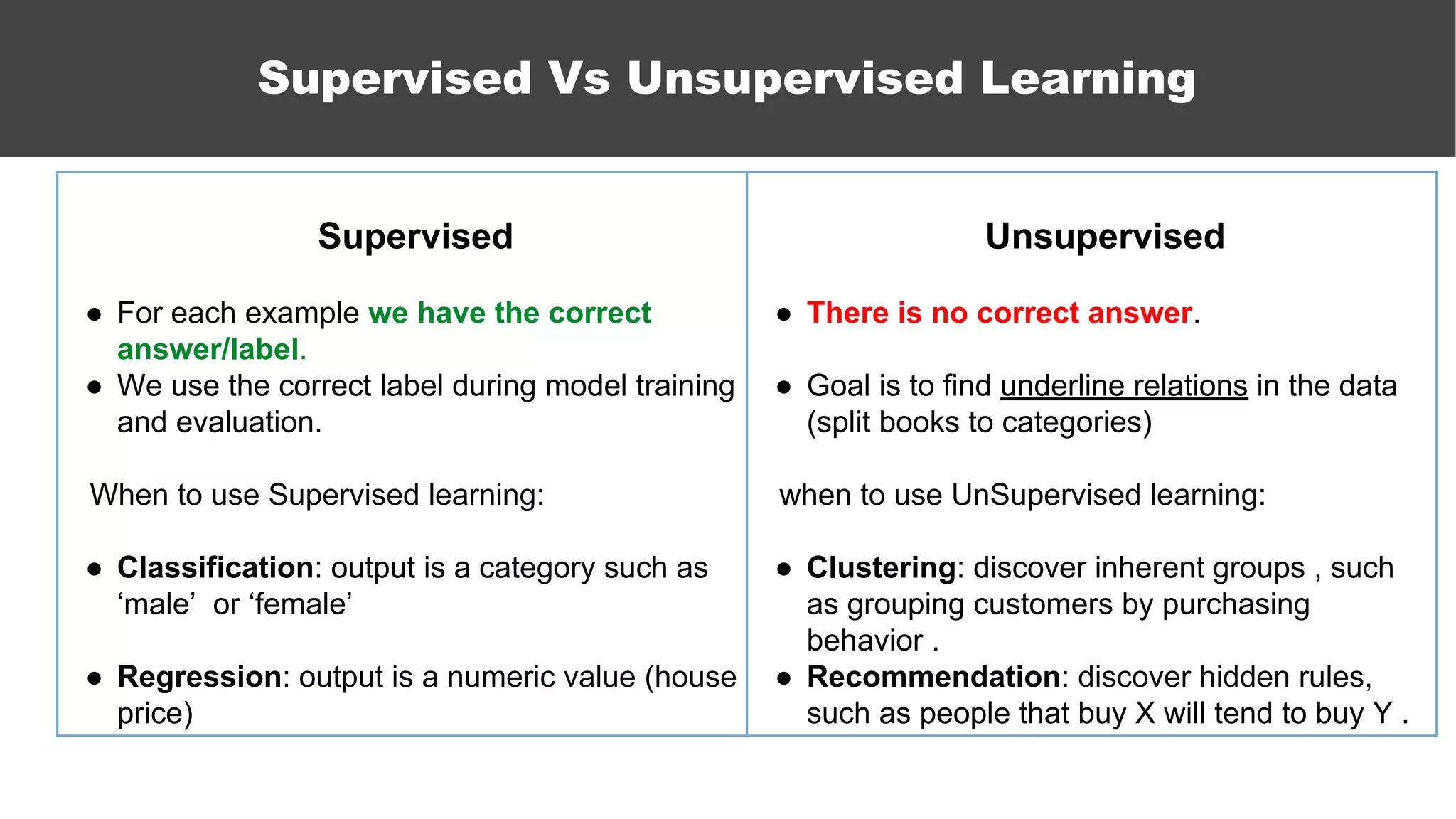

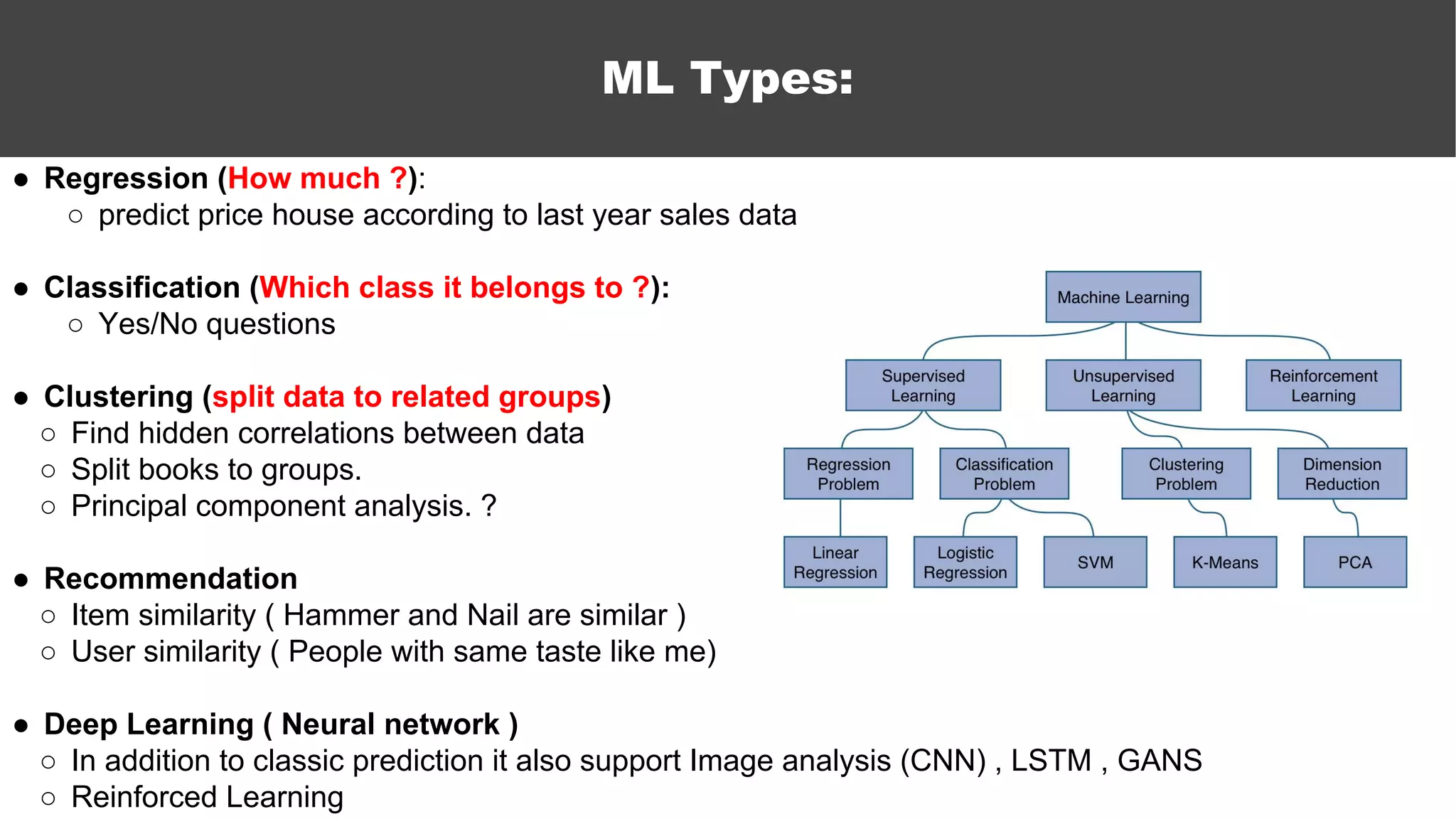

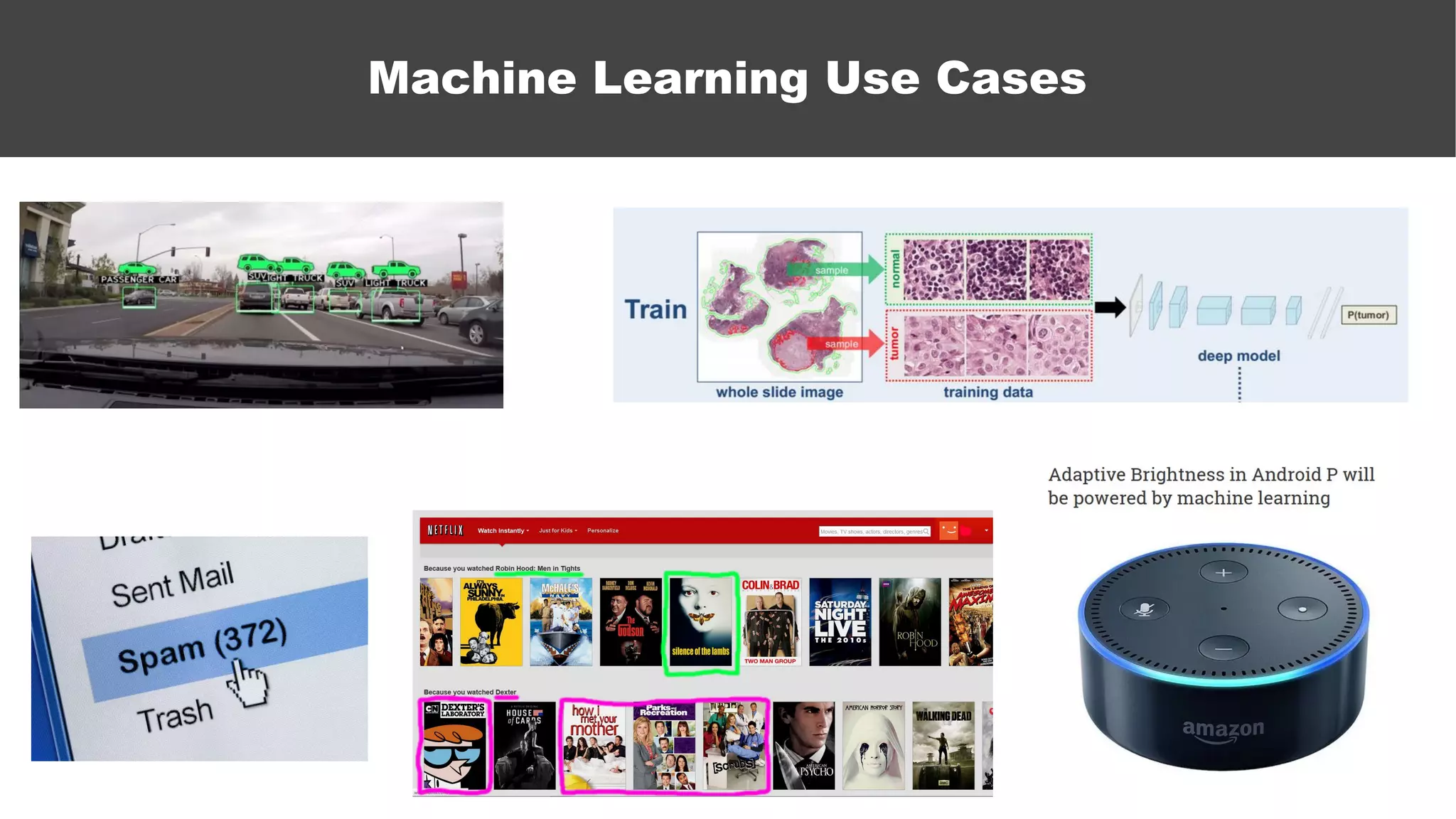

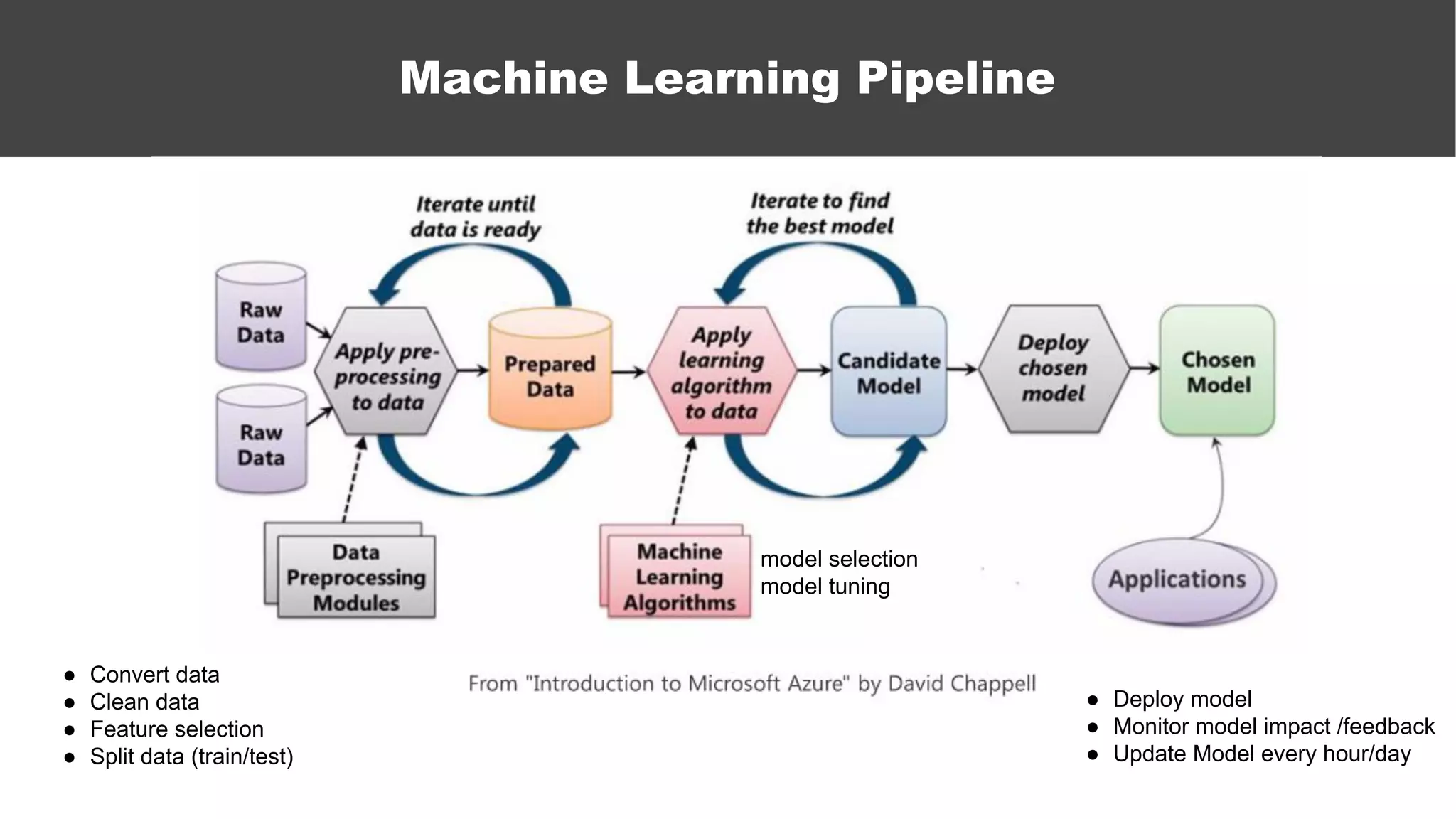

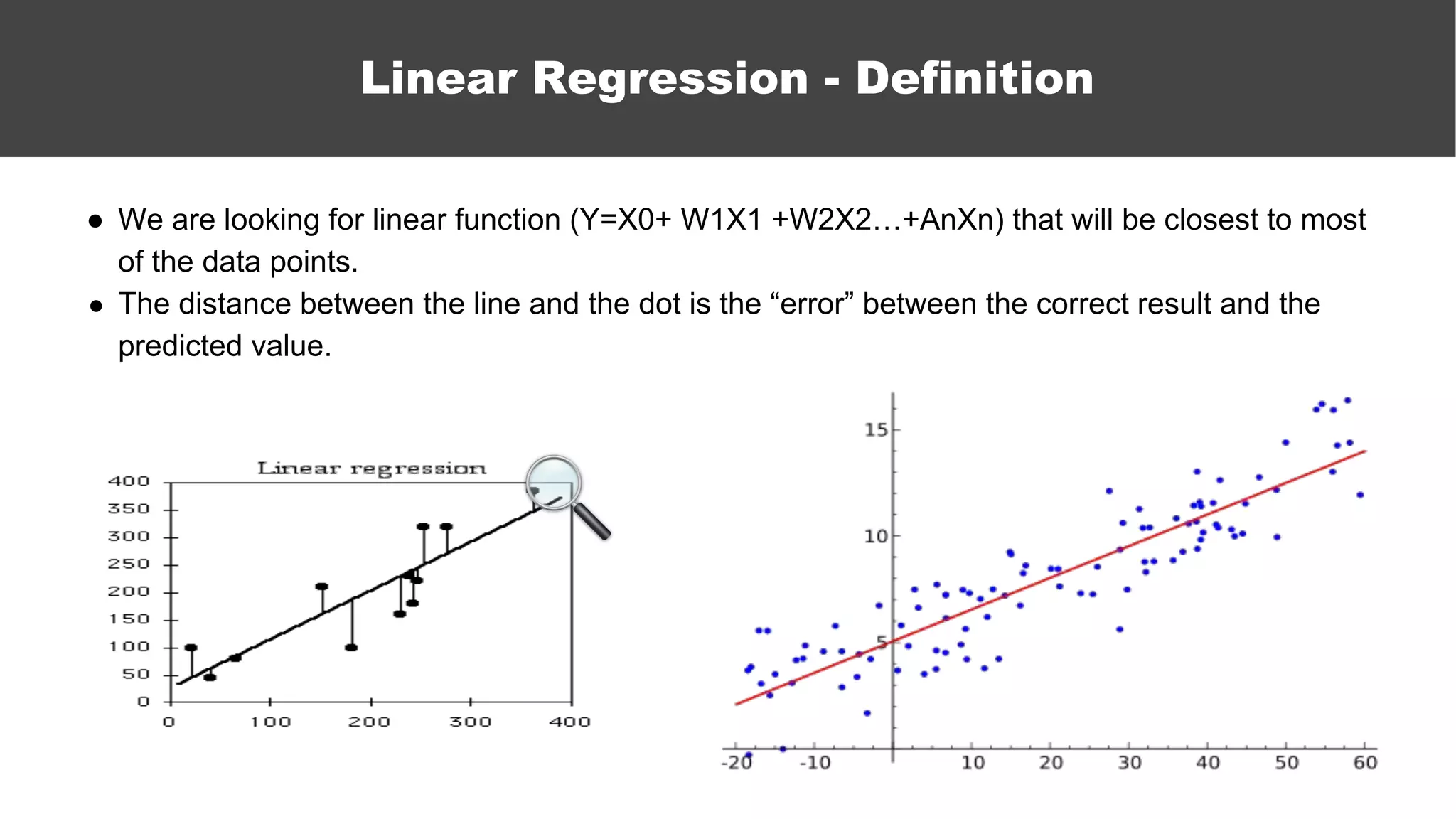

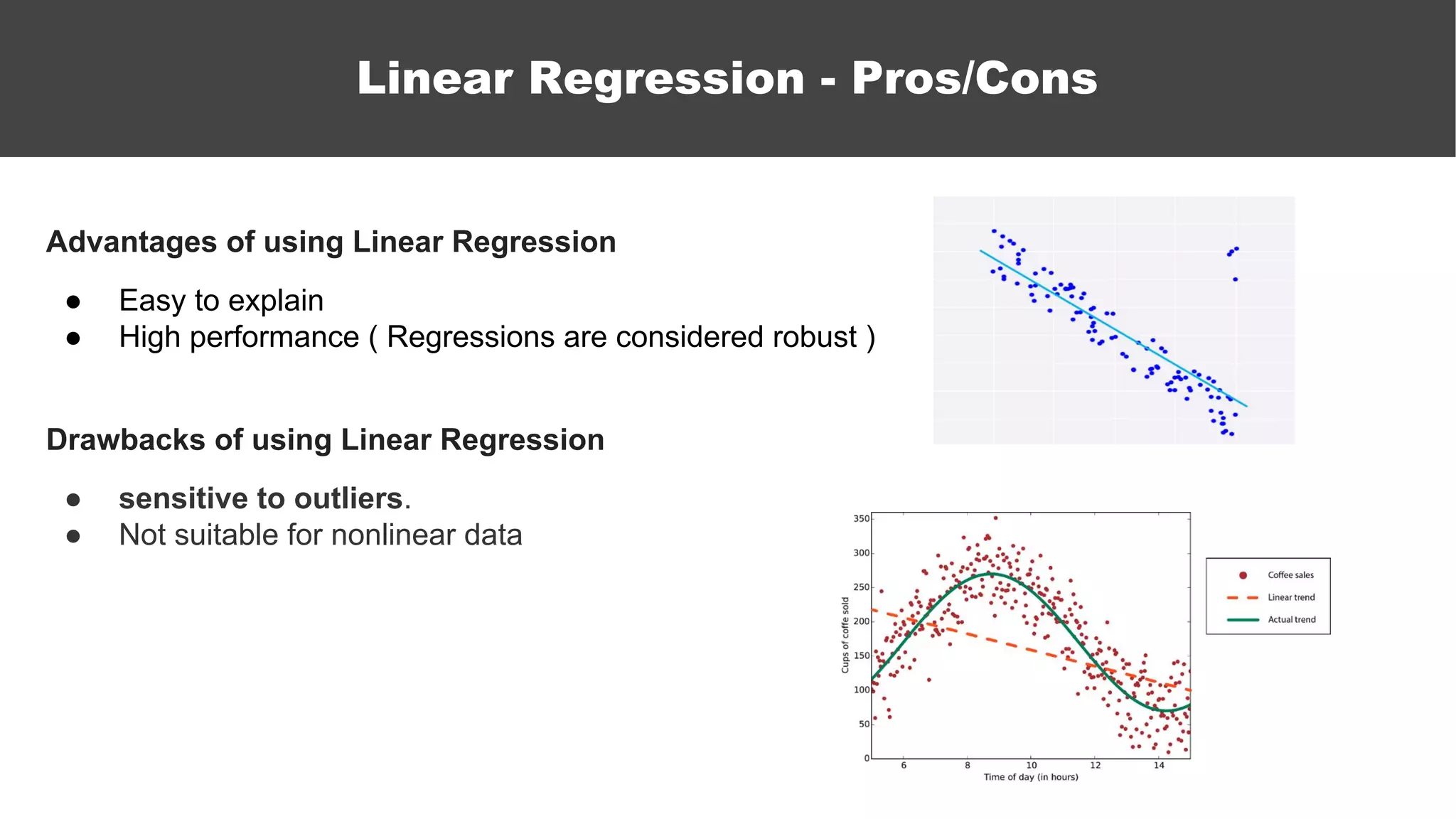

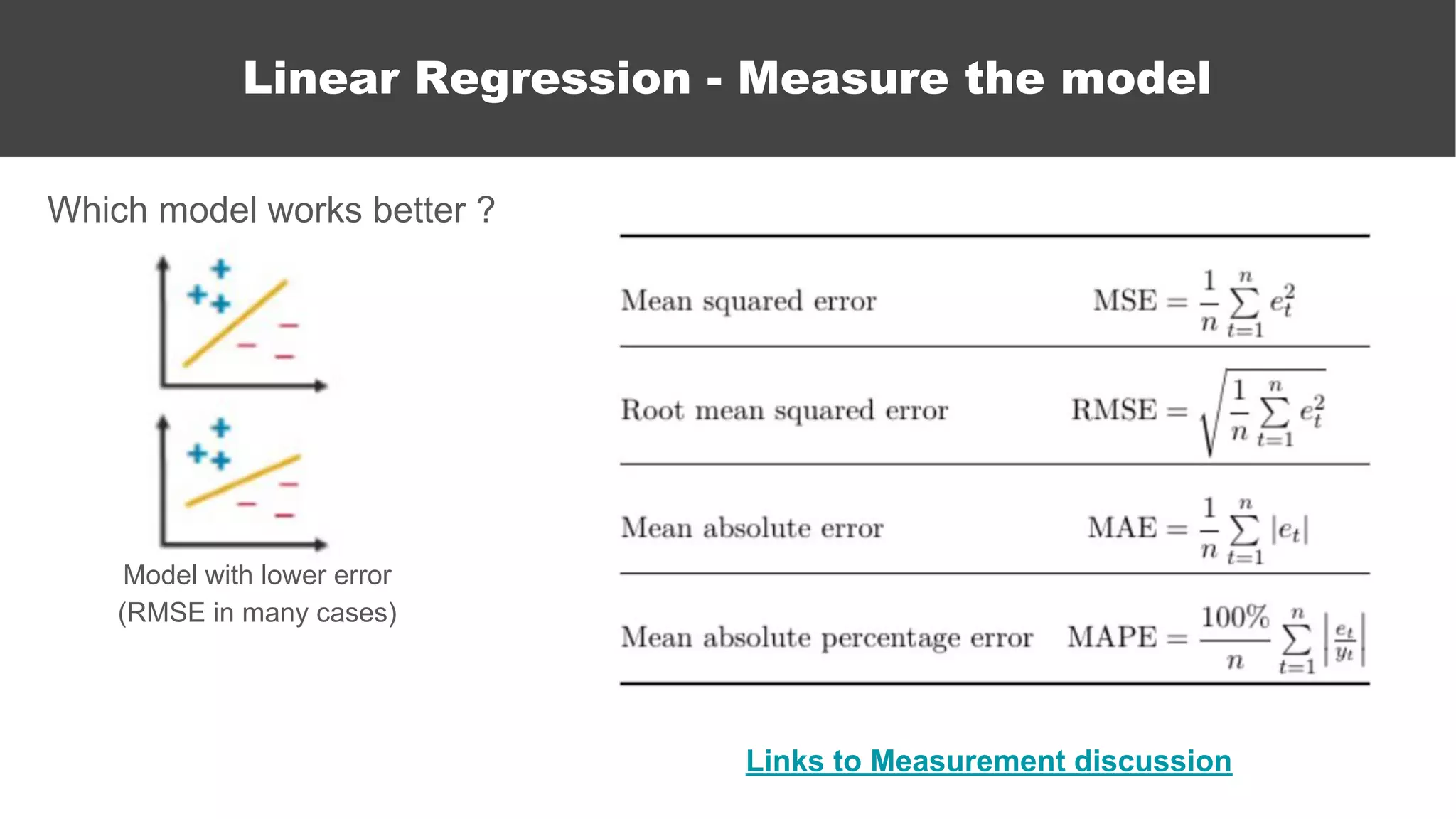

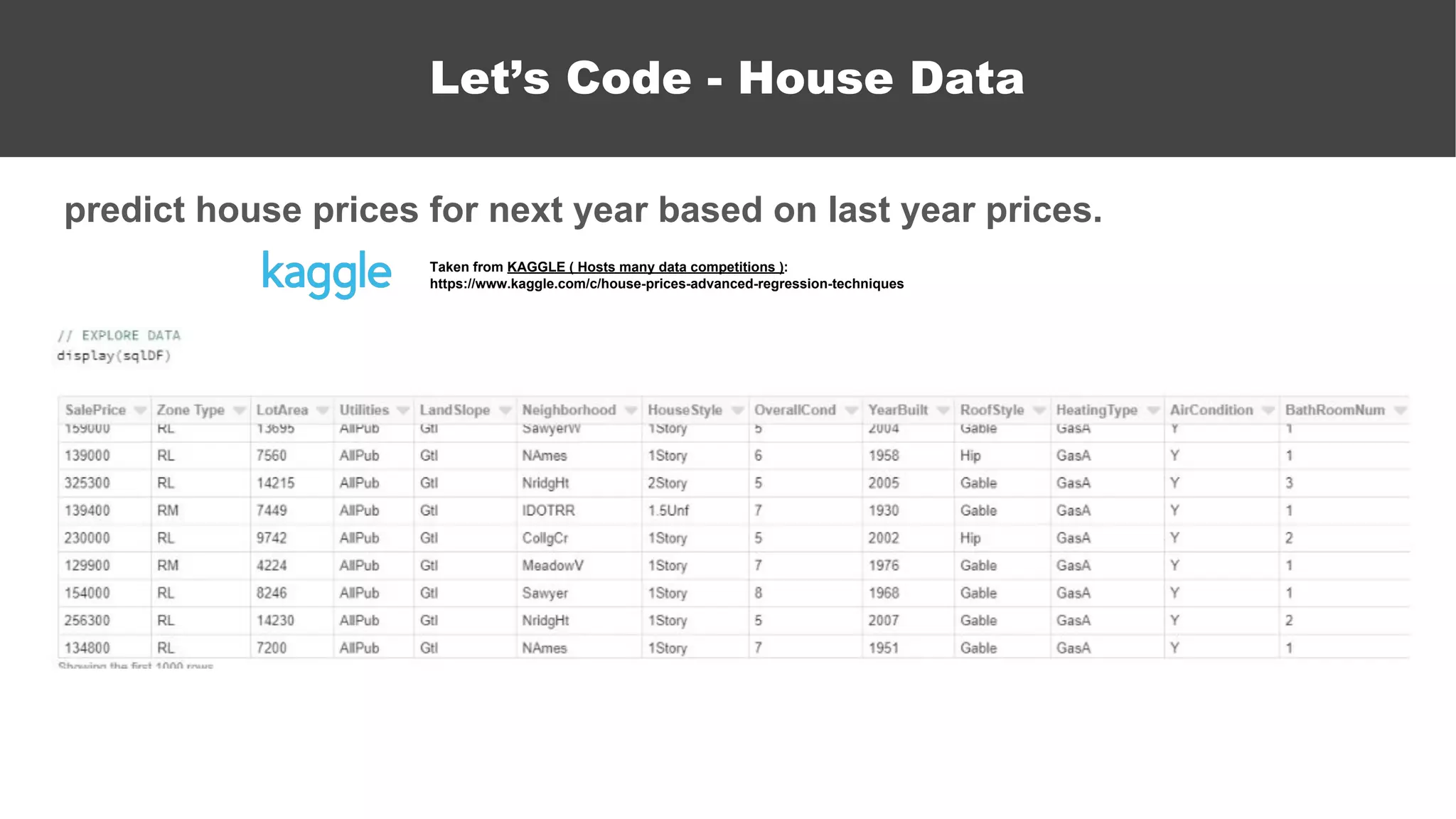

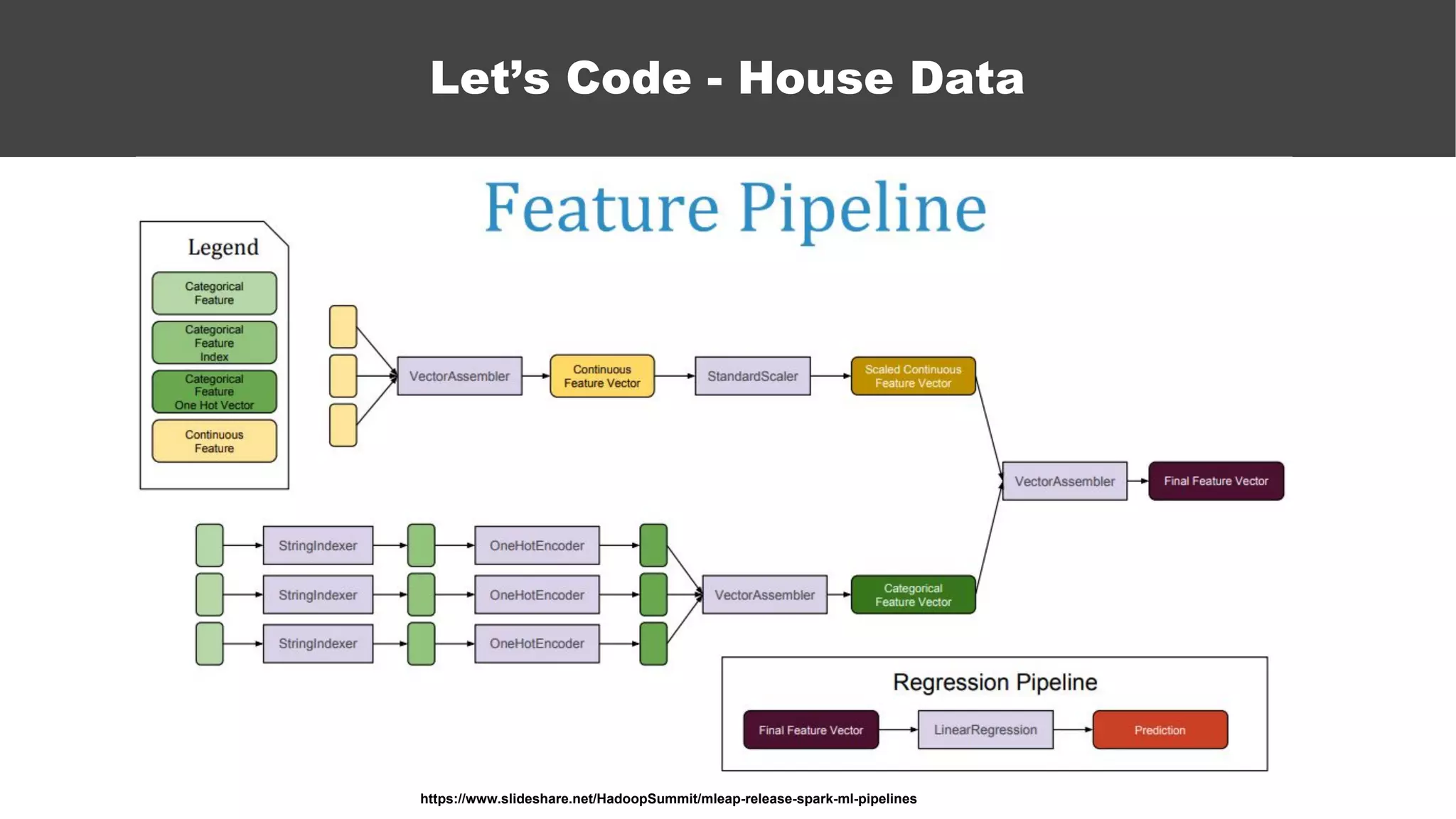

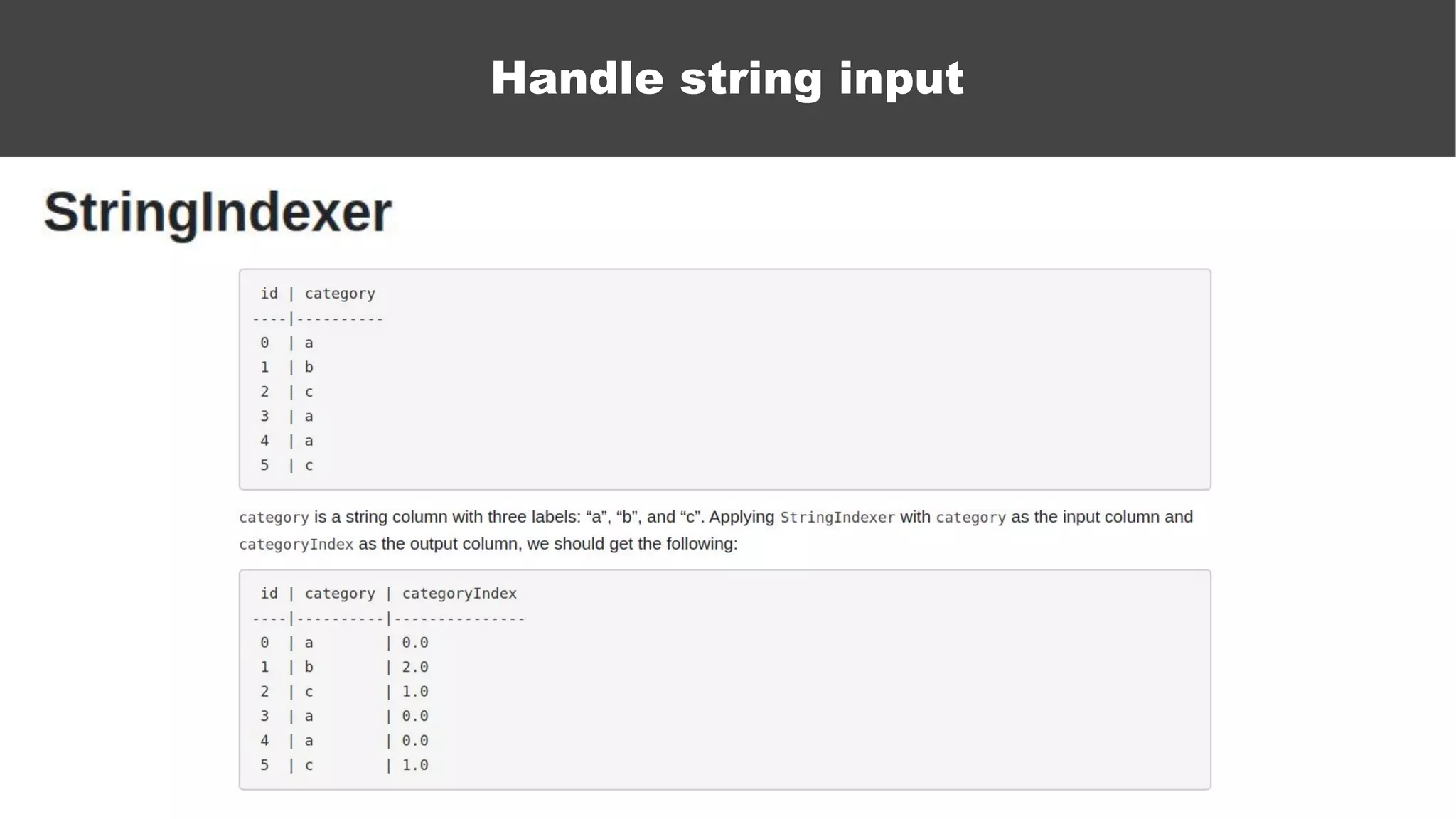

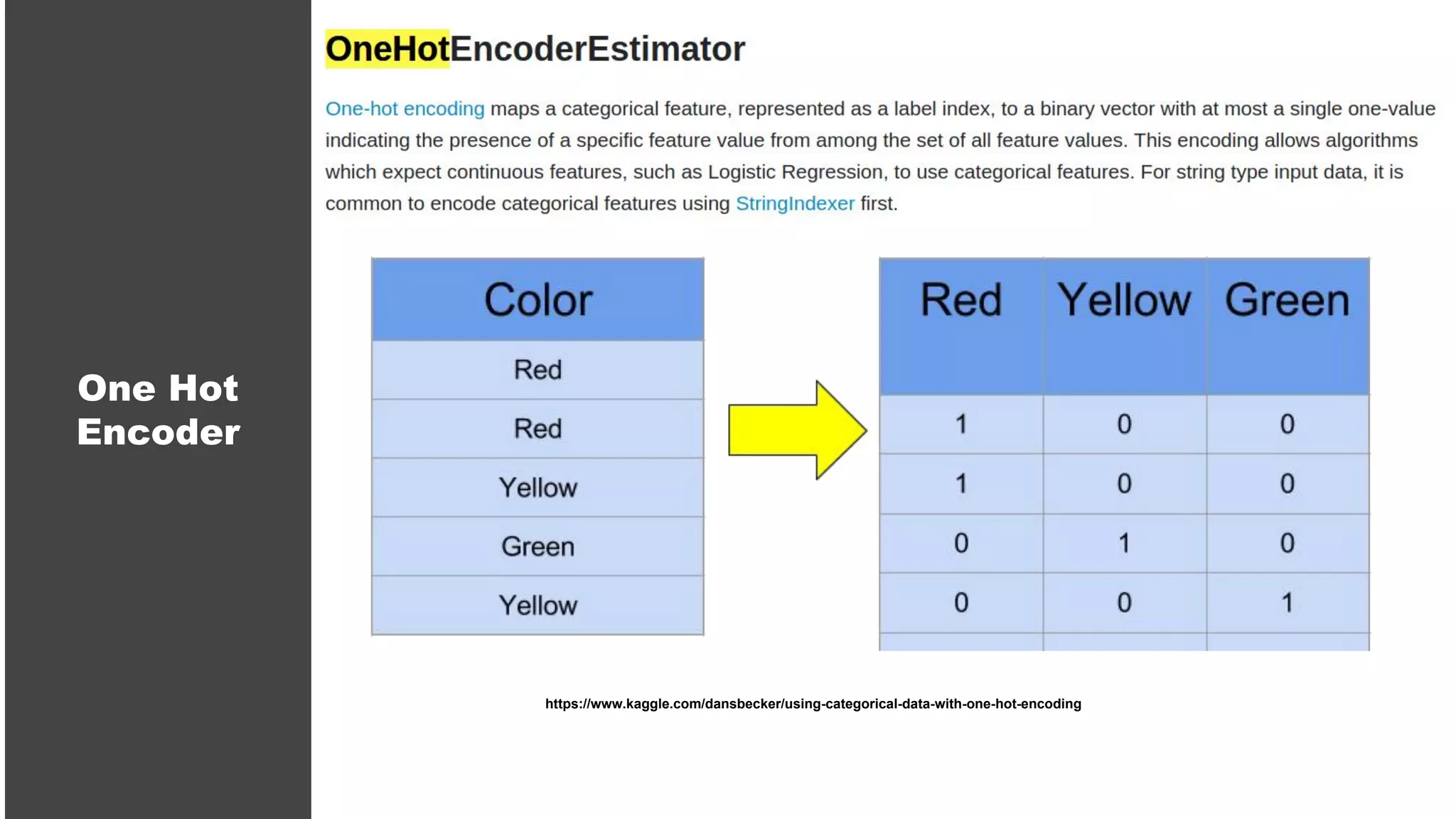

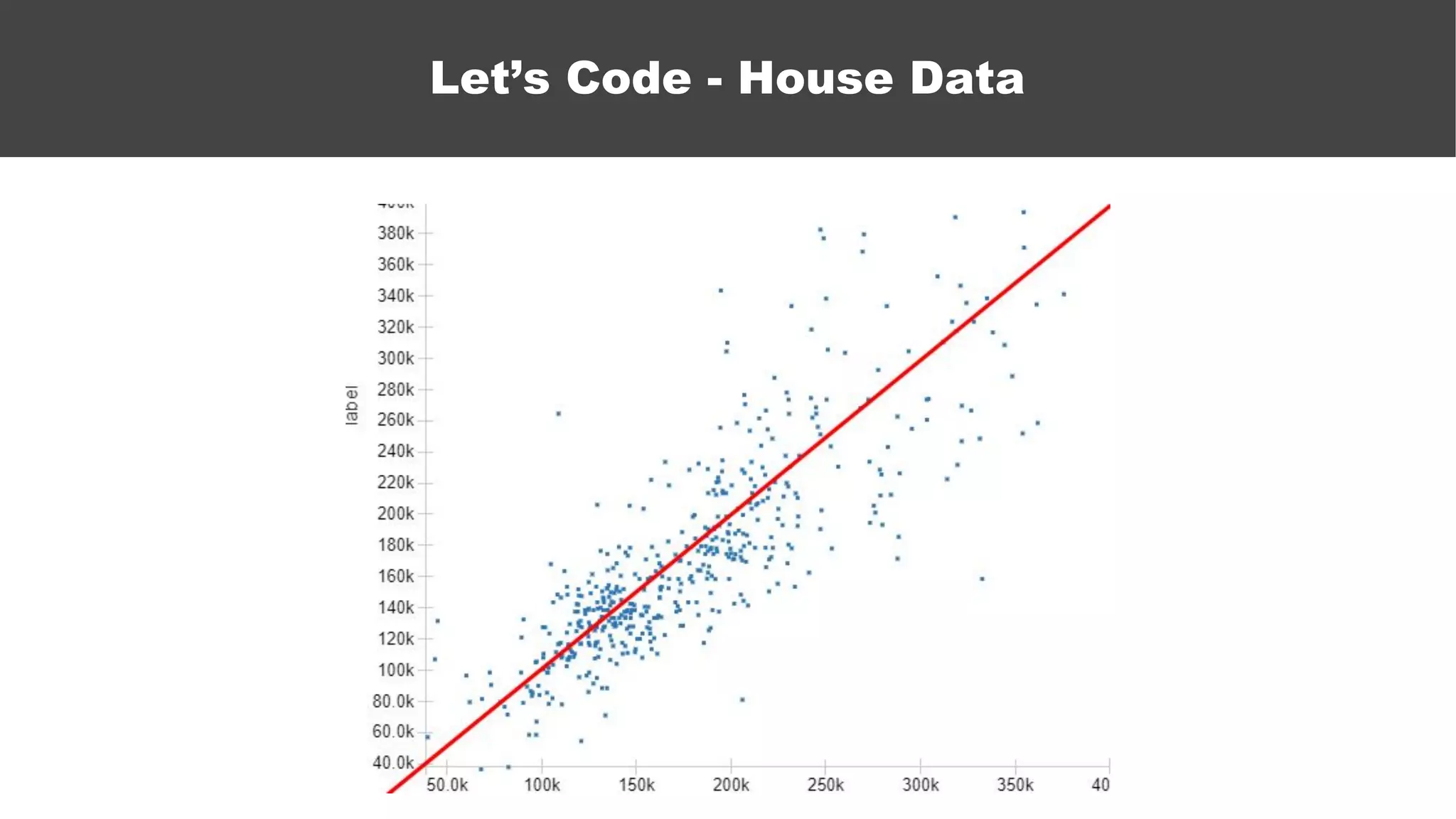

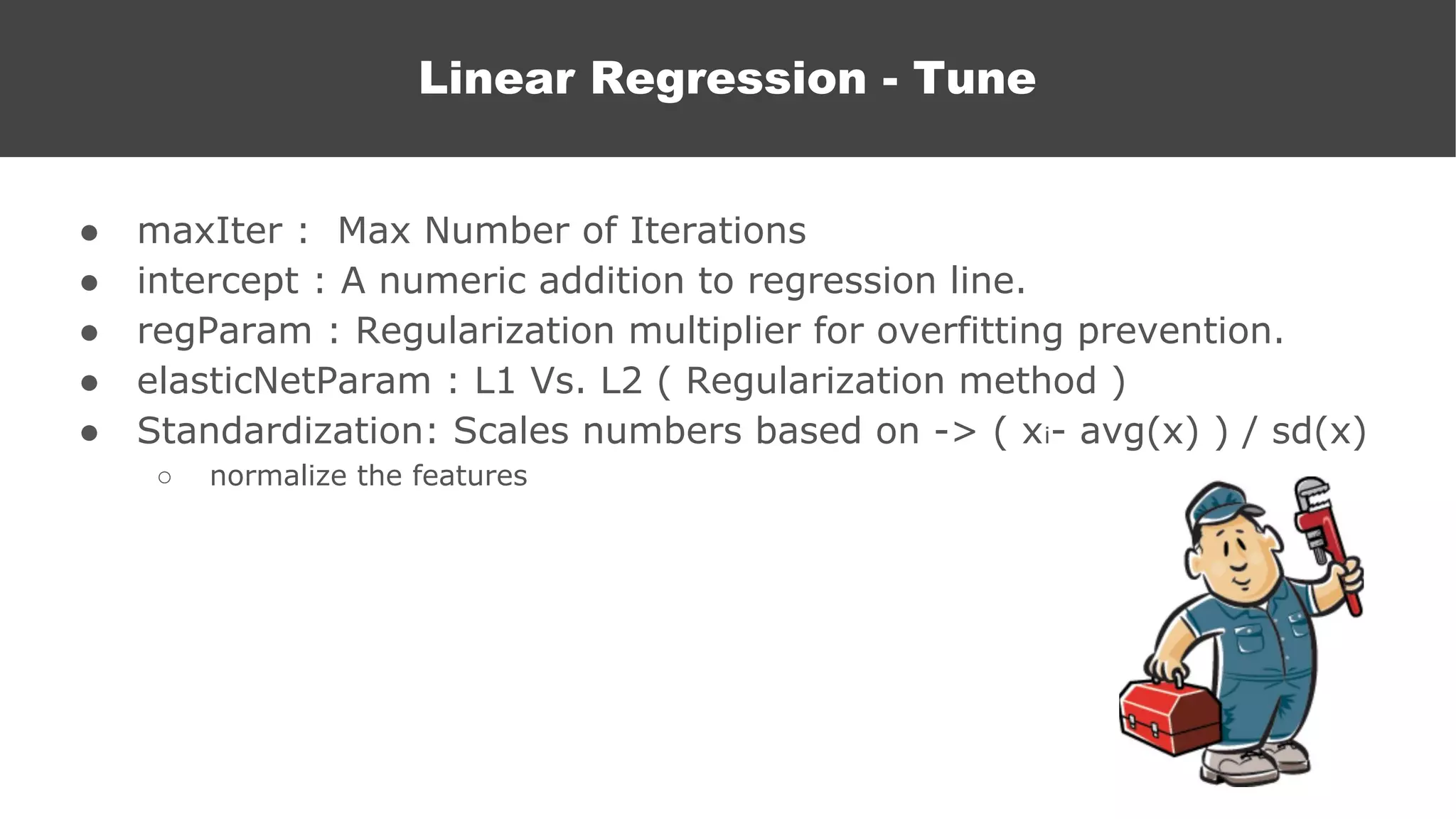

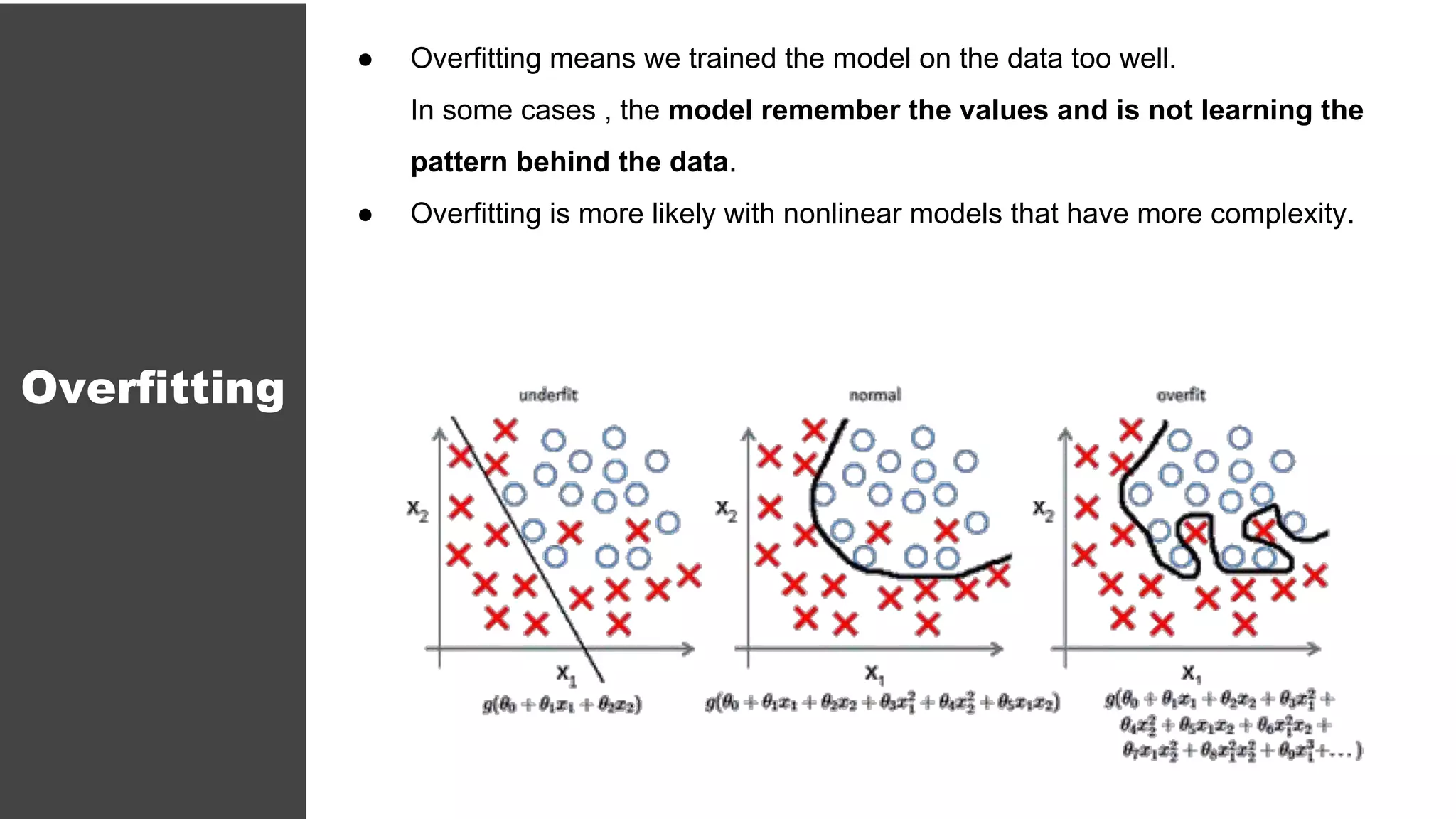

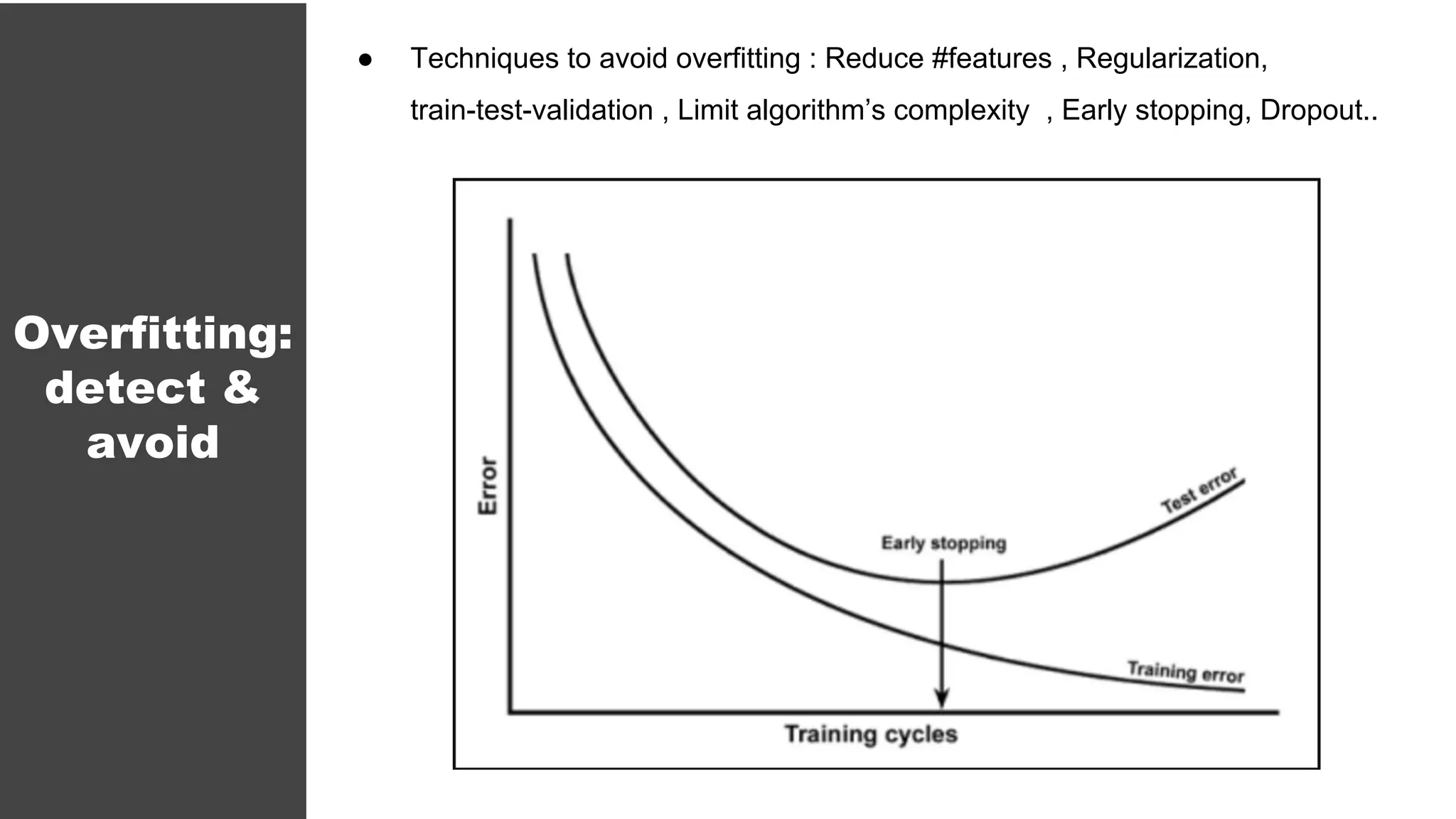

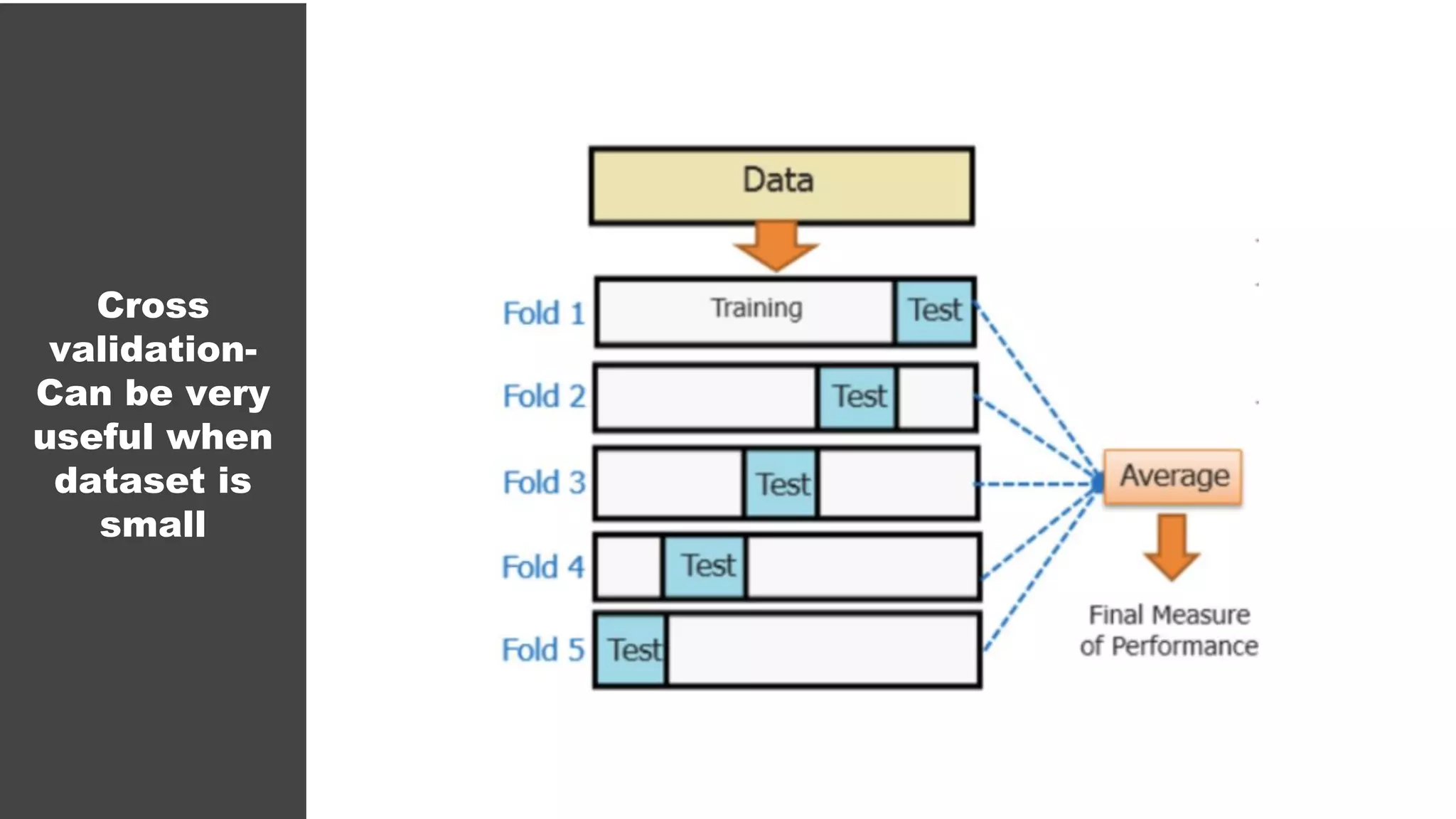

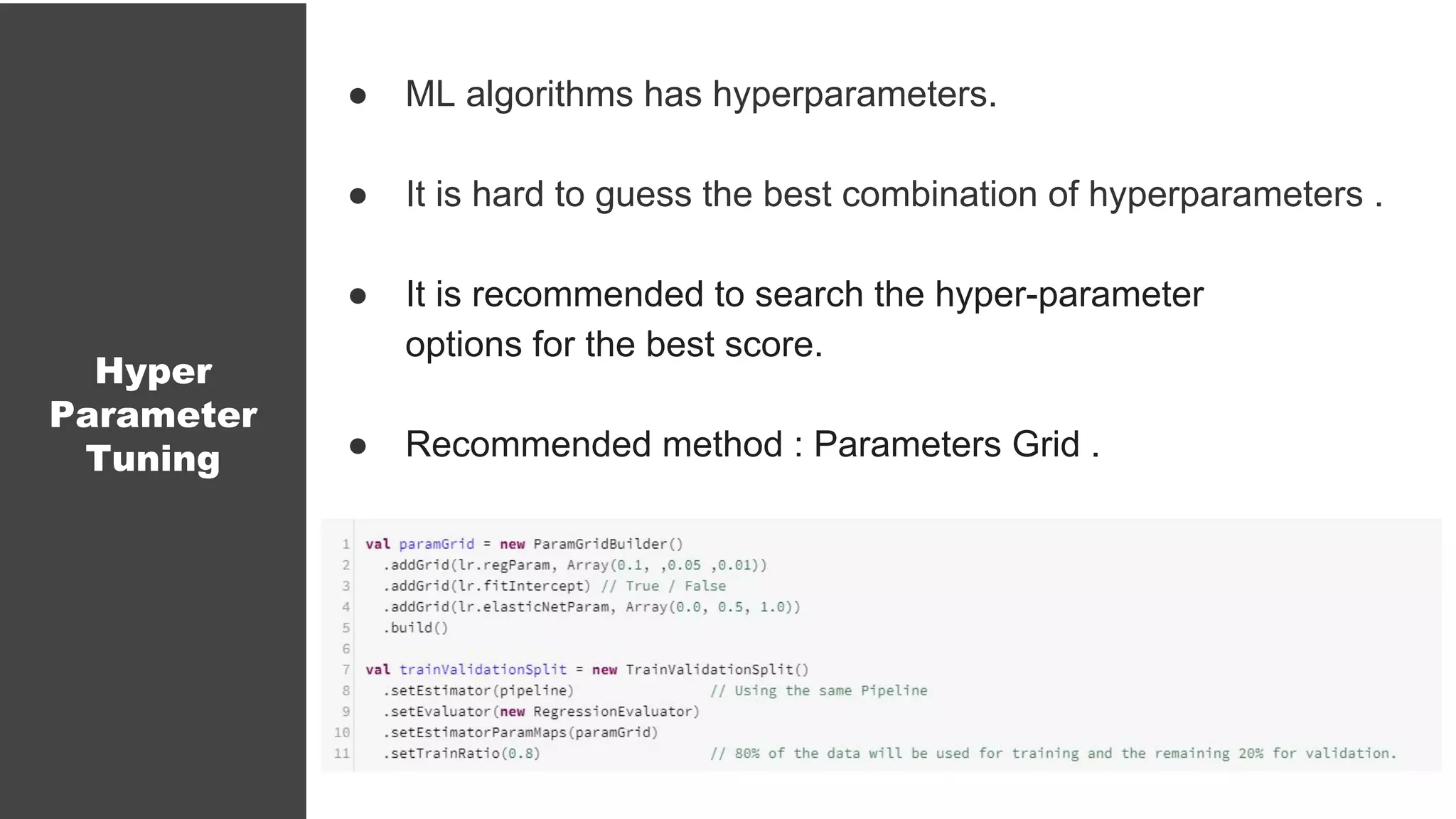

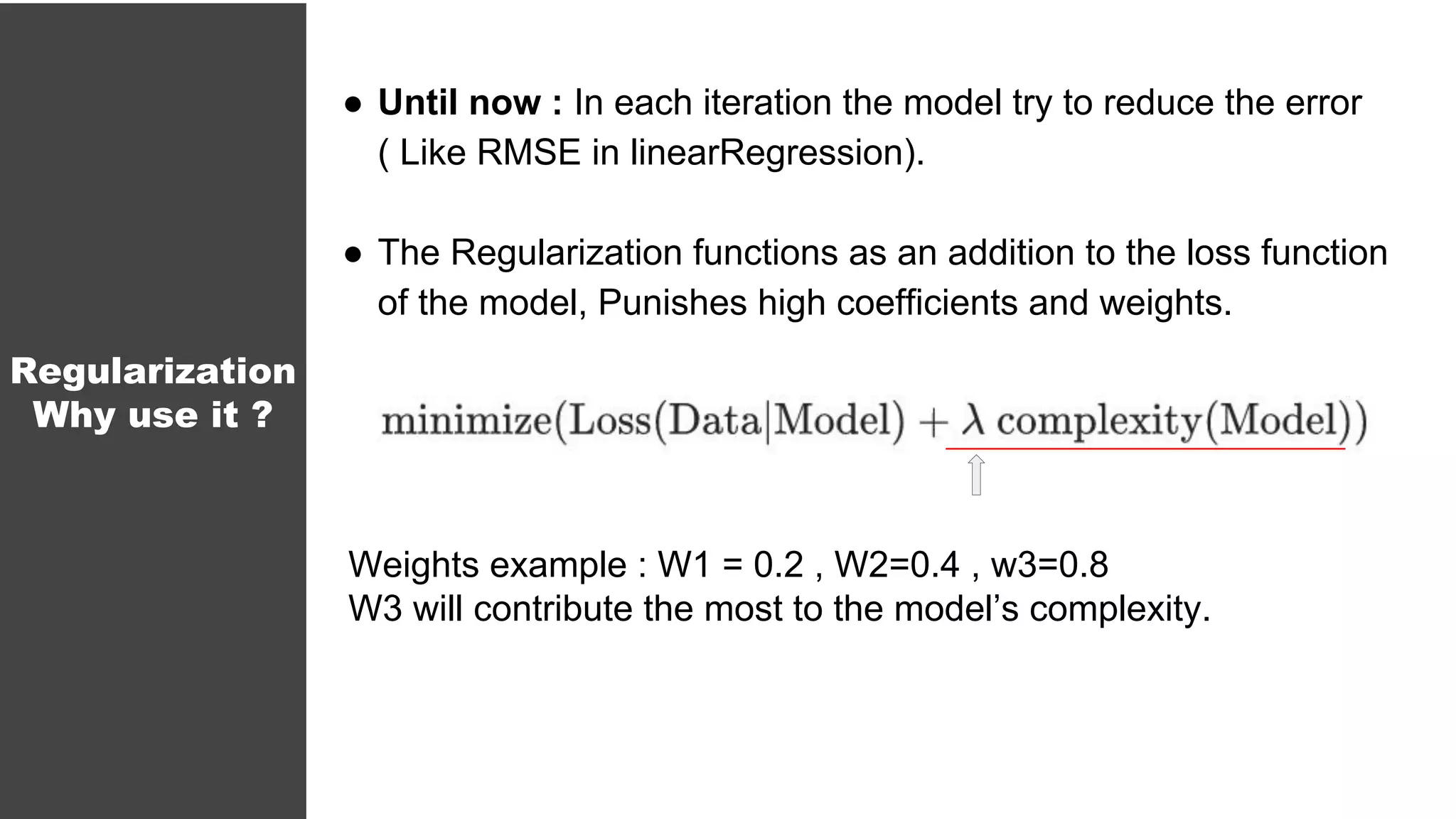

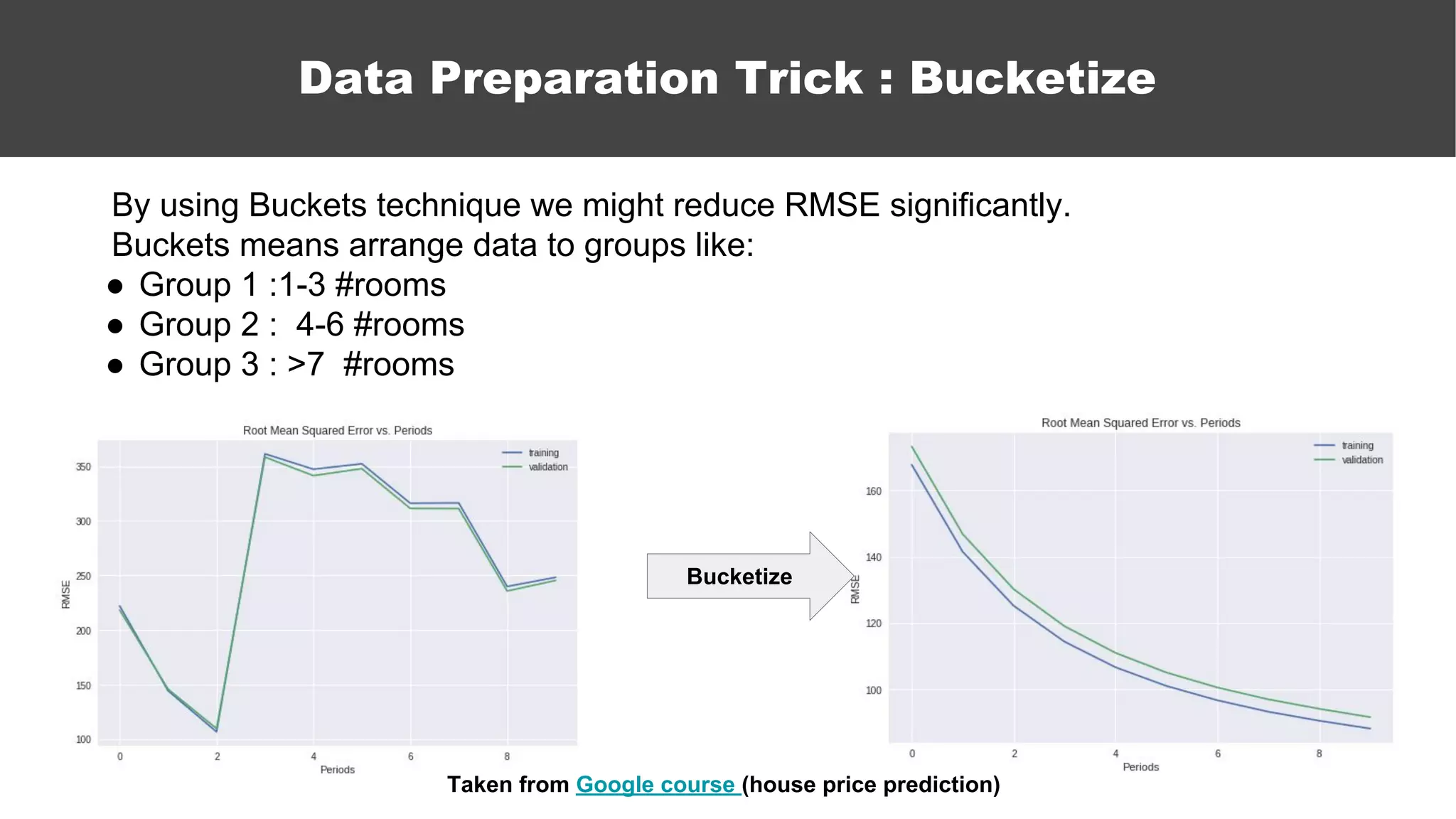

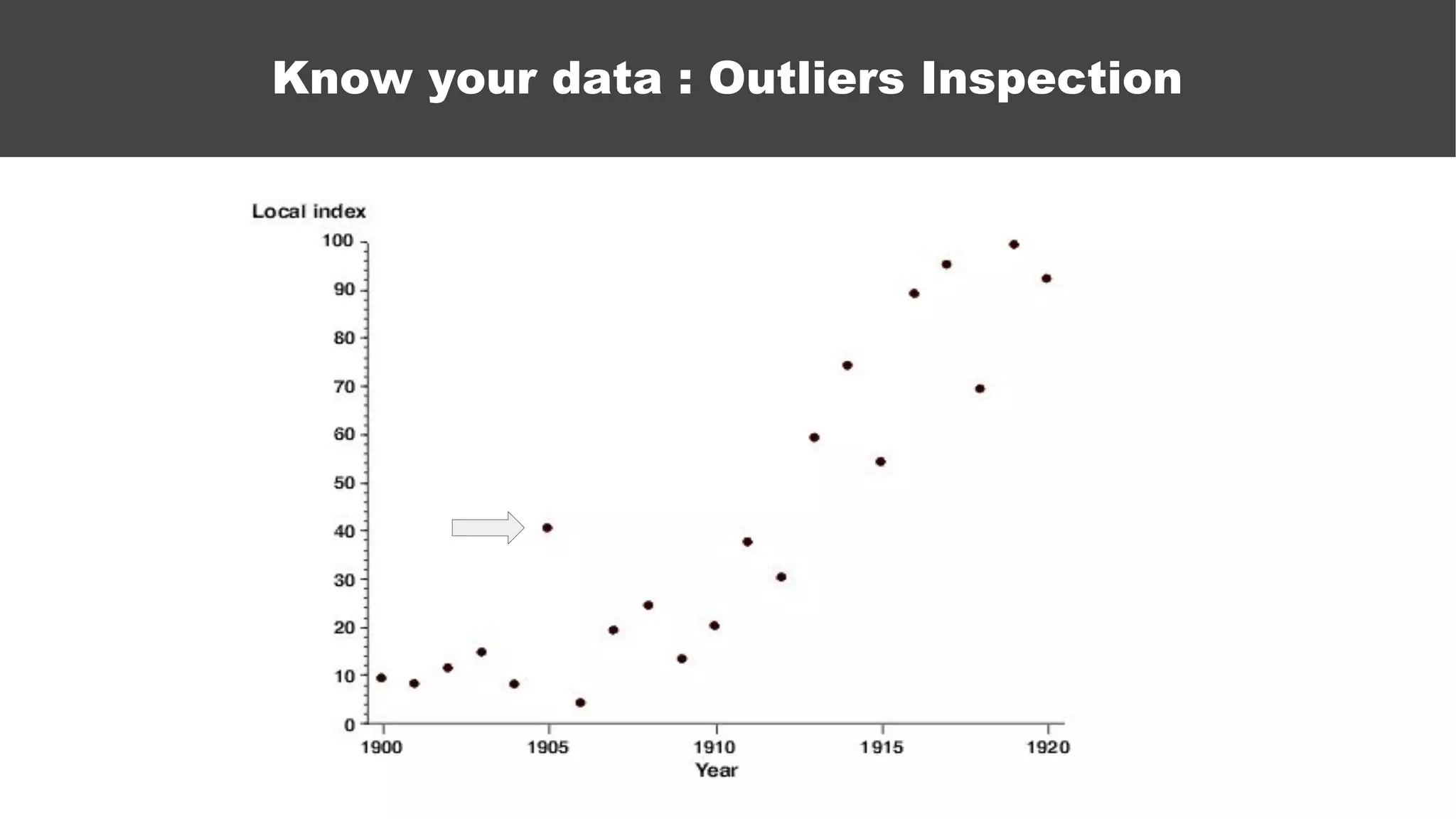

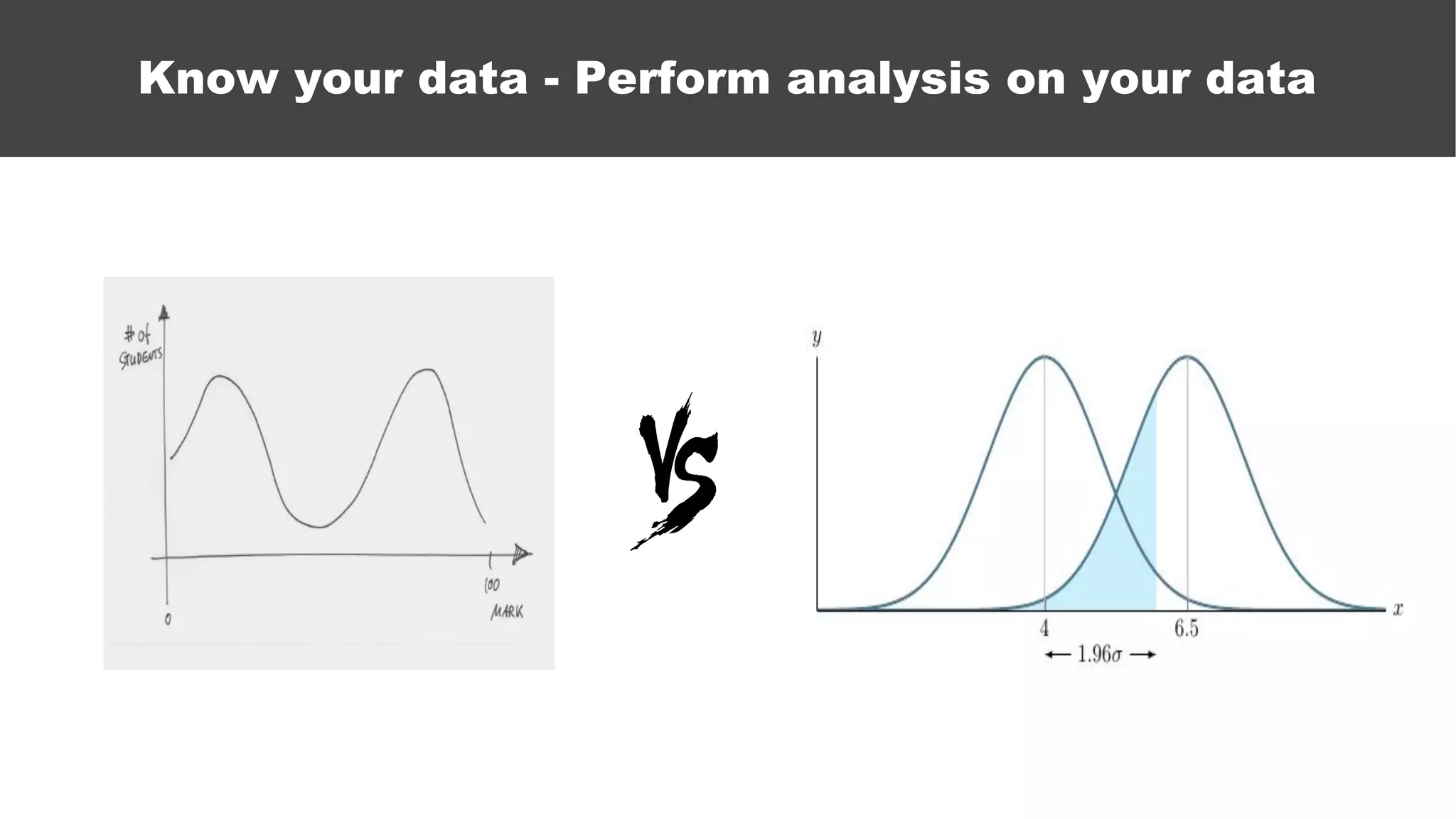

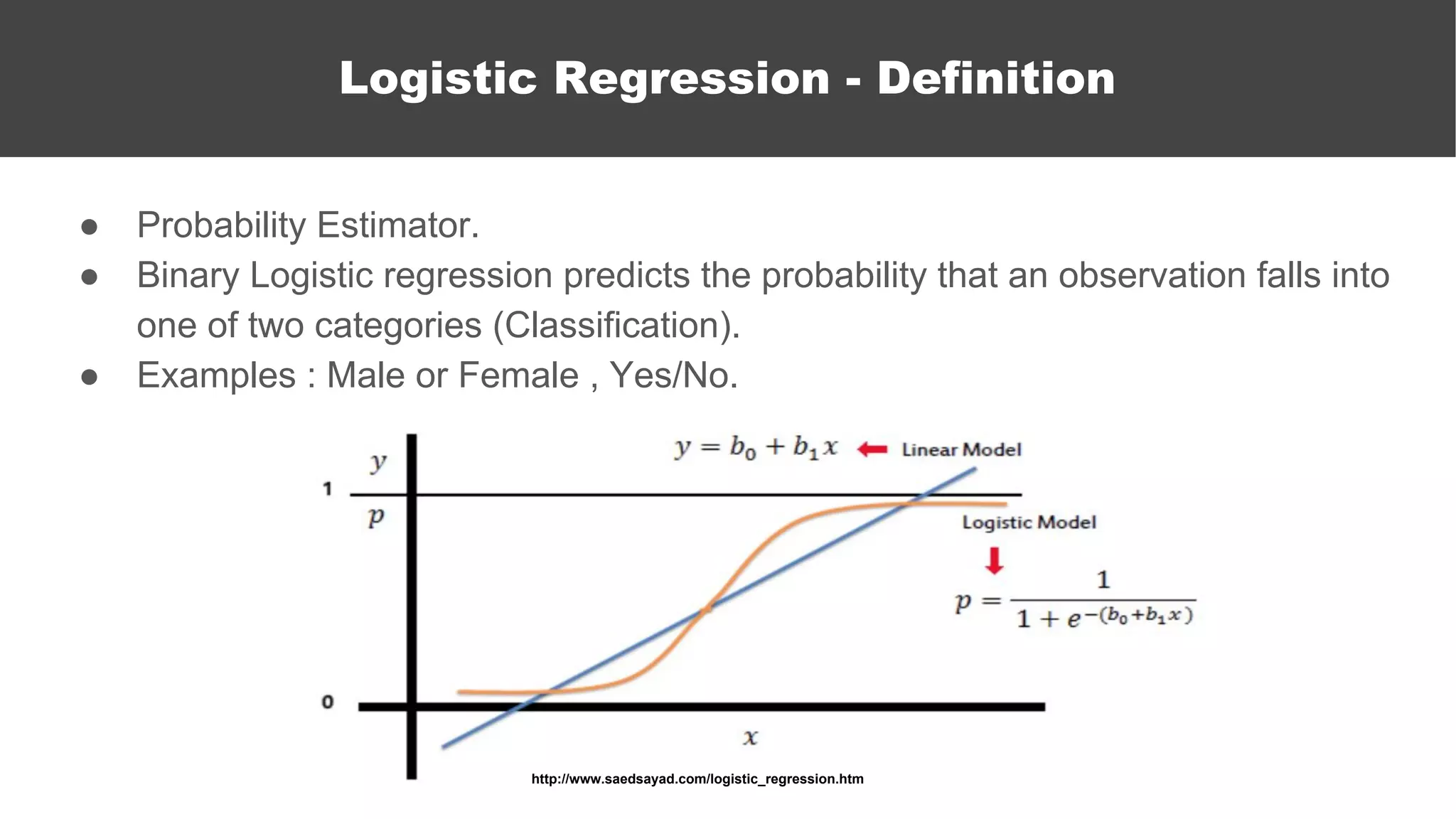

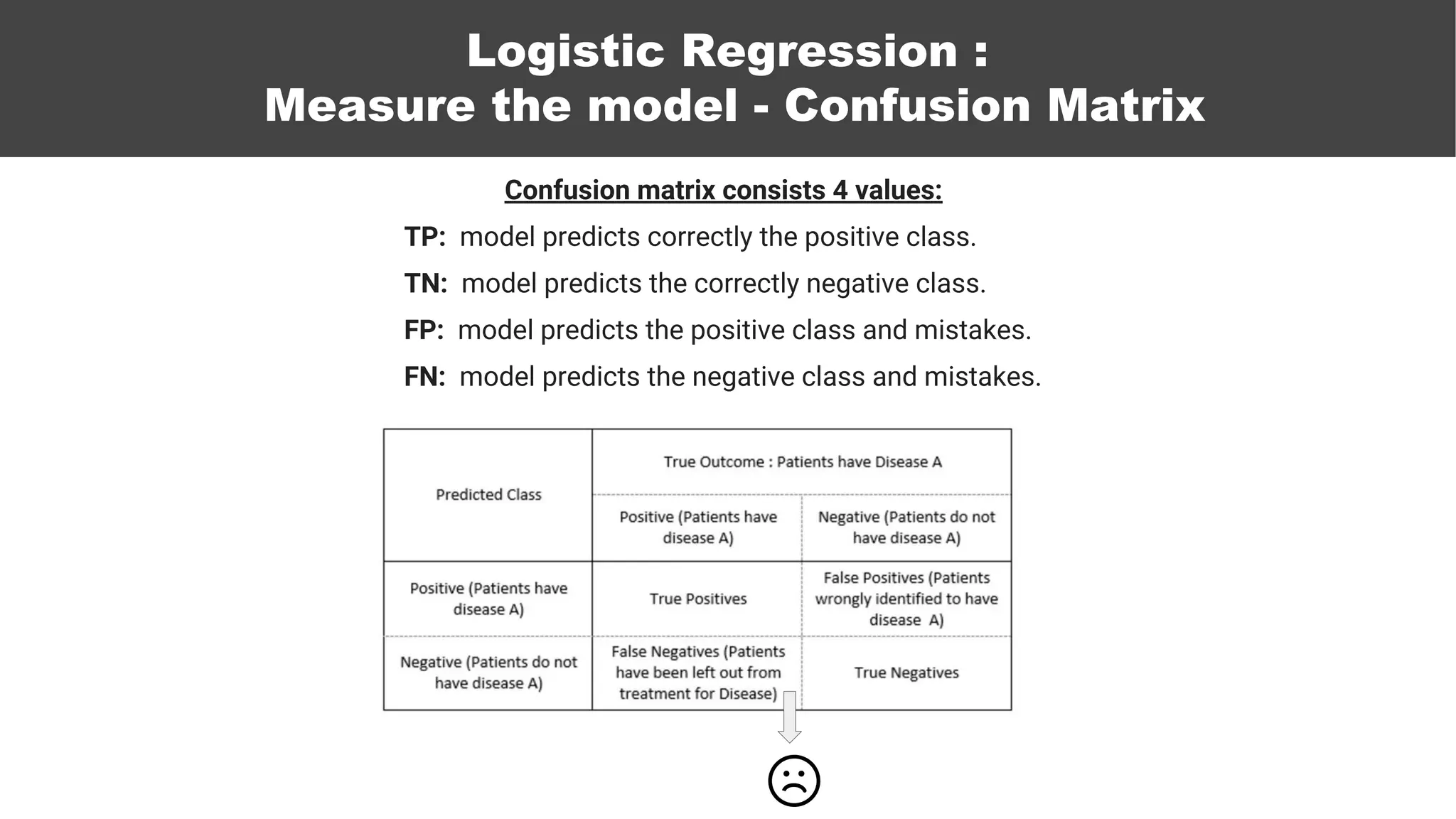

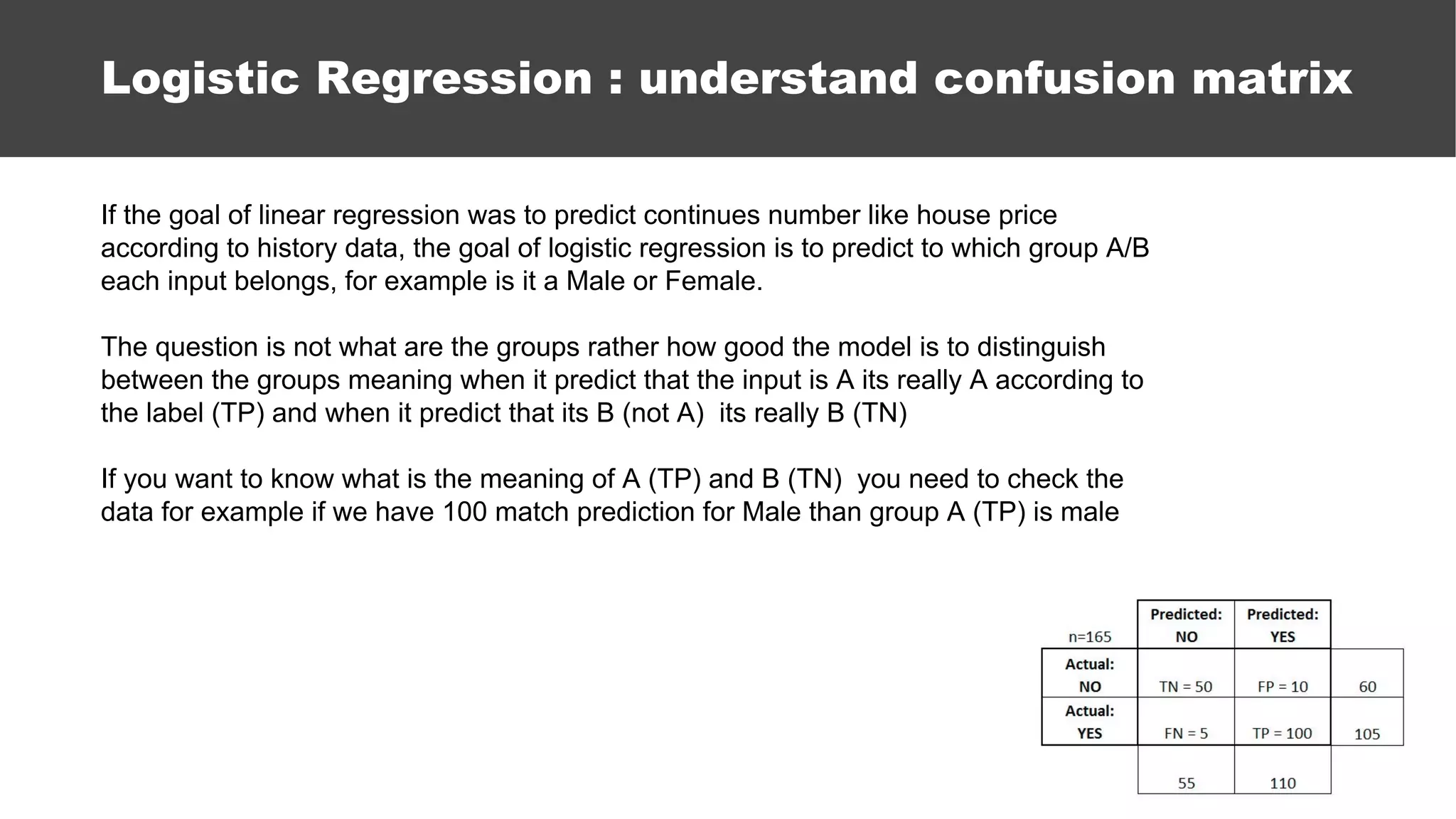

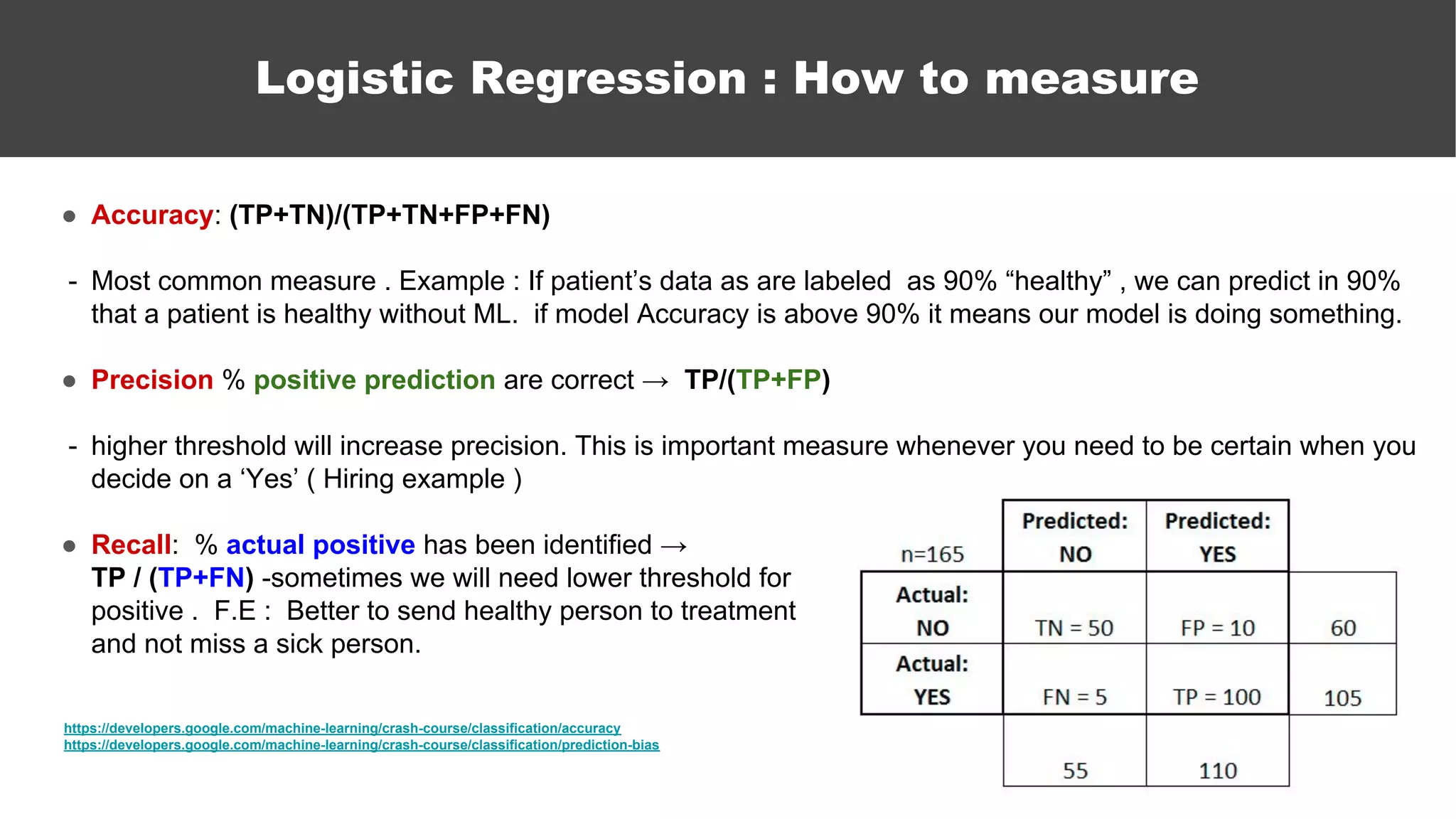

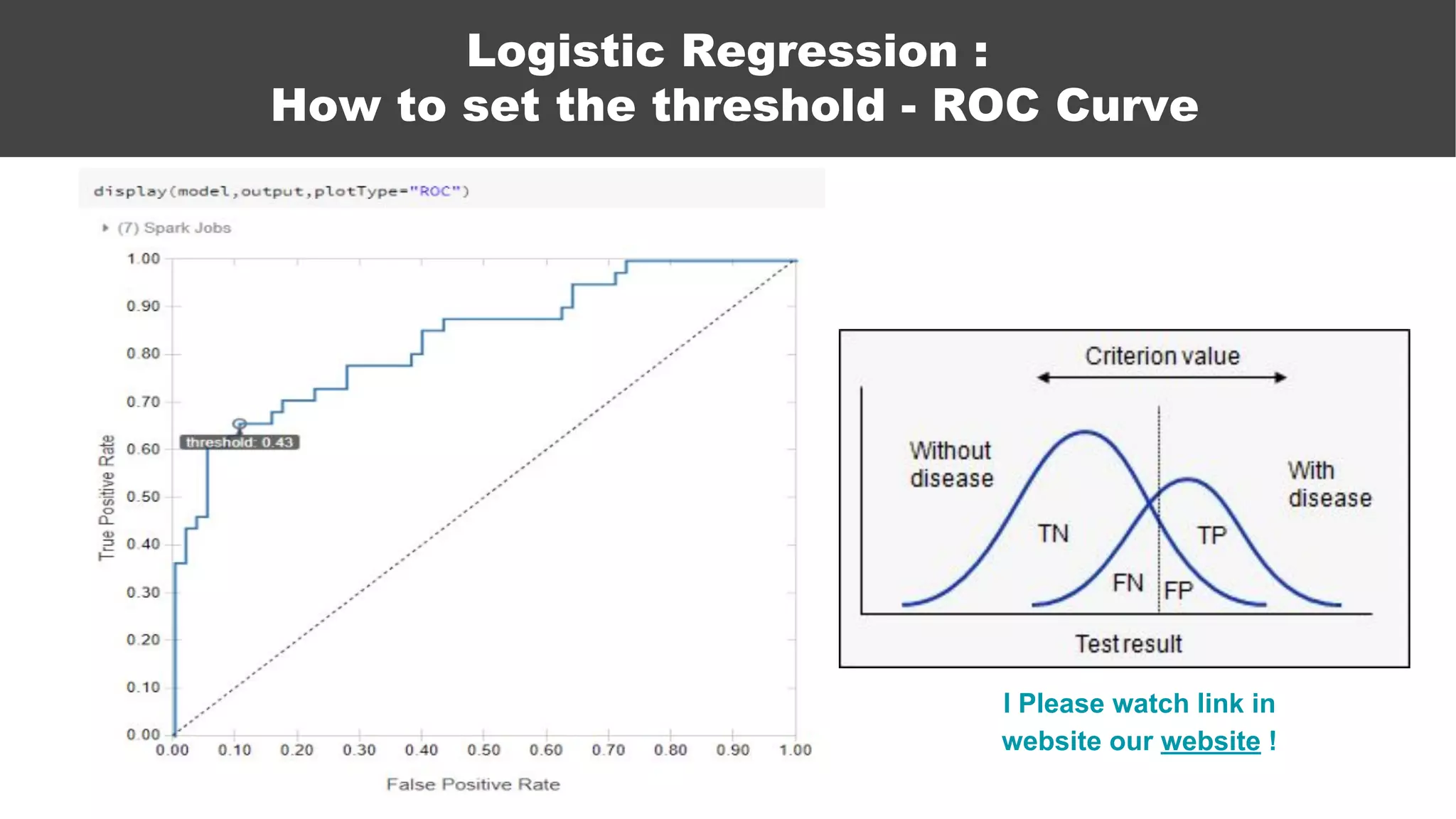

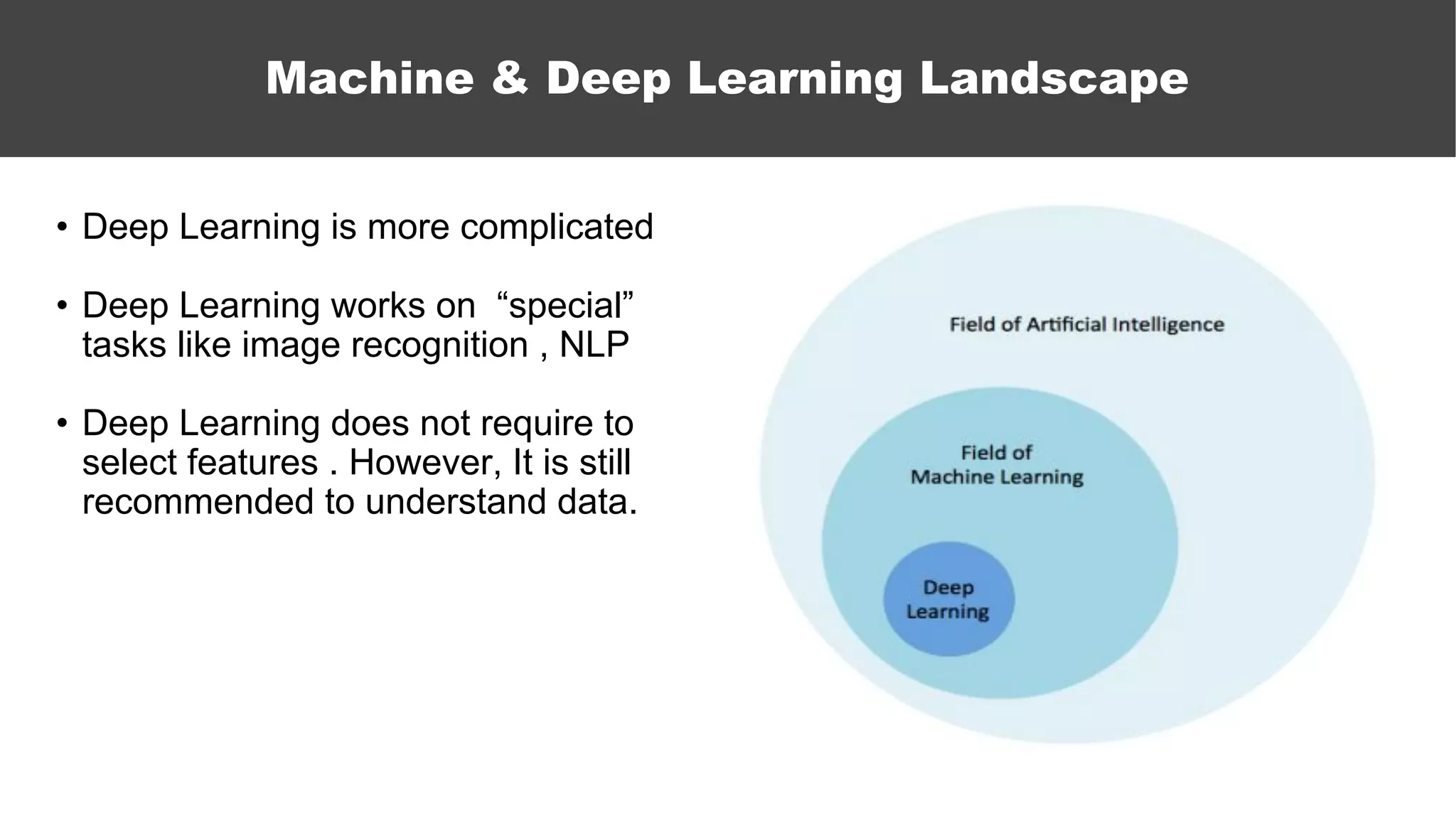

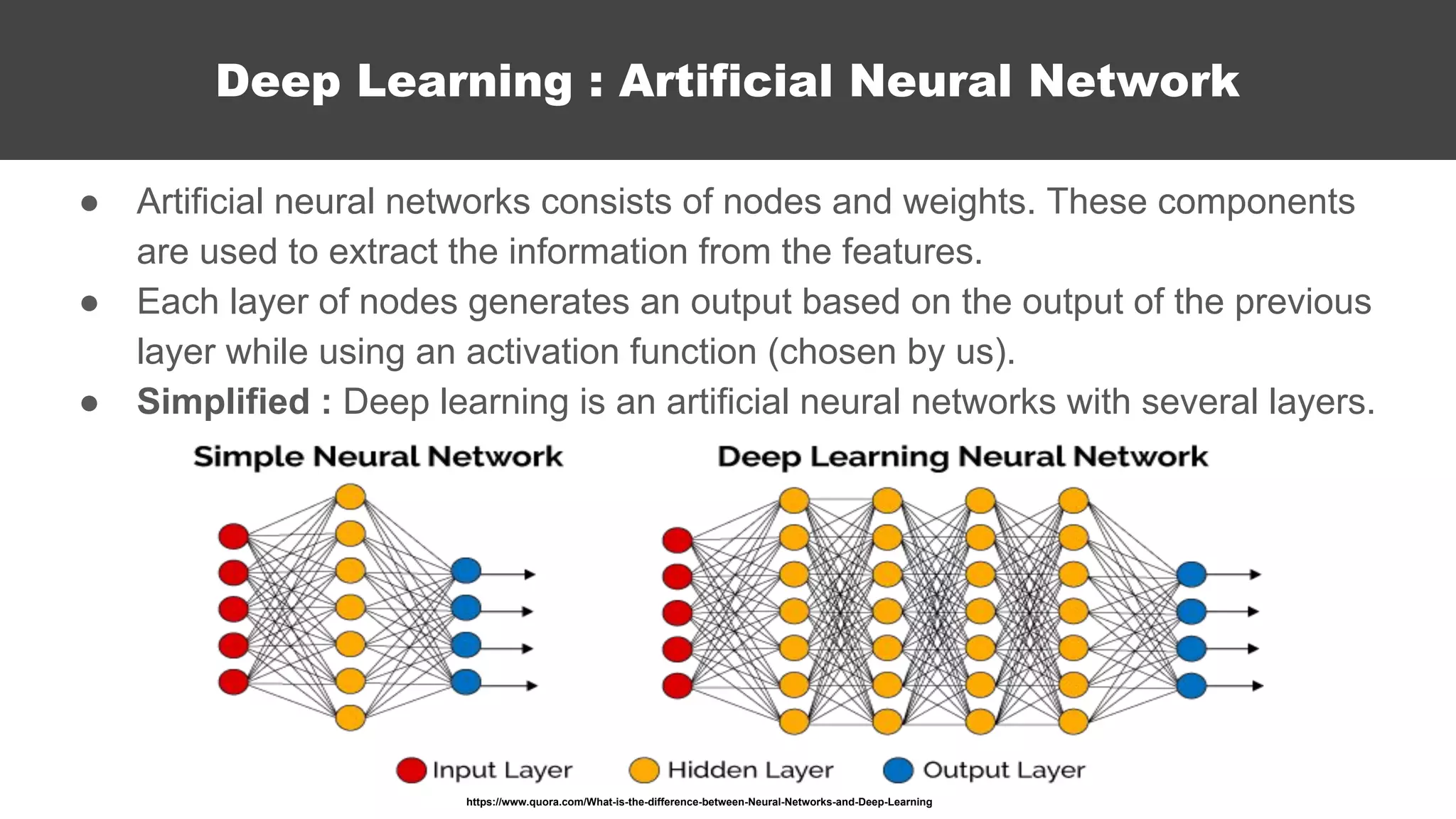

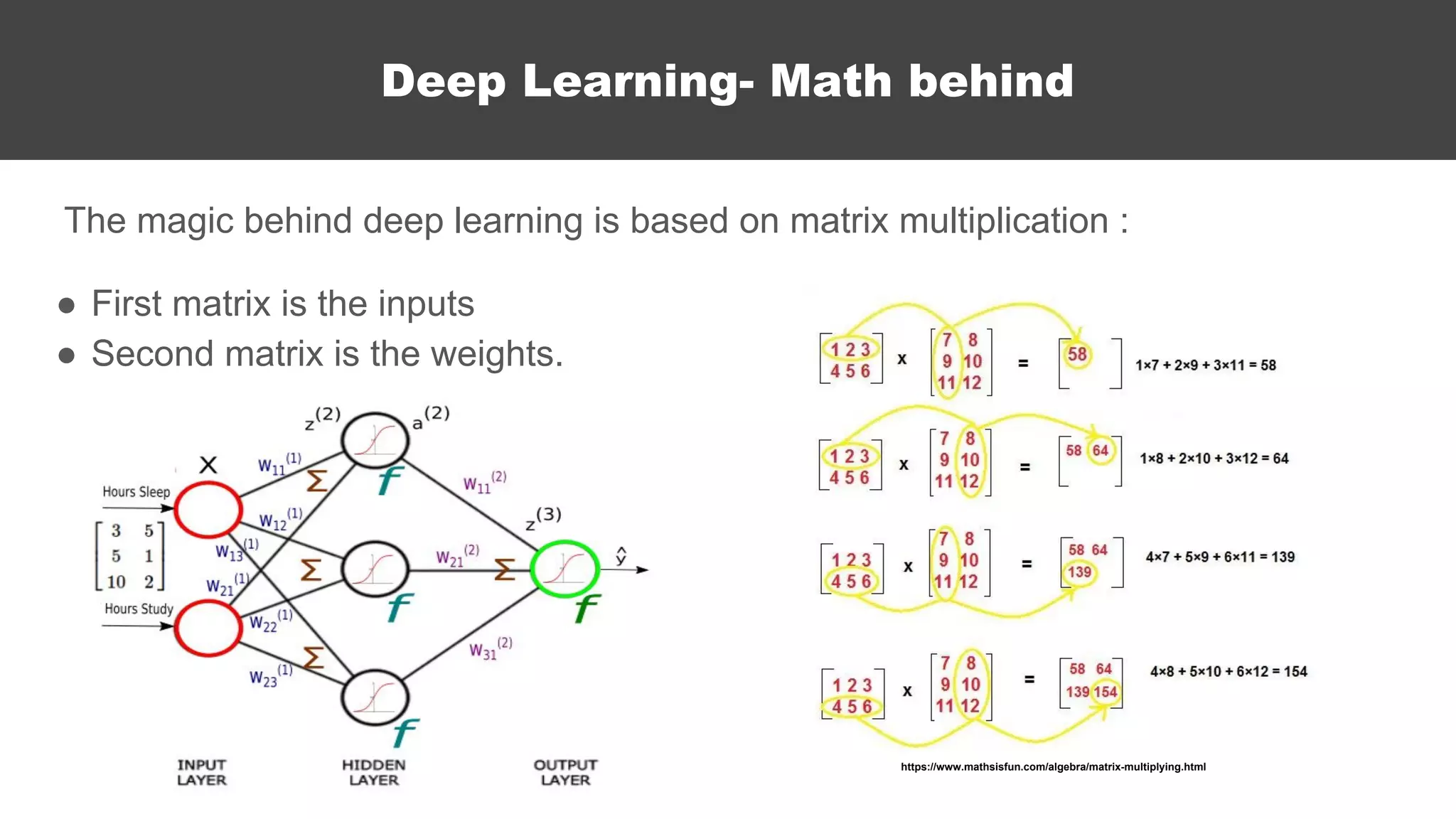

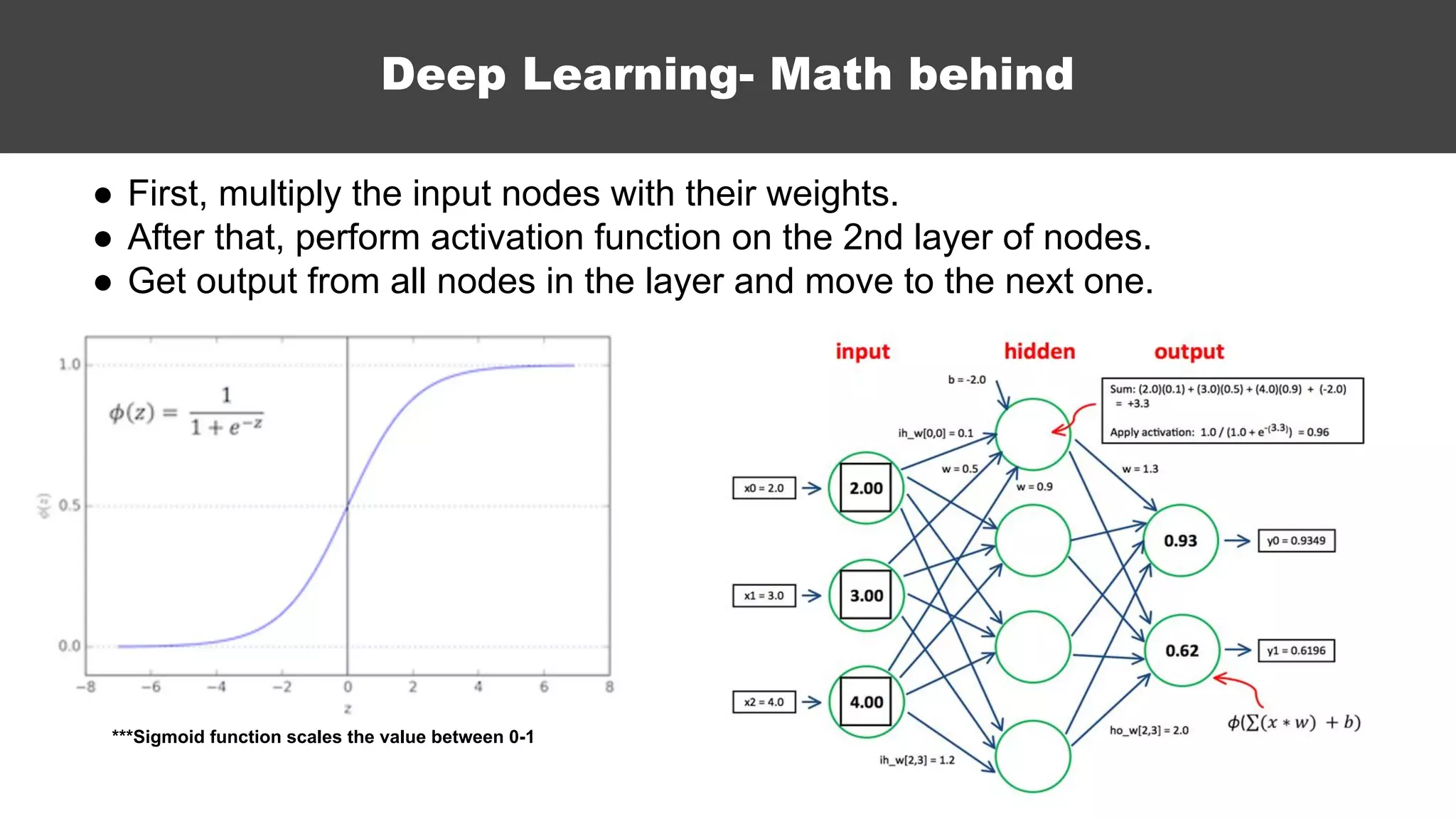

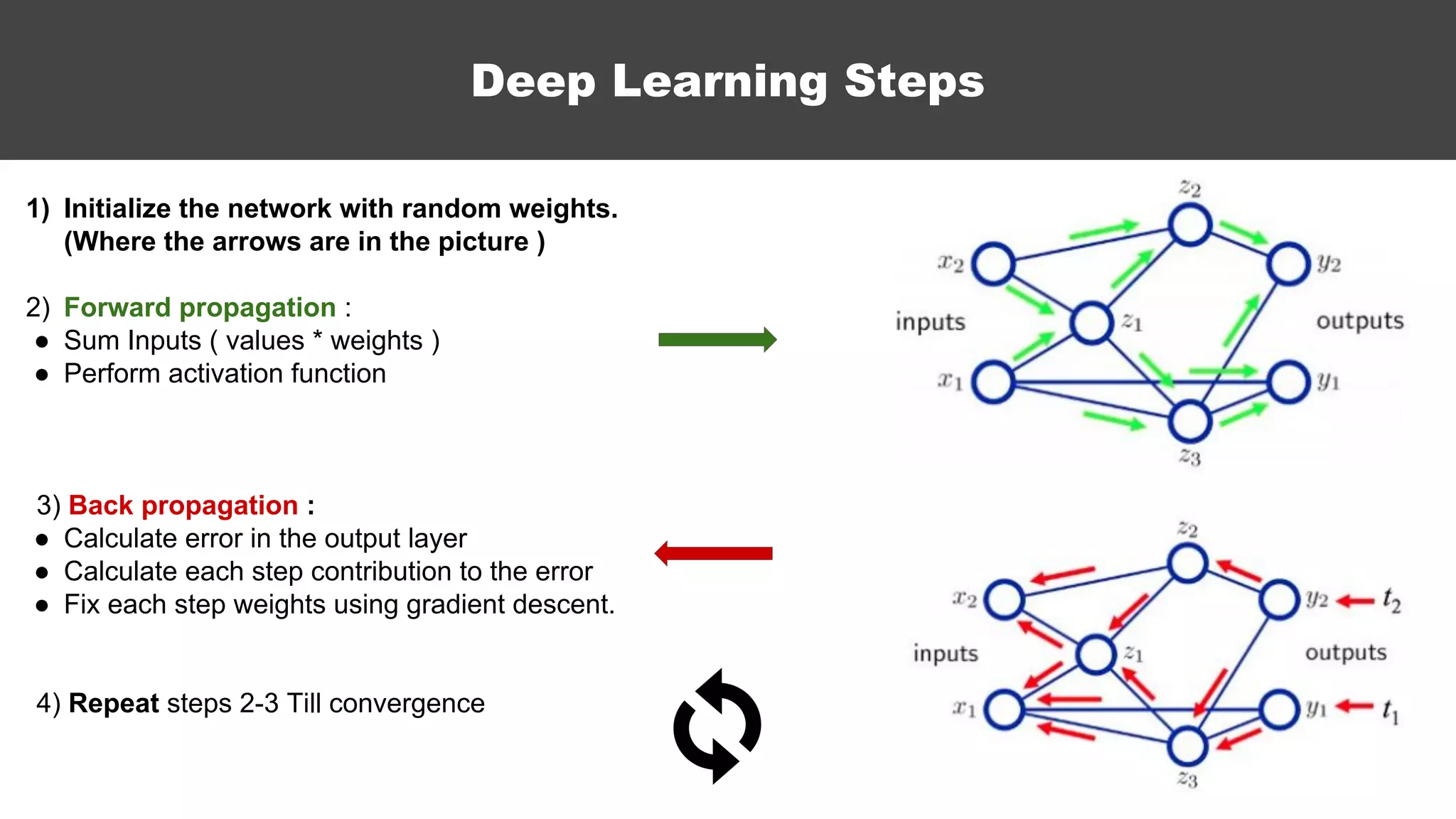

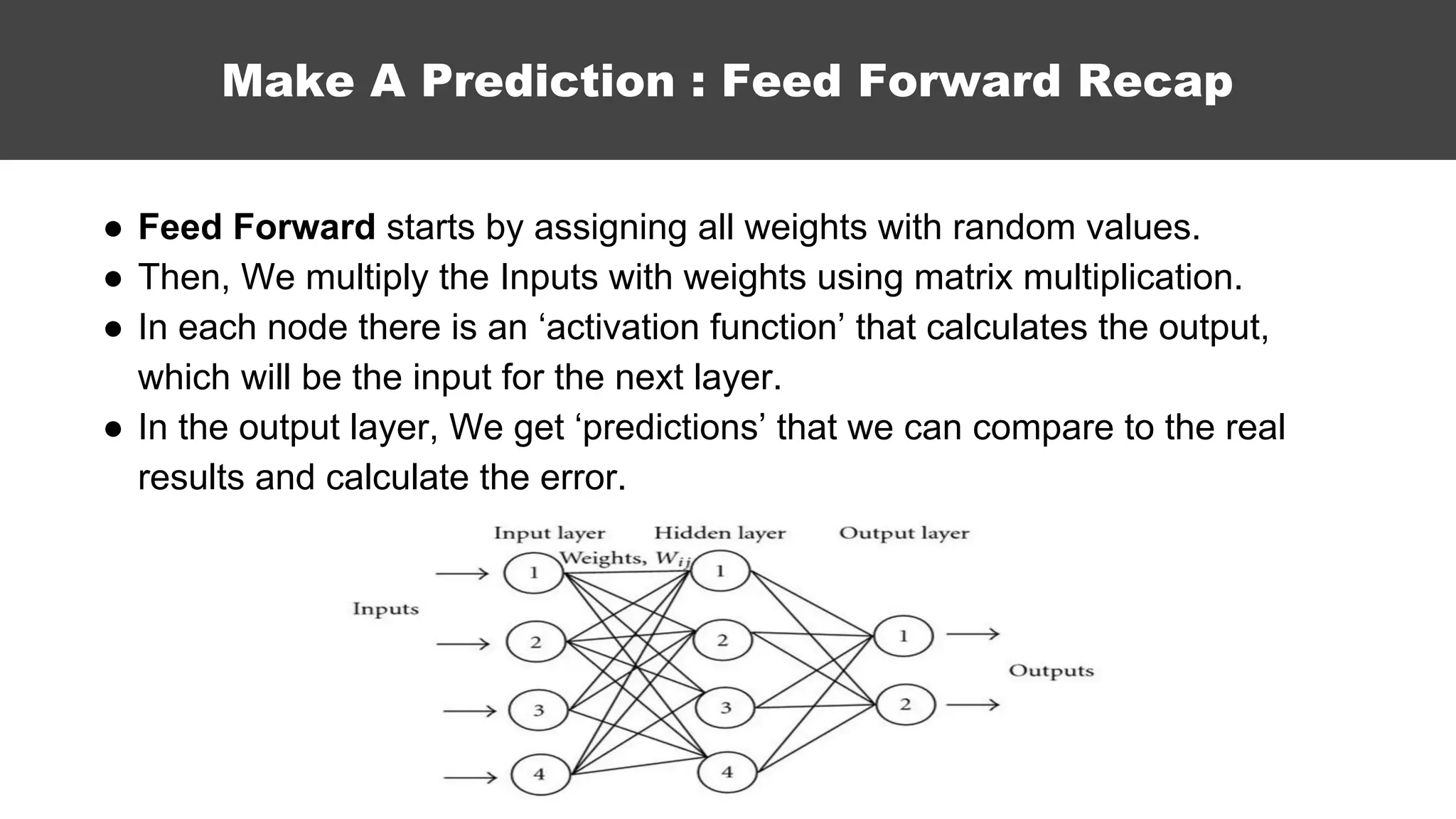

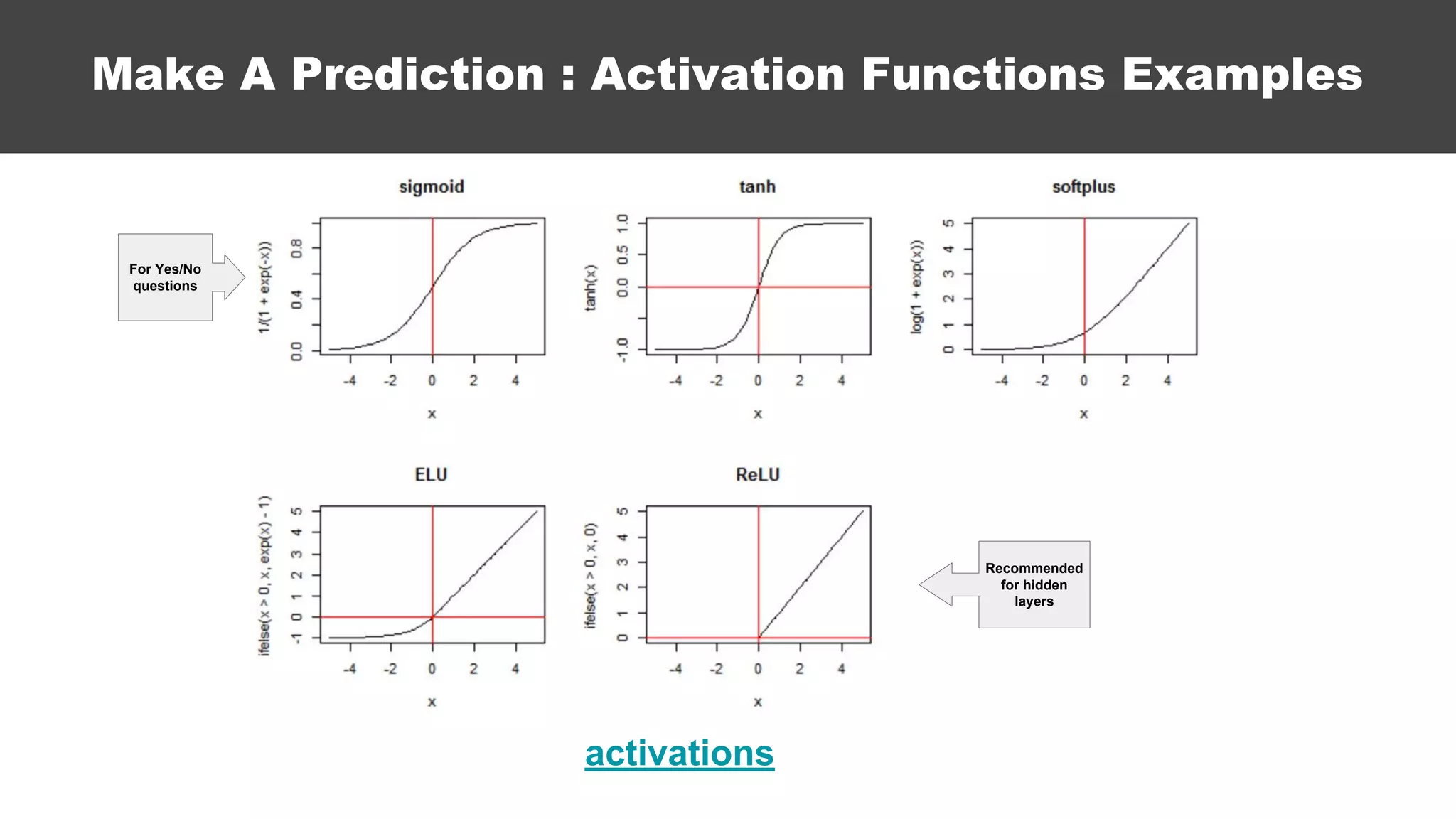

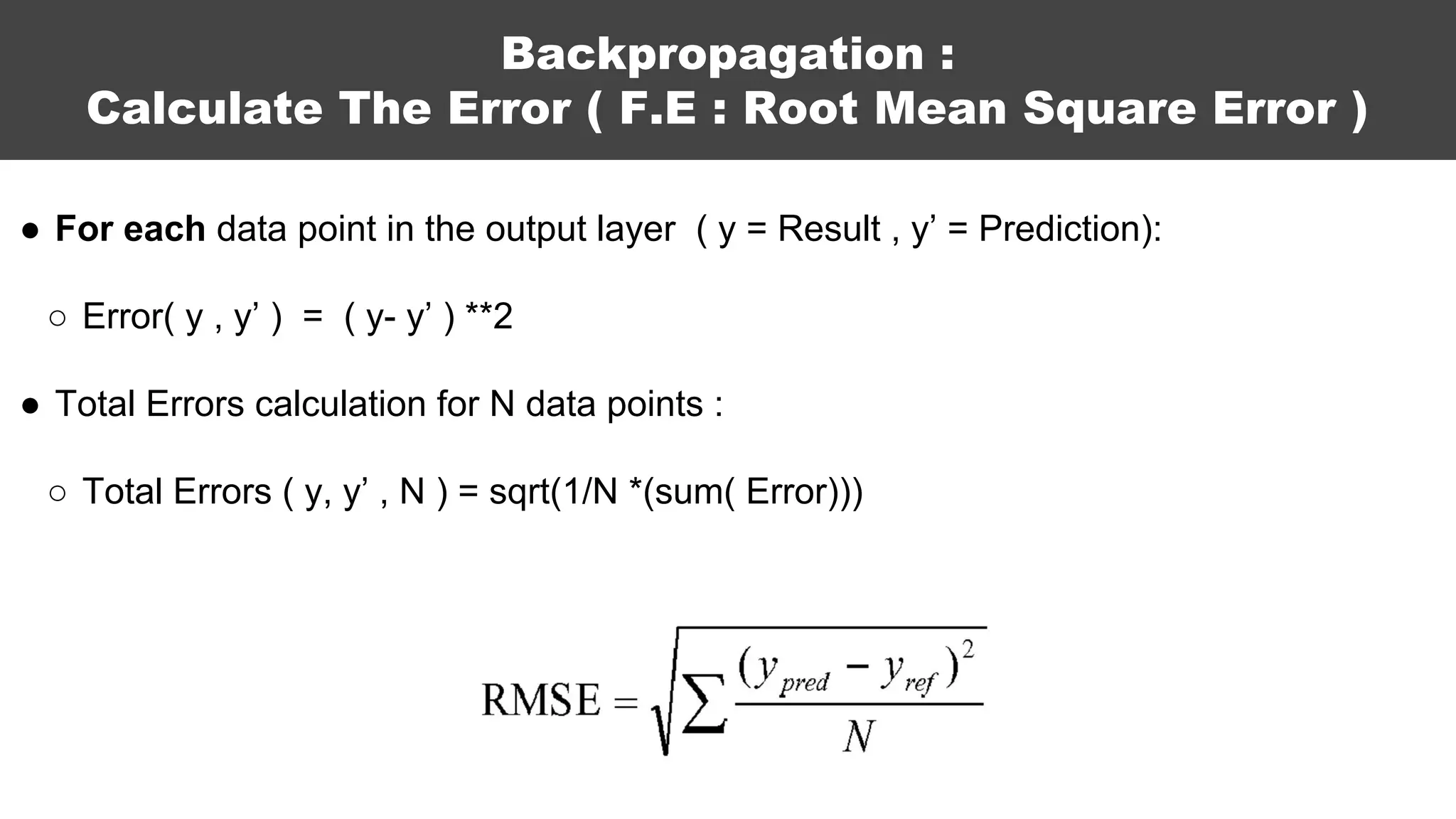

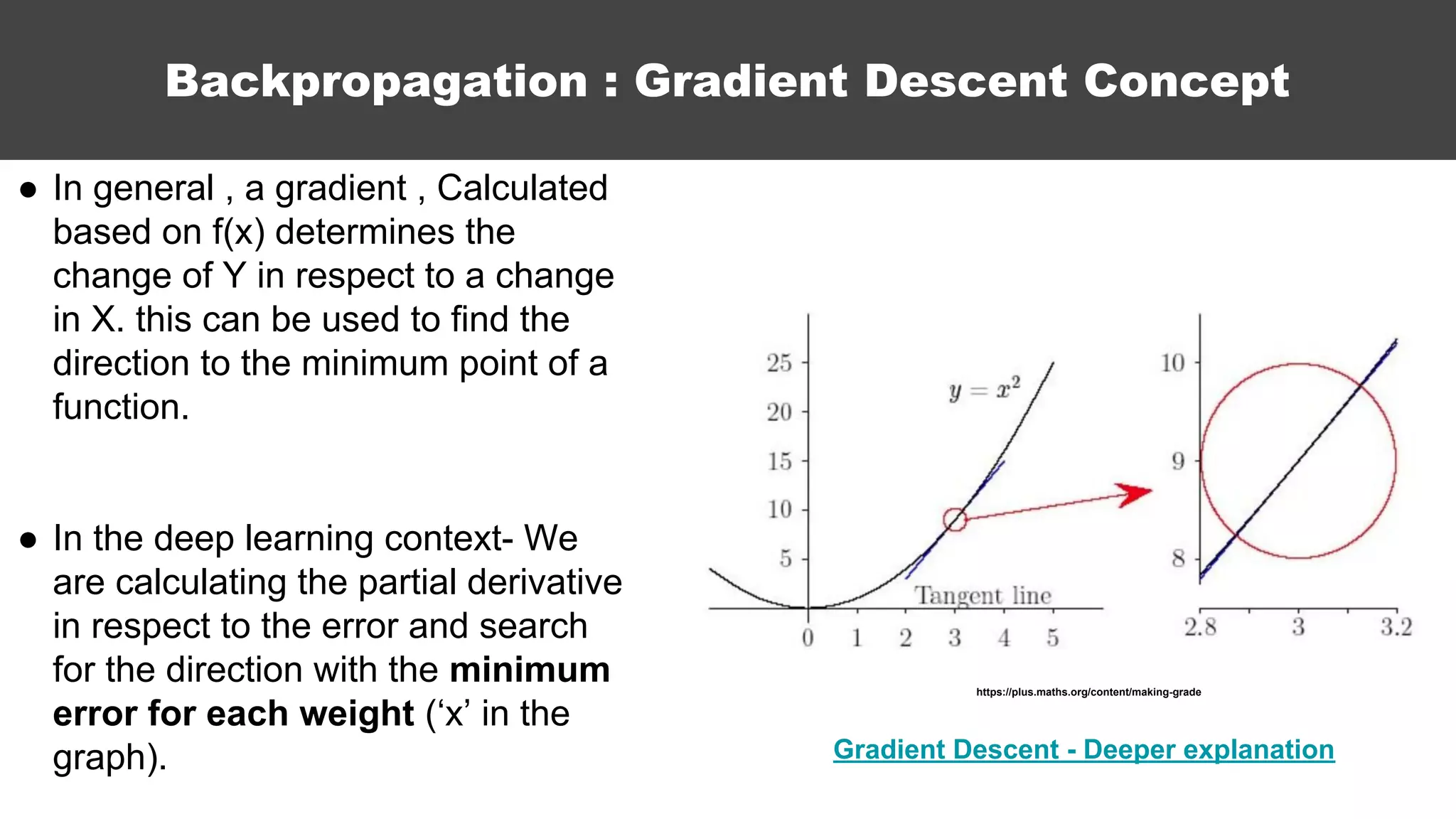

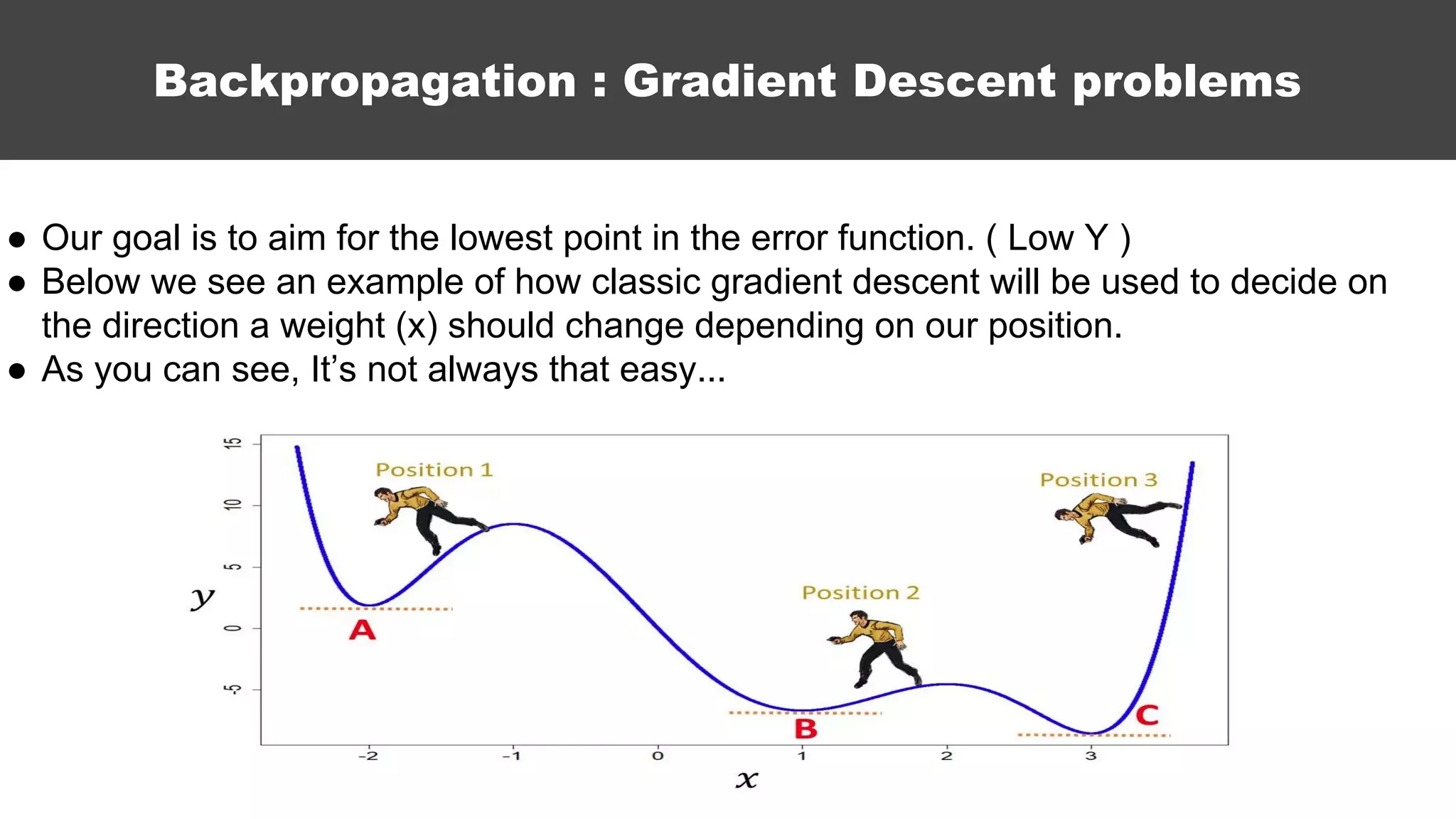

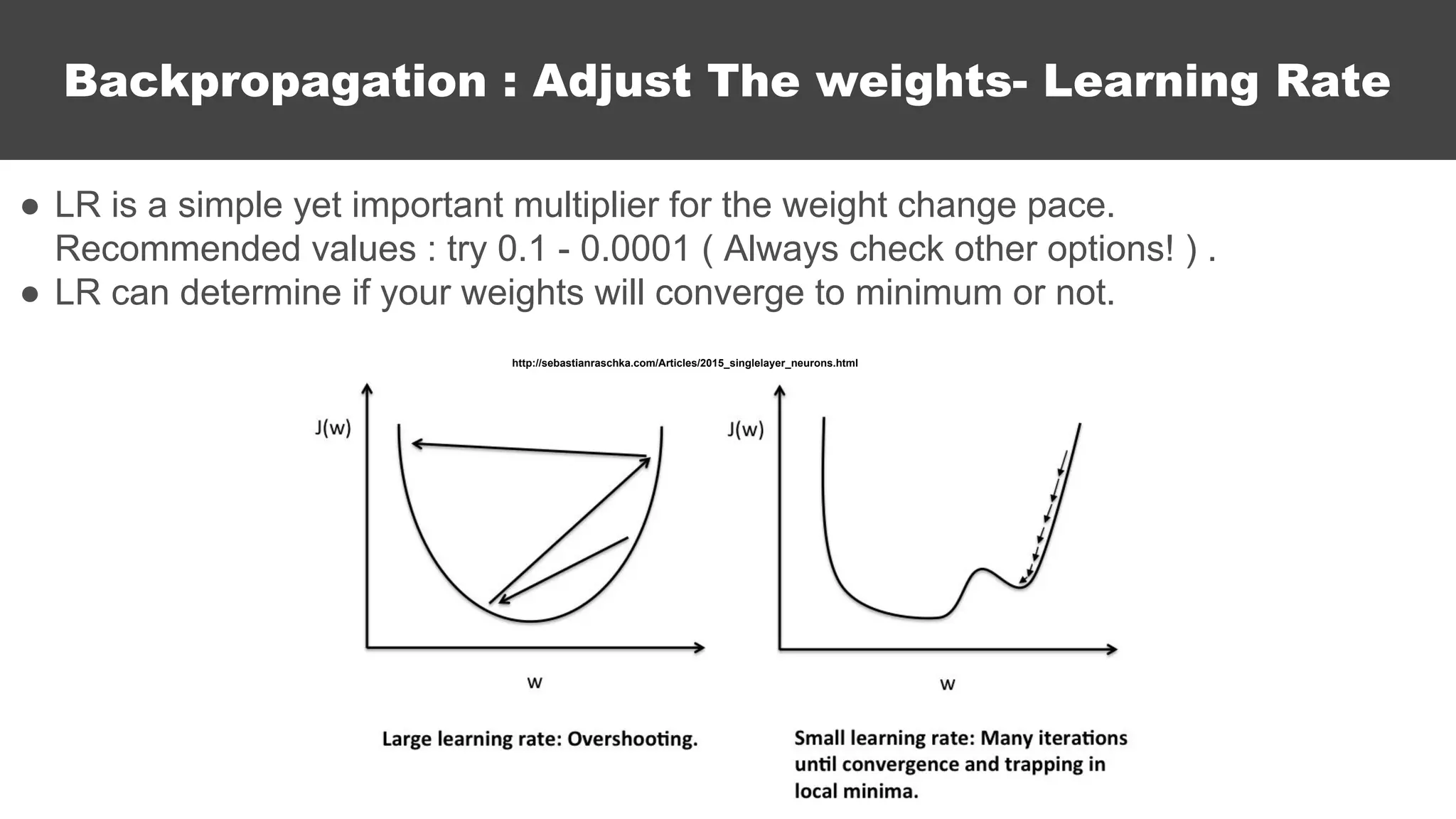

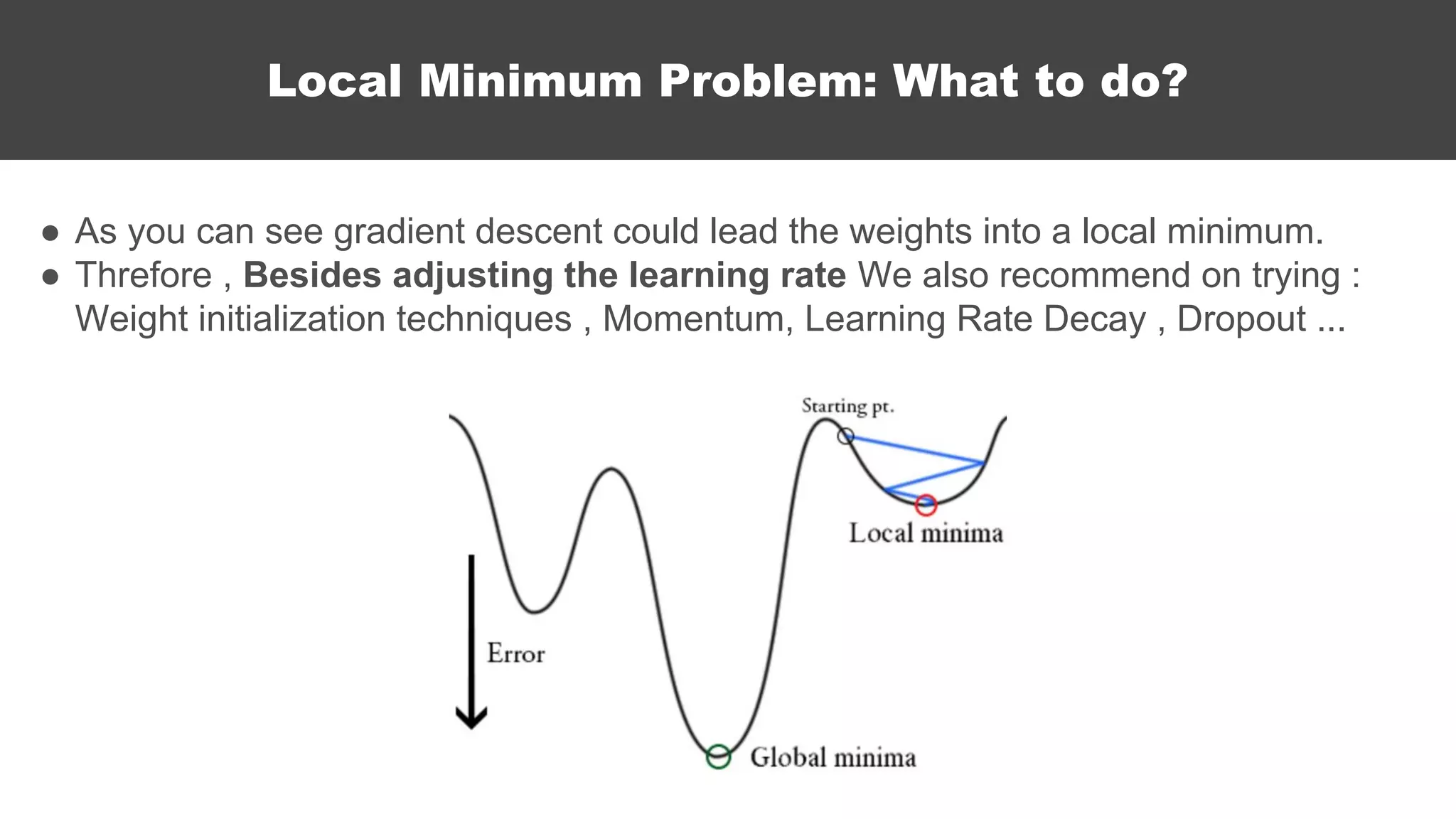

The document serves as an introductory guide to machine learning, covering key concepts such as linear and logistic regression, neural networks, and deep learning. It distinguishes supervised from unsupervised learning, surveys common machine learning algorithms, and explains techniques for model evaluation and tuning. Throughout, it emphasizes practical applications, coding examples, and the challenges of building machine learning models.