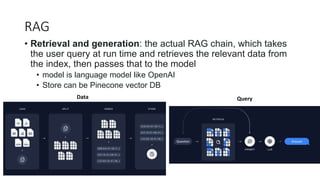

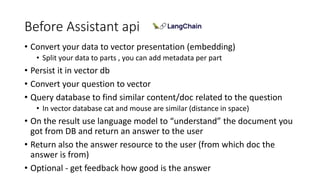

The document discusses using a vector database to enable question answering over custom data. Key points:

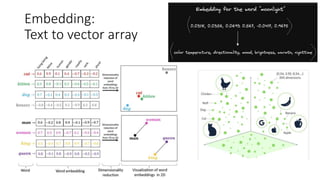

- Data is converted into vector embeddings and stored in a vector database such as Pinecone, which supports similarity search over those embeddings.

- When a user asks a question, the question is also converted into a vector and used to query the database. The most similar stored content is retrieved and passed to a language model as context for generating an answer.
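The embed, store, and query flow described above can be sketched in plain Python. This is a minimal, non-authoritative illustration that assumes two stand-ins: `embed` is a hypothetical bag-of-words function in place of a real embedding model, and `InMemoryVectorStore` is a hypothetical brute-force store in place of a vector database such as Pinecone. Neither reflects Pinecone's actual API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for a real embedding model.

    Returns a unit-length bag-of-words vector (word -> weight) so that texts
    sharing words point in similar directions.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit-length, so their dot product equals the cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class InMemoryVectorStore:
    """Hypothetical toy store standing in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[str, dict[str, float], str]] = []

    def upsert(self, item_id: str, text: str) -> None:
        # Replace any existing entry with the same id, then store the embedding with its text.
        self.items = [item for item in self.items if item[0] != item_id]
        self.items.append((item_id, embed(text), text))

    def query(self, question: str, top_k: int = 3) -> list[tuple[float, str, str]]:
        # Embed the question and rank every stored item by similarity (brute force).
        q = embed(question)
        scored = [(cosine_similarity(q, vec), item_id, text) for item_id, vec, text in self.items]
        scored.sort(key=lambda row: row[0], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.upsert("doc1", "Refunds are issued within 14 days of a return request.")
store.upsert("doc2", "Our office is open Monday to Friday.")
store.upsert("doc3", "Shipping is free for orders over 50 dollars.")

results = store.query("How many days until refunds are issued?", top_k=1)
# The retrieved text becomes the context handed to the language model.
context = "\n".join(text for _, _, text in results)
print(results[0][1])  # doc1
```

A real deployment would replace `embed` with an embedding model and the brute-force loop with the database's indexed nearest-neighbour search. The retrieve-then-generate shape stays the same.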

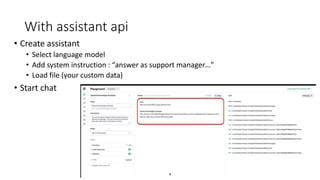

- The OpenAI API can also be used to build a language-model assistant that is loaded with custom data and answers questions about that data in the role of a "support manager."
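A "support manager" assistant like the one described above can be approximated by putting the custom data into the system message of a chat request. The sketch below is one possible approach under stated assumptions. It only assembles messages in the `role`/`content` format used by OpenAI's chat API and makes no network call. The prompt wording and the helper `build_messages` are hypothetical, and this is not the Assistants API.

```python
# Hypothetical system prompt; the wording is an illustration, not taken from the source.
SUPPORT_MANAGER_PROMPT = (
    "You are a support manager. Answer the customer's question using only "
    "the reference material below. If the answer is not in the material, say so."
)


def build_messages(context_chunks: list[str], question: str) -> list[dict[str, str]]:
    """Hypothetical helper: assemble chat messages in role/content form.

    The custom data (context_chunks) is numbered and placed in the system
    message; the user's question goes in a separate user message.
    """
    reference = "\n\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(context_chunks))
    system = f"{SUPPORT_MANAGER_PROMPT}\n\nReference material:\n{reference}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    ["Refunds are issued within 14 days of a return request."],
    "When will I get my refund?",
)
```

With version 1.x of the `openai` Python package, this list could then be sent via `OpenAI().chat.completions.create(model=..., messages=messages)`. The model name is left open here. In practice, `context_chunks` would come from the vector database query rather than being hard-coded.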