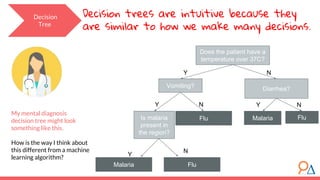

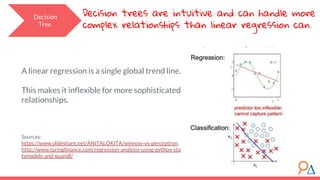

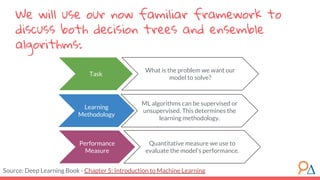

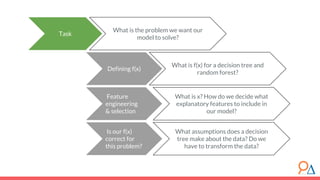

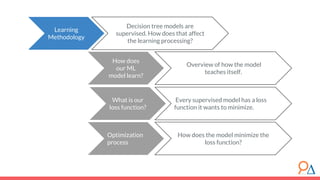

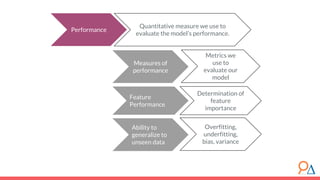

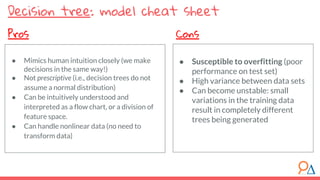

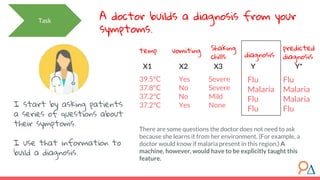

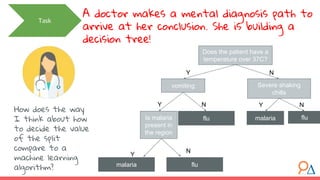

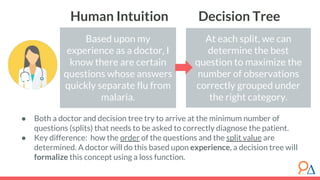

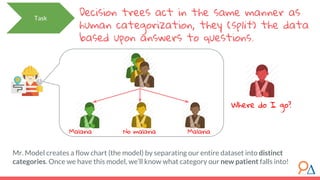

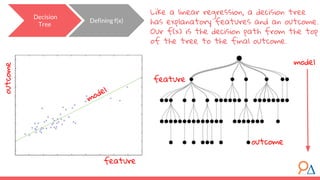

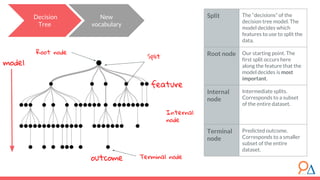

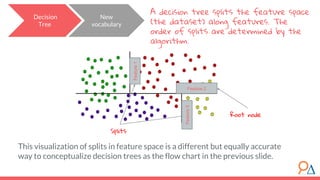

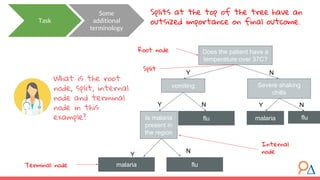

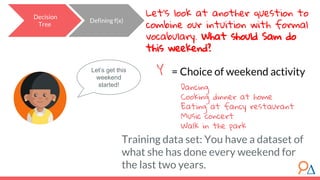

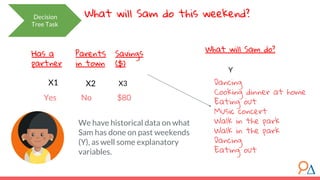

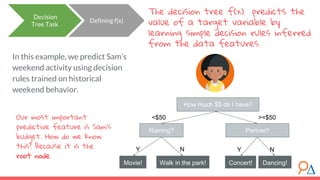

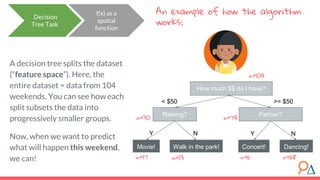

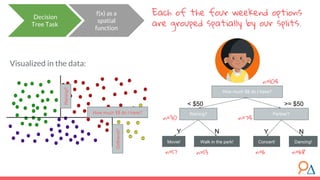

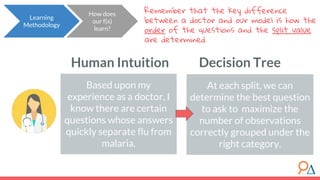

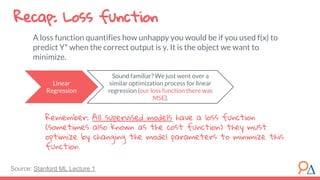

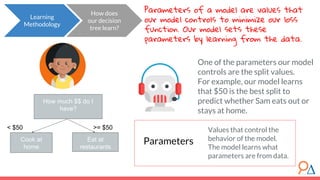

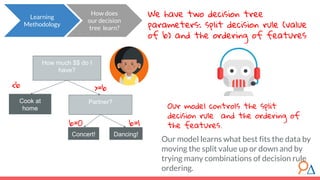

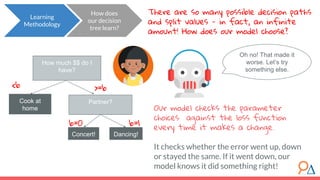

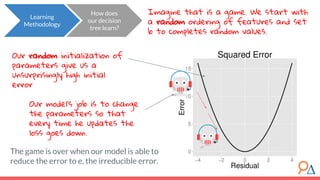

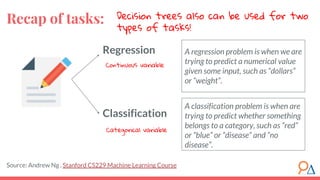

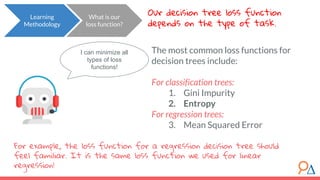

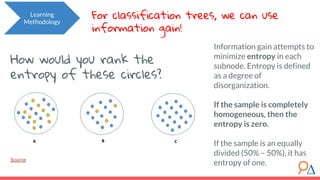

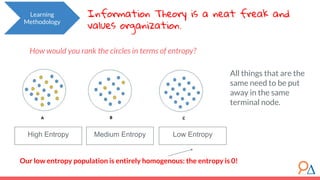

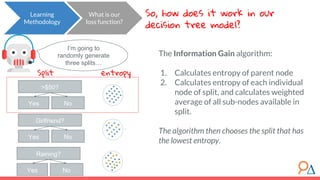

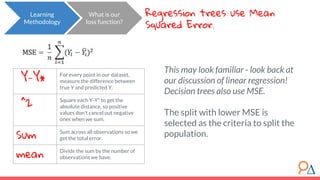

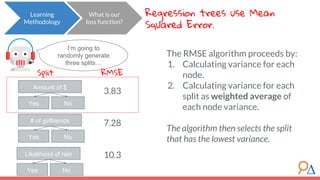

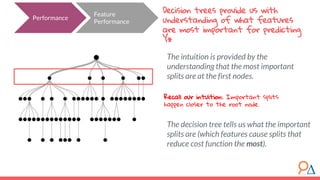

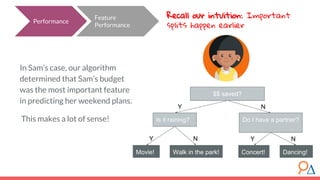

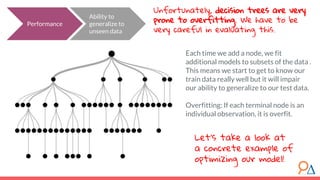

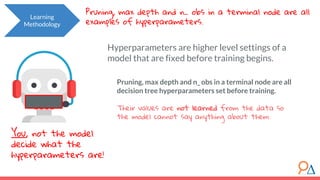

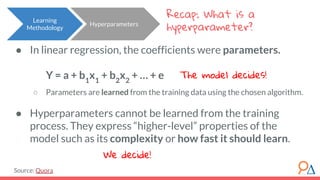

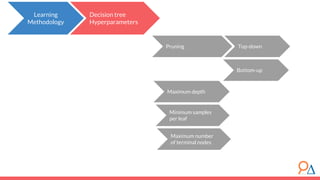

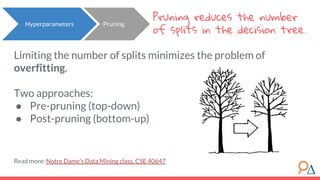

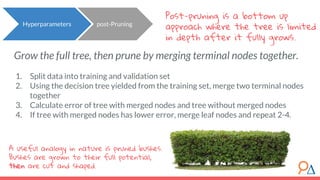

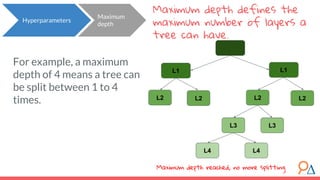

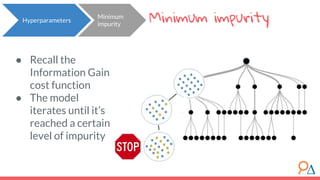

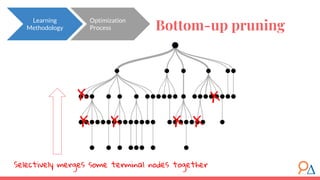

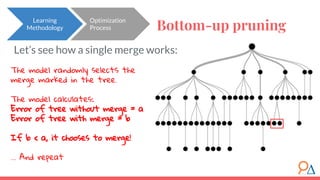

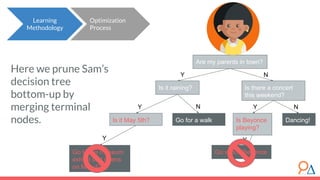

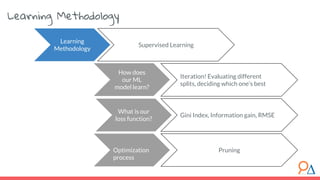

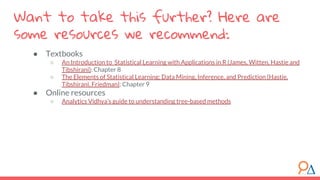

This document discusses decision trees, a machine learning algorithm used for classification and regression. It explains the structure of a decision tree, its intuitive similarity to human decision-making, and how a tree is learned: each split is chosen to minimize a loss, such as Gini impurity for classification or mean squared error for regression. It also covers the main trade-off of decision trees: they are easy to interpret but prone to overfitting.
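To make the split-selection idea in this summary concrete, here is a minimal sketch in Python. It assumes a single numeric feature and a greedy search over midpoints between sorted feature values. The function names (`gini`, `mse`, `best_split`) are illustrative and are not taken from the slides or from any library.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def mse(values):
    """Mean squared error of the values around their own mean."""
    n = len(values)
    if n == 0:
        return 0.0
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / n


def best_split(xs, ys, loss):
    """Return (threshold, weighted_loss) for the best split on one feature.

    Candidate thresholds are midpoints between consecutive distinct
    feature values. Each candidate is scored by the size-weighted
    average loss of the two children. If no split improves on the
    unsplit node, the threshold is None.
    """
    n = len(xs)
    best = (None, loss(ys))
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        score = (len(left) * loss(left) + len(right) * loss(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best


# Classification: labels separate cleanly between x = 2 and x = 3.
print(best_split([1, 2, 3, 4], ["a", "a", "b", "b"], gini))

# Regression: the same search, scored with mean squared error.
print(best_split([1, 2, 3, 4], [1.0, 1.0, 3.0, 3.0], mse))
```

A full tree learner would apply this search to every feature and recurse on the resulting children until a stopping rule (for example a maximum depth or minimum node size) is met. Limiting growth in this way is one common guard against the overfitting mentioned above.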