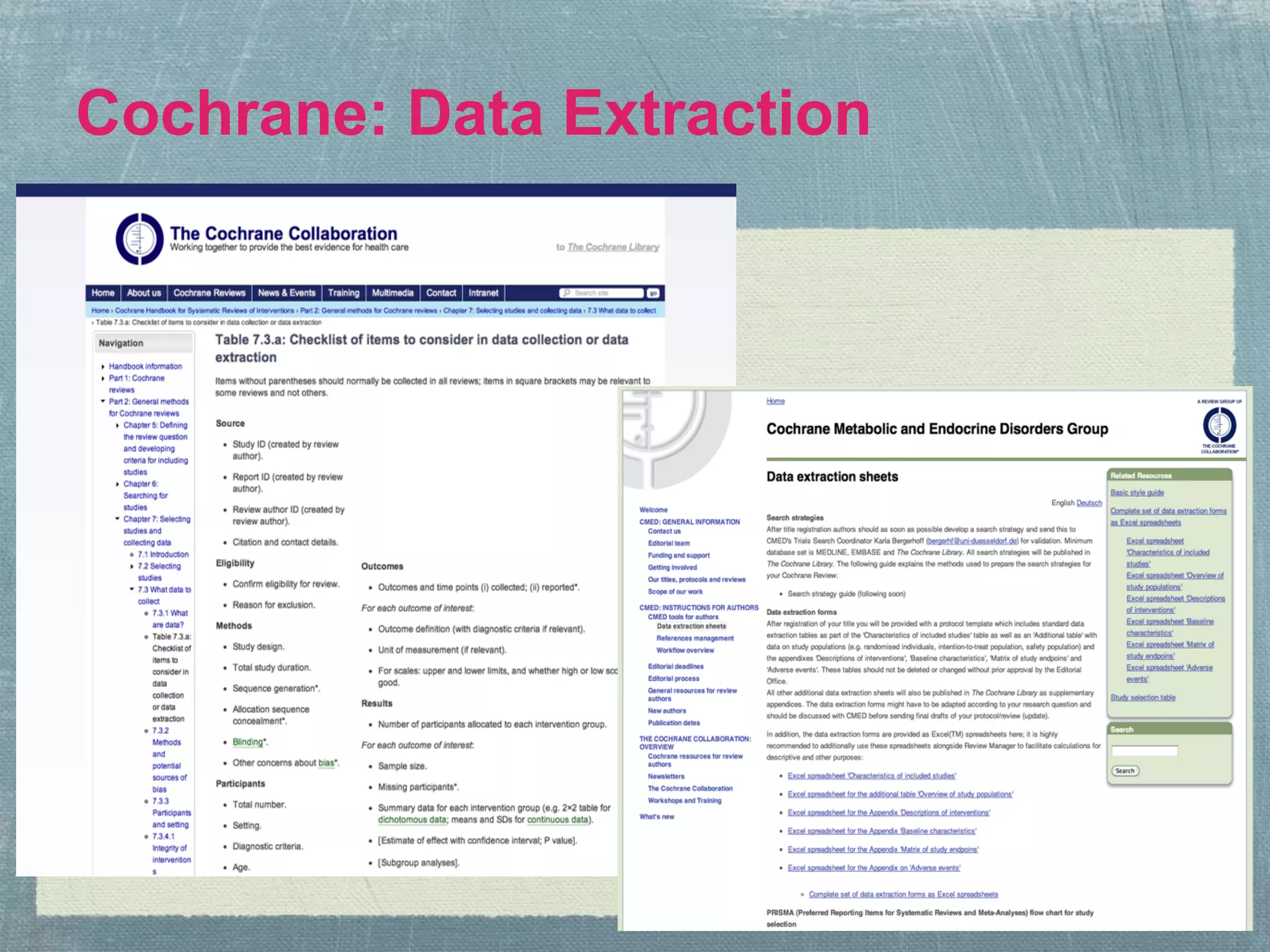

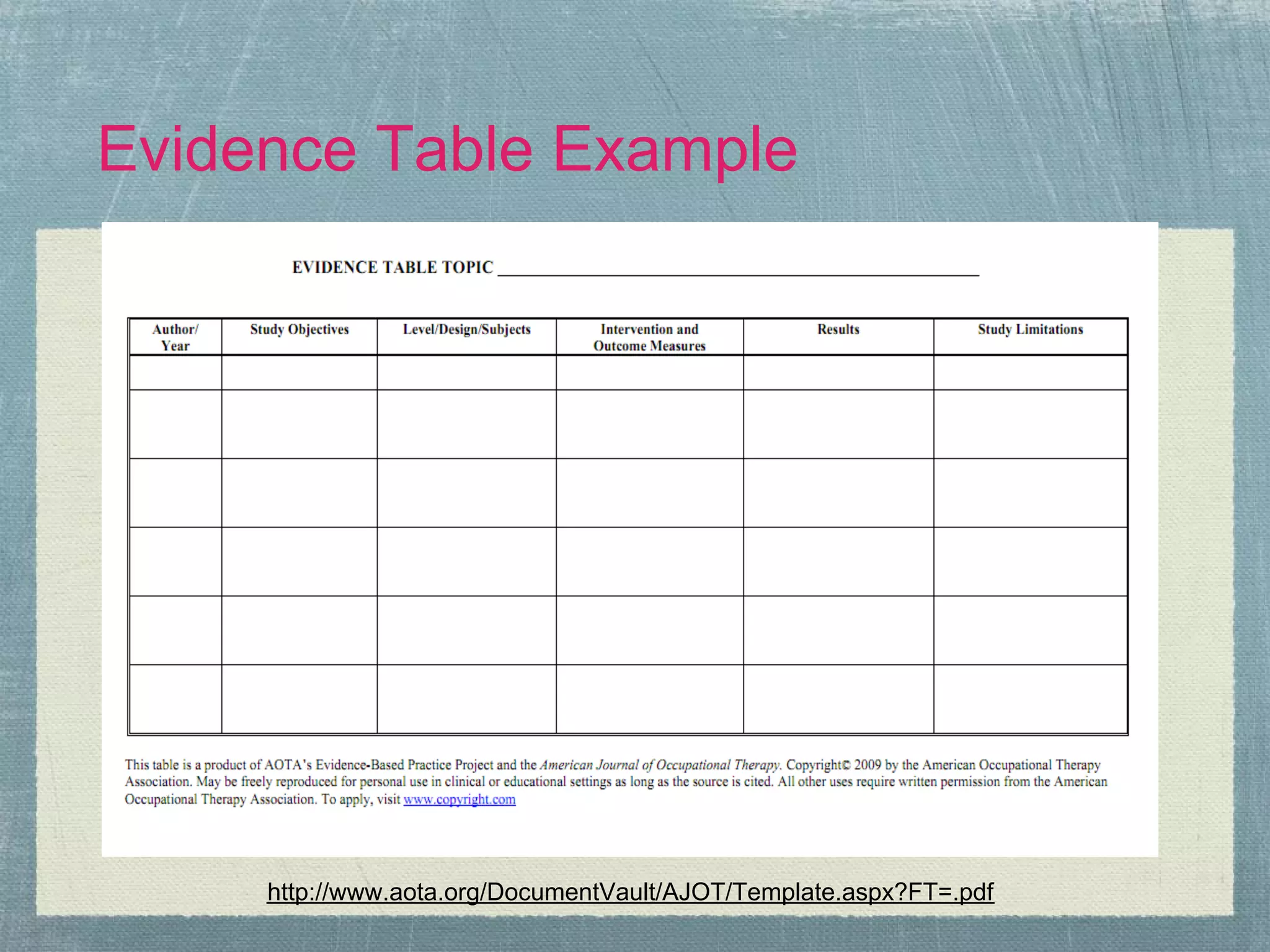

The document outlines the systematic review process, emphasizing its purpose, methodology, and the role of various team members, including librarians and clinical experts. It differentiates between evidence-based healthcare and systematic reviews, detailing the steps involved in conducting and reporting reviews. Additionally, it discusses best practices for search strategies, data extraction, and adherence to publication standards.

![What is Evidence-Based Healthcare?

● Short version:

● ‘Make your [clinical] decisions based on the best evidence available, integrated with your clinical judgment. That’s all it means. The best evidence, whatever that is.’

● Paraphrased from Dr. Ismail in conversation, circa 2003.](https://image.slidesharecdn.com/systematicreviewteamsprocessesexperiences-120420142505-phpapp02/75/Systematic-review-teams-processes-experiences-10-2048.jpg)

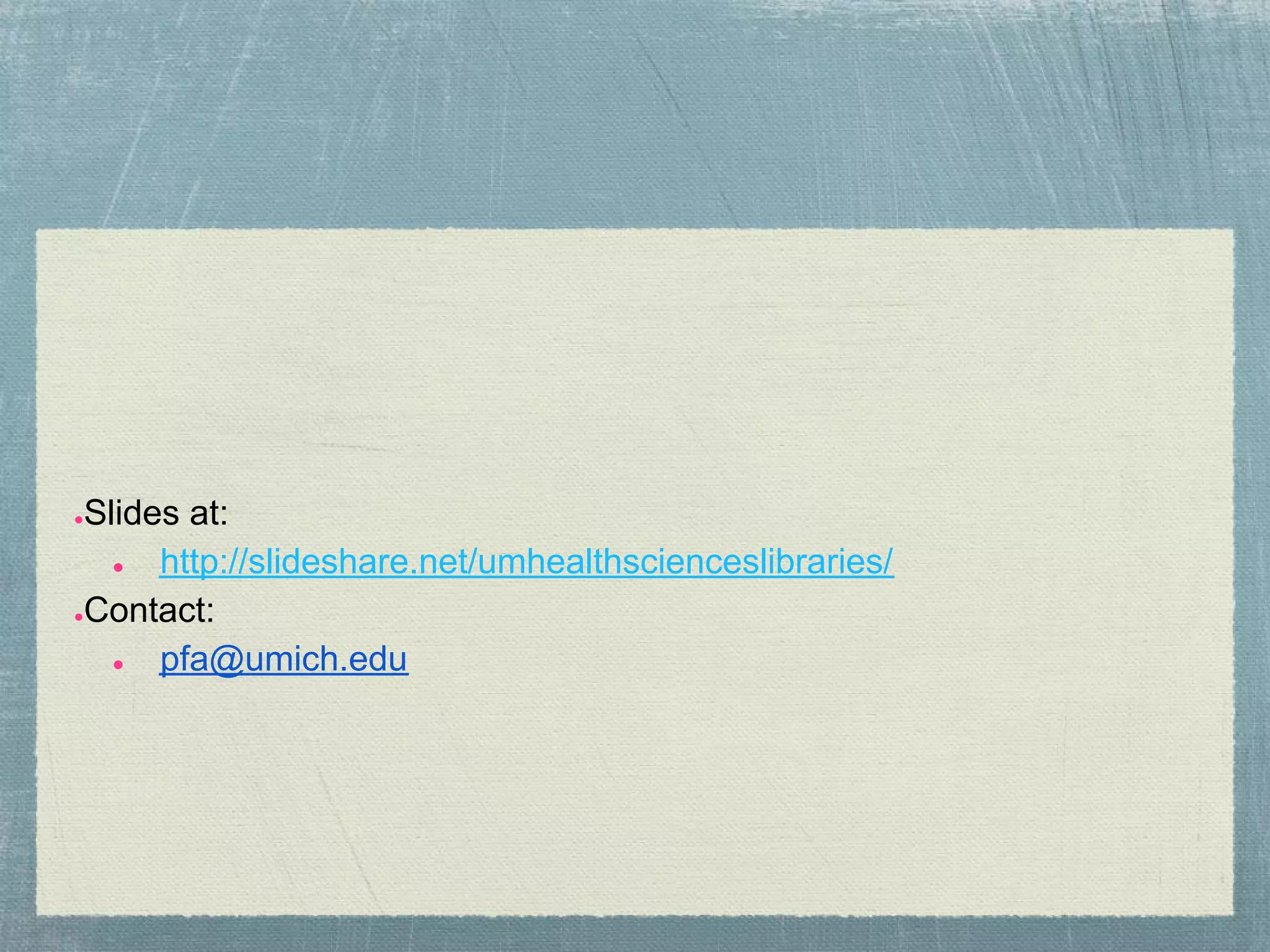

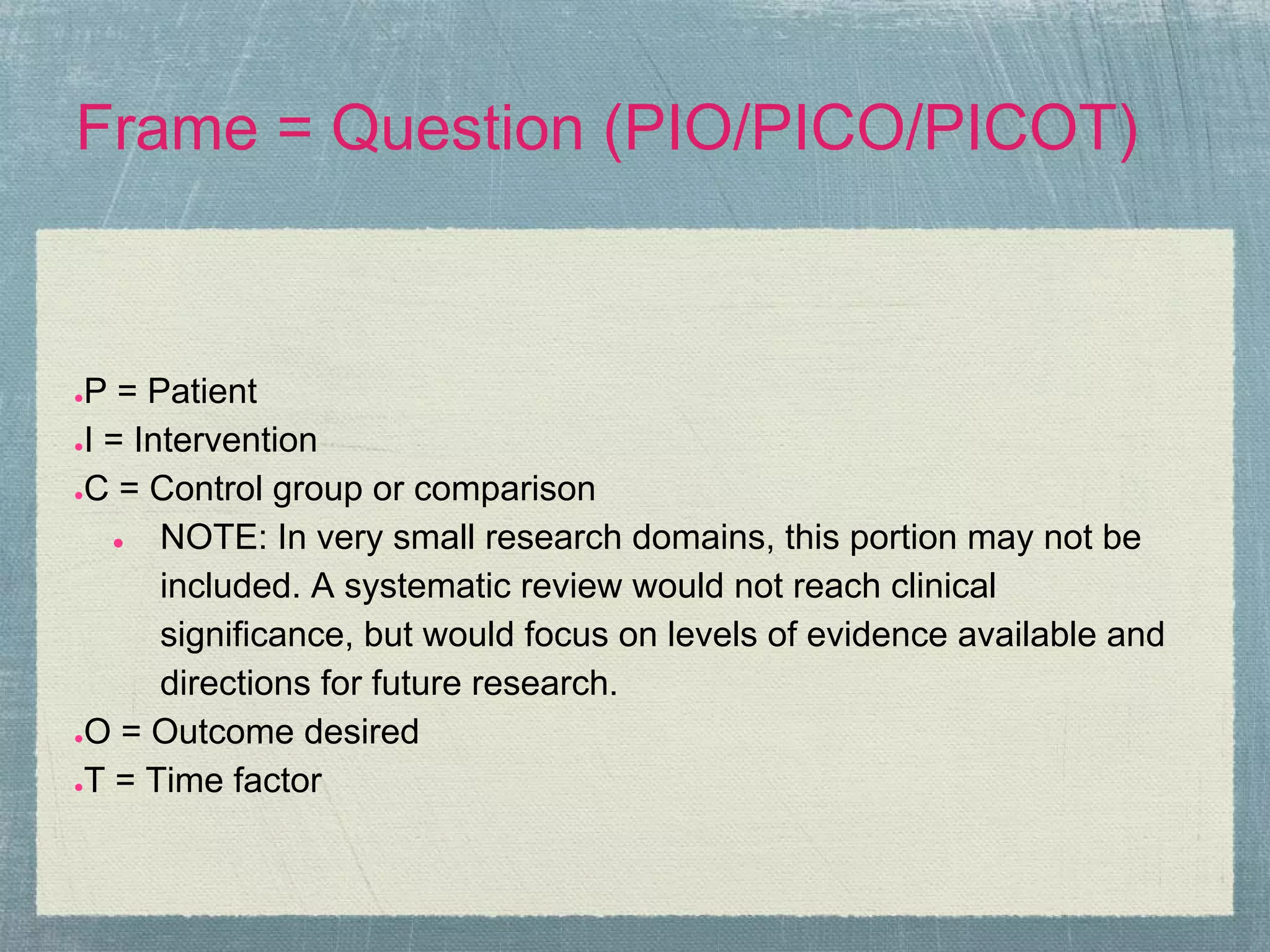

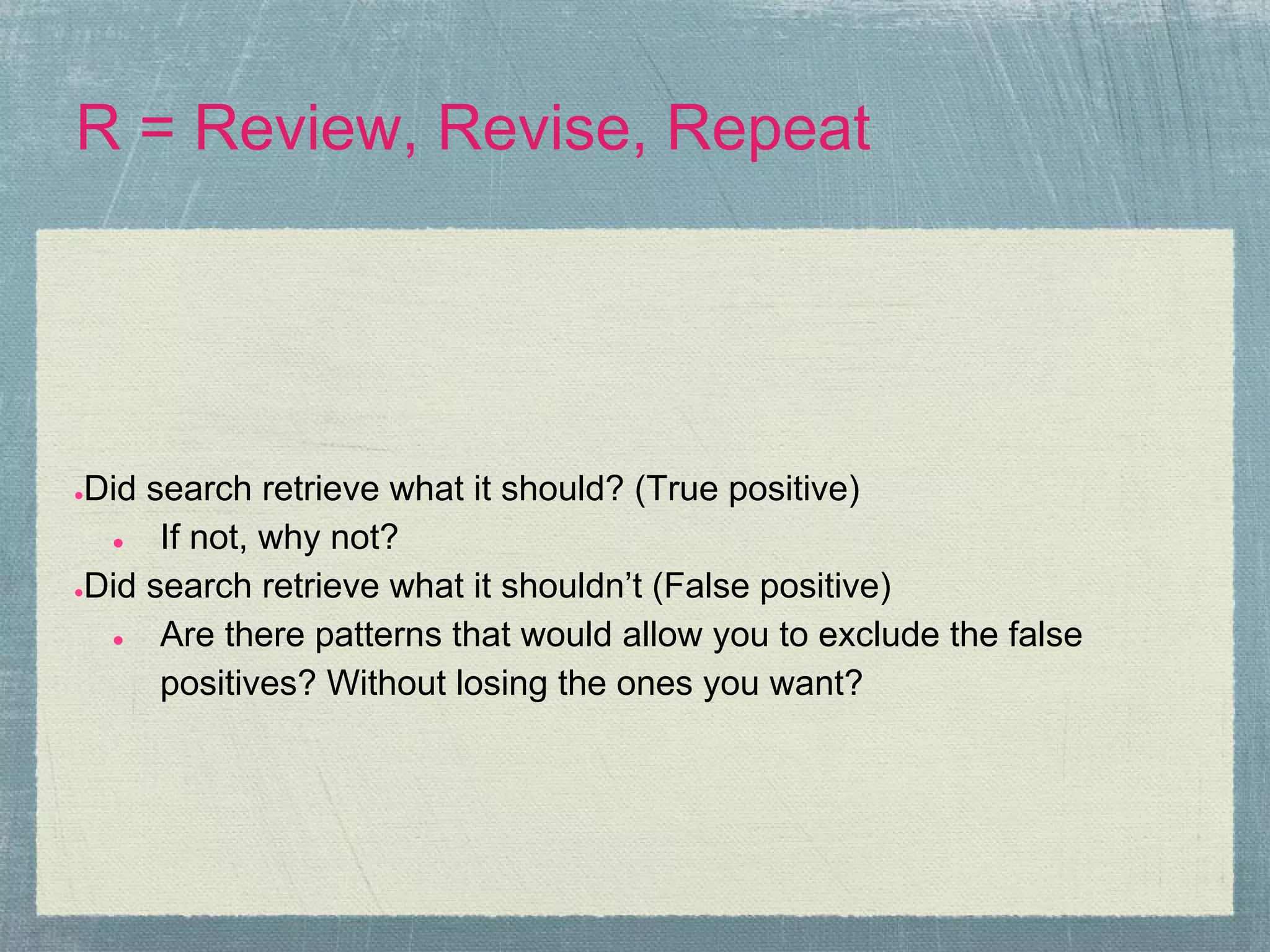

![Process & Methodology, Overview

Team meets

● Define topic, overview literature base, suggest inclusion/exclusion criteria, discuss methodology & timeline.

Librarian

● Generates data for the team

● FRIAR/MEMORABLE/SECT

● Topic experts collaborate

Topic experts

● Review data at 3-4 levels (title, abstract, article, [request additional information]), achieve consensus

● Handsearching (librarian generates list, experts implement)

● Determine level of evidence for remaining research

● Generate review tables

Share findings (Publication)

● Strength of evidence available (strong, weak, inadequate); suggest directions for future research to fill gaps in research base](https://image.slidesharecdn.com/systematicreviewteamsprocessesexperiences-120420142505-phpapp02/75/Systematic-review-teams-processes-experiences-13-2048.jpg)
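The staged review described on the slide above (title, then abstract, then full article, with reviewers reaching consensus) can be sketched roughly as follows. This is a minimal illustration, not the team's actual tooling: the `Record` structure, the `screen` function, and the status labels are all hypothetical names introduced here, and the optional fourth level (requesting additional information) is left out.

```python
from dataclasses import dataclass, field

# Screening levels named on the slide; the optional fourth level
# ("request additional information") is omitted from this sketch.
LEVELS = ("title", "abstract", "article")


@dataclass
class Record:
    record_id: str
    # Hypothetical structure: votes[level] is a list of include (True) /
    # exclude (False) decisions, one per topic expert.
    votes: dict = field(default_factory=dict)


def screen(record):
    """Walk a record through the levels and report where it stands.

    Returns a (status, level) pair. The status labels are assumptions
    made for this sketch: 'included', 'excluded', 'needs_consensus',
    or 'pending' when a level has not been reviewed yet.
    """
    for level in LEVELS:
        decisions = record.votes.get(level)
        if not decisions:
            return ("pending", level)
        if all(decisions):
            continue  # every reviewer says include: move to the next level
        if not any(decisions):
            return ("excluded", level)  # every reviewer says exclude
        return ("needs_consensus", level)  # reviewers disagree
    return ("included", None)


example = Record("rec-1", {"title": [True, True], "abstract": [True, False]})
print(screen(example))
```

A record only advances when every reviewer agrees to include it at the current level; any disagreement is flagged for discussion rather than resolved automatically, which mirrors the slide's emphasis on consensus.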

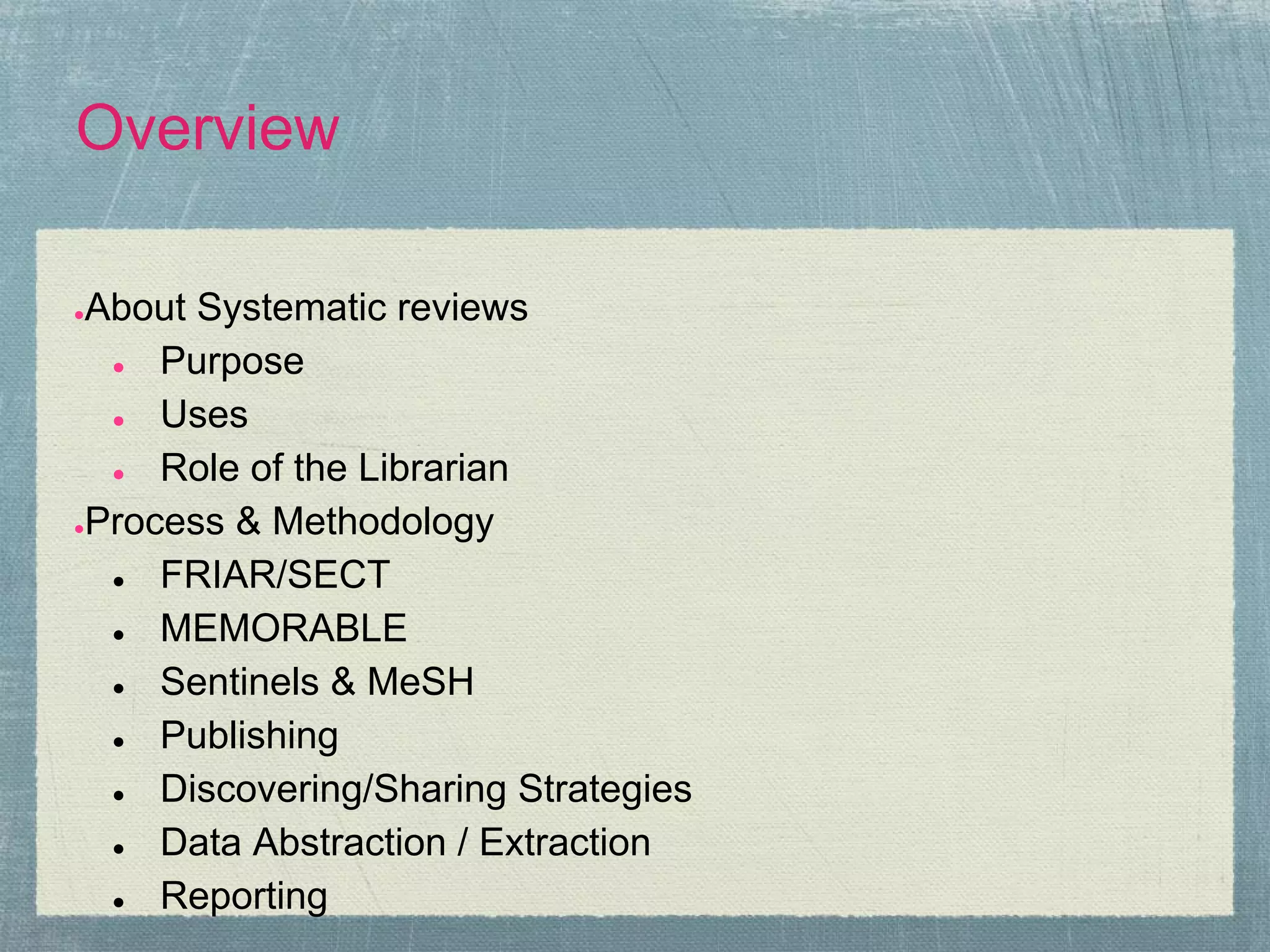

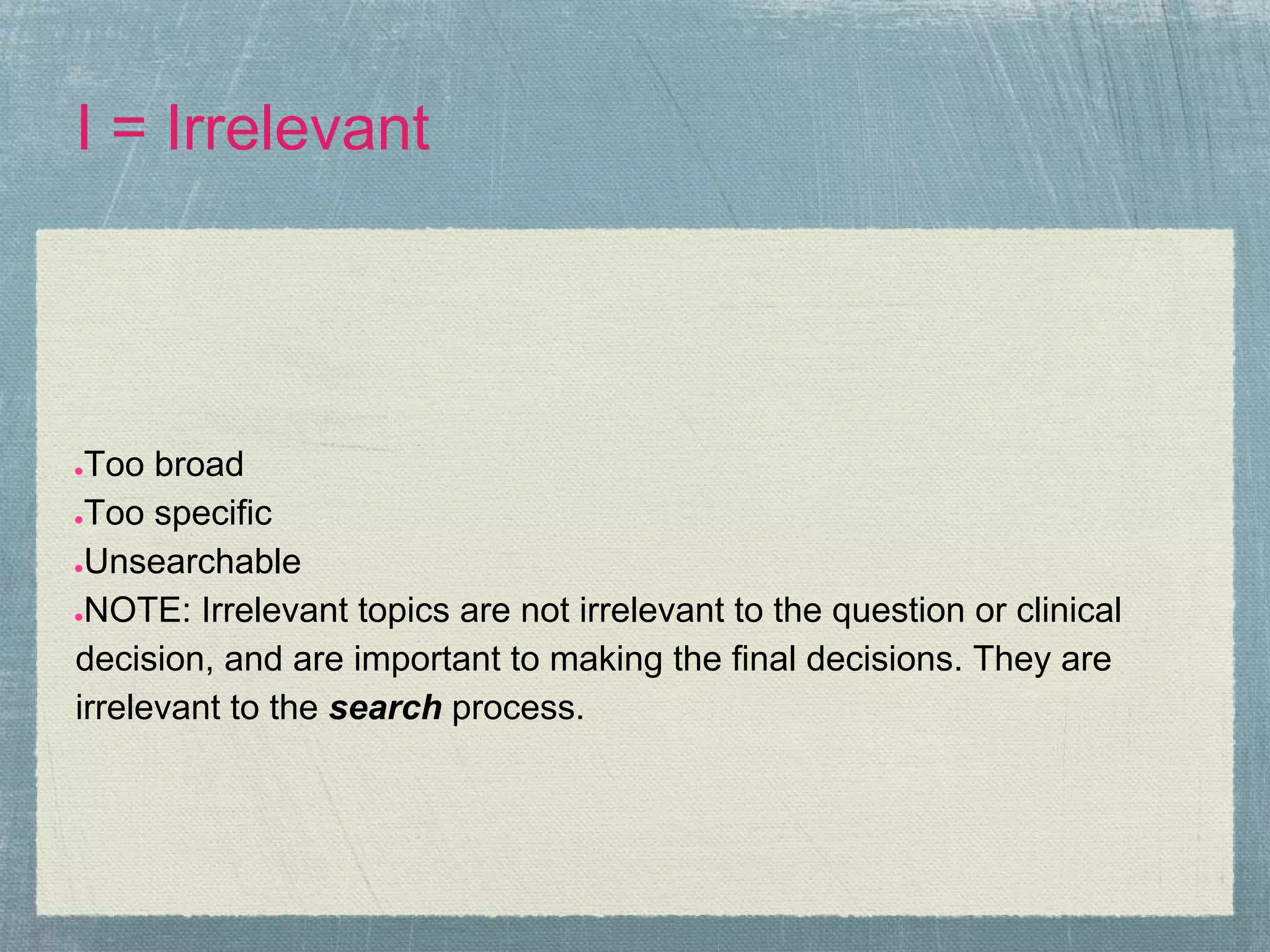

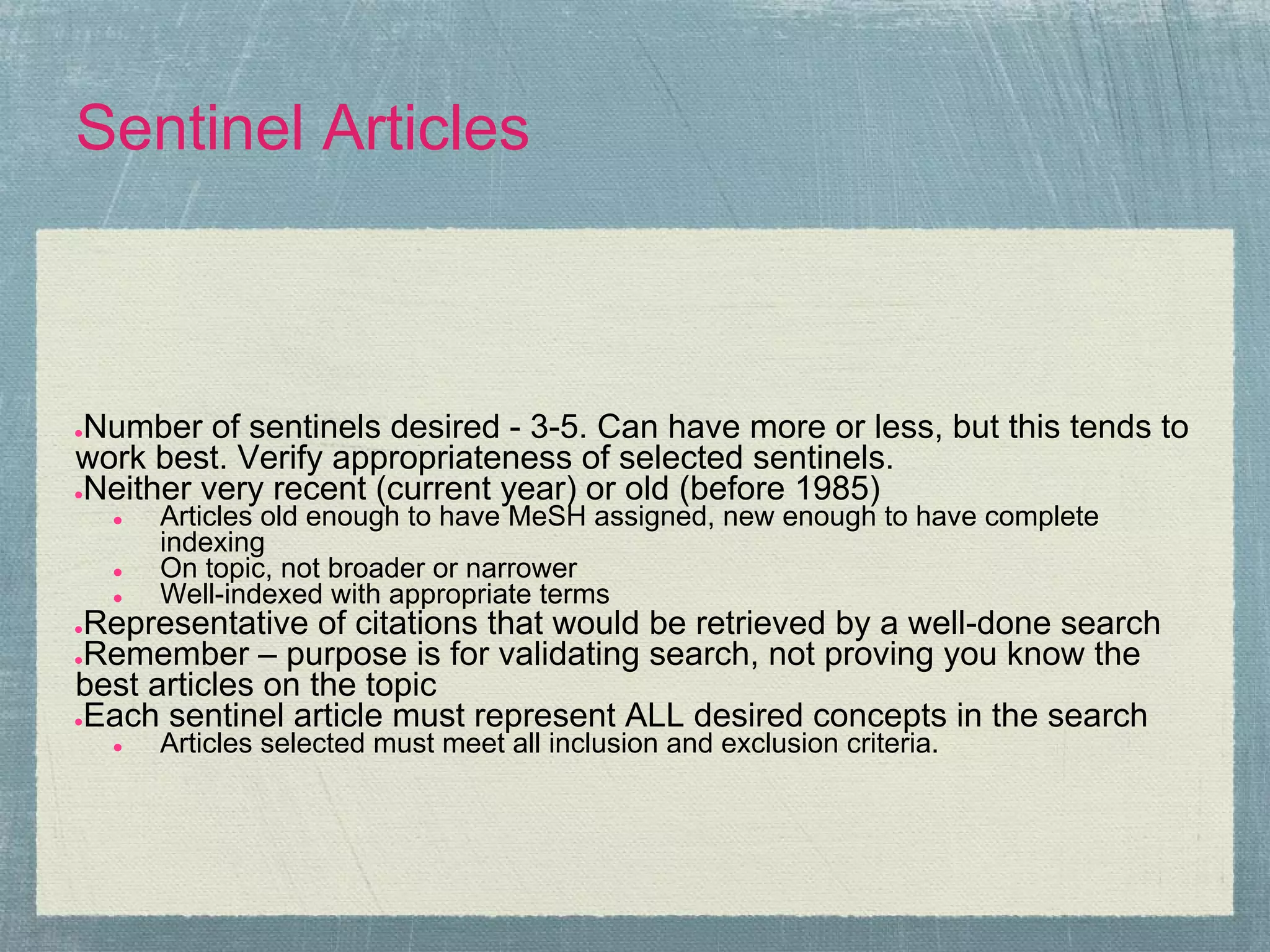

![MeSH Tip

● Before a MeSH term was assigned, earlier literature was often indexed under:

● presenting symptom or diagnosis

● anatomical area

● TMJD Example:

● TMJD = temporomandibular joint disorder

● = (Temporomandibular joint) [anatomical area] + ("myofascial pain" OR "Bone Diseases") [presenting symptom OR diagnosis]

Image: Frank Gaillard. Normal anatomy of the Temporomandibular joint. 14 Jan 2009. http://commons.wikimedia.org/wiki/File:Temporomandibular_joint.png](https://image.slidesharecdn.com/systematicreviewteamsprocessesexperiences-120420142505-phpapp02/75/Systematic-review-teams-processes-experiences-25-2048.jpg)
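The TMJD example above combines an anatomical-area term with a group of symptom or diagnosis terms. A minimal sketch of assembling such a search string is shown below. It assumes the slide's "+" means Boolean AND, and the helper names `or_group` and `pre_mesh_query` are hypothetical. The output is a generic Boolean string with no field tags, so it would need adapting to a specific database's syntax.

```python
def or_group(terms):
    """Quote each term and join the terms with OR.

    A single term is returned without surrounding parentheses.
    """
    quoted = [f'"{term}"' for term in terms]
    if len(quoted) == 1:
        return quoted[0]
    return "(" + " OR ".join(quoted) + ")"


def pre_mesh_query(anatomical_areas, symptoms_or_diagnoses):
    """Combine anatomical-area terms AND symptom/diagnosis terms.

    Assumption: the '+' on the slide is read as Boolean AND.
    """
    return or_group(anatomical_areas) + " AND " + or_group(symptoms_or_diagnoses)


query = pre_mesh_query(
    ["Temporomandibular joint"],
    ["myofascial pain", "Bone Diseases"],
)
print(query)
# prints: "Temporomandibular joint" AND ("myofascial pain" OR "Bone Diseases")
```

Keeping the two concept groups separate makes it easy to add synonyms to either side without disturbing the overall AND/OR structure.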