The document discusses the Wishart and inverse-Wishart distributions which are used to model covariance matrices. It provides mathematical background on how the Wishart distribution arises from sampling covariance matrices from multivariate normal distributions. It also describes key properties of the Wishart distribution including its probability density function and how it relates to the chi-squared distribution when the dimensionality is one. Estimation of covariance matrices plays an important role in multivariate statistics.

![ Applying the vector(vec) operator to S, which forms a long vector

by stacking the columns of S, so that Cov[vec(s)] is a matrix rather

than an array, so we have

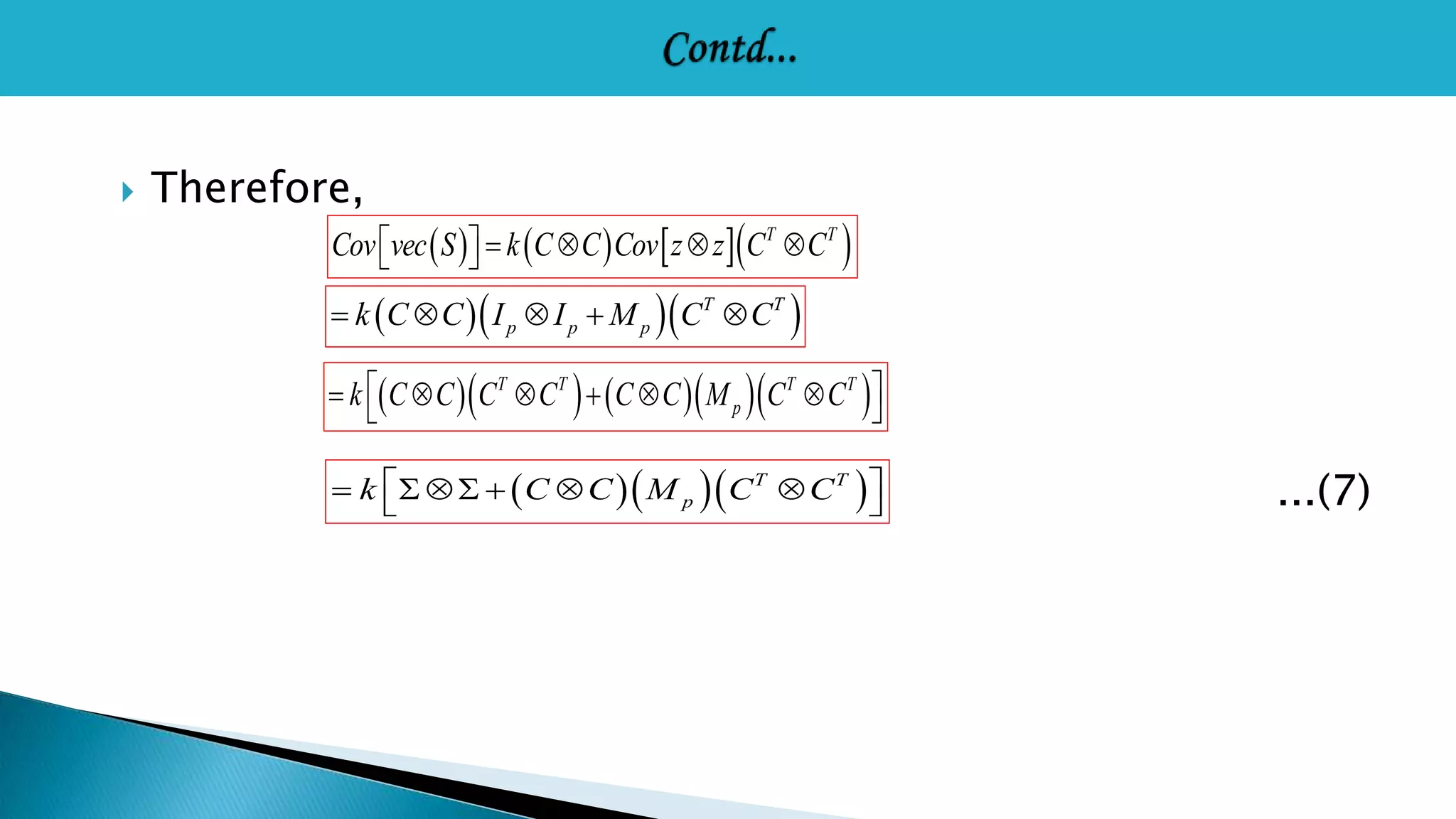

k T T Cov vec S Cov vec Czz C

T kCov CC vec zz

T T k C C Cov vec zz C C

T T k CC Cov zz C C

(by the vec to Kronecker property)

(by vec and Kronecker properties)

z](https://image.slidesharecdn.com/seminarpankaj-140908134953-phpapp02/75/The-Wishart-and-inverse-wishart-distribution-21-2048.jpg)

![ To determine cov [vec(s)] (as a proxy for cov(s)), one would only

need to know

Covzz

(1) The variance of (where n is any element of z);

(2) The variance of Z Z (where no

are any two elements of z);

n o (3) The covariance between & ;

2

n Z 2

(4) The covariance between & and

o Z

(5) The covariance between and (where at most two of i, j, n, or

o are the same).

n o ZZ o n ZZ

n o Z Z

2

n Z](https://image.slidesharecdn.com/seminarpankaj-140908134953-phpapp02/75/The-Wishart-and-inverse-wishart-distribution-22-2048.jpg)

![Therefore, the [p(n-1)+ n,p(n -1)+n] elements of will all be 2

because Var Z 2

2 and the remaining diagonal elements of

k will all be 1 because Var Z Z

1 n o for all k = l. The off-diagonal elements

must be 0 except for those elements symbolizing the covariance

between ZZ and ZZ

j i , which will be 1.

i j Ultimately can be written as

i j Z Z j i Z Z

is a matrix of 1s and 0s.

I p I p Mp

p M 2 2 p p

2 2 p p p M](https://image.slidesharecdn.com/seminarpankaj-140908134953-phpapp02/75/The-Wishart-and-inverse-wishart-distribution-25-2048.jpg)

![ Let T ~ InvWishp

Where denotes a positive definite scale matrix, m denotes the degrees of

freedom, and p indicates the dimensions of T (i.e. ).

Then T is positive definite with probability density function is given as

m

|Ψ| 2 1 = exp[- tr(ΨT-1)]

...(8)

m+p+1 mp 2

2 2 m | T | 2 Γp( )

f

2

T =

( , ) m

Ψ

p×p TR](https://image.slidesharecdn.com/seminarpankaj-140908134953-phpapp02/75/The-Wishart-and-inverse-wishart-distribution-33-2048.jpg)