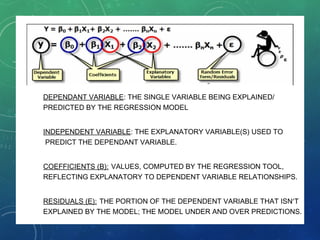

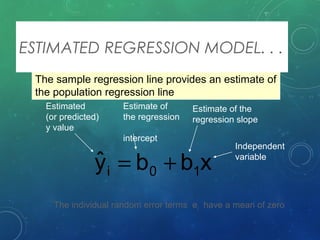

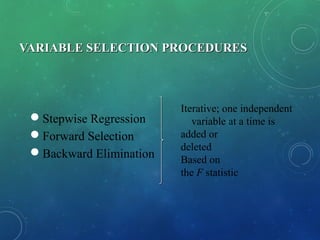

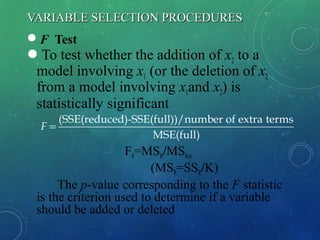

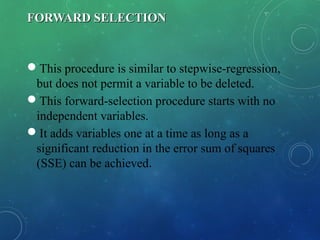

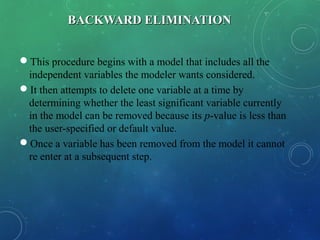

This presentation introduces regression analysis. It covers key concepts such as dependent and independent variables, simple versus multiple regression, and linear versus nonlinear regression models. It also distinguishes cross-sectional from time series data and describes methods for building regression models, such as stepwise regression and forward/backward selection. Worked examples show how to calculate a regression equation with the least squares method by computing deviations from the mean values.
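
As a rough illustration of the least squares calculation mentioned above, the following is a minimal sketch of simple linear regression computed from deviations about the means. The function name `fit_simple_linear` and the sample data are assumptions made up for this example and are not taken from the slides.

```python
def fit_simple_linear(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares.

    Uses the deviation form:
        slope     = sum((x - x_mean) * (y - y_mean)) / sum((x - x_mean) ** 2)
        intercept = y_mean - slope * x_mean
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")

    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n

    # Deviations of each observation from its mean value.
    dx = [x - x_mean for x in xs]
    dy = [y - y_mean for y in ys]

    sum_dx_dy = sum(a * b for a, b in zip(dx, dy))  # sum of cross-products
    sum_dx_sq = sum(a * a for a in dx)              # sum of squared x deviations

    if sum_dx_sq == 0:
        raise ValueError("all x values are identical; slope is undefined")

    slope = sum_dx_dy / sum_dx_sq
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Hypothetical data, chosen only to keep the arithmetic easy to follow.
x_values = [1, 2, 3, 4, 5]
y_values = [2, 4, 5, 4, 5]
intercept, slope = fit_simple_linear(x_values, y_values)
print(f"y = {intercept:.2f} + {slope:.2f} x")
```

For this made-up data the means are 3 and 4, the sum of cross-products is 6, and the sum of squared x deviations is 10, so the slope is about 0.6 and the intercept about 2.2.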