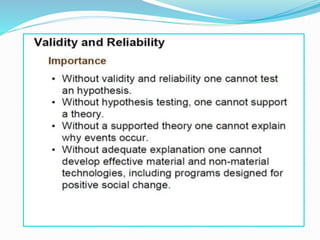

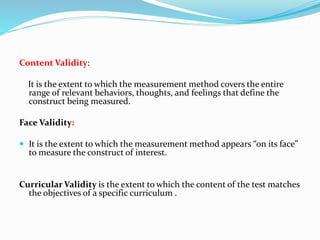

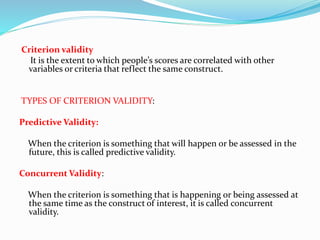

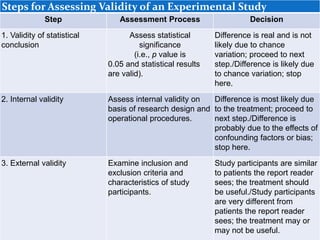

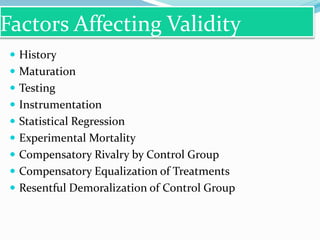

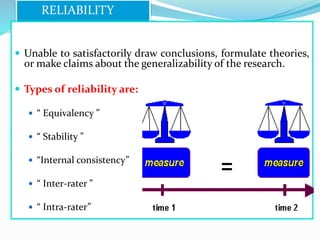

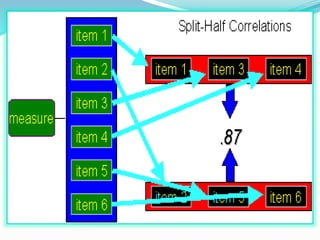

This document discusses validity and reliability in research. Validity is the extent to which a test measures what it claims to measure; reliability is the extent to which a test gives consistent results on repeated trials. The document covers five types of validity (content, face, criterion-related, construct, and ecological) and five types of reliability (equivalency, stability, internal consistency, inter-rater, and intra-rater). It also presents the factors that affect validity and reliability and explains how the two concepts relate to each other in research.
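As a rough illustration of the "consistent results on repeated trials" definition, stability (test-retest) reliability is commonly estimated by correlating scores from two administrations of the same test. The sketch below assumes Pearson correlation as the estimator, which the source does not specify; the function name `pearson_r` and the score lists are hypothetical and chosen only for illustration.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x, mean_y = mean(x), mean(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical scores for the same five participants on two occasions.
first_trial = [12, 15, 9, 20, 17]
second_trial = [13, 14, 10, 19, 18]

# A value close to 1 suggests the test ranks participants consistently.
stability = pearson_r(first_trial, second_trial)
print(f"test-retest correlation: {stability:.2f}")
```

A high correlation here speaks only to consistency; it says nothing about whether the test measures what it claims to measure, which is the separate question of validity.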