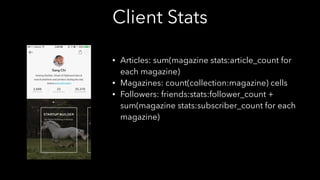

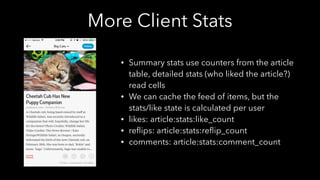

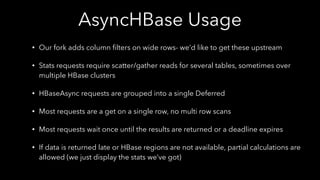

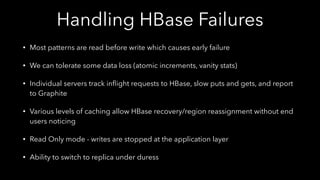

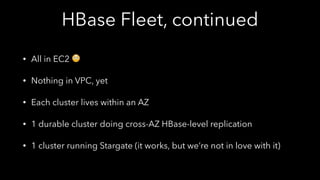

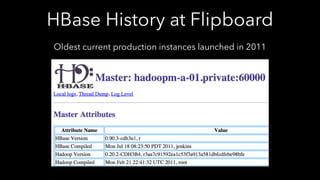

HBase is used at Flipboard for storing user and magazine data at scale. Some key uses of HBase include storing magazines, articles, user profiles, social graphs and metrics. HBase provides high write throughput, elasticity and strong consistency needed to support Flipboard's 100+ million users. Data is accessed through patterns optimized for common queries like fetching individual magazines or articles. HBase failures are handled through caching, replication and ability to switch to redundant clusters.

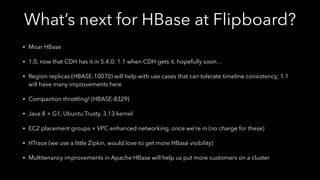

![User Magazines

[Reverse Unix TS]:

[magazineid]

[Reverse Unix TS]:

[magazineid]

sha1(userid)

Article Data(Serialized

JSON)

Article Data(Serialized

JSON)

Articles CF of Collection Table

Kept in temporal order so that most recently shared articles are first

Access patterns are usually newest first.

HBase Filters are used to slice wide rows.](https://image.slidesharecdn.com/usecases-session1-150605173432-lva1-app6892/85/HBaseCon-2015-HBase-Flipboard-12-320.jpg)

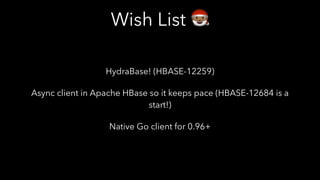

![User Magazines

like:[userid] reflip:[new article id]

comment:[timestamp]

[userid]

Article

ID

JSON (who/when)

JSON (where it was

reflipped)

JSON (comment/person)

Social Activity

One cell per like, since you can only do it once per user

Can be many comment and reflip cells by one user per article

Alternative orderings can be computed from Elasticsearch indexes](https://image.slidesharecdn.com/usecases-session1-150605173432-lva1-app6892/85/HBaseCon-2015-HBase-Flipboard-13-320.jpg)

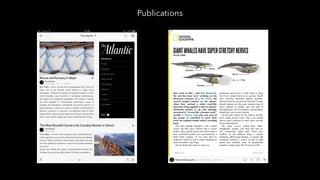

![HBase Table Access Patterns

• Tables optimized for application access patterns (“design for the questions, not the

answers”)

• Fetching an individual magazine- collection table, magazine CF, [magazine ID] -> cell

• Fetching an individual article - article table, article:[article ID] cell

• Fetch an article’s stats - article table, article:stats cells

• Fetching a magazine’s articles: collection table, article CF, with cell limit and column

qualifier starts with magazine id

• Fetching a user’s magazines- collection table, magazine CF, [magazine ID] in the CQ](https://image.slidesharecdn.com/usecases-session1-150605173432-lva1-app6892/85/HBaseCon-2015-HBase-Flipboard-18-320.jpg)