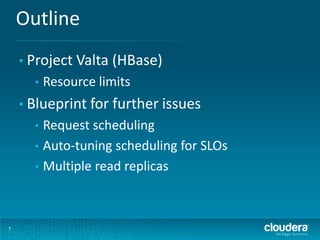

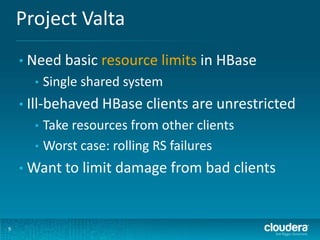

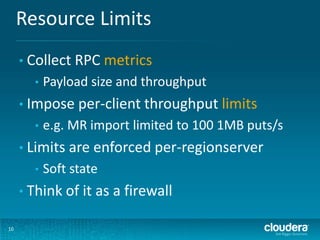

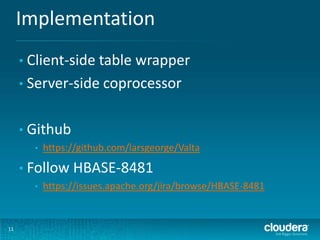

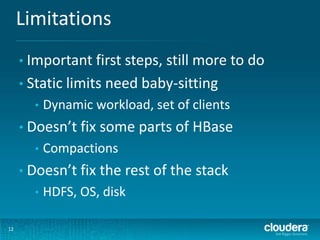

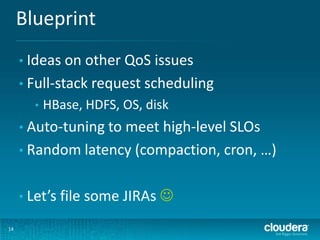

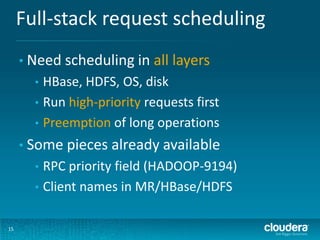

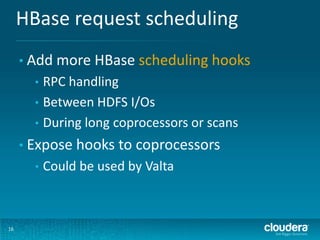

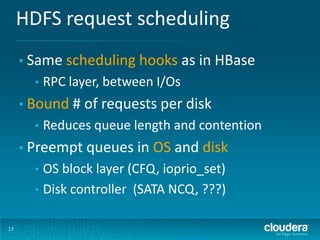

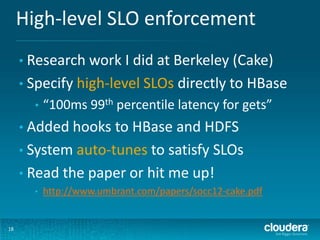

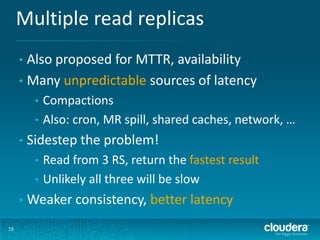

Valta is a resource management layer over Apache HBase that aims to address issues with shared workloads on a single HBase cluster. It introduces resource limits for HBase clients to prevent ill-behaved clients from monopolizing cluster resources. This is an initial step, and more work is needed to address request scheduling across HBase, HDFS, and lower layers to meet service level objectives. The document outlines ideas for full-stack request scheduling, auto-tuning systems based on high-level SLOs, and using multiple read replicas to improve latency.