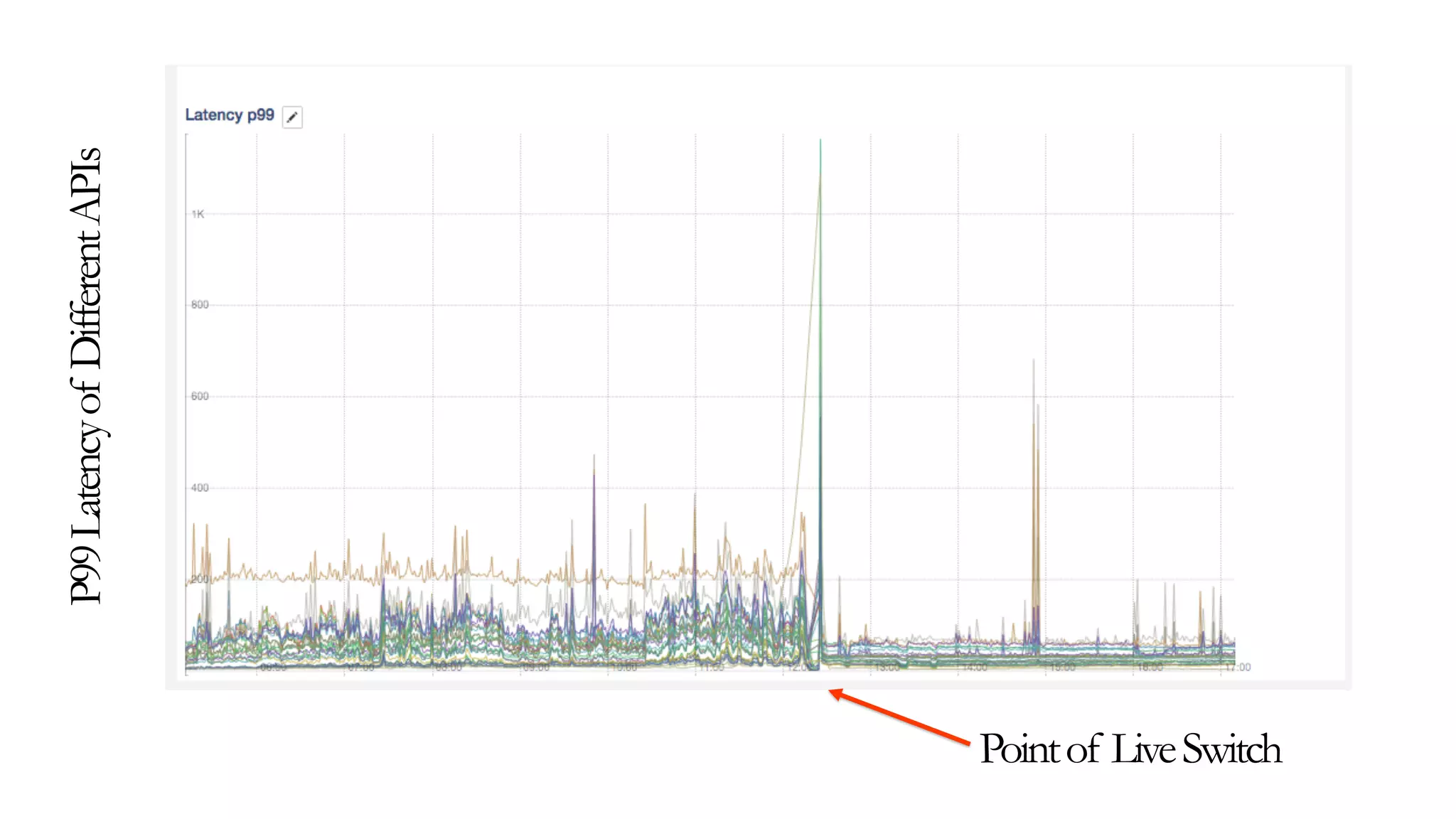

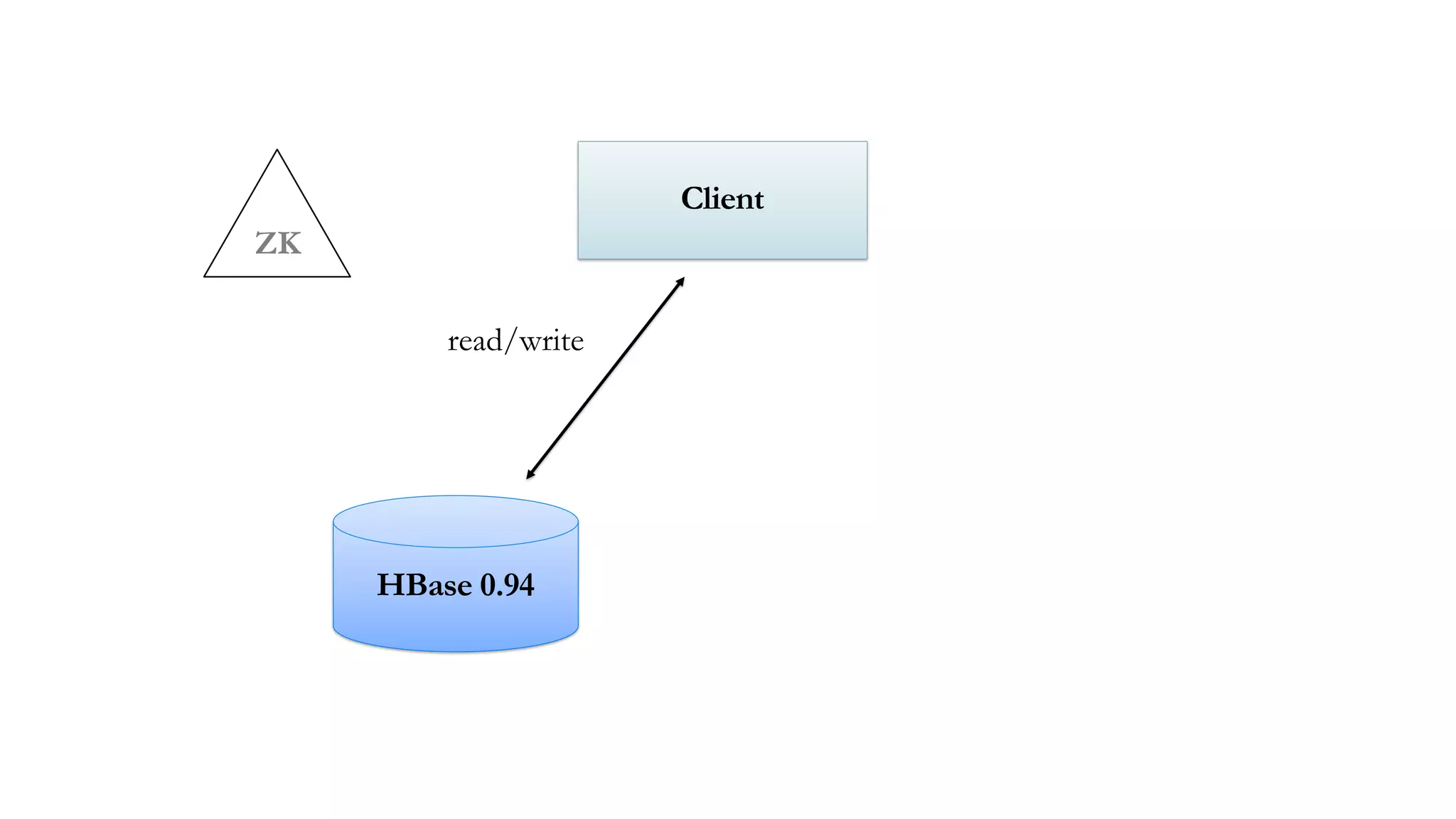

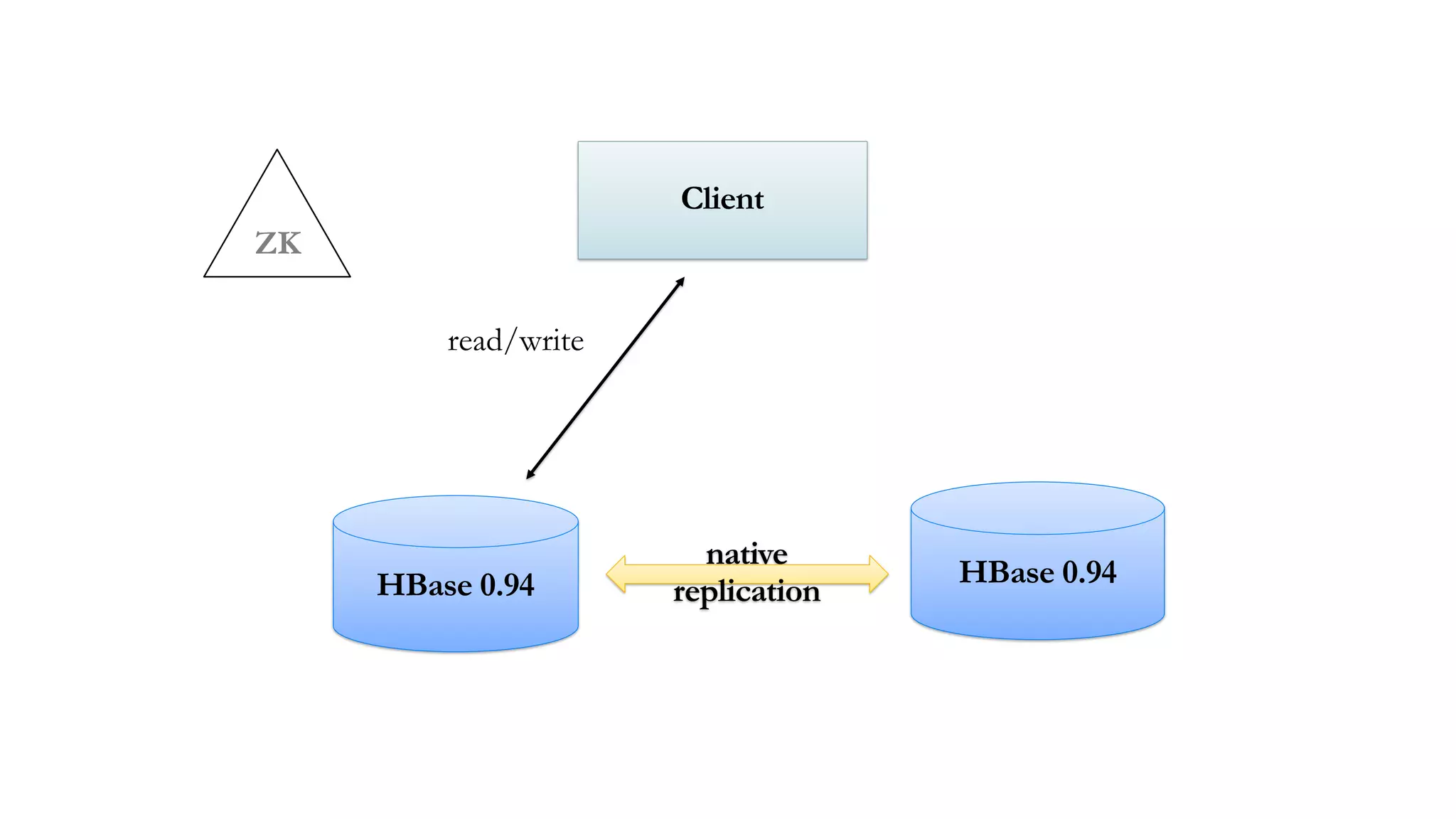

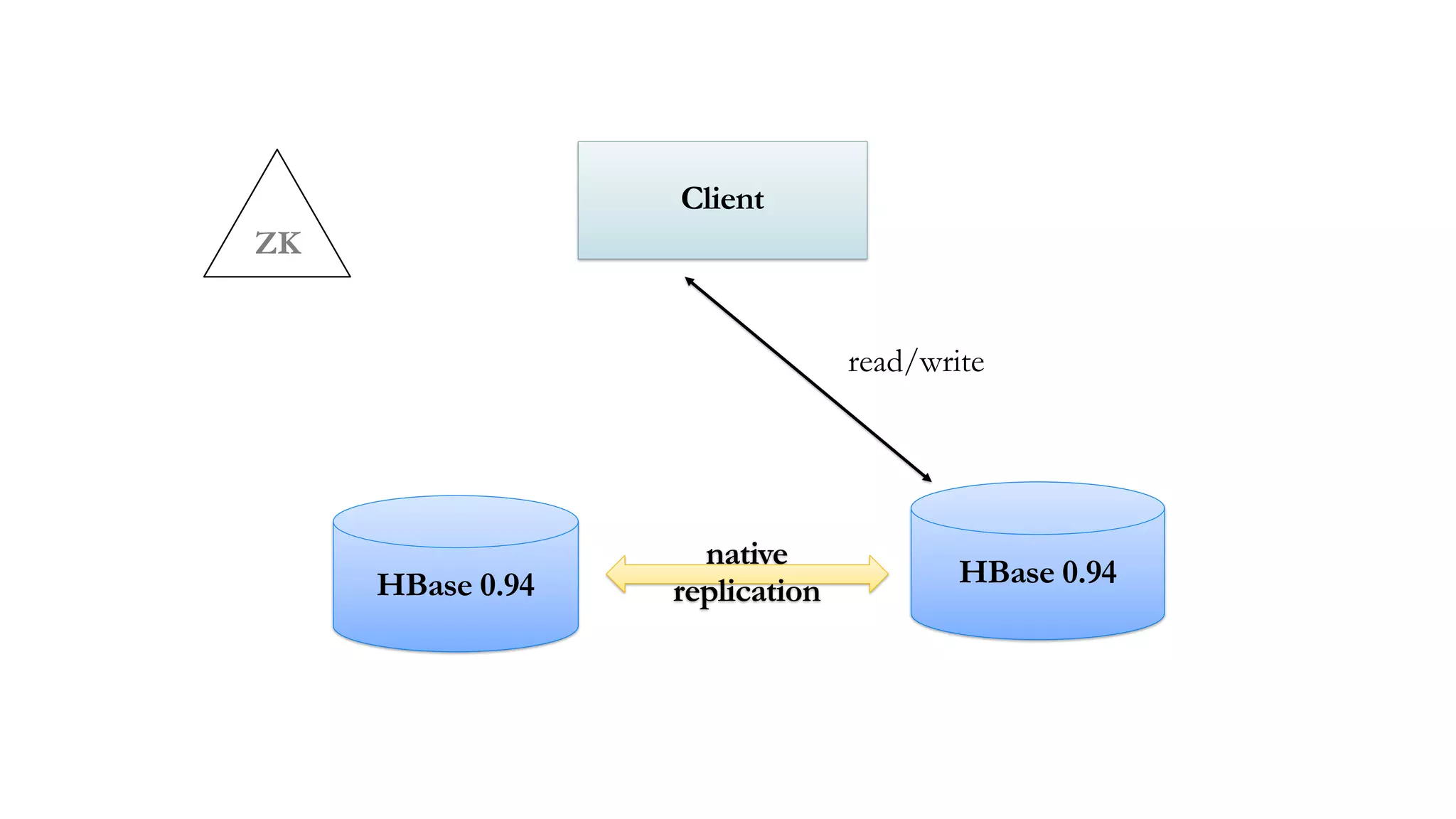

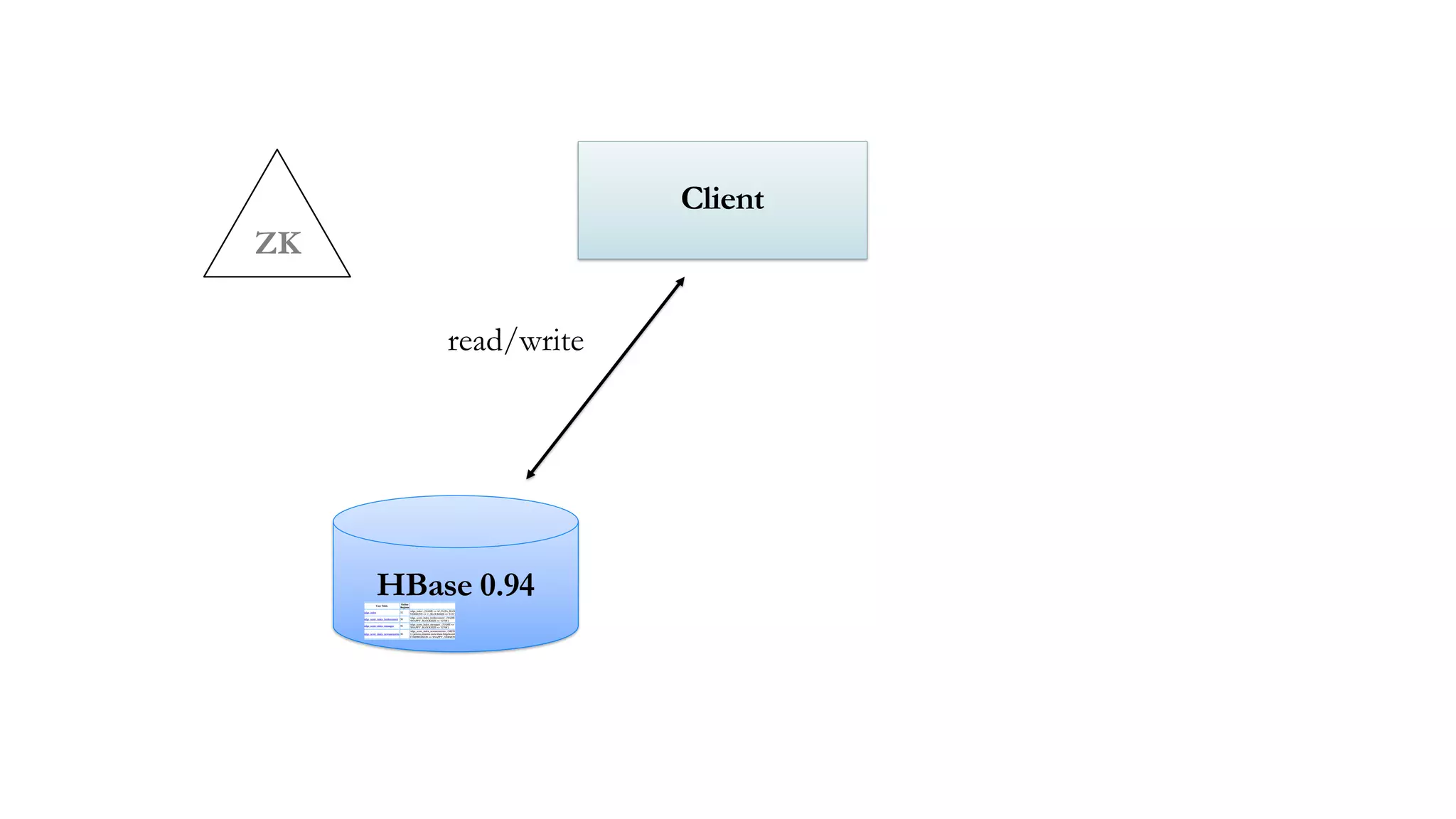

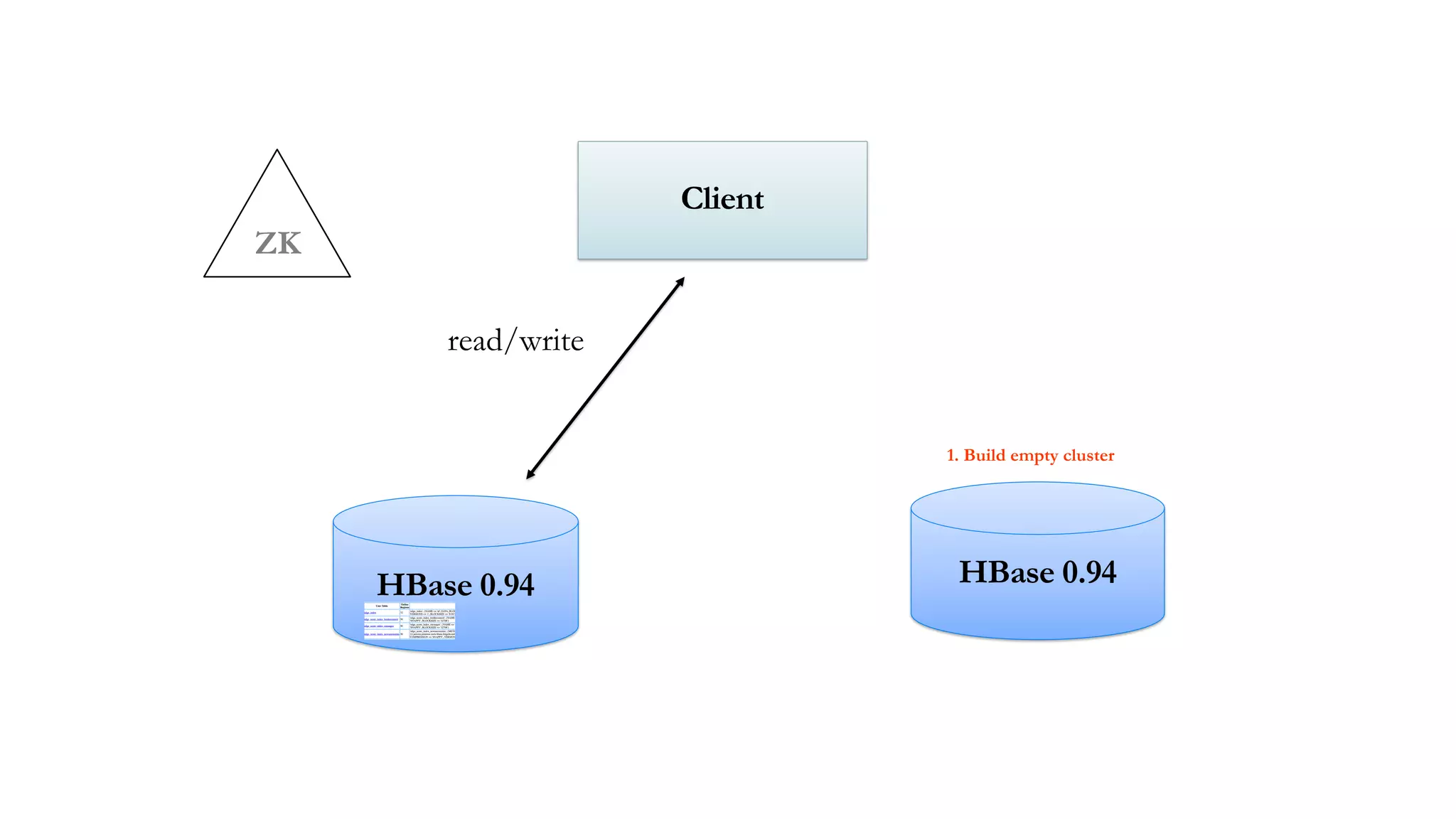

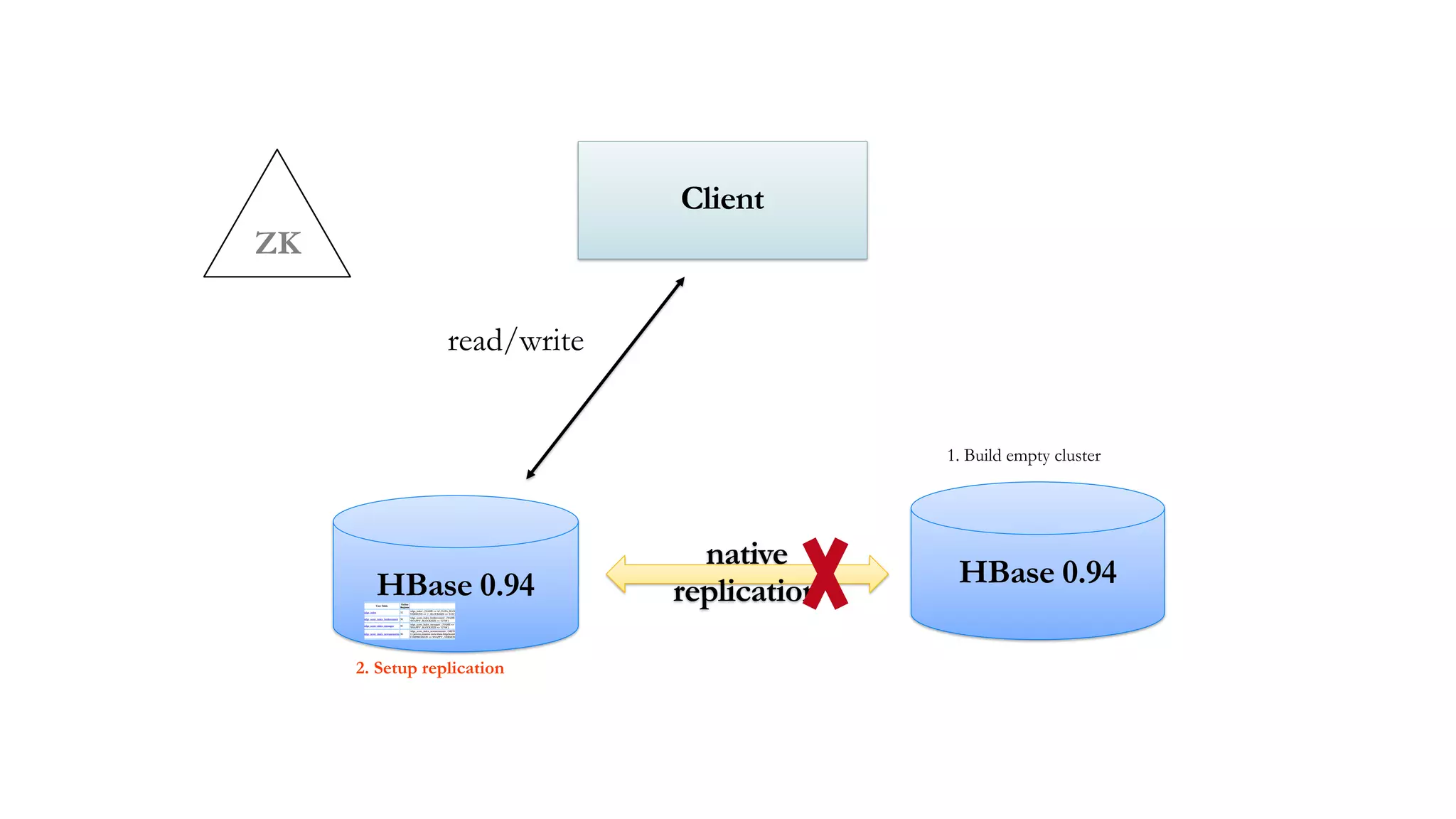

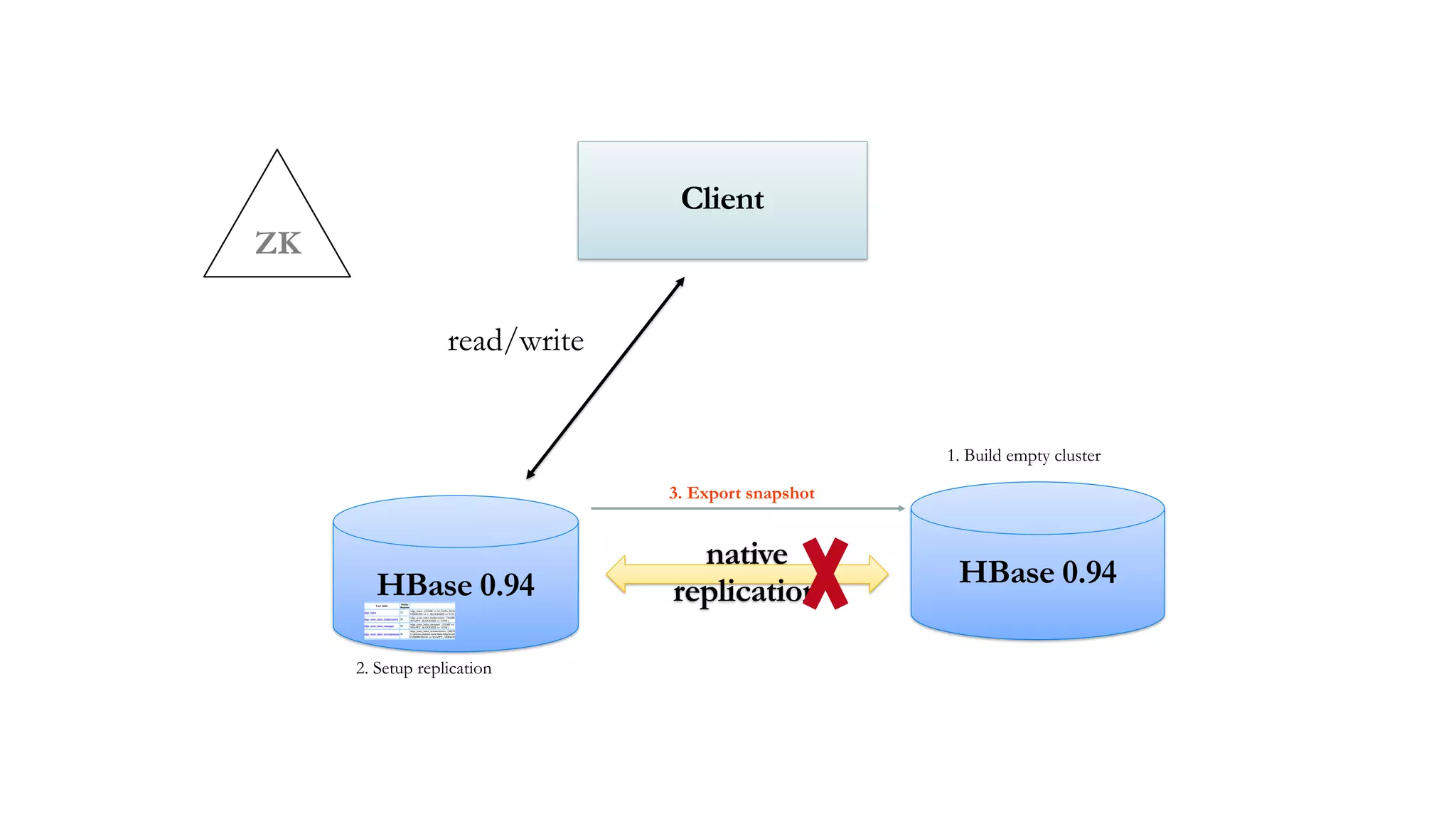

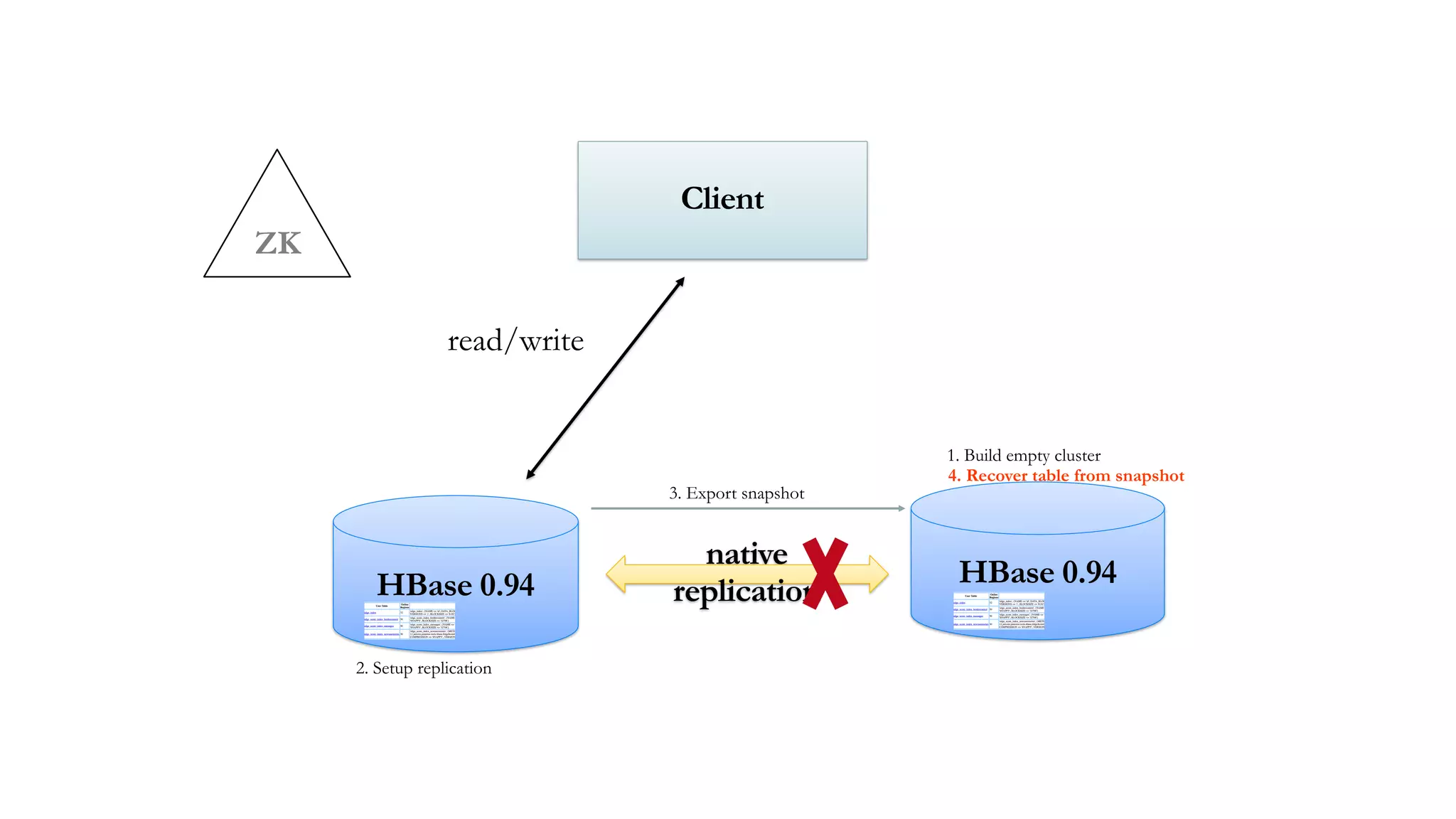

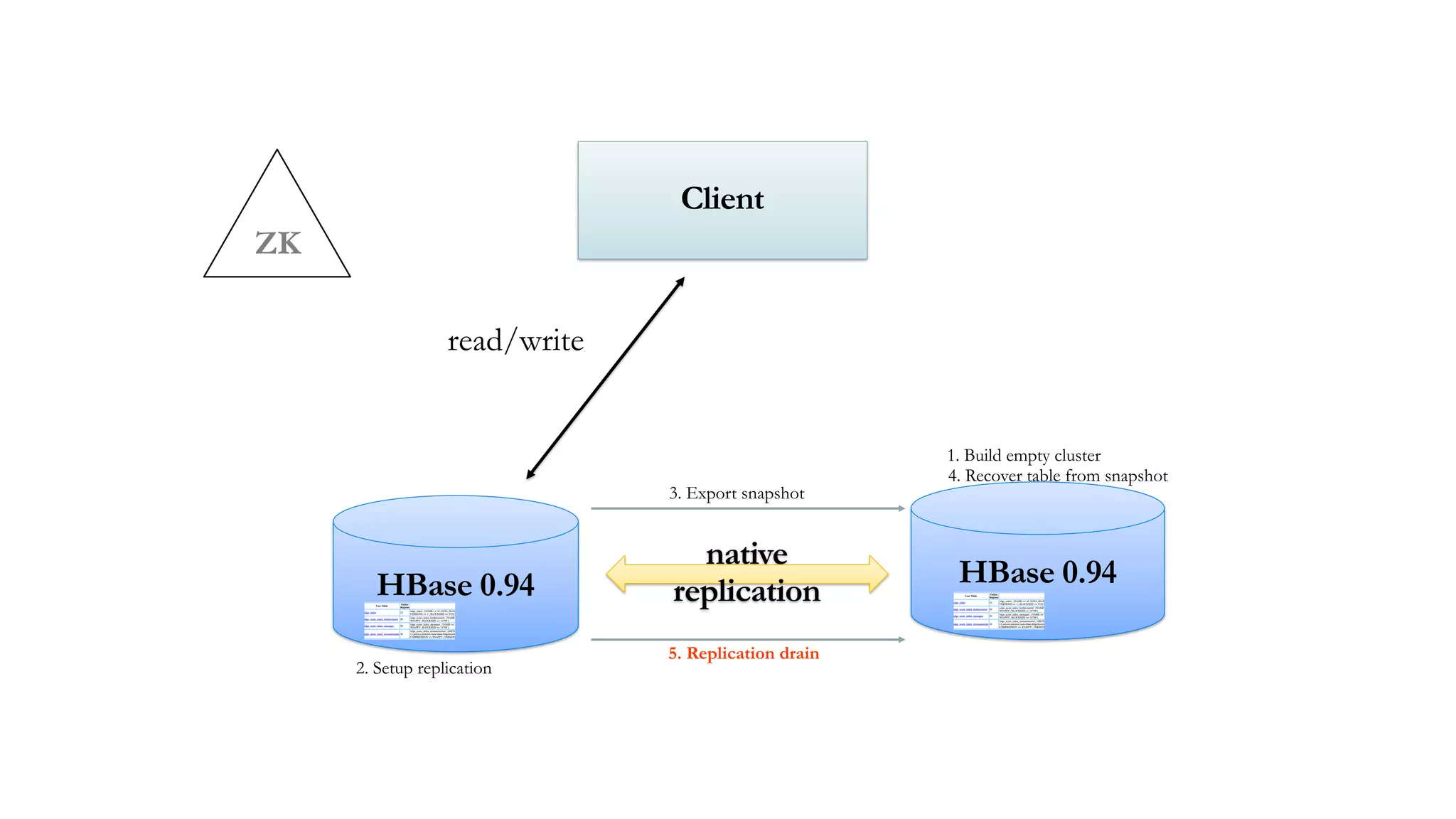

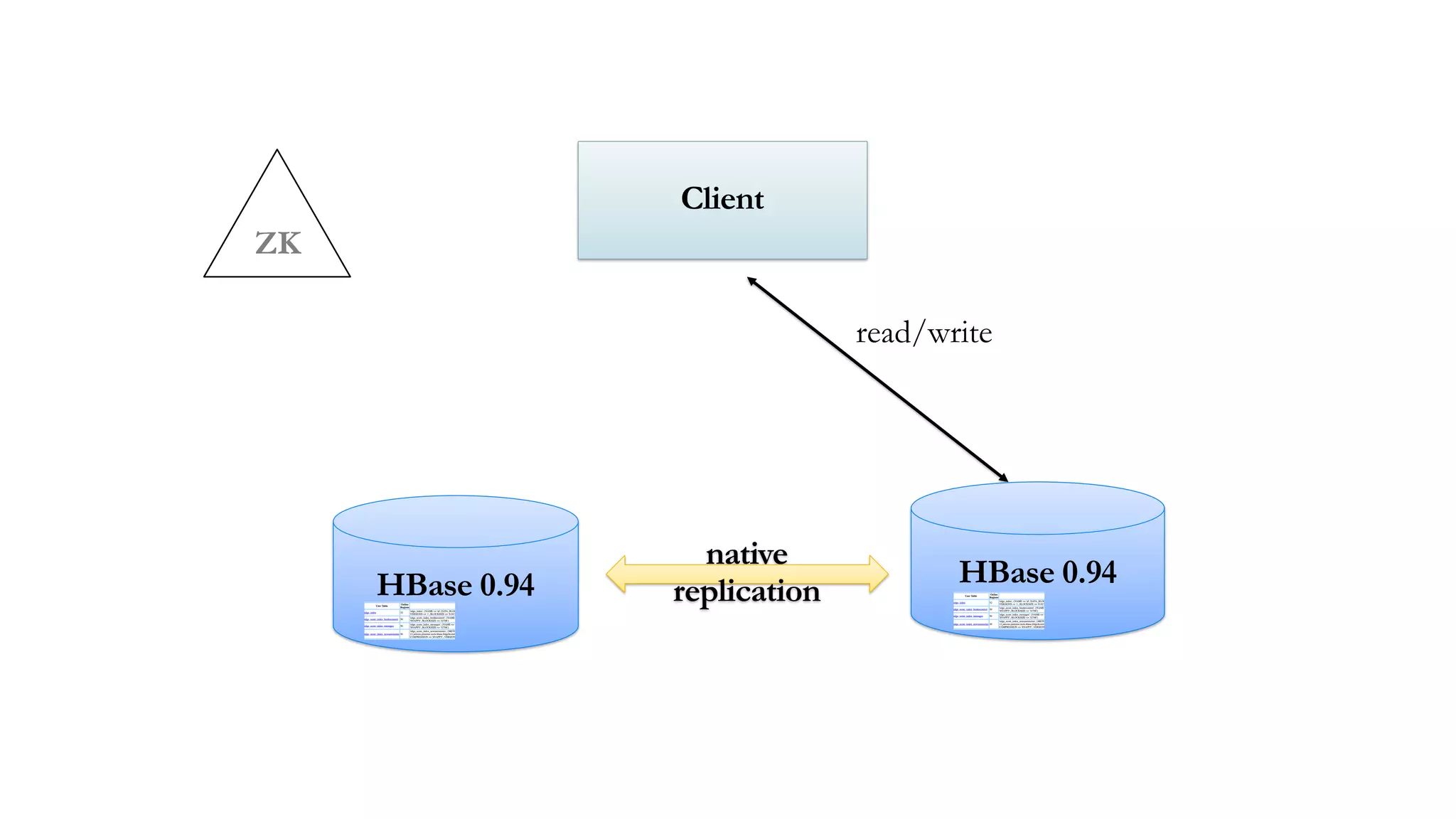

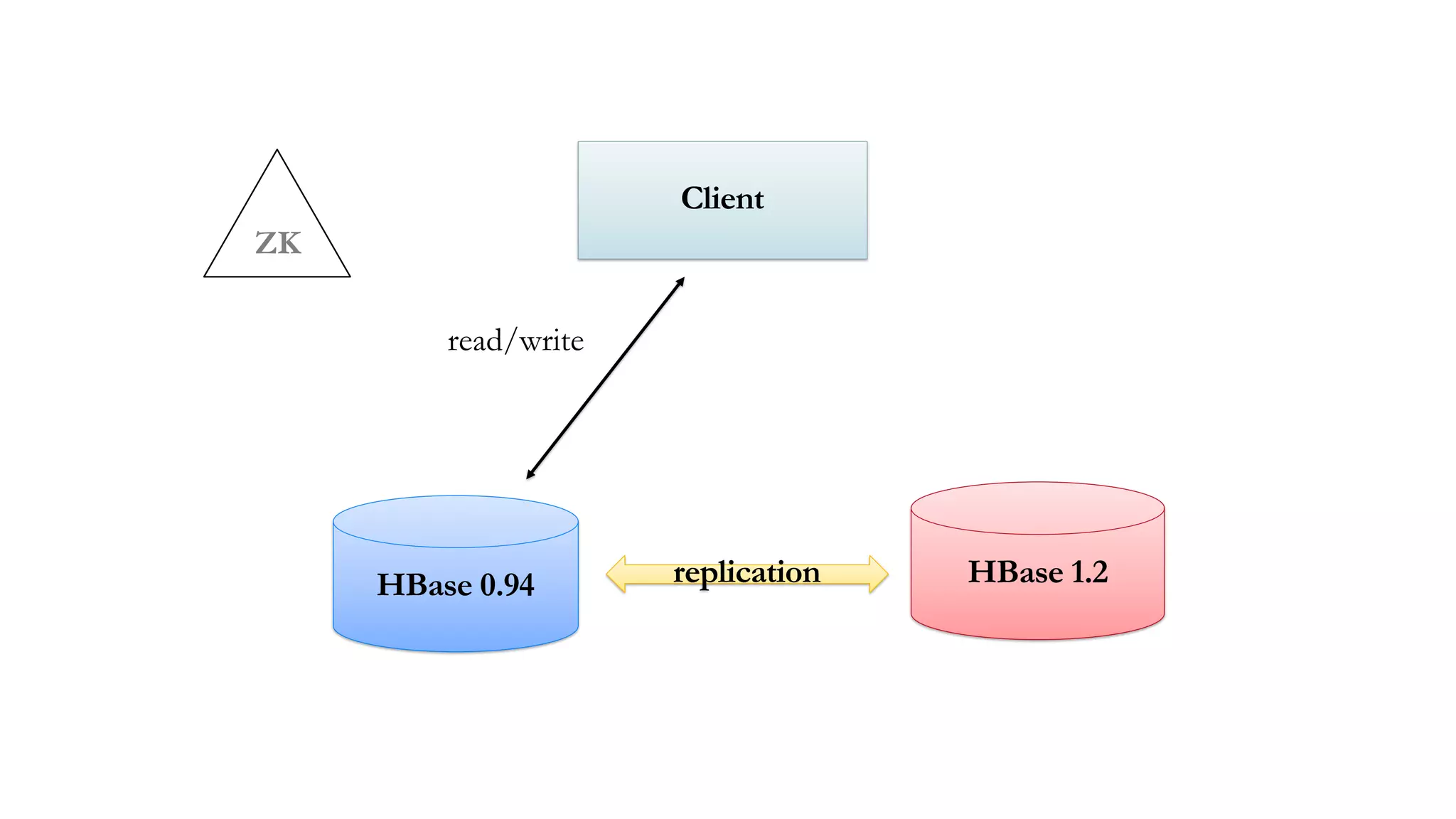

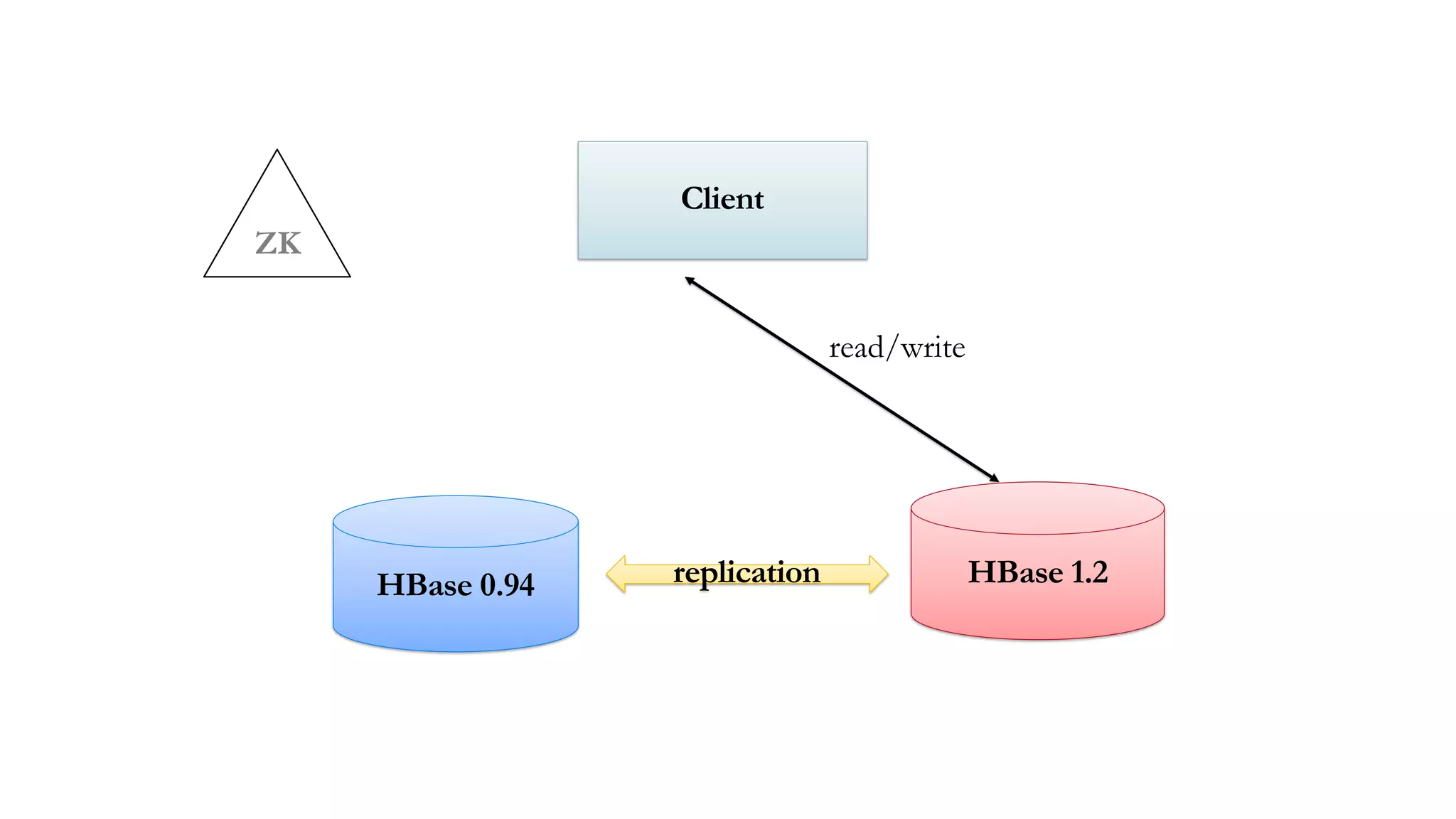

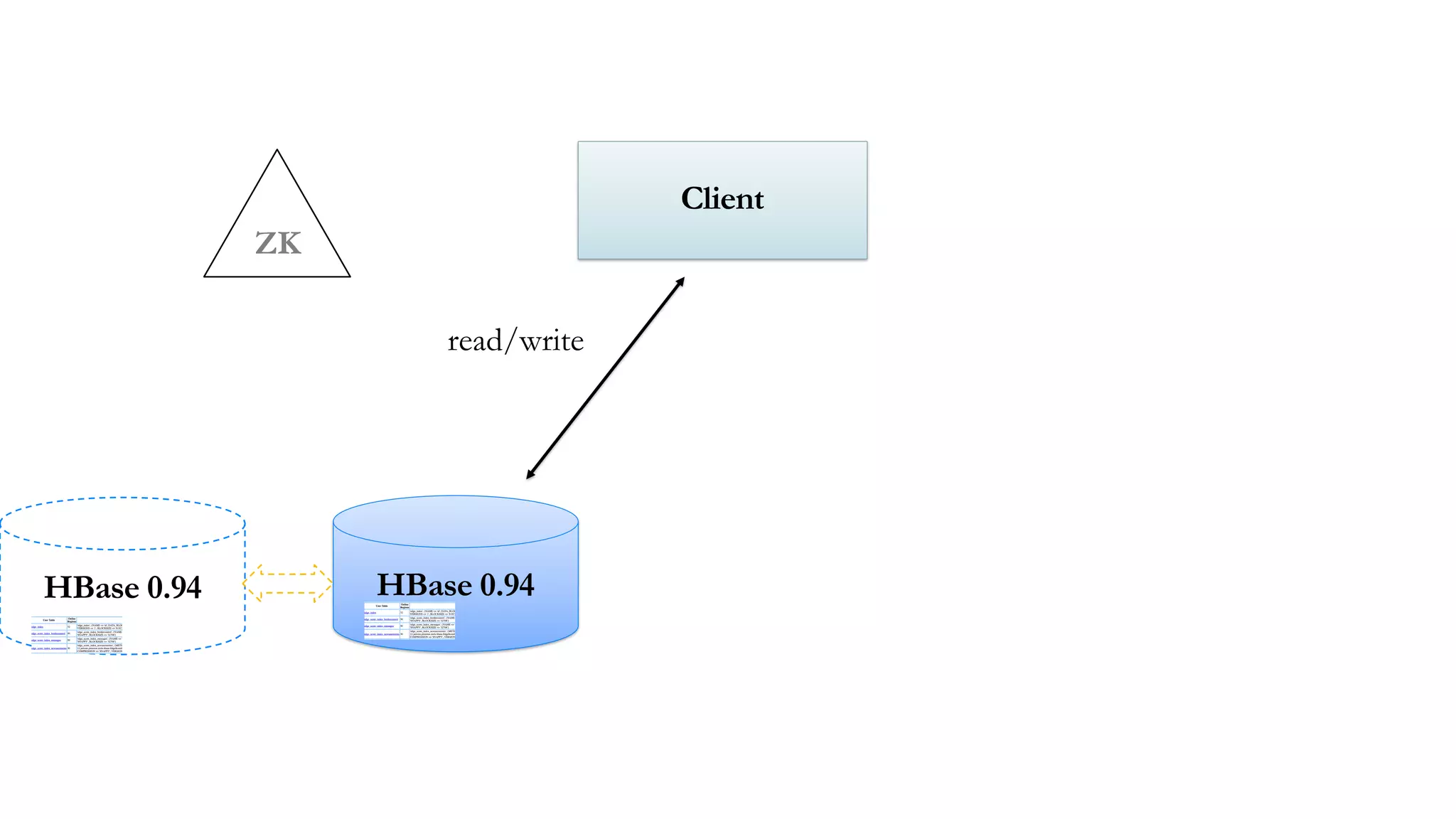

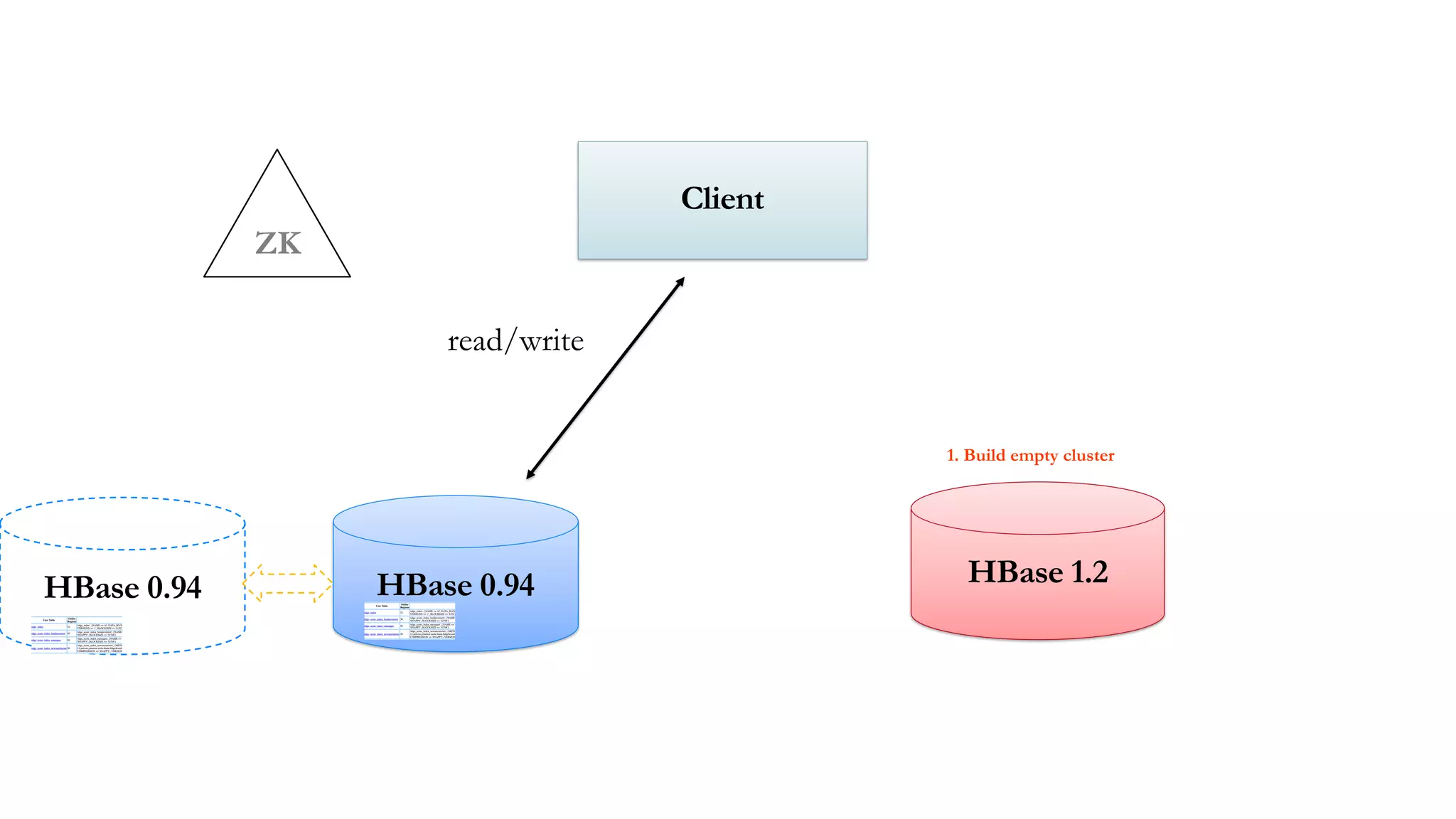

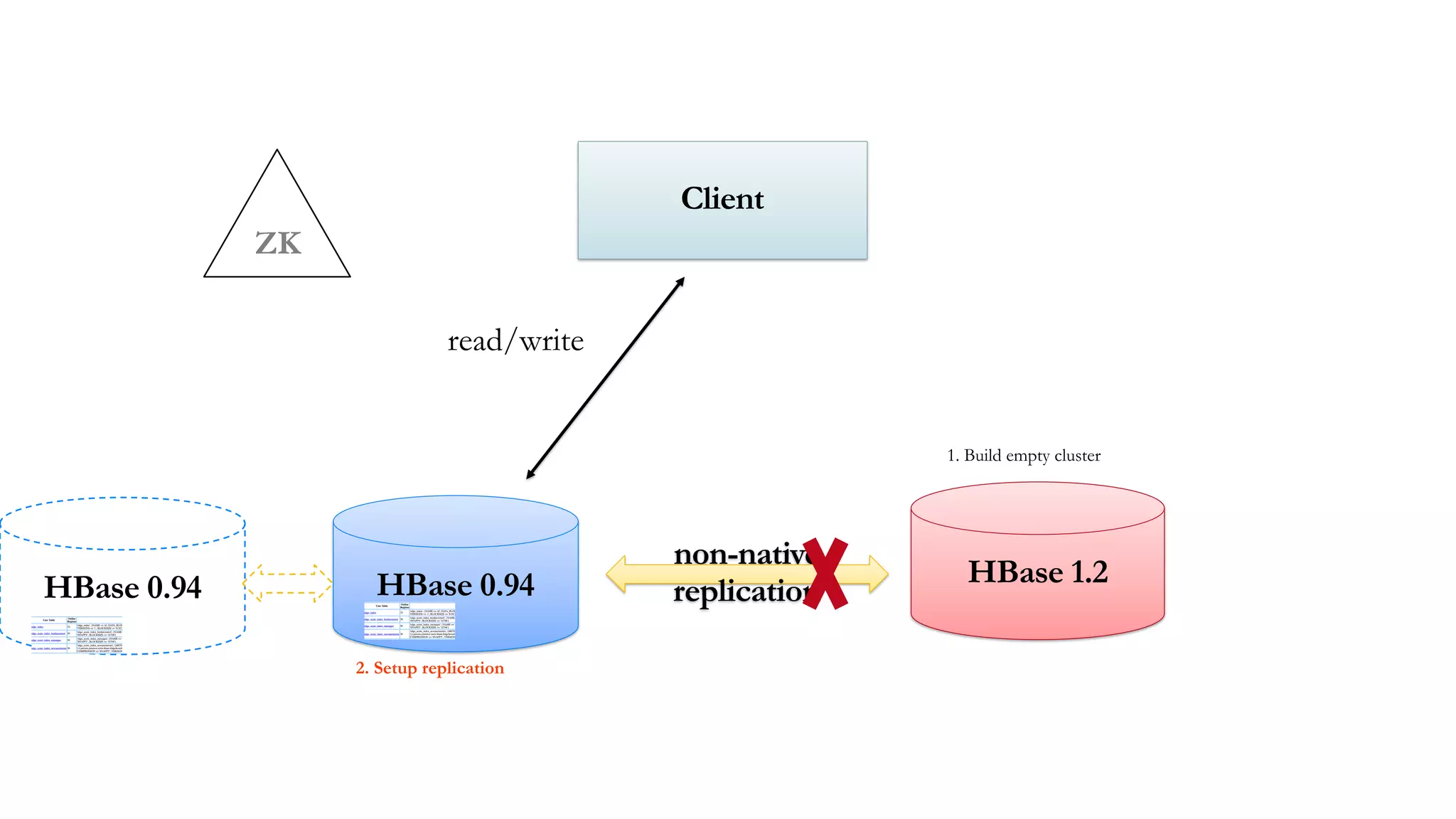

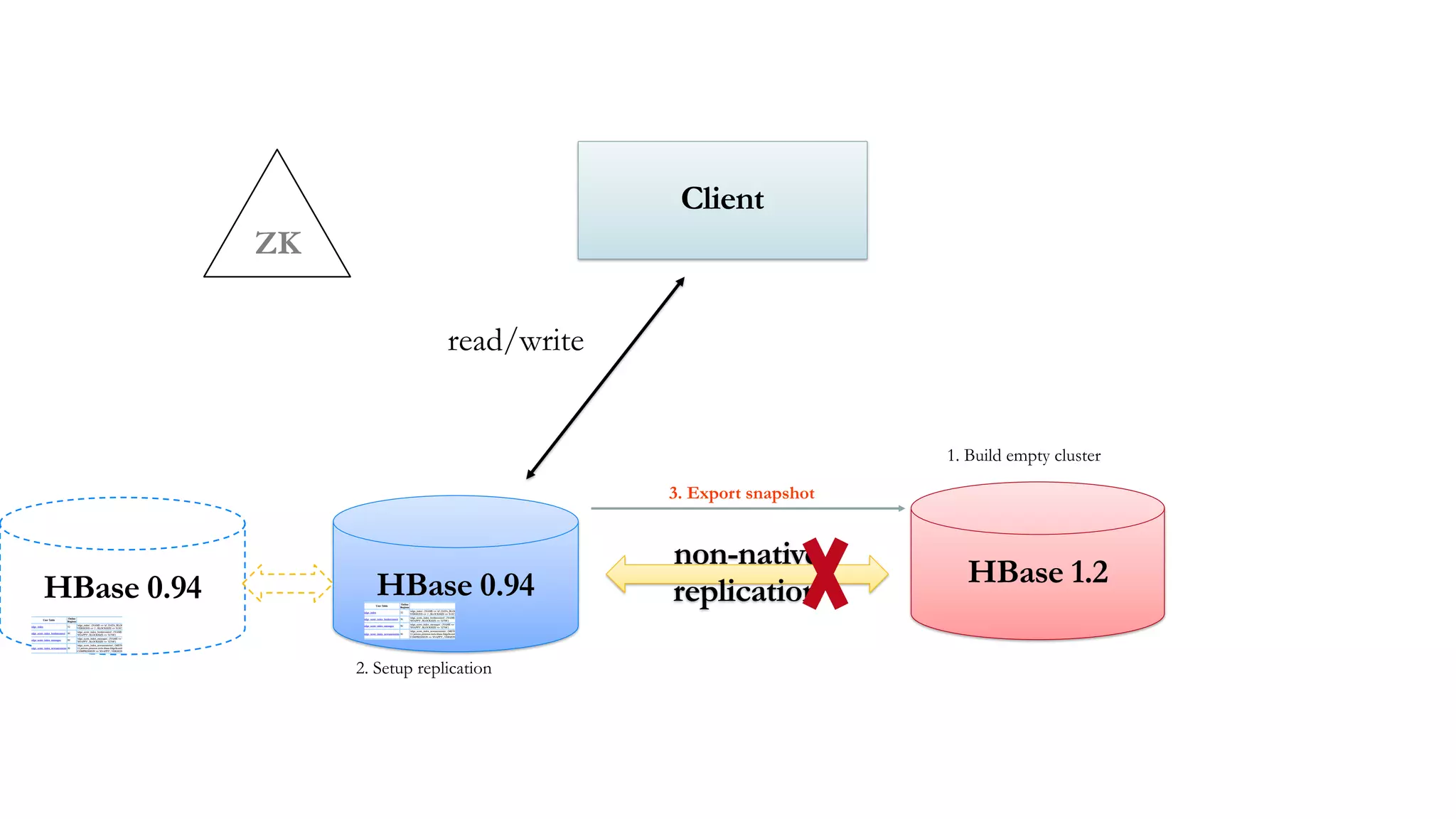

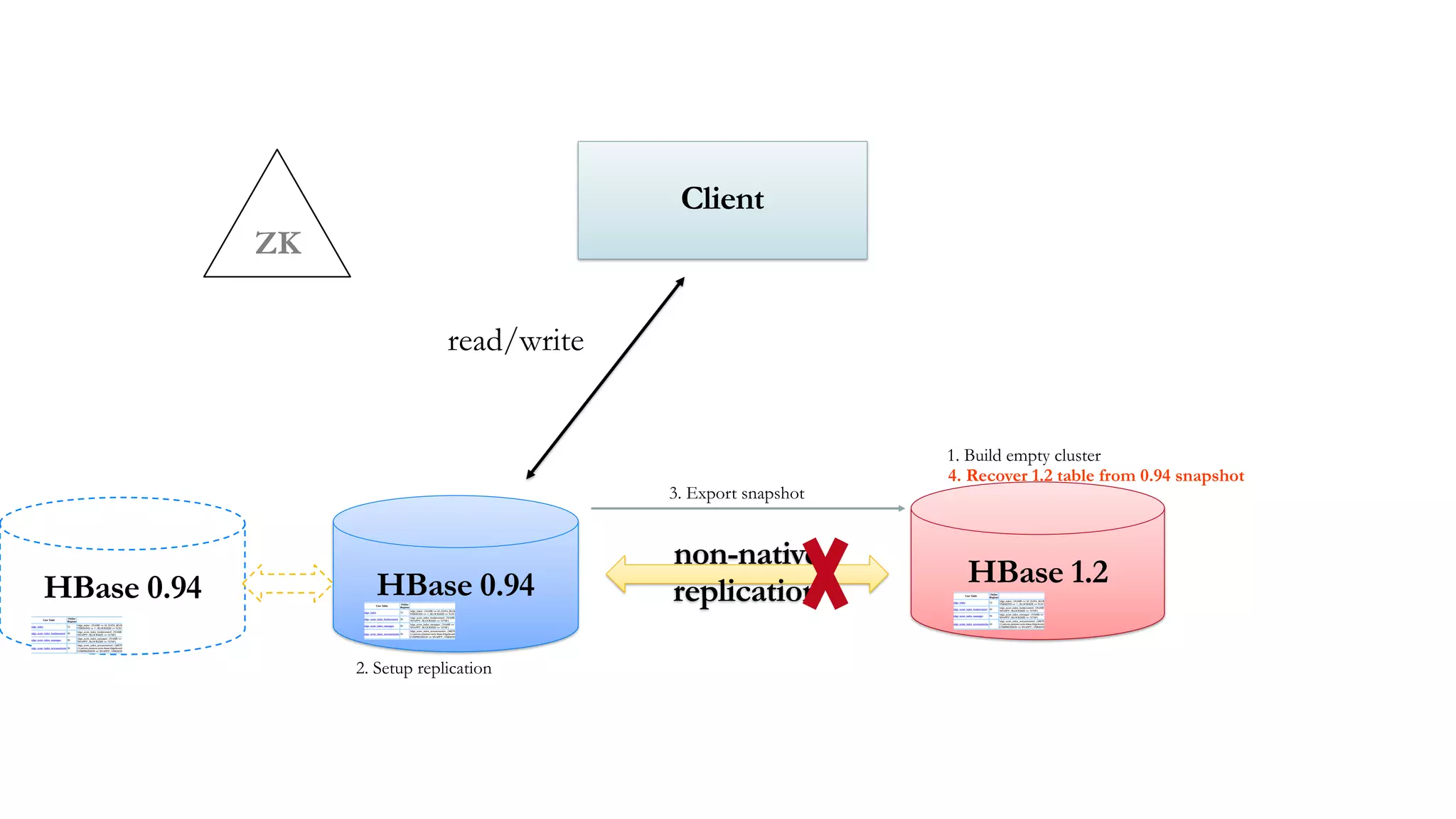

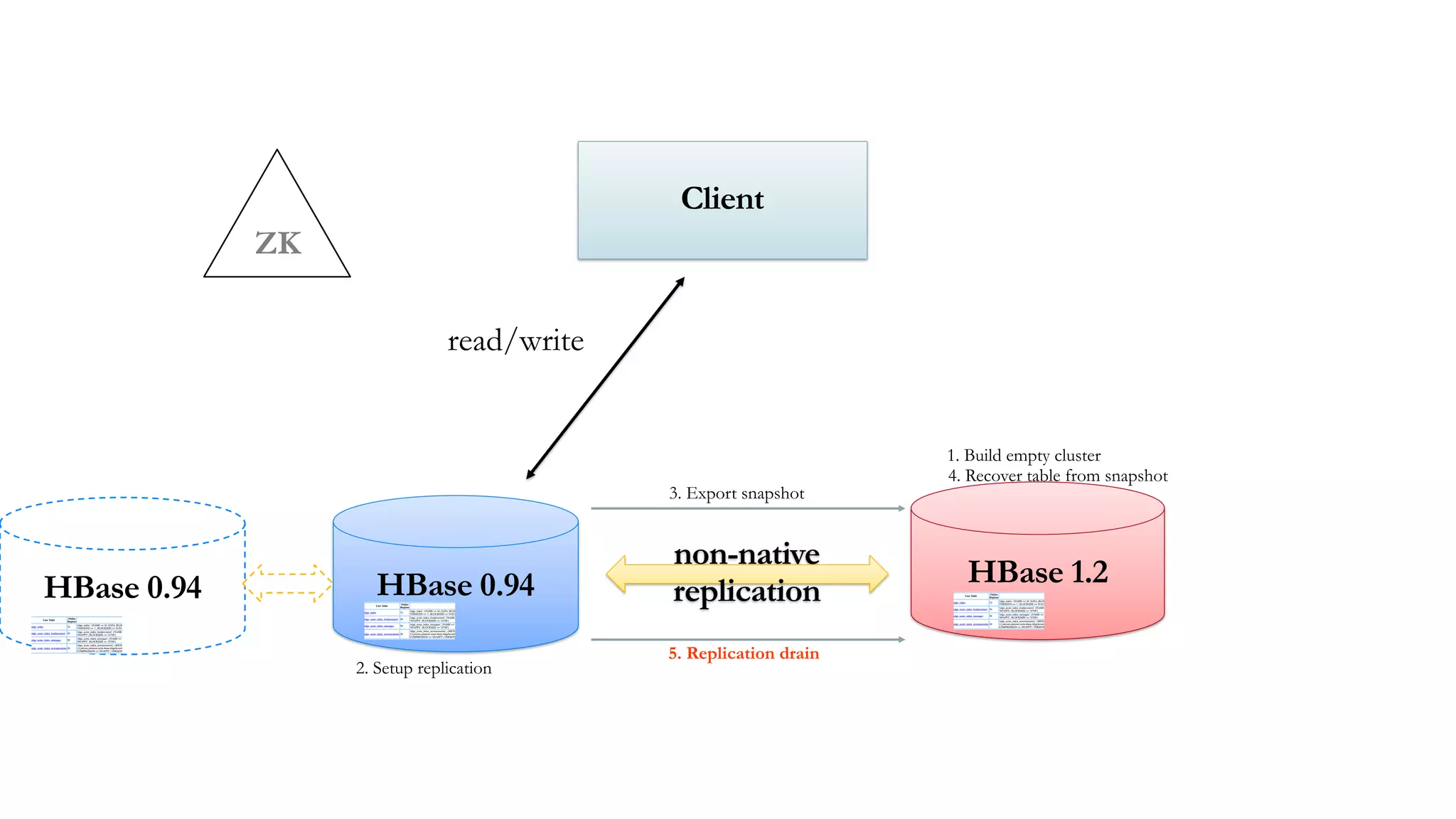

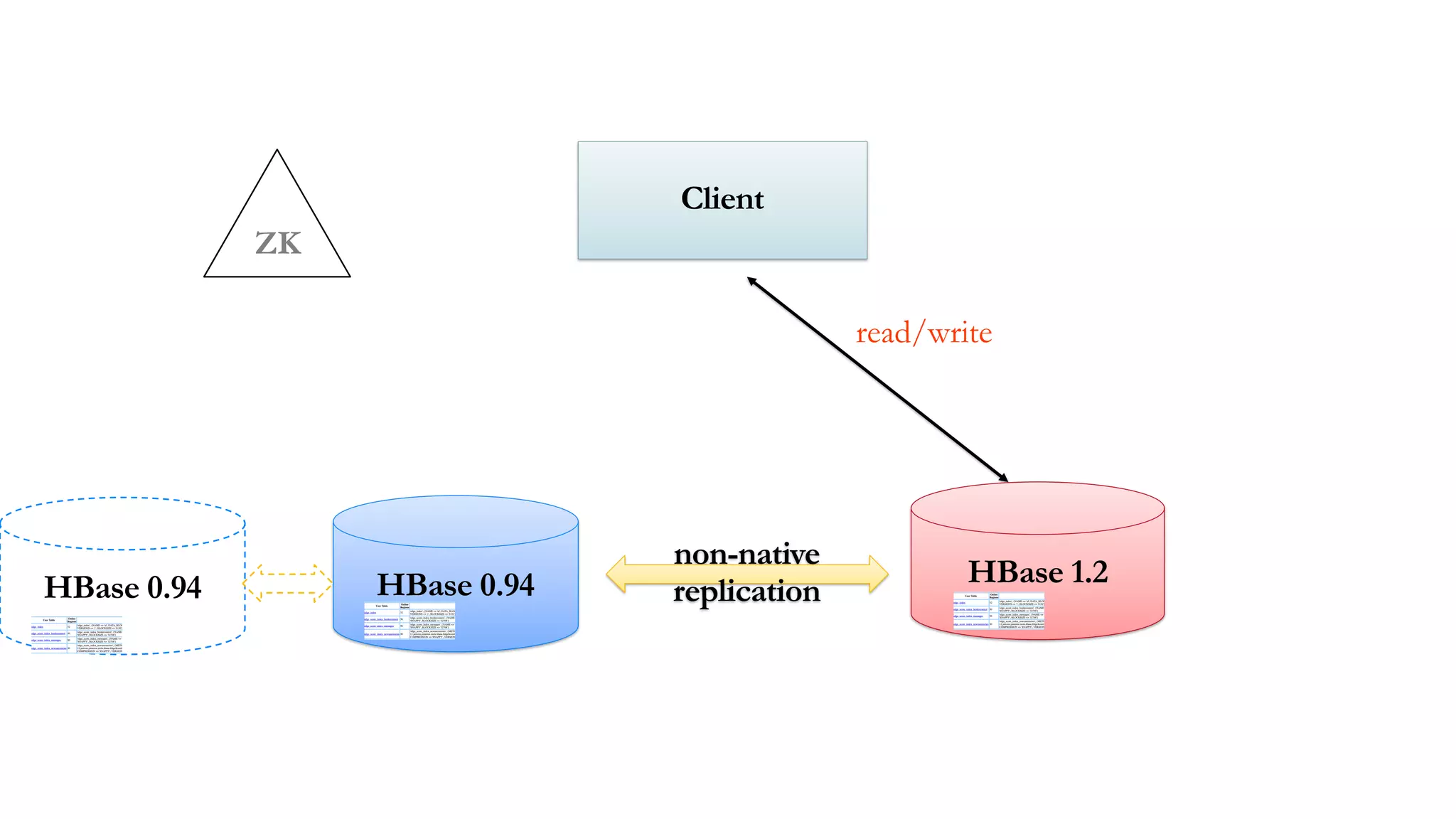

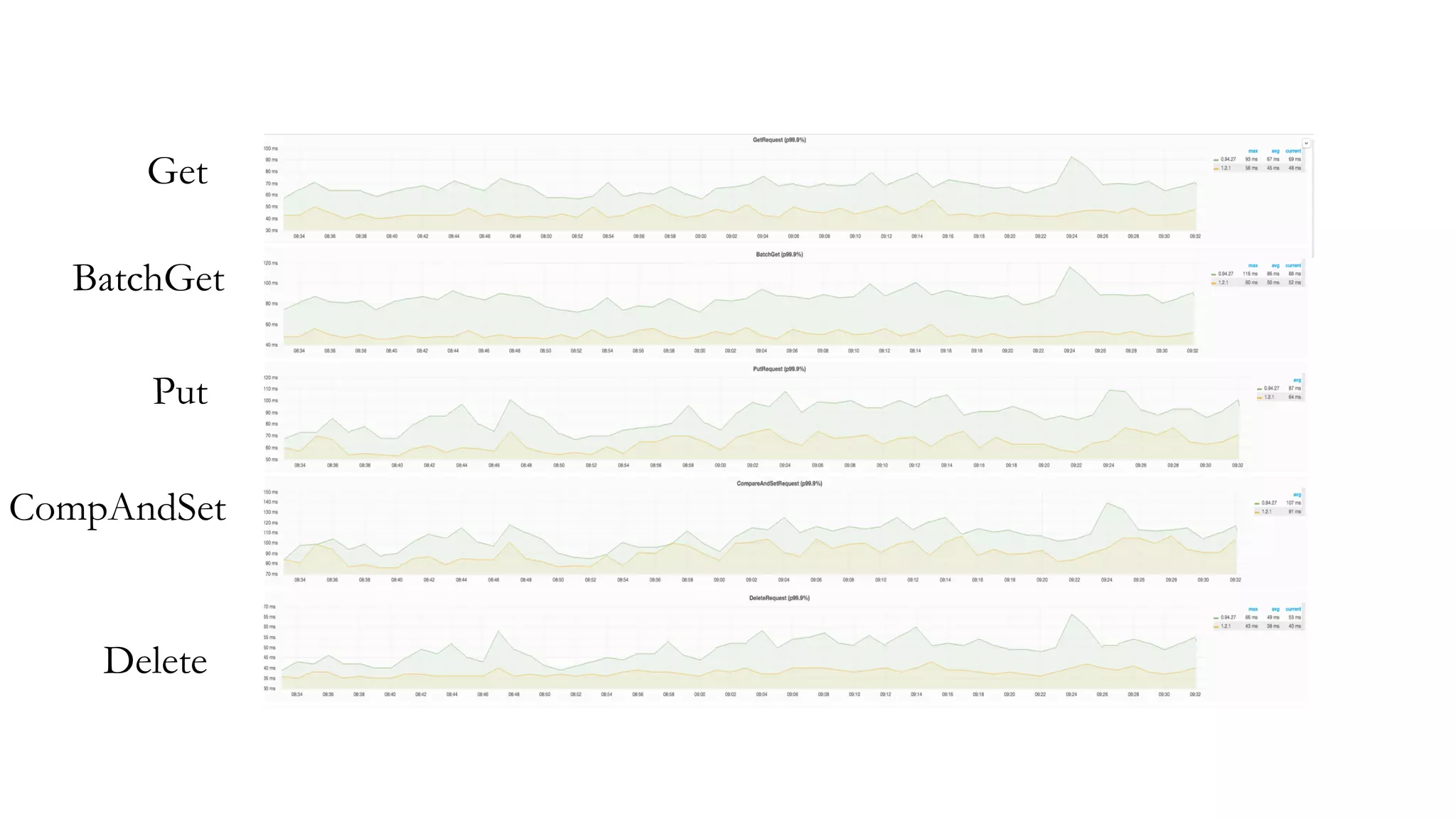

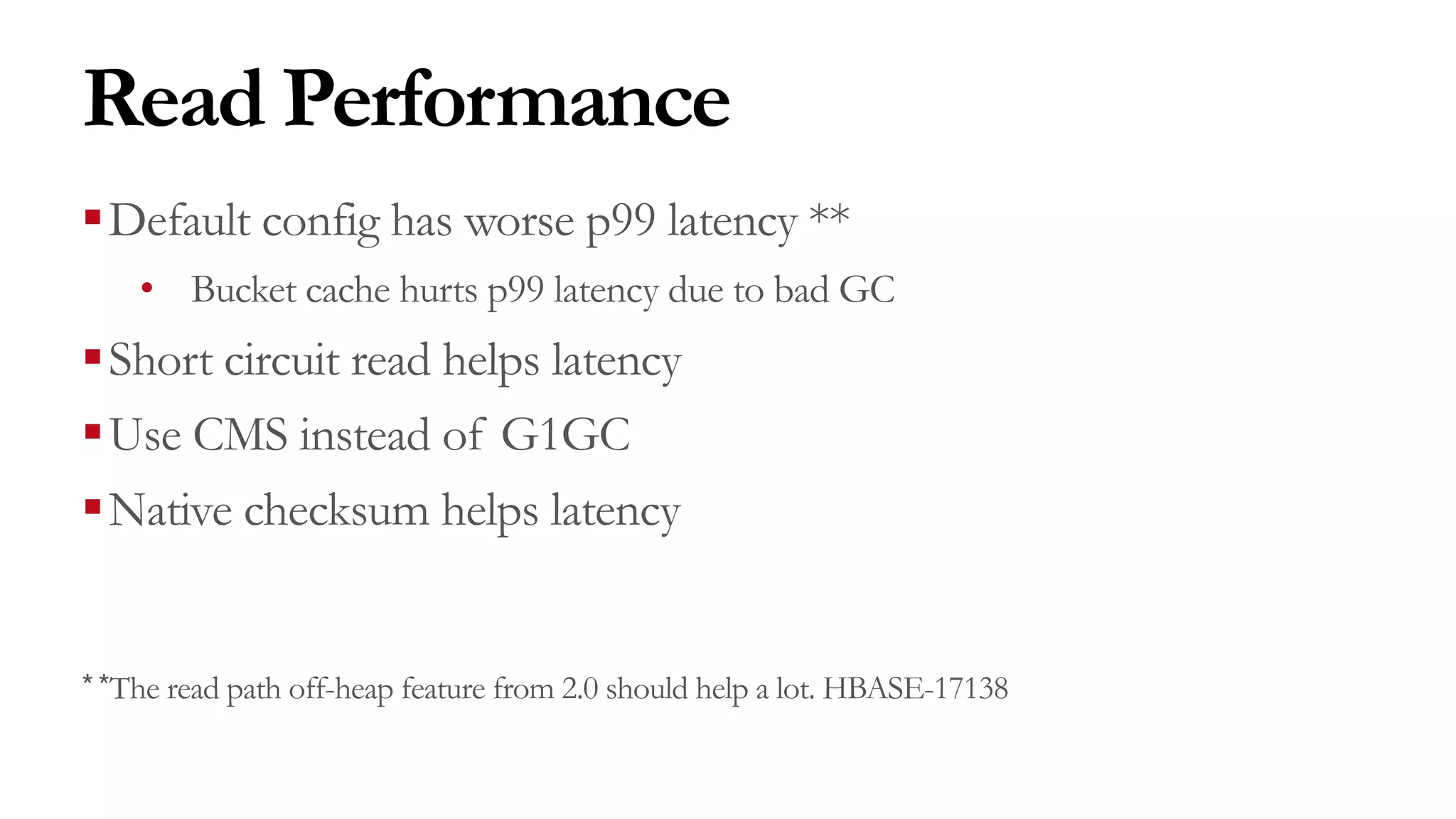

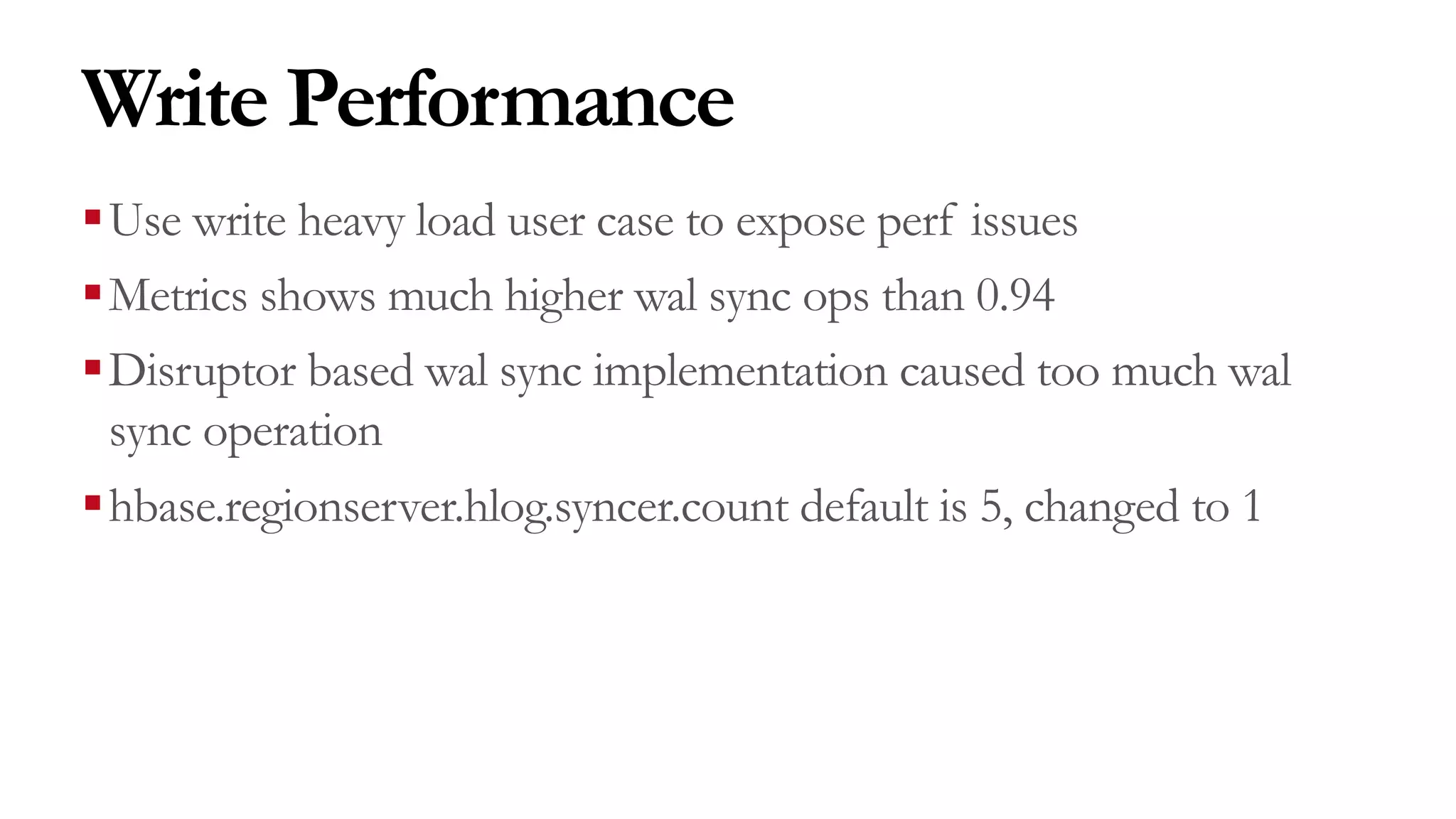

This document describes how Pinterest upgraded HBase from version 0.94 to 1.2 with zero downtime. It covers the critical applications that depend on HBase, the challenges of moving between the two versions, and the migration steps. It ends with the live switch-over, which caused no service interruption and brought performance improvements. Key strategies included setting up replication, exporting snapshots, and using an enhanced asynchronous client to handle data across the two versions.
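The summary names replication and snapshot export as key strategies but does not say why they are combined. Below is a toy Python model, a hypothetical illustration and not Pinterest's tooling. It assumes the common ordering in which replication is set up before the snapshot is taken, so edits that arrive while the snapshot is being copied are replayed afterwards and the new cluster converges on the old one. `ToyCluster`, `migrate`, and the in-memory queue are invented names. Real HBase replication and snapshot export also involve WALs, HDFS, and cross-version compatibility work, and this sketch ignores all of that.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Write = Tuple[str, int, str]  # (row key, timestamp, value)


@dataclass
class ToyCluster:
    """Hypothetical stand-in for an HBase cluster: row key -> (timestamp, value)."""
    rows: Dict[str, Tuple[int, str]] = field(default_factory=dict)

    def put(self, key: str, ts: int, value: str) -> None:
        # Keep the newest cell by timestamp (a simplification of HBase versioning),
        # so re-applying an edit the destination already has is harmless.
        current = self.rows.get(key)
        if current is None or ts >= current[0]:
            self.rows[key] = (ts, value)


def migrate(initial: List[Write], gap: List[Write], during_export: List[Write],
            replicate_first: bool = True) -> Tuple[ToyCluster, ToyCluster]:
    """Simulate moving data to a new cluster via snapshot export plus replicated edits."""
    source = ToyCluster()
    for w in initial:
        source.put(*w)

    queue: List[Write] = []  # edits shipped to the new cluster (stands in for replication)

    if replicate_first:
        # Replication starts first, so writes in the gap are queued AND
        # included in the snapshot; replaying them later is idempotent.
        for w in gap:
            source.put(*w)
            queue.append(w)
        snapshot = dict(source.rows)
    else:
        # Snapshot first: writes that land before replication starts
        # reach the source but are never shipped to the new cluster.
        snapshot = dict(source.rows)
        for w in gap:
            source.put(*w)

    # Writes keep arriving while the snapshot is exported; replication queues them.
    for w in during_export:
        source.put(*w)
        queue.append(w)

    # Restore the exported snapshot on the destination, then drain the queue.
    dest = ToyCluster(rows=dict(snapshot))
    for w in queue:
        dest.put(*w)
    return source, dest


src, dst = migrate([("a", 1, "x")], [("b", 2, "y")], [("a", 3, "z")])
print(dst.rows == src.rows)  # True: the new cluster caught up
```

Calling `migrate` with `replicate_first=False` shows the failure mode this ordering guards against. A write that lands between the snapshot and the start of replication (row `b` above) never reaches the new cluster.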