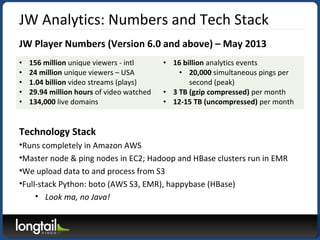

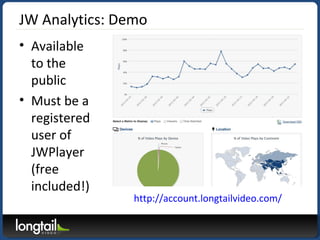

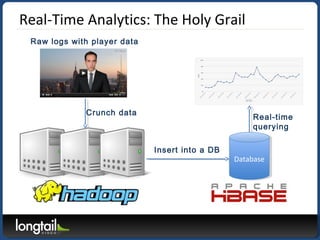

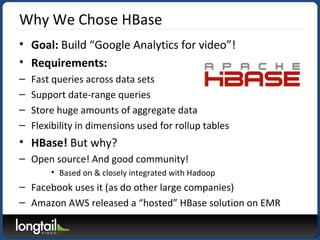

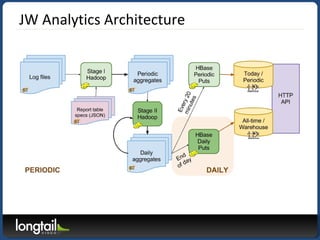

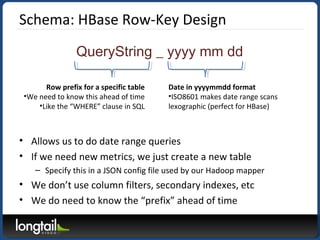

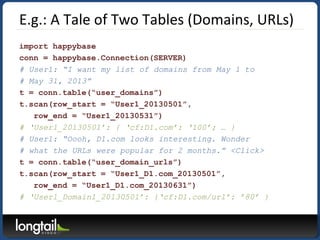

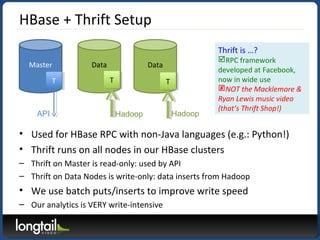

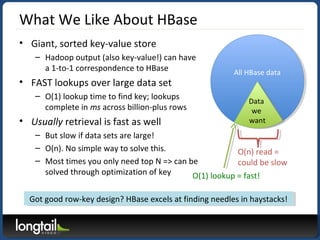

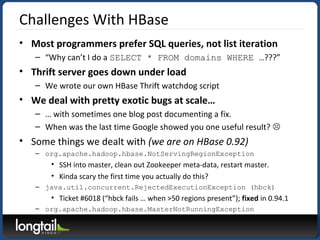

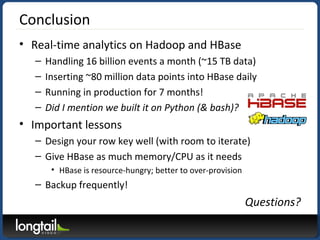

The document discusses the use of Hadoop and HBase for real-time video analytics by JW Player, detailing their technology stack, viewer statistics, and the decision to use HBase for fast querying and large data storage. It outlines the architecture, schema design, and challenges faced with HBase, emphasizing the importance of optimal row-key design and resource management. The overall goal is to create a comprehensive analytics platform for video content management and insights.