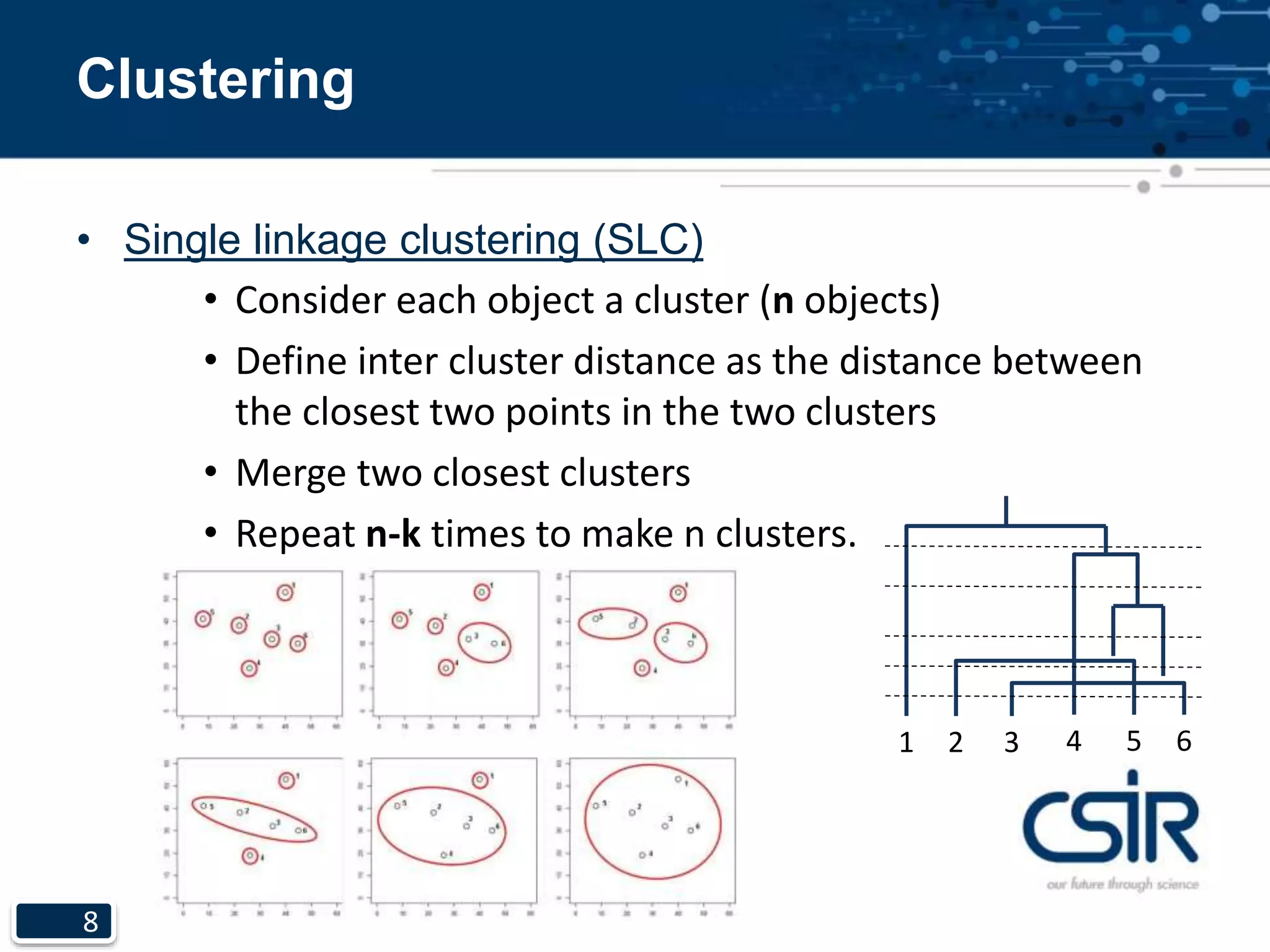

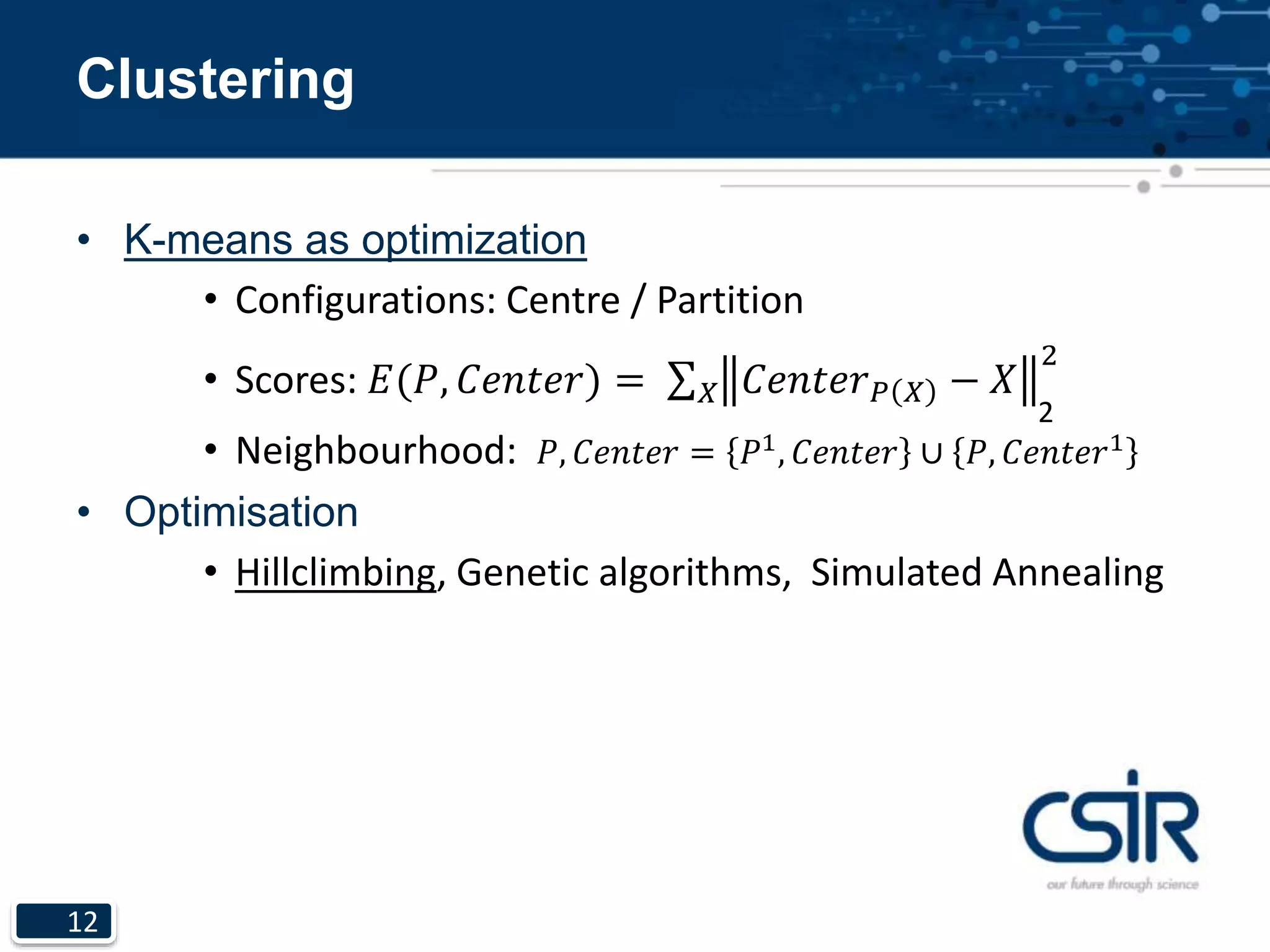

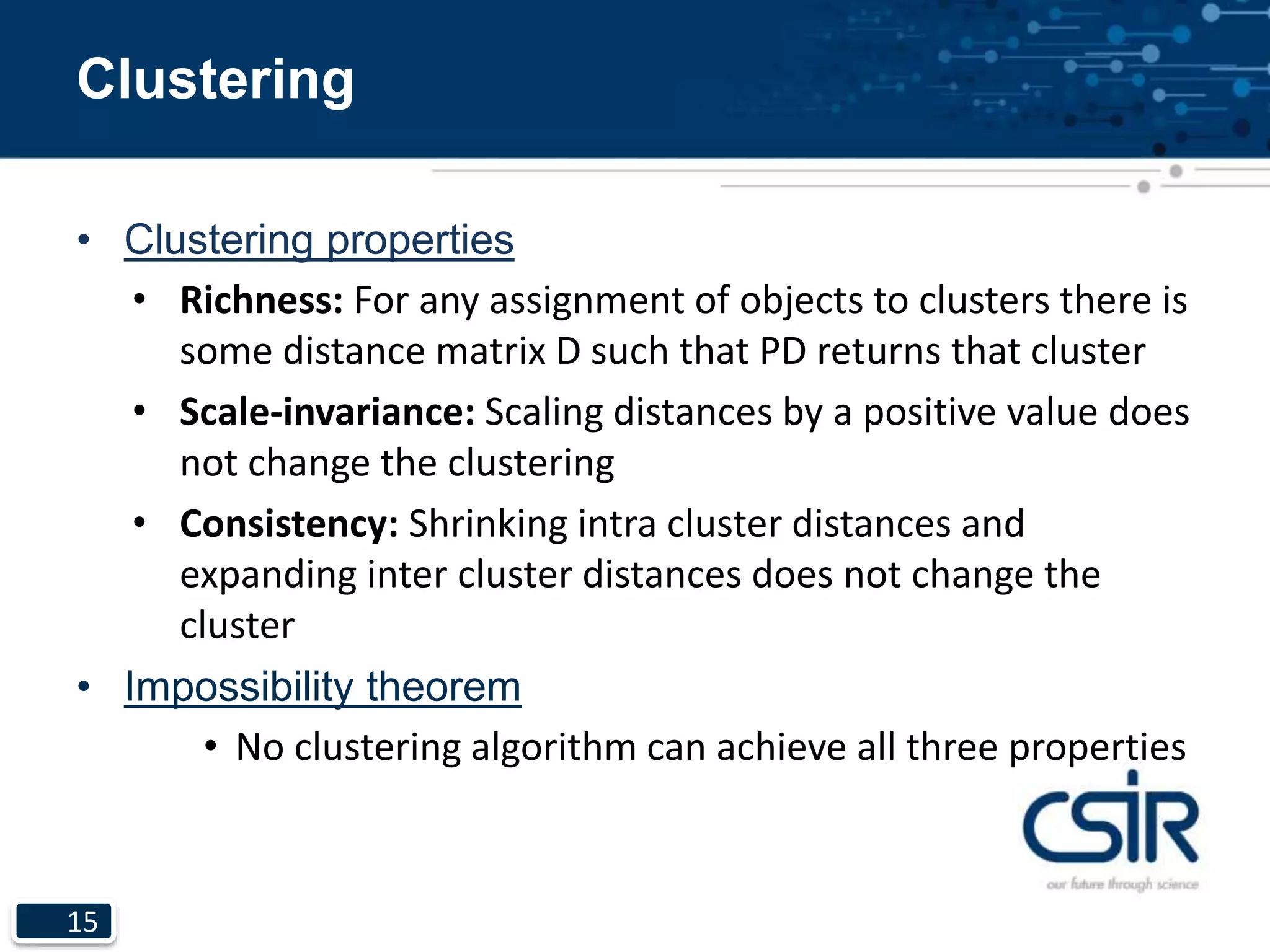

This document gives an overview of unsupervised learning and clustering algorithms. It introduces clustering as the task of grouping objects so that objects in the same group are more similar to each other than to objects in other groups. It describes three algorithms: single linkage clustering, k-means clustering, and expectation maximization. It also discusses the properties of clustering, noting that no clustering algorithm can satisfy richness, scale-invariance, and consistency simultaneously.
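As a concrete illustration of one of the algorithms named above, here is a minimal sketch of k-means in plain Python, using the standard assign-then-update iteration (Lloyd's algorithm). The function name `kmeans`, the `iterations` cap, and the choice to seed the centers with the first `k` points are assumptions made for this example; they are not taken from the document, and practical implementations usually pick initial centers at random.

```python
from math import dist


def kmeans(points, k, iterations=100):
    """Cluster `points` (a list of equal-length tuples) into k groups.

    Assumption for this sketch: the first k points are the initial centers,
    which keeps the result deterministic.
    """
    centers = [tuple(p) for p in points[:k]]
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        new_labels = [
            min(range(k), key=lambda j: dist(p, centers[j])) for p in points
        ]
        if new_labels == labels:
            break  # No point changed cluster, so the algorithm has converged.
        labels = new_labels
        # Update step: move each center to the mean of its assigned points.
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:  # Leave a center in place if its cluster is empty.
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, labels


points = [(0, 0), (0, 1), (10, 10), (10, 11)]
centers, labels = kmeans(points, k=2)
print(centers, labels)
```

On this toy input the two points near the origin end up in one cluster and the two points near (10, 10) in the other. The result depends on the initial centers, which is why the seeding rule is called out as an assumption.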