This document provides an overview of various Linux text processing tools including:

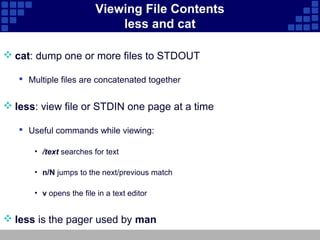

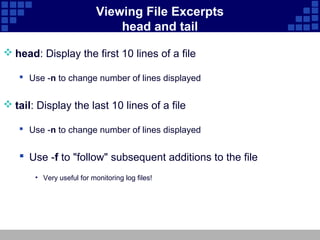

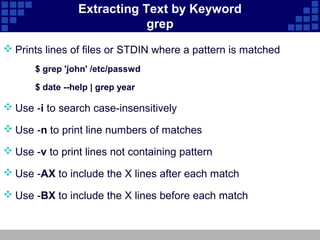

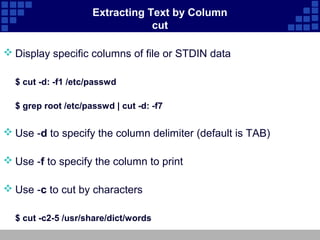

- Tools for extracting text from files like head, tail, grep, cut

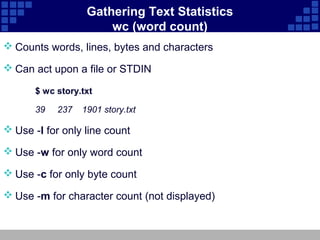

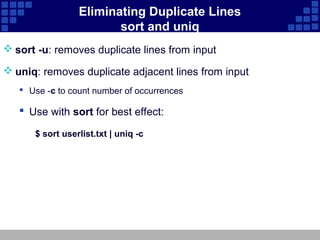

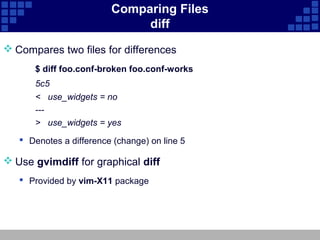

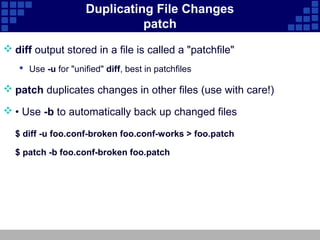

- Tools for analyzing text like wc, sort, diff, patch

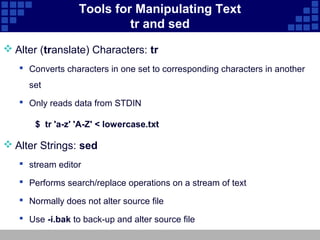

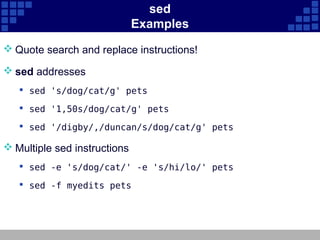

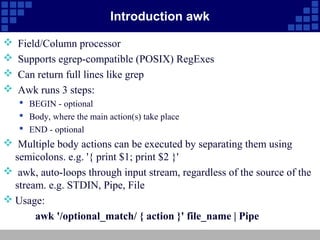

- Tools for manipulating text like tr, sed, and awk

It describes the basic usage and functions of each tool along with examples.

![Sorting Text sort

Sorts text to STDOUT - original file unchanged

$ sort [options] file(s)

Common options

-r performs a reverse (descending) sort

-n performs a numeric sort

-f ignores (folds) case of characters in strings

-u (unique) removes duplicate lines in output

-t c uses c as a field separator

-k X sorts by c-delimited field X

• Can be used multiple times](https://image.slidesharecdn.com/unit8textprocessingtools-120309010648-phpapp02/85/Unit-8-text-processing-tools-10-320.jpg)

![Special Characters for Complex Searches

Regular Expressions

^ represents beginning of line

$ represents end of line

Character classes as in bash:

[abc], [^abc]

[[:upper:]], [^[:upper:]]

Used by:

grep, sed, less, others](https://image.slidesharecdn.com/unit8textprocessingtools-120309010648-phpapp02/85/Unit-8-text-processing-tools-20-320.jpg)