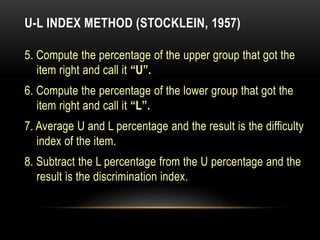

Traditional pen-and-paper tests can be used to assess students in various ways. Common types include achievement tests, personality tests, mastery tests, and standardized tests. Tests can be built from various item formats, such as multiple-choice, essay, and matching questions. When developing a test, educators must plan, construct, administer, and evaluate it. Evaluation includes analyzing each item's difficulty and effectiveness, along with students' responses, to improve future assessments.
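The item-analysis step can be sketched in a few lines of Python. This is a minimal sketch that assumes dichotomously scored items (1 = correct, 0 = incorrect). It uses the classical difficulty index, which is the proportion of students answering correctly. It treats item "effectiveness" as the upper-lower discrimination index, which is one common measure among several. The function names (`item_difficulty`, `item_discrimination`, `option_counts`) and the sample data are hypothetical and chosen for illustration.

```python
from collections import Counter
from typing import List


def item_difficulty(scores: List[List[int]], item: int) -> float:
    """Difficulty index p: proportion of students who answered `item` correctly.
    Higher values indicate an easier item."""
    return sum(row[item] for row in scores) / len(scores)


def item_discrimination(scores: List[List[int]], item: int, fraction: float = 0.27) -> float:
    """Upper-lower discrimination index D = p_upper - p_lower.
    Students are ranked by total score. The top and bottom `fraction`
    of the class form the comparison groups (27% is a common convention).
    Positive D means stronger students answered the item correctly more often."""
    ranked = sorted(scores, key=sum, reverse=True)  # stable sort keeps ties in original order
    n = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:n], ranked[-n:]
    p_upper = sum(row[item] for row in upper) / n
    p_lower = sum(row[item] for row in lower) / n
    return p_upper - p_lower


def option_counts(responses: List[str]) -> Counter:
    """Tally how many students chose each option on one multiple-choice item.
    This is useful for spotting distractors that no one selects."""
    return Counter(responses)


# Hypothetical scored responses: one row per student, one column per item.
scores = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 1],
    [1, 0, 0],
    [0, 0, 0],
]

for i in range(3):
    print(f"item {i}: p = {item_difficulty(scores, i):.2f}, "
          f"D = {item_discrimination(scores, i, fraction=0.4):.2f}")
print(option_counts(["A", "B", "A", "C", "A"]))
```

In this toy data, item 0 is the easiest (p = 0.80). Item 1 separates the top and bottom groups most sharply (D = 1.00). An item with a low or negative D would be a candidate for revision before the test is reused.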