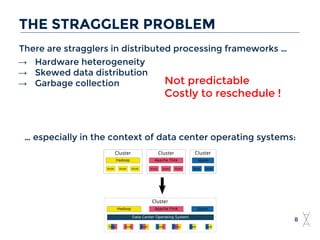

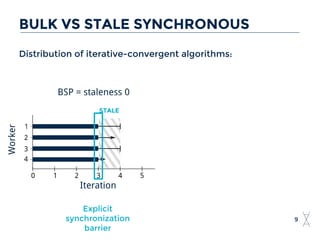

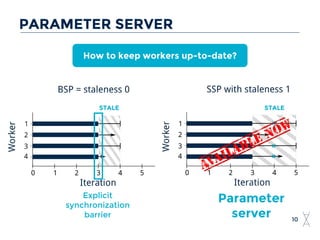

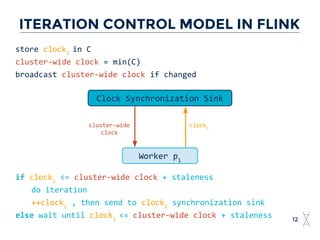

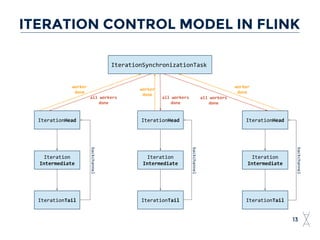

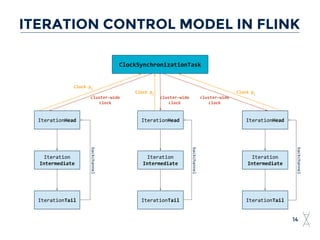

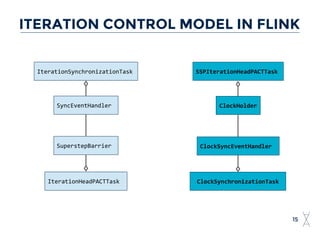

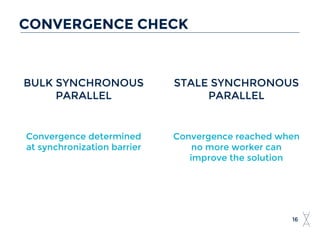

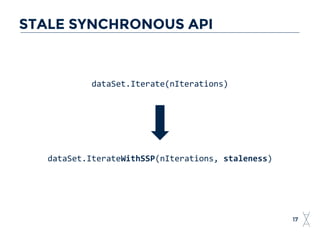

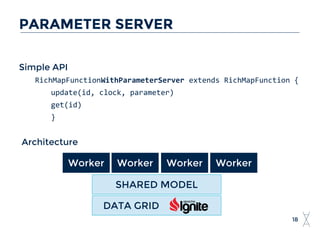

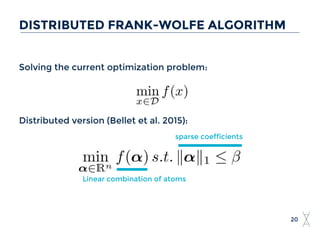

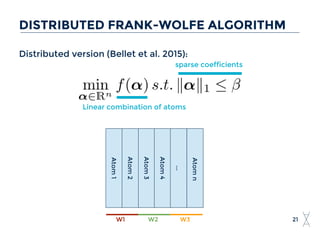

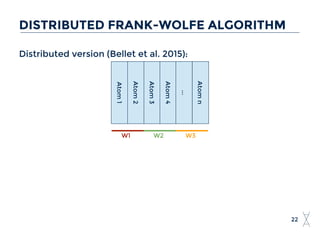

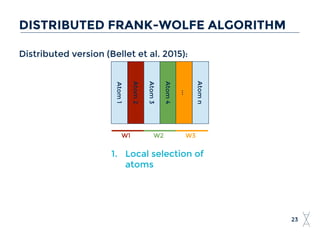

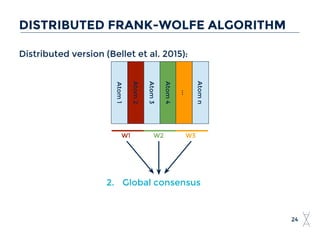

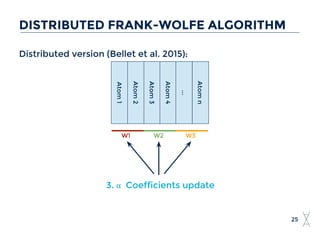

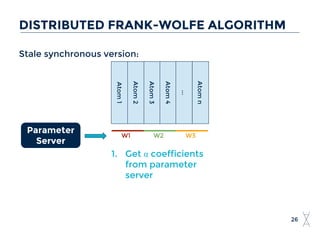

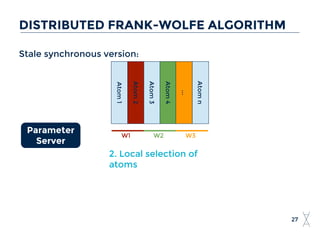

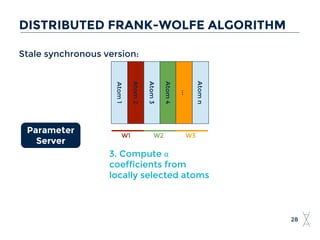

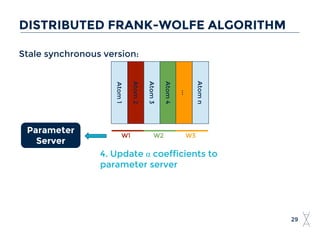

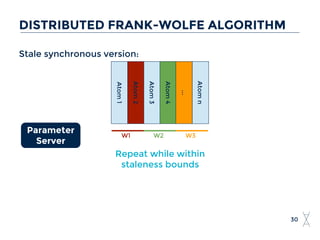

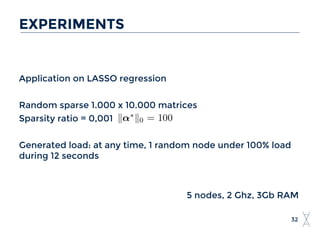

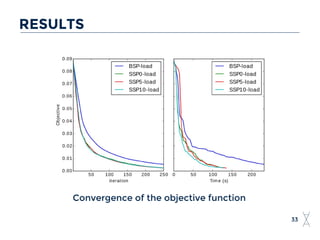

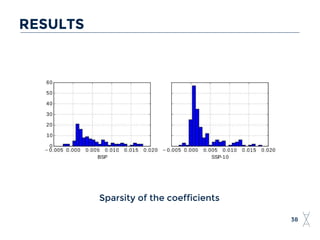

This document discusses two topics: (1) Stale Synchronous Parallel (SSP) iterations on Apache Flink as a way to mitigate stragglers, and (2) a distributed Frank-Wolfe algorithm built on SSP and a parameter server. For SSP on Flink, it describes integrating an iteration-control model and API that let a worker start its next iteration as long as the data it works with is within a staleness threshold, rather than waiting for every worker to finish the current iteration. For the distributed Frank-Wolfe algorithm, it uses SSP to coordinate local atom selection on the workers with global coefficient updates on a parameter server, and applies the method to LASSO regression problems.
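To make the interplay of the two ideas concrete, here is a minimal single-process sketch in Python. It is not Flink's iteration API and not the authors' implementation. Everything in it is an assumption for illustration: the function and parameter names (`ssp_frank_wolfe_lasso`, `staleness`, `refresh_period`), the round-robin column partitioning, the straggler model (worker 0 pulls fresh state rarely), and the exact line-search step size. It solves the constrained form of LASSO, minimize `0.5 * ||A alpha - y||^2` subject to `||alpha||_1 <= beta`. Each round, every simulated worker picks the best atom (the column with the largest absolute gradient) among the columns it owns, using a residual snapshot that is at most `staleness` versions old; this models the bounded-staleness read guarantee of SSP. The "parameter server" then applies the single globally best atom.

```python
import random


def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def ssp_frank_wolfe_lasso(columns, y, beta, num_workers=3, staleness=2,
                          refresh_period=None, iters=100):
    """Hypothetical simulation of distributed Frank-Wolfe for constrained LASSO,
    min 0.5 * ||A @ alpha - y||^2  s.t.  ||alpha||_1 <= beta,
    where workers read the shared residual with SSP-style bounded staleness.

    columns: the columns A_j of A, each a list of length len(y).
    Returns (alpha, objective value initially and after each server update).
    """
    n, m = len(columns), len(y)
    assert n >= num_workers, "every worker needs at least one column"
    # Assumed partitioning: worker w owns columns w, w + W, w + 2W, ...
    owned = [list(range(w, n, num_workers)) for w in range(num_workers)]
    # Assumed straggler model: worker 0 pulls fresh state rarely, others every round.
    if refresh_period is None:
        refresh_period = [staleness + 5] + [1] * (num_workers - 1)

    # Parameter-server state: coefficients, z = A @ alpha, and every residual version.
    alpha = [0.0] * n
    z = [0.0] * m
    residuals = [[zi - yi for zi, yi in zip(z, y)]]  # residuals[v] = A @ alpha_v - y
    snapshot = [0] * num_workers                      # version each worker last pulled
    objective = [0.5 * dot(residuals[0], residuals[0])]

    for t in range(iters):  # t is also the current server version
        proposals = []
        for w in range(num_workers):
            # SSP bound: a snapshot more than `staleness` versions old must be refreshed.
            if t - snapshot[w] > staleness or t % refresh_period[w] == 0:
                snapshot[w] = t
            r_view = residuals[snapshot[w]]
            # Local atom selection: d/d alpha_j of 0.5*||A alpha - y||^2 is A_j . r.
            grads = {j: dot(columns[j], r_view) for j in owned[w]}
            best = max(grads, key=lambda j: abs(grads[j]))
            proposals.append((abs(grads[best]), best, grads[best]))

        # Global step on the server: take the atom with the largest reported |gradient|.
        _, j, g_j = max(proposals)
        s_j = -beta if g_j > 0 else beta          # L1-ball vertex -beta*sign(g_j)*e_j
        u = [s_j * a for a in columns[j]]         # A @ s
        d = [ui - zi for ui, zi in zip(u, z)]     # z moves from z toward u
        r = residuals[-1]
        dd = dot(d, d)
        # Exact line search on the fresh residual, clipped to [0, 1].
        gamma = 0.0 if dd == 0 else min(1.0, max(0.0, -dot(r, d) / dd))
        alpha = [(1 - gamma) * a for a in alpha]  # convex combination keeps ||alpha||_1 <= beta
        alpha[j] += gamma * s_j
        z = [zi + gamma * di for zi, di in zip(z, d)]
        residuals.append([zi - yi for zi, yi in zip(z, y)])
        objective.append(0.5 * dot(residuals[-1], residuals[-1]))

    return alpha, objective


if __name__ == "__main__":
    # Synthetic problem whose true coefficients lie on the L1 ball of radius 3.5.
    rng = random.Random(0)
    m, n = 30, 12
    columns = [[rng.gauss(0, 1) for _ in range(m)] for _ in range(n)]
    x_true = [0.0] * n
    x_true[2], x_true[7] = 1.5, -2.0
    y = [sum(columns[j][i] * x_true[j] for j in range(n)) for i in range(m)]
    alpha, obj = ssp_frank_wolfe_lasso(columns, y, beta=3.5)
    print(f"objective: {obj[0]:.3f} -> {obj[-1]:.3f}")
```

Setting `staleness=0` forces every worker to read the latest residual in every round, which recovers bulk-synchronous behavior; larger values let worker 0 compute on a residual up to `staleness` versions old. Because the server performs its line search on the fresh residual, the objective never increases even when a stale atom wins; the classic step `2 / (t + 2)` is a common alternative but does not give that guarantee under stale atom selection. Keeping the full `residuals` history is only for clarity in a simulation; a real parameter server would need to retain at most `staleness + 1` versions.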