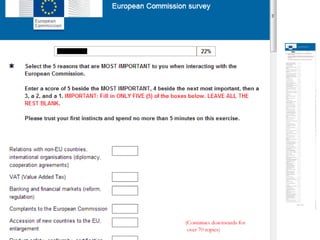

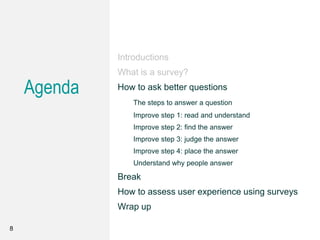

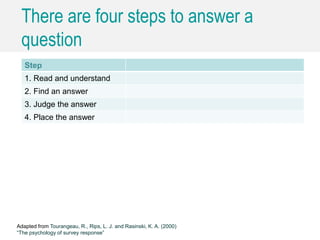

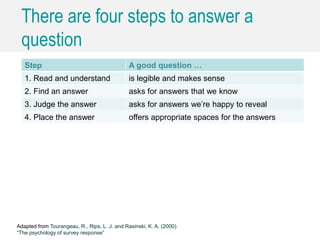

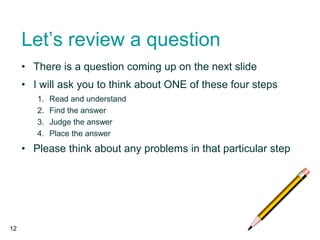

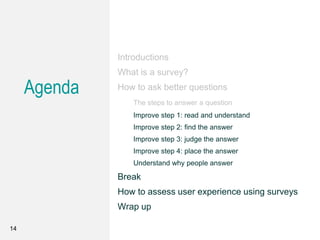

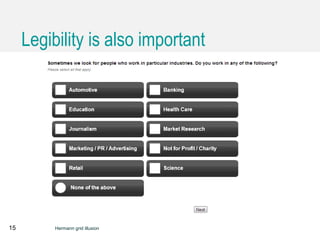

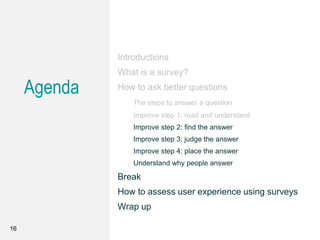

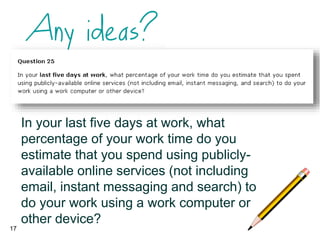

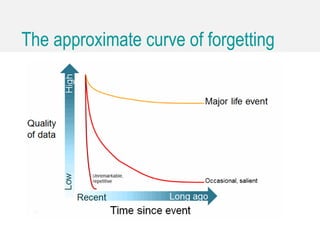

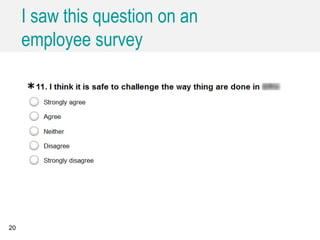

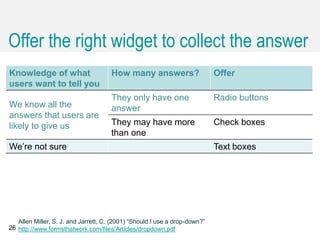

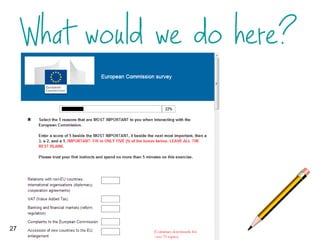

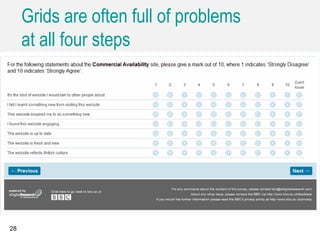

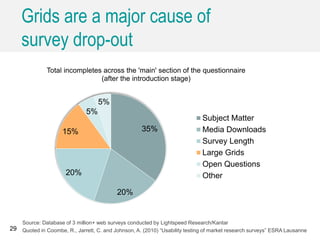

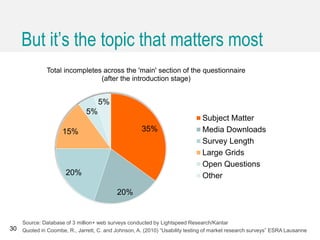

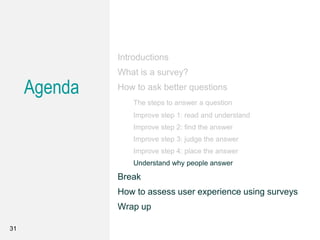

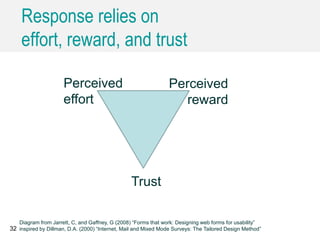

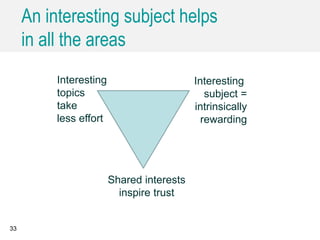

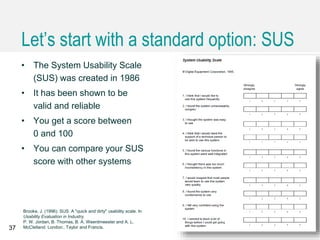

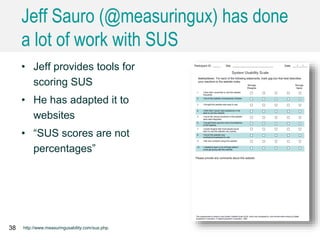

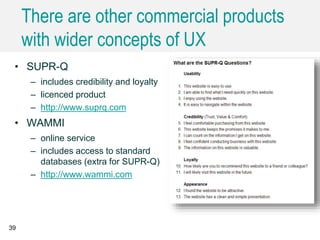

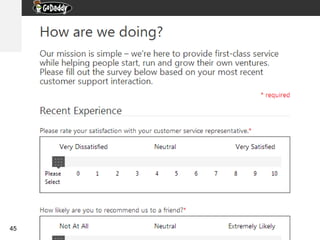

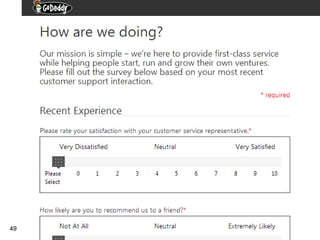

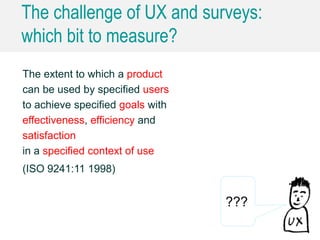

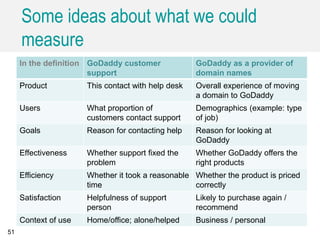

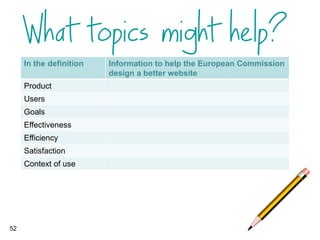

The document outlines a workshop led by Caroline Jarrett on asking better questions and assessing user experience through surveys. It details a structured approach to crafting effective survey questions, including steps such as reading, finding, judging, and placing answers, and it emphasizes the importance of user trust and engagement. The workshop also covers practical tips for assessing user experience and for designing surveys that gather meaningful feedback.