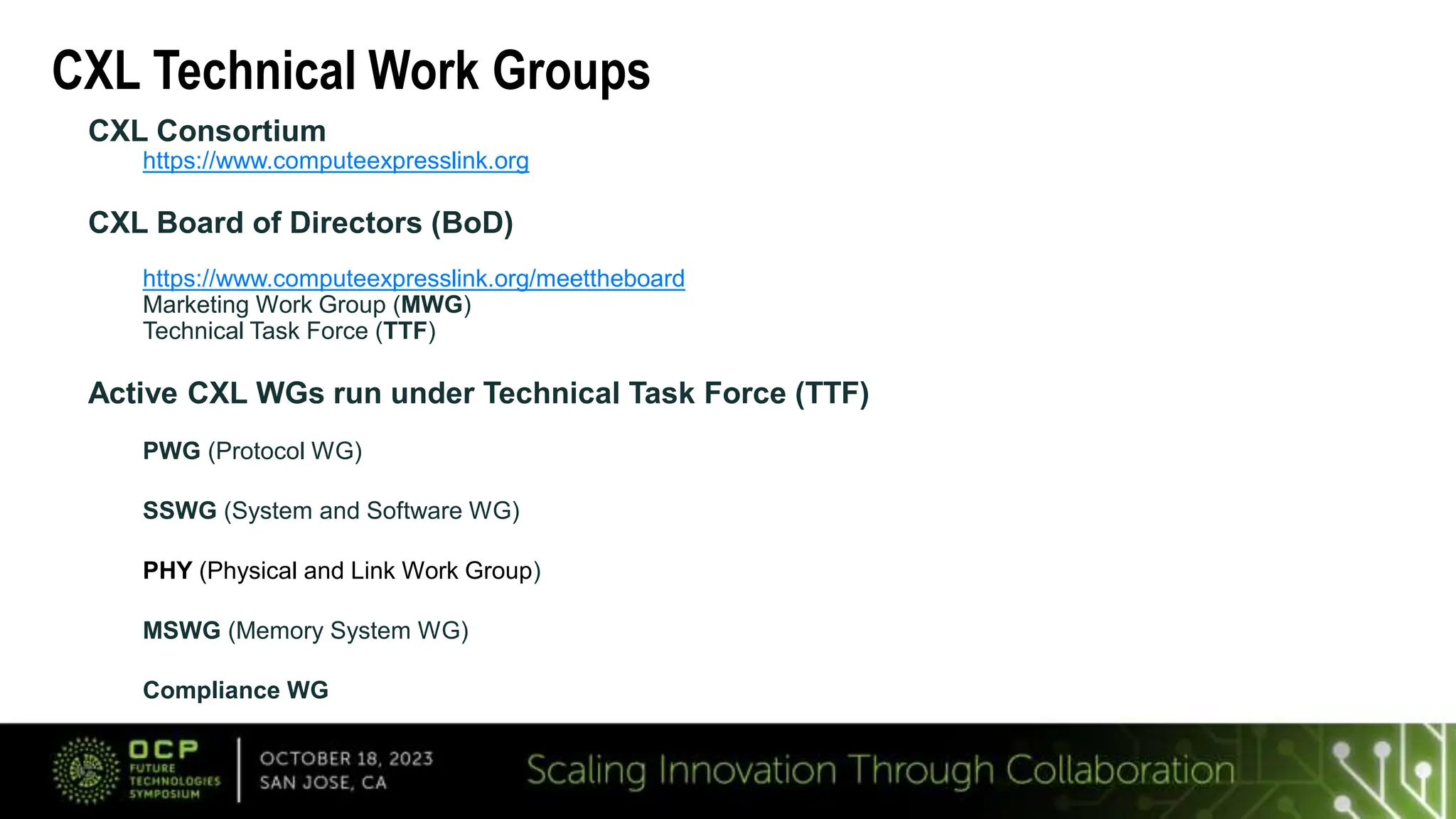

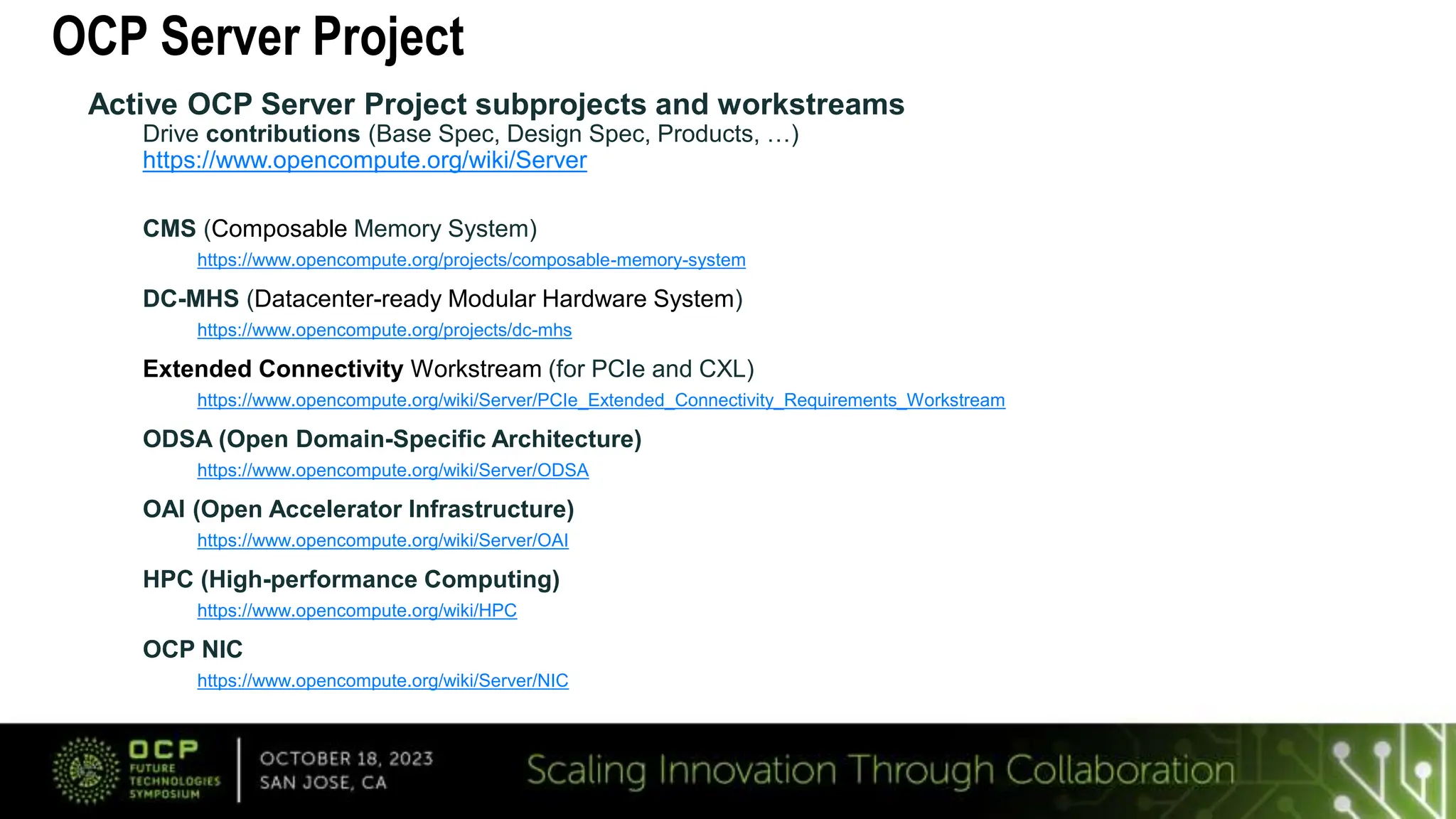

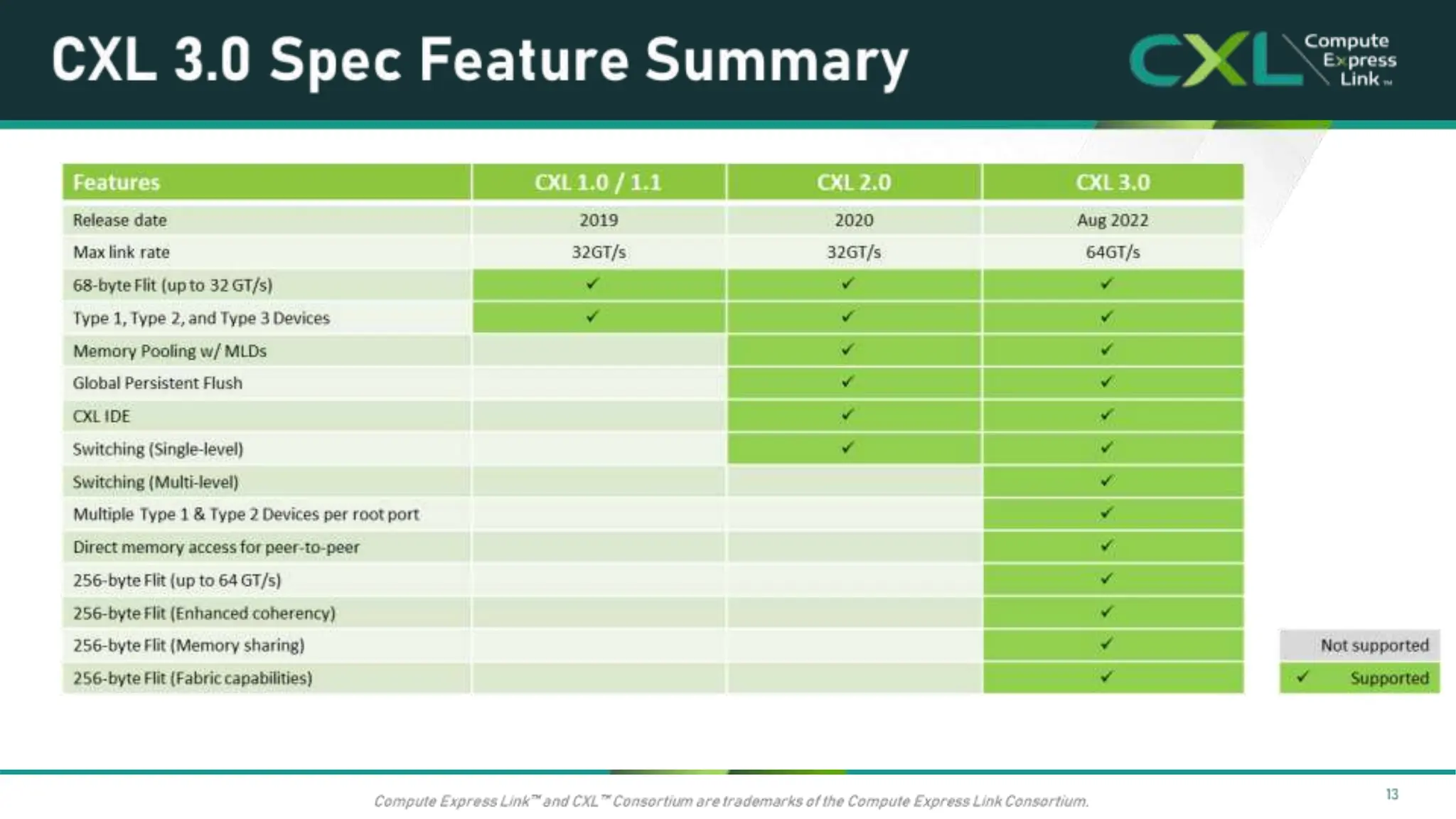

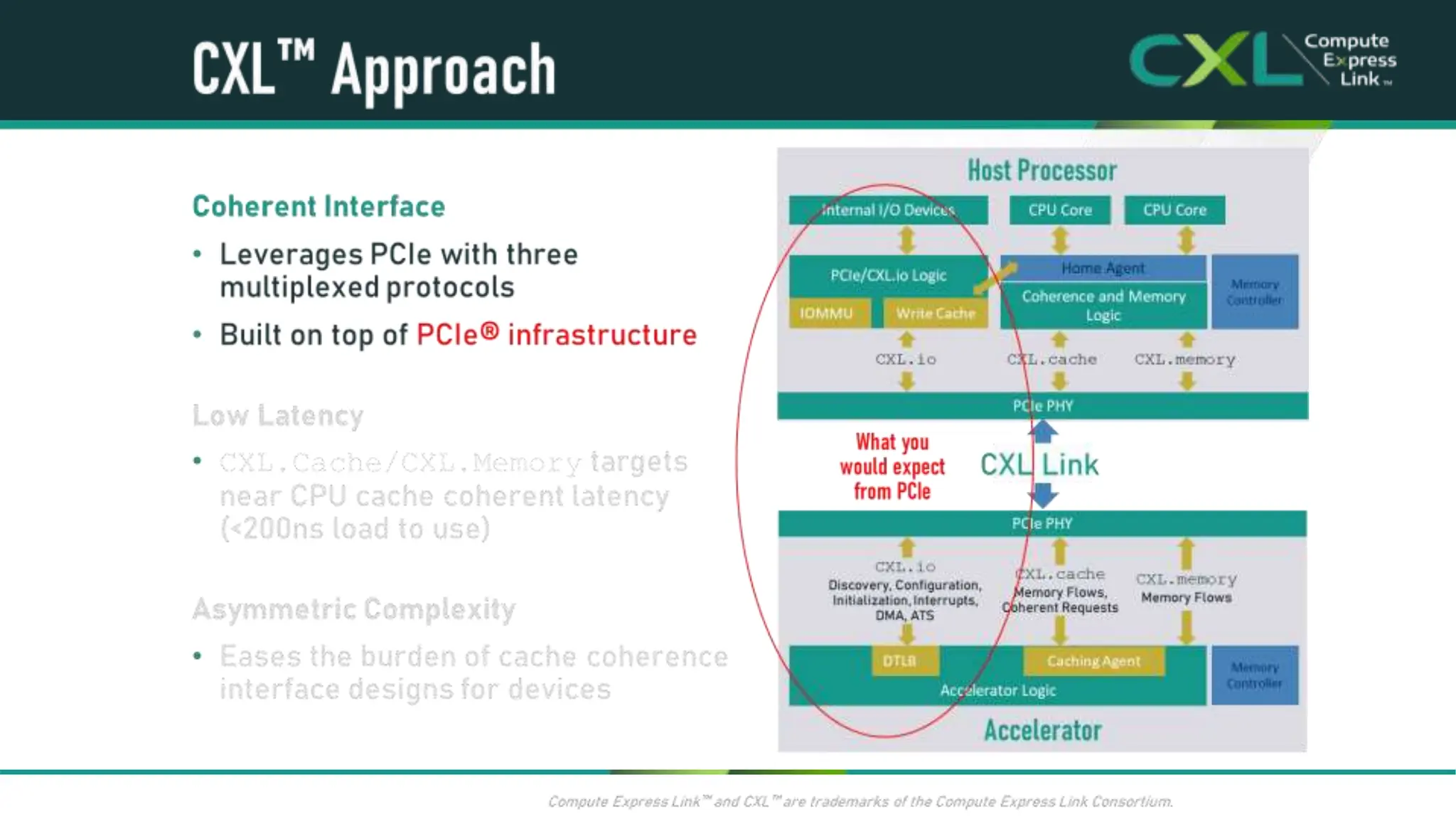

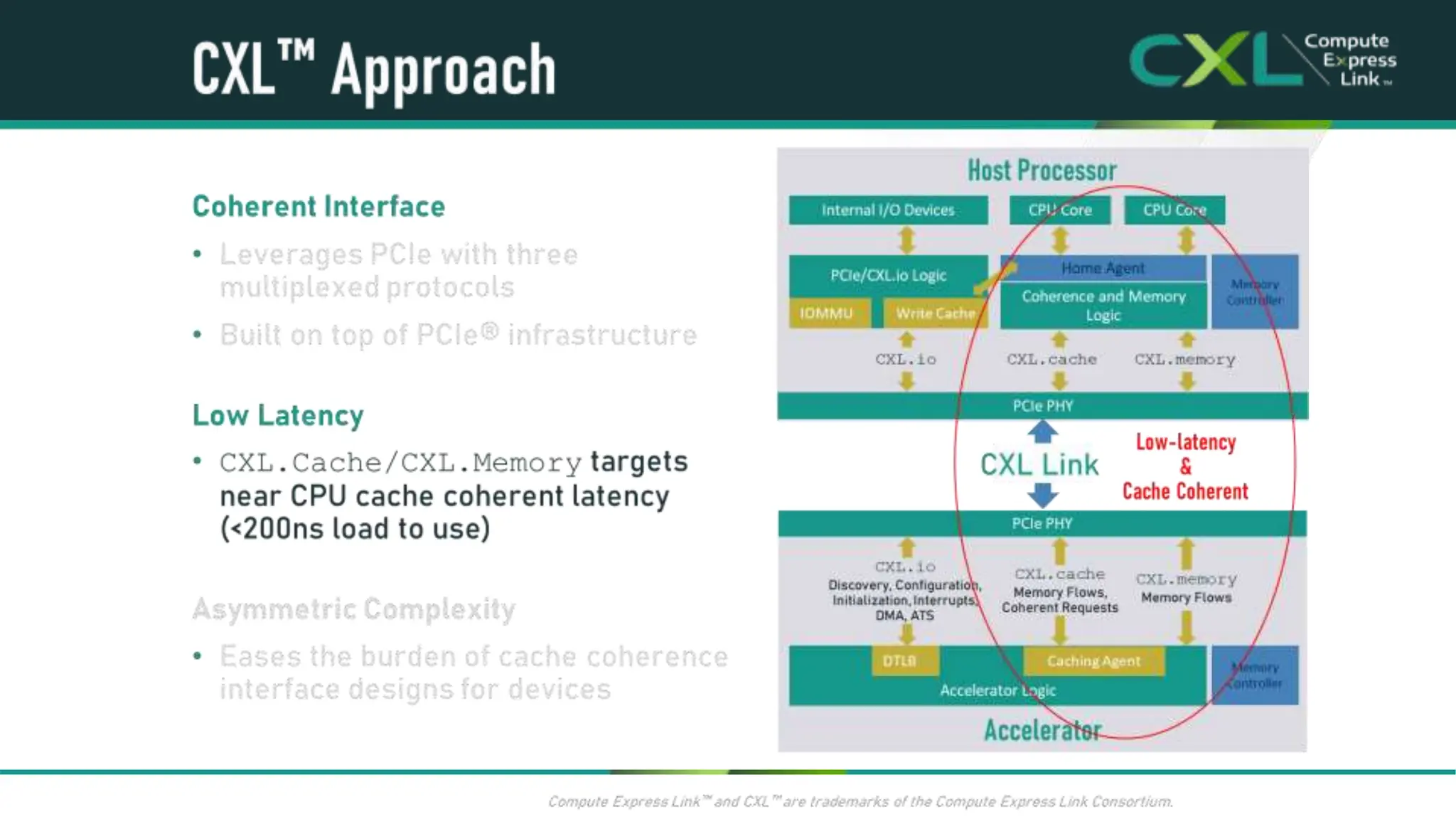

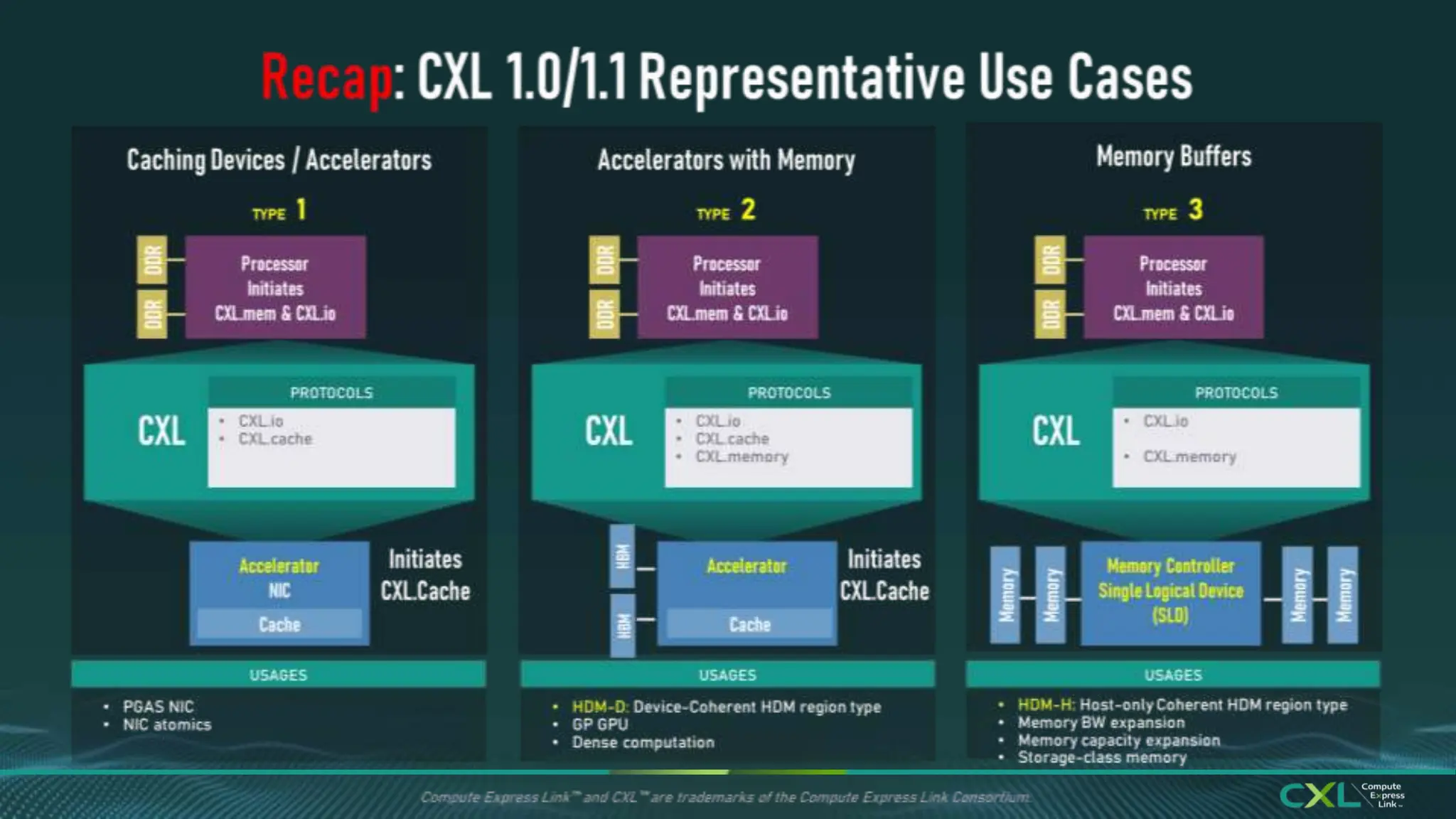

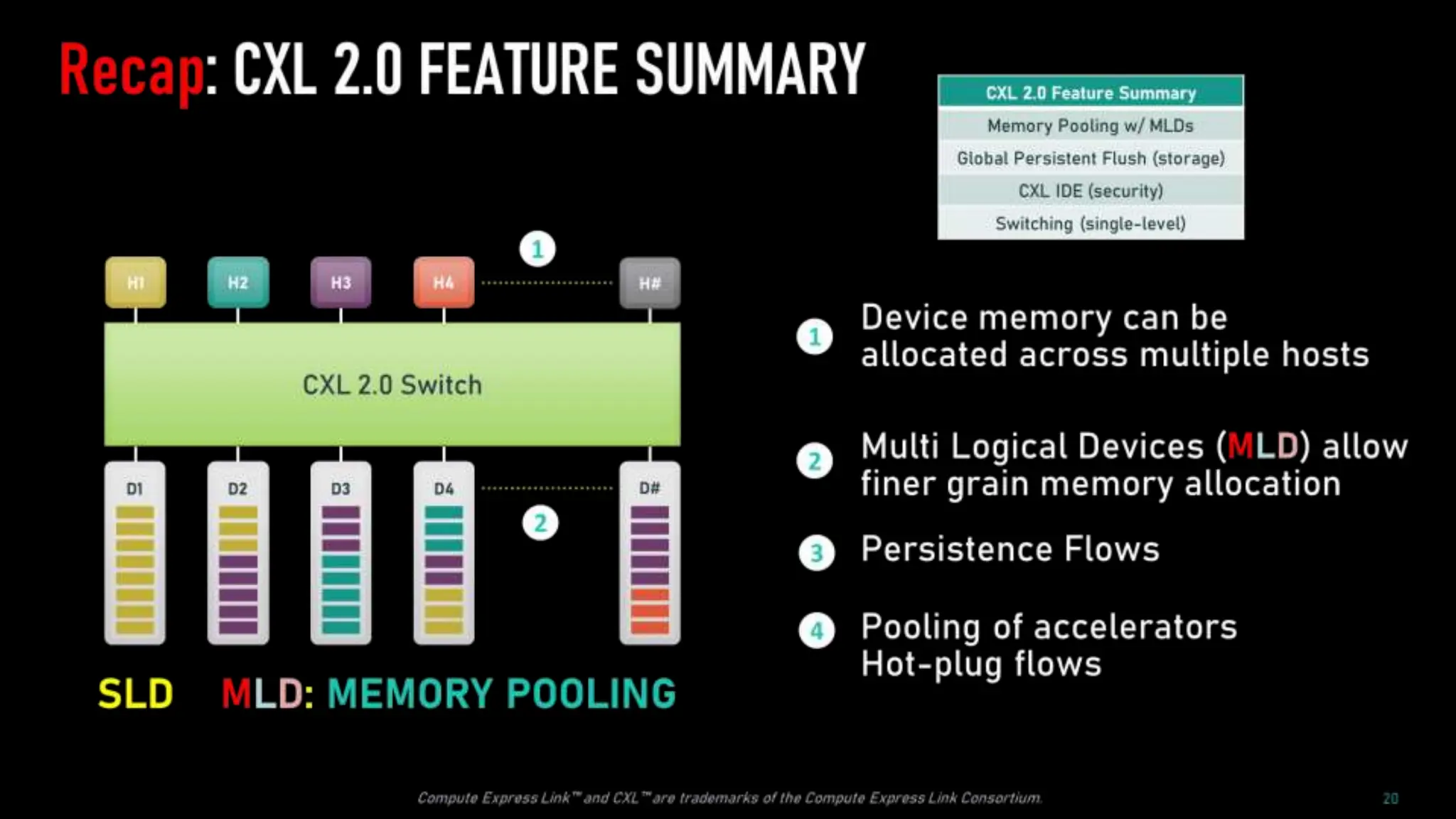

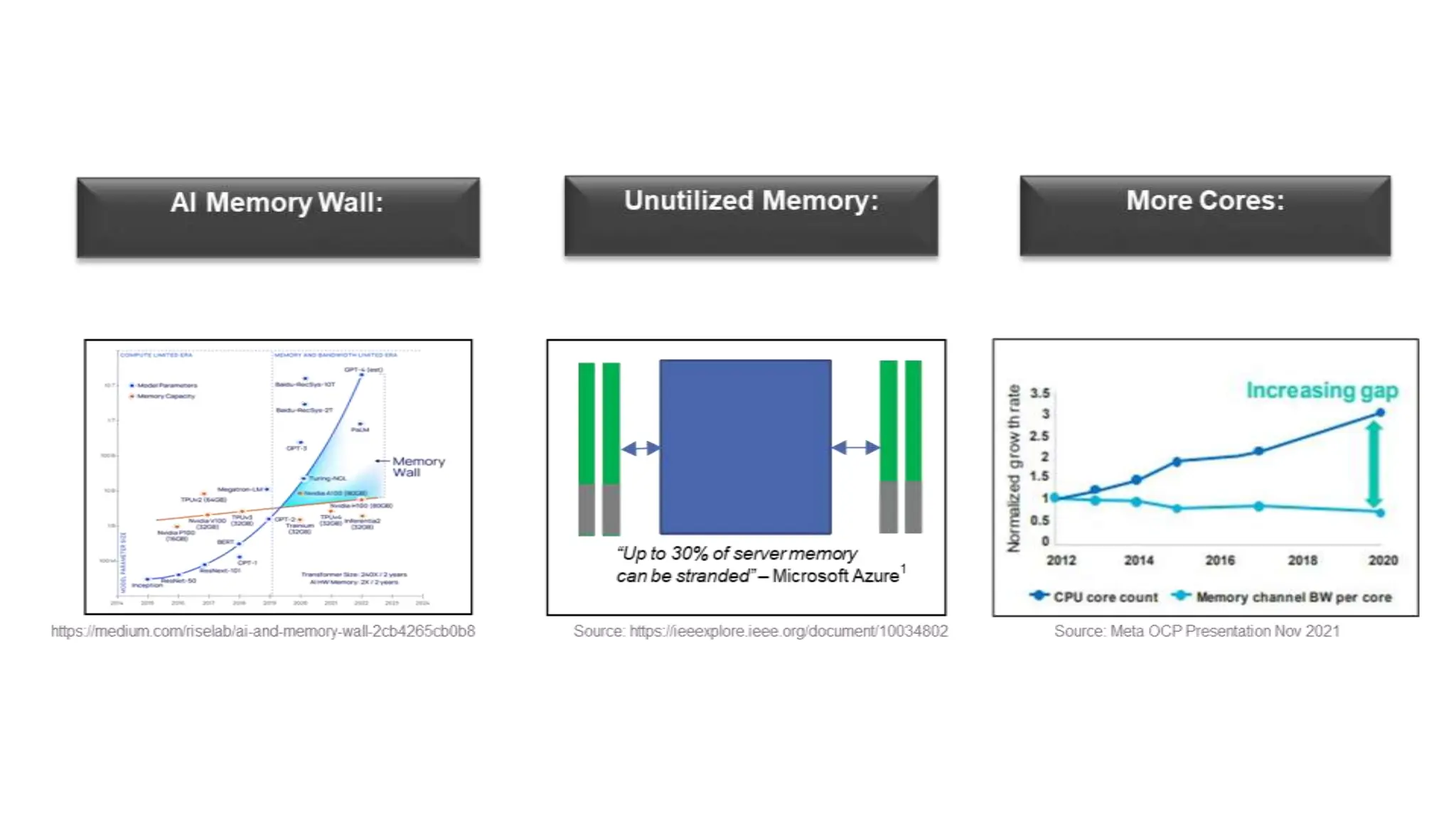

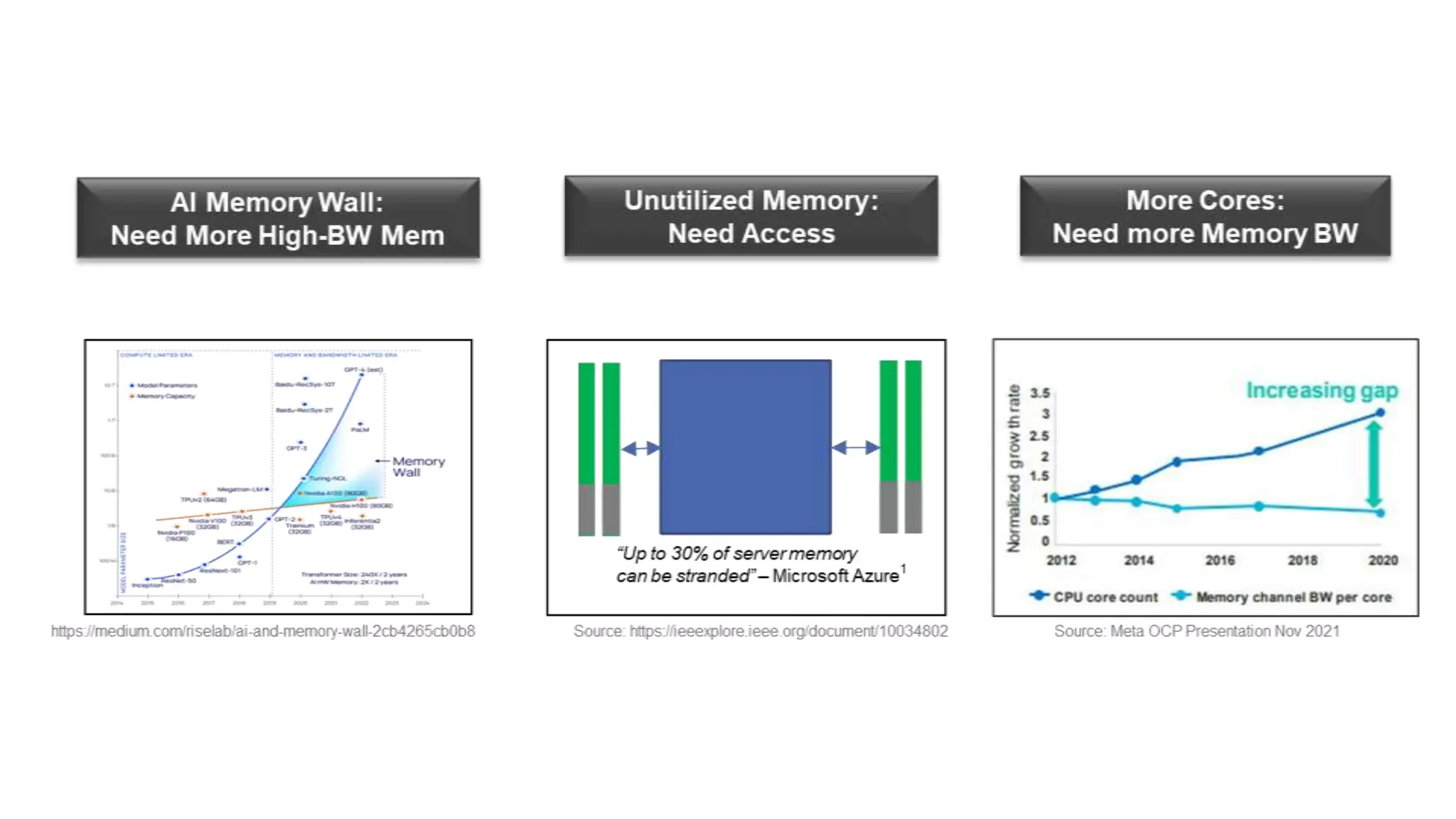

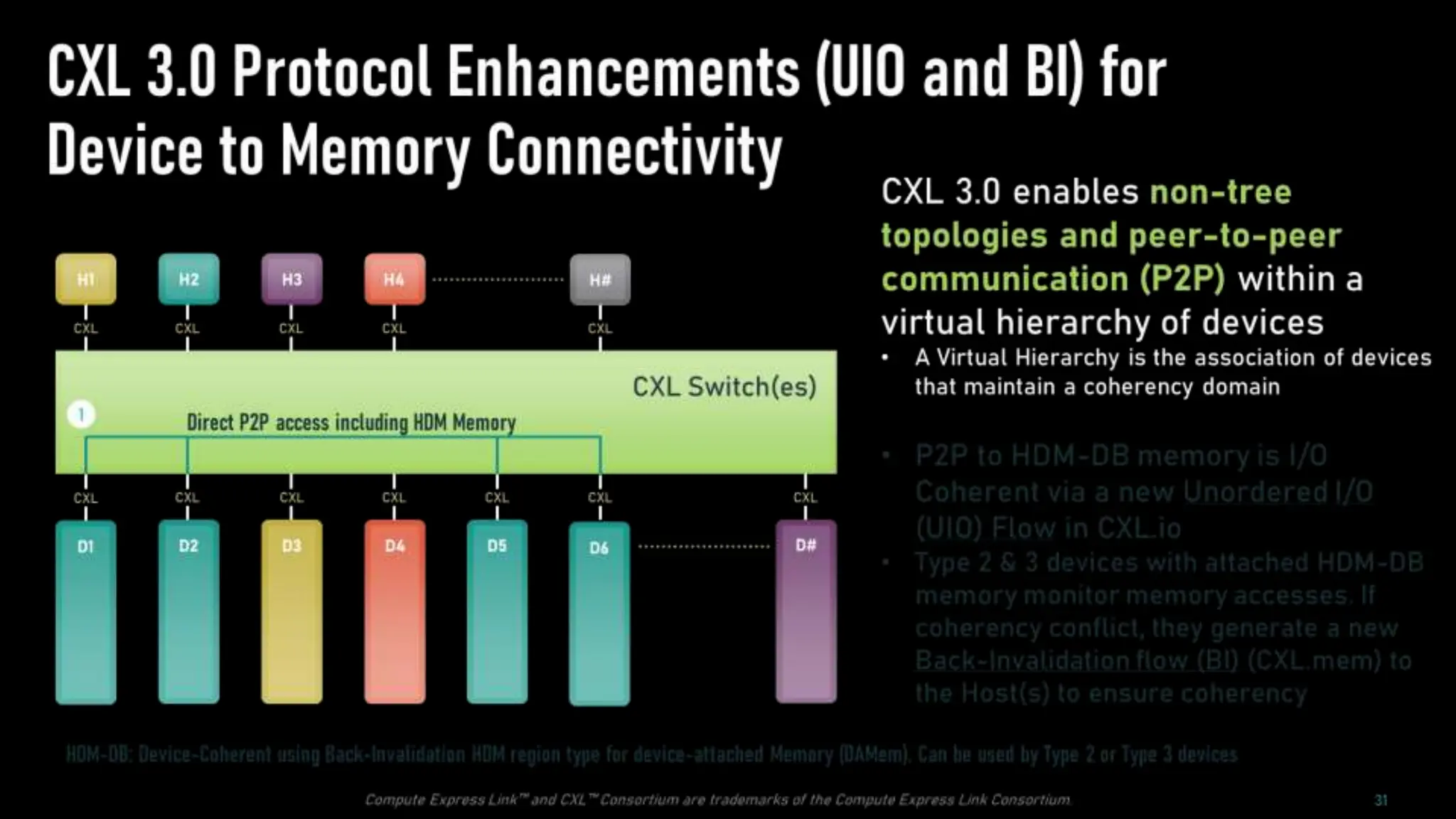

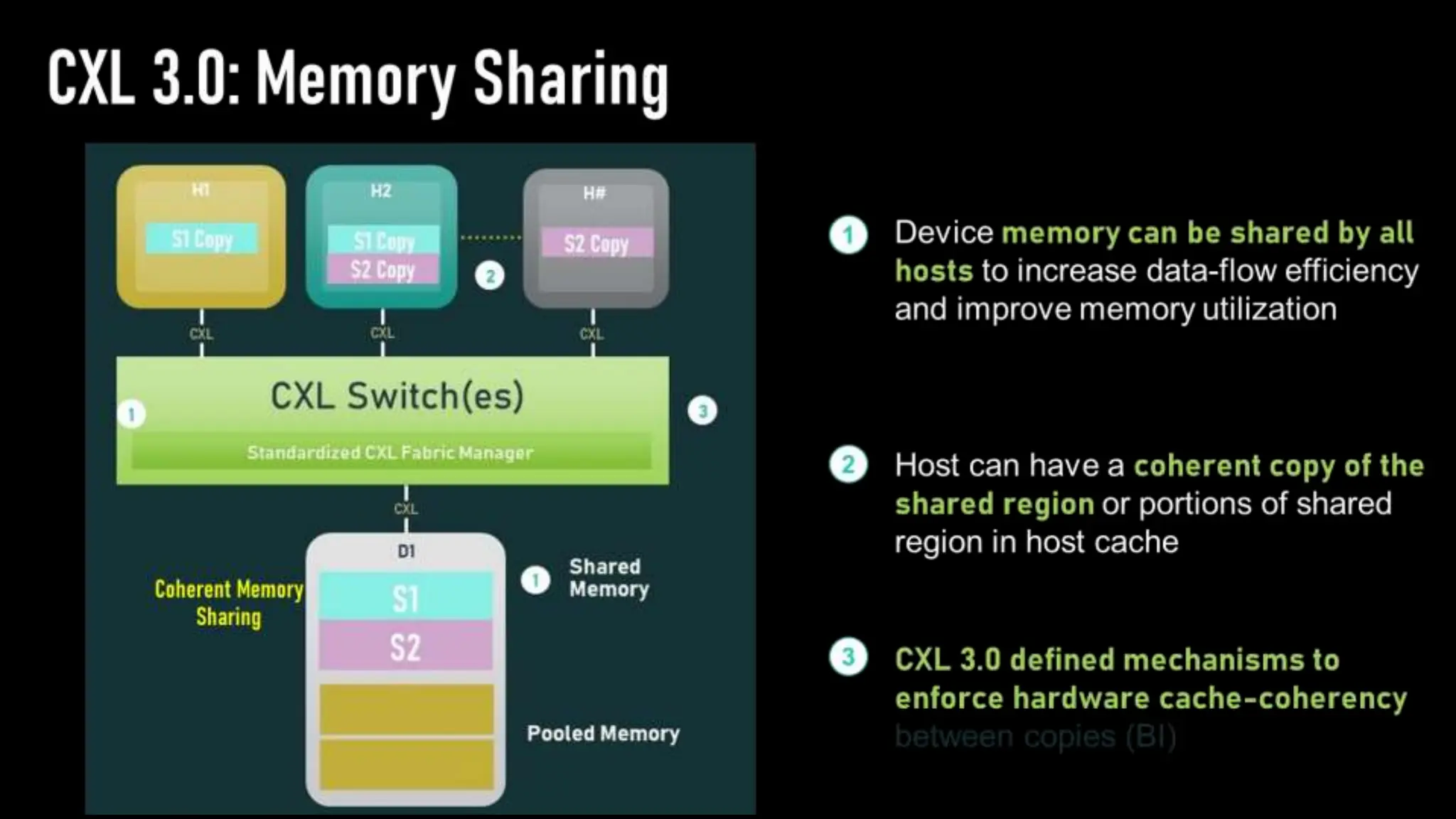

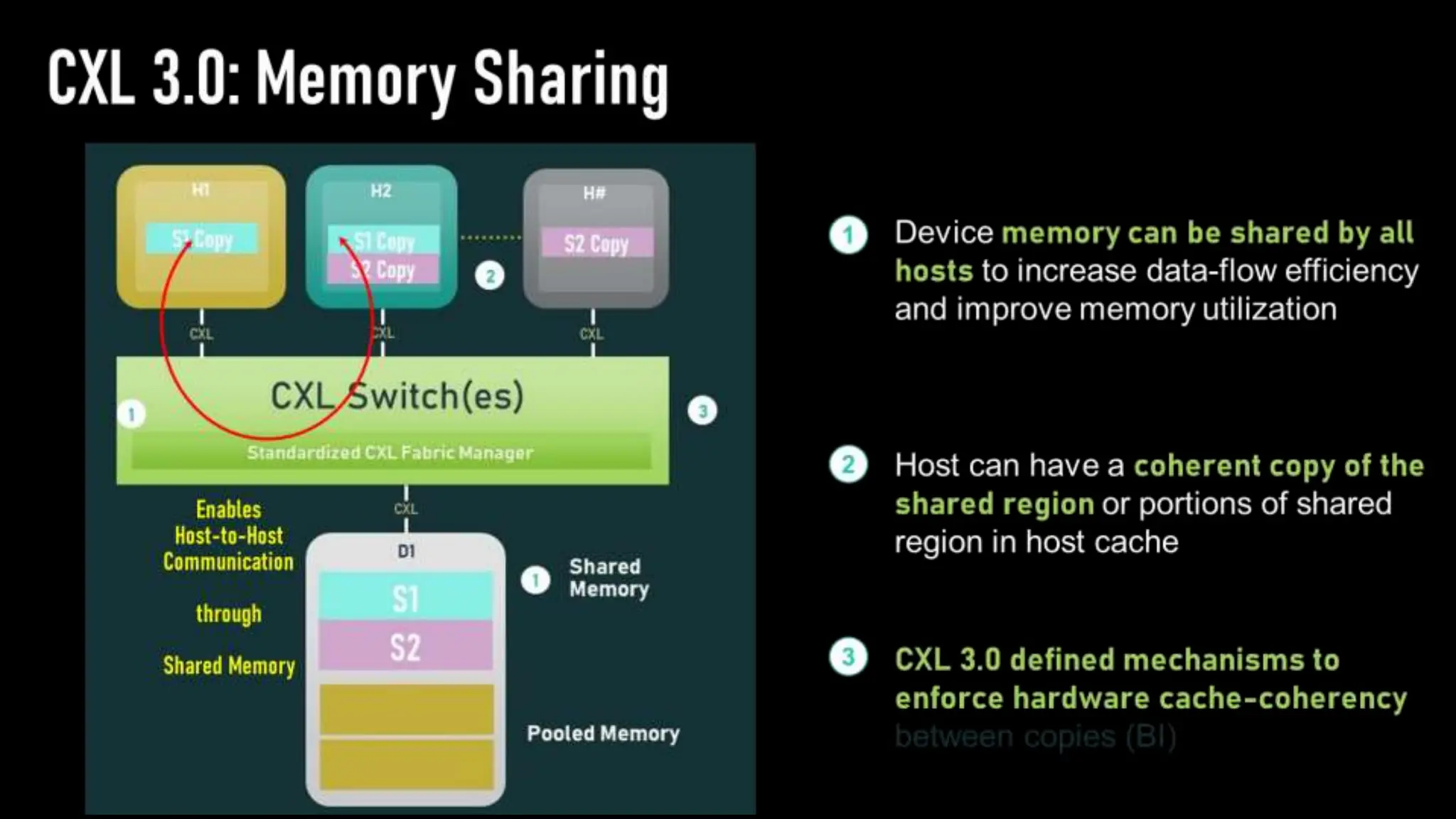

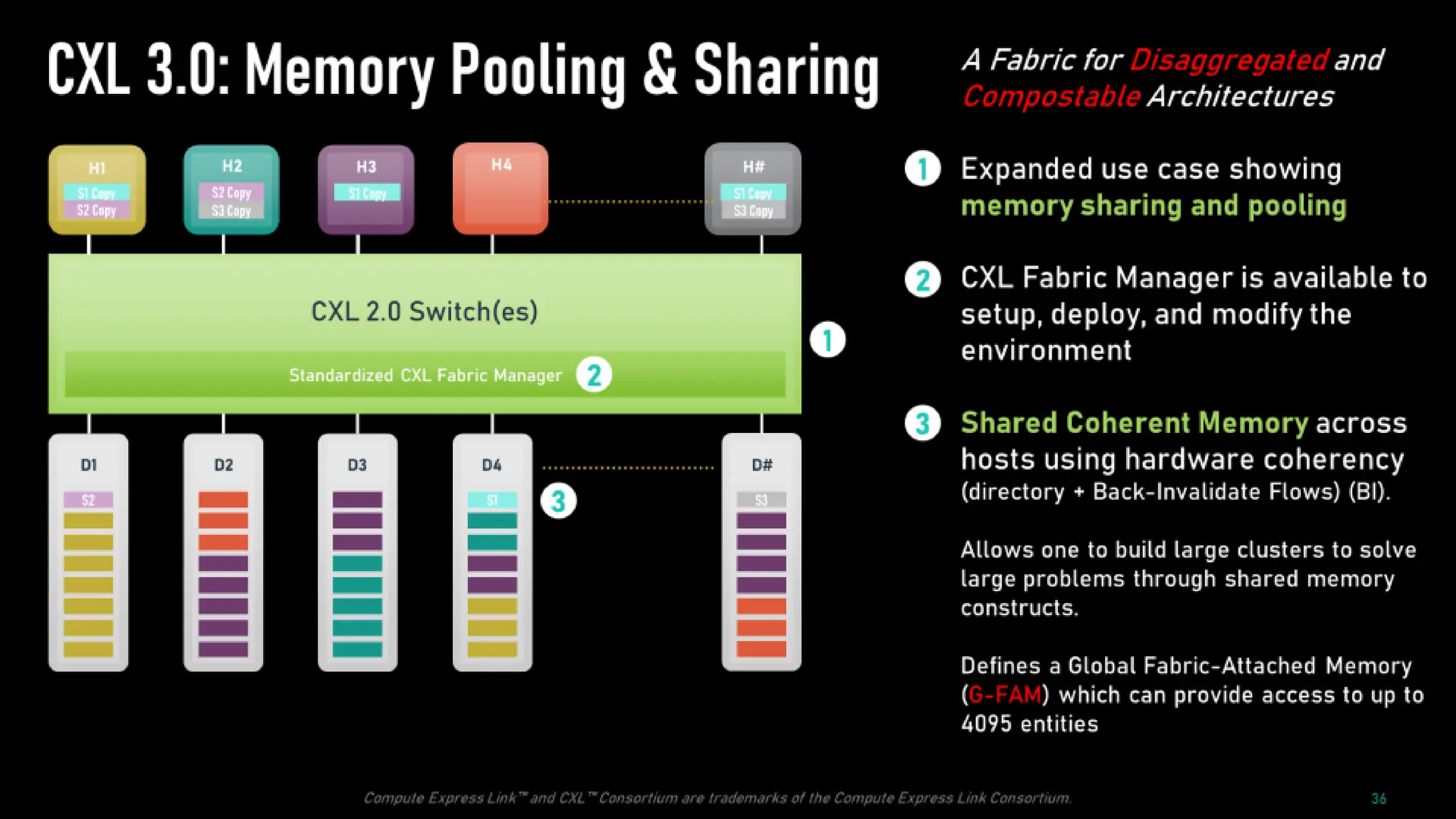

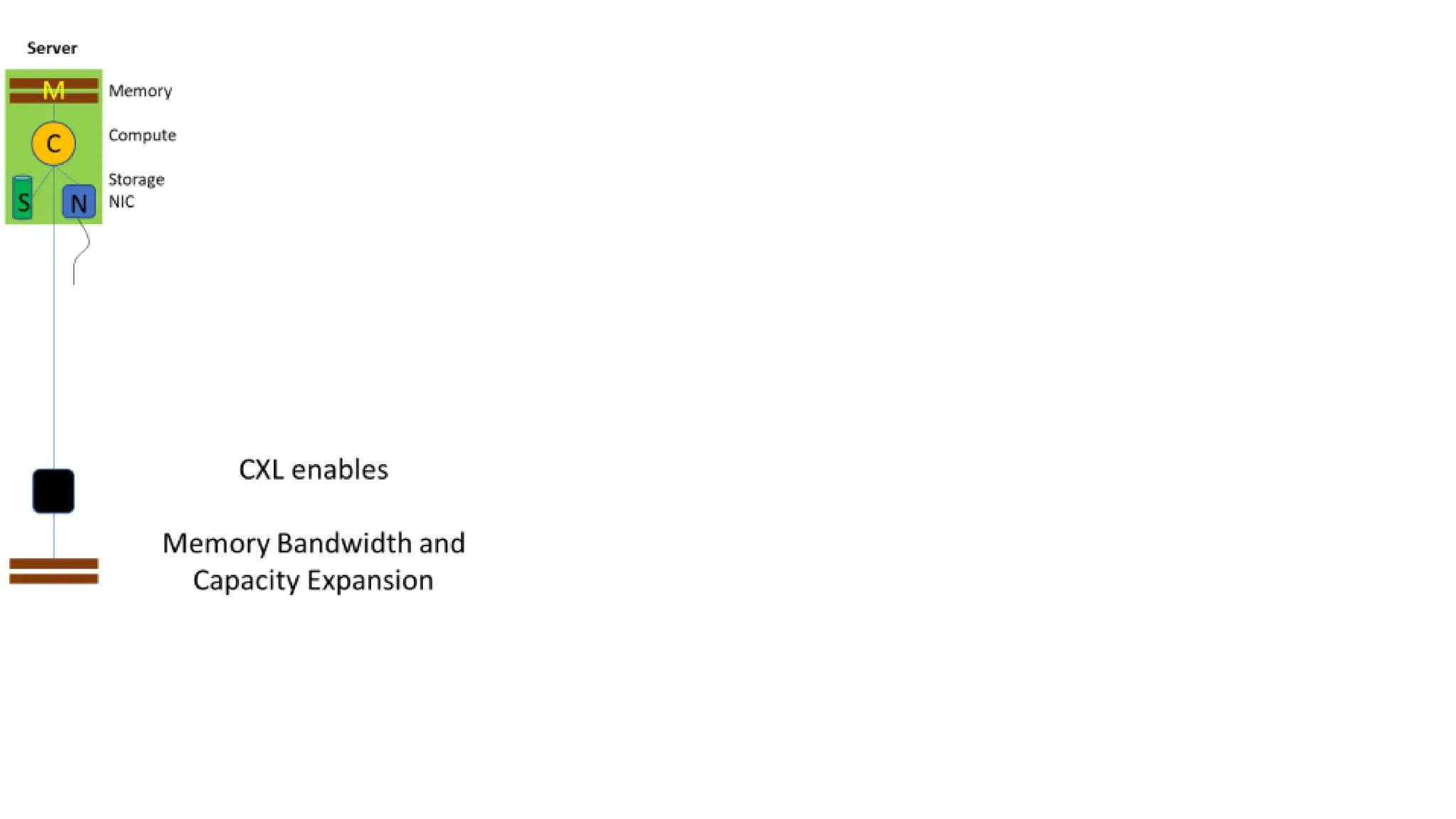

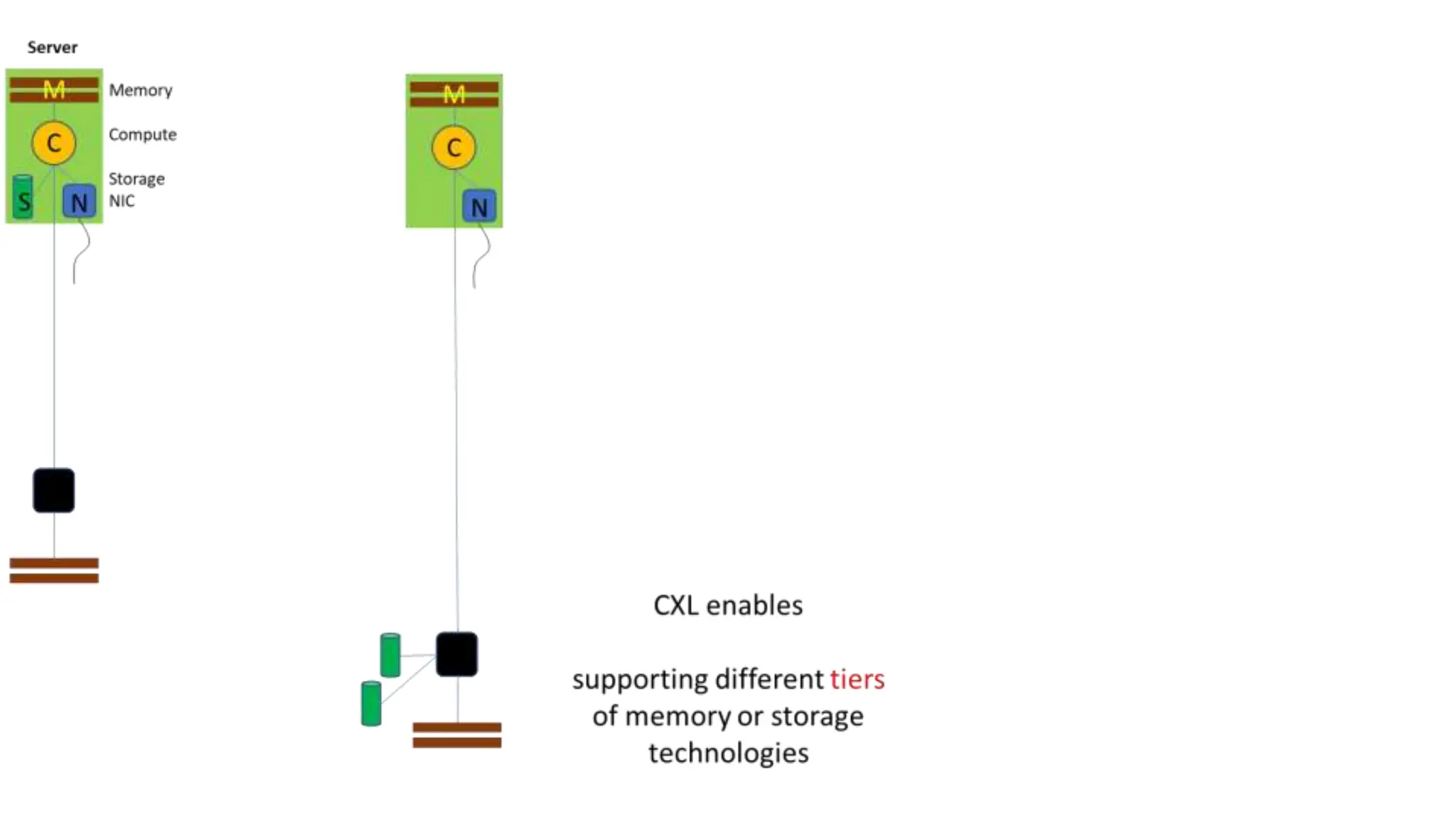

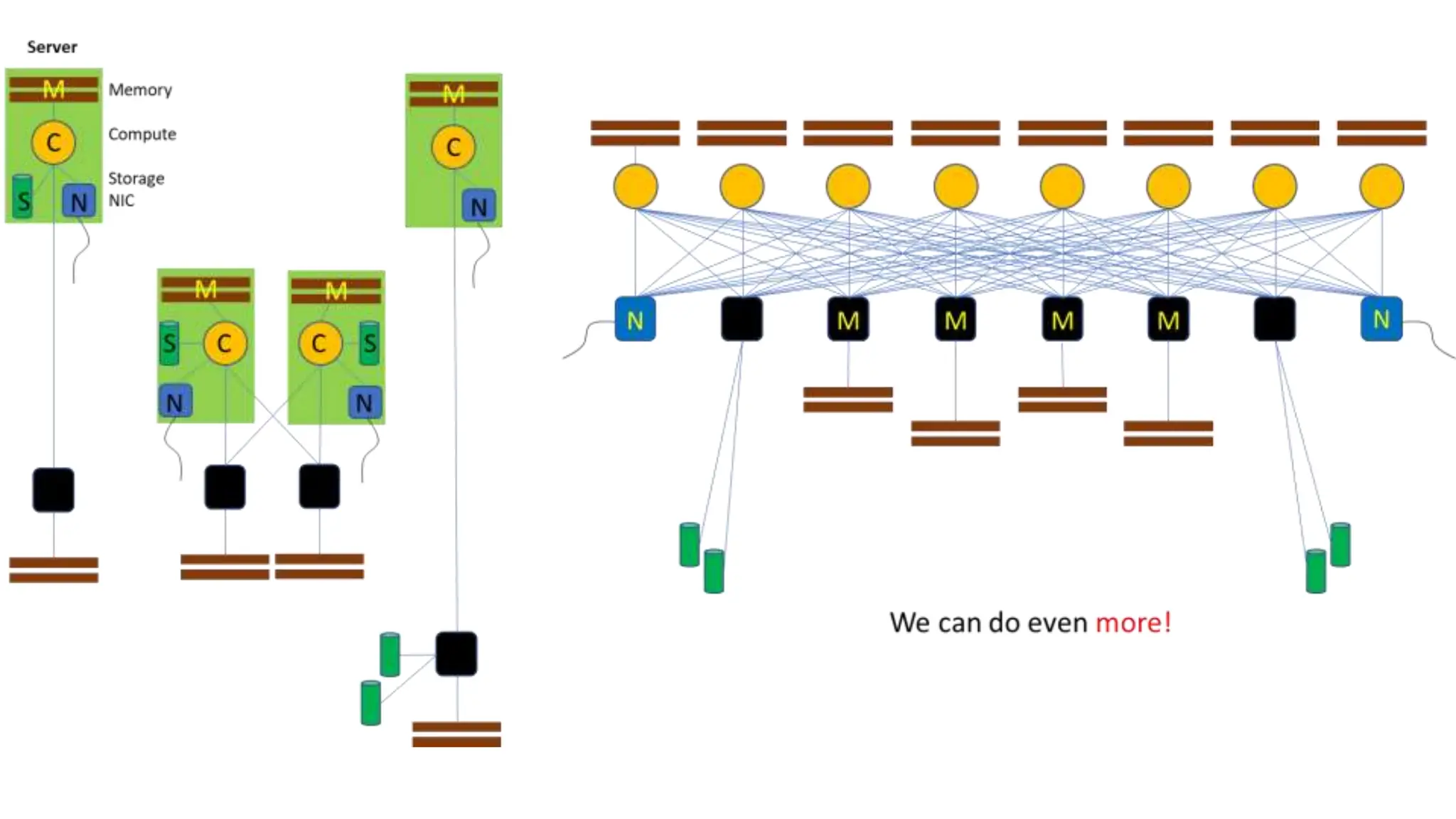

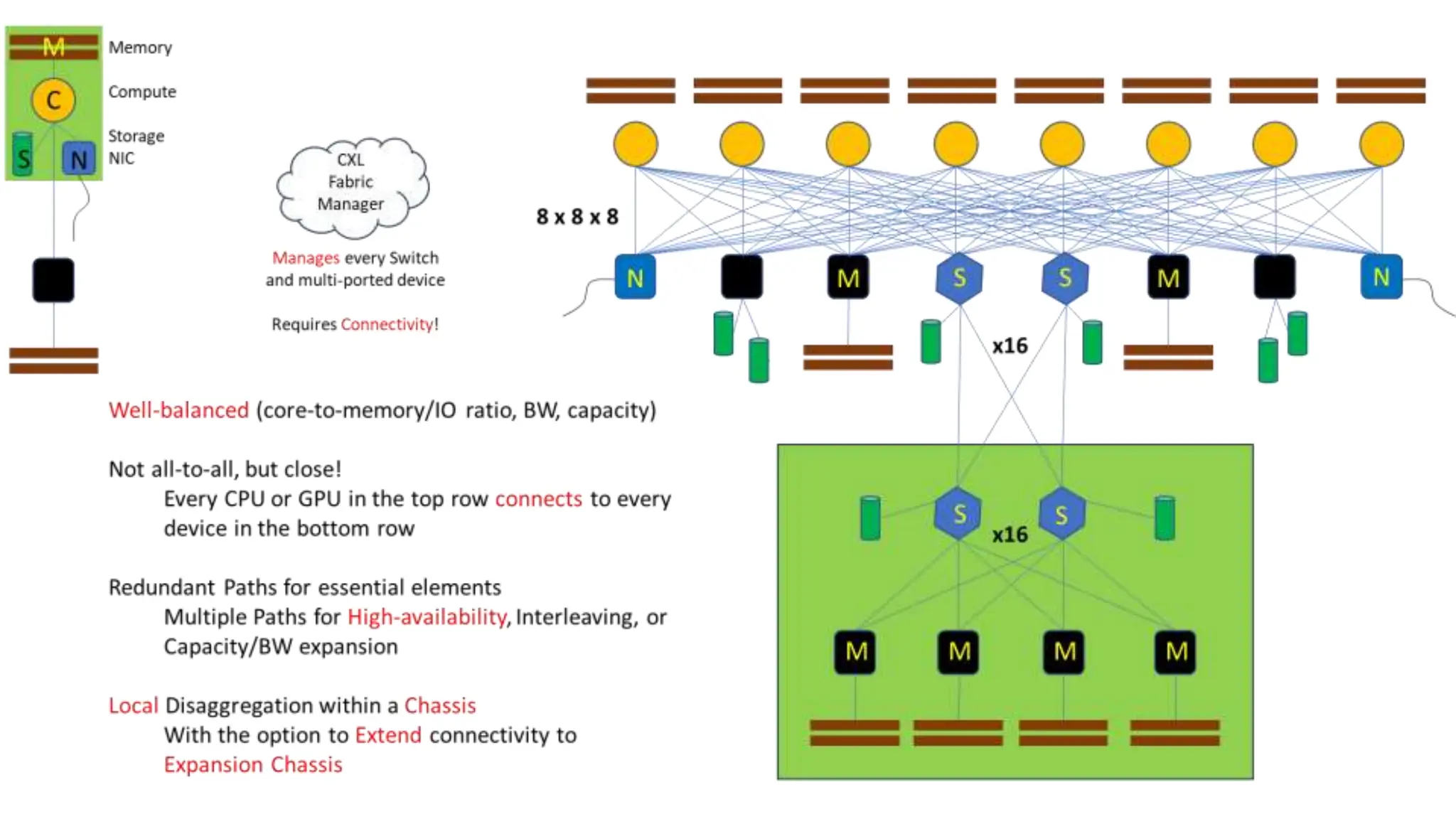

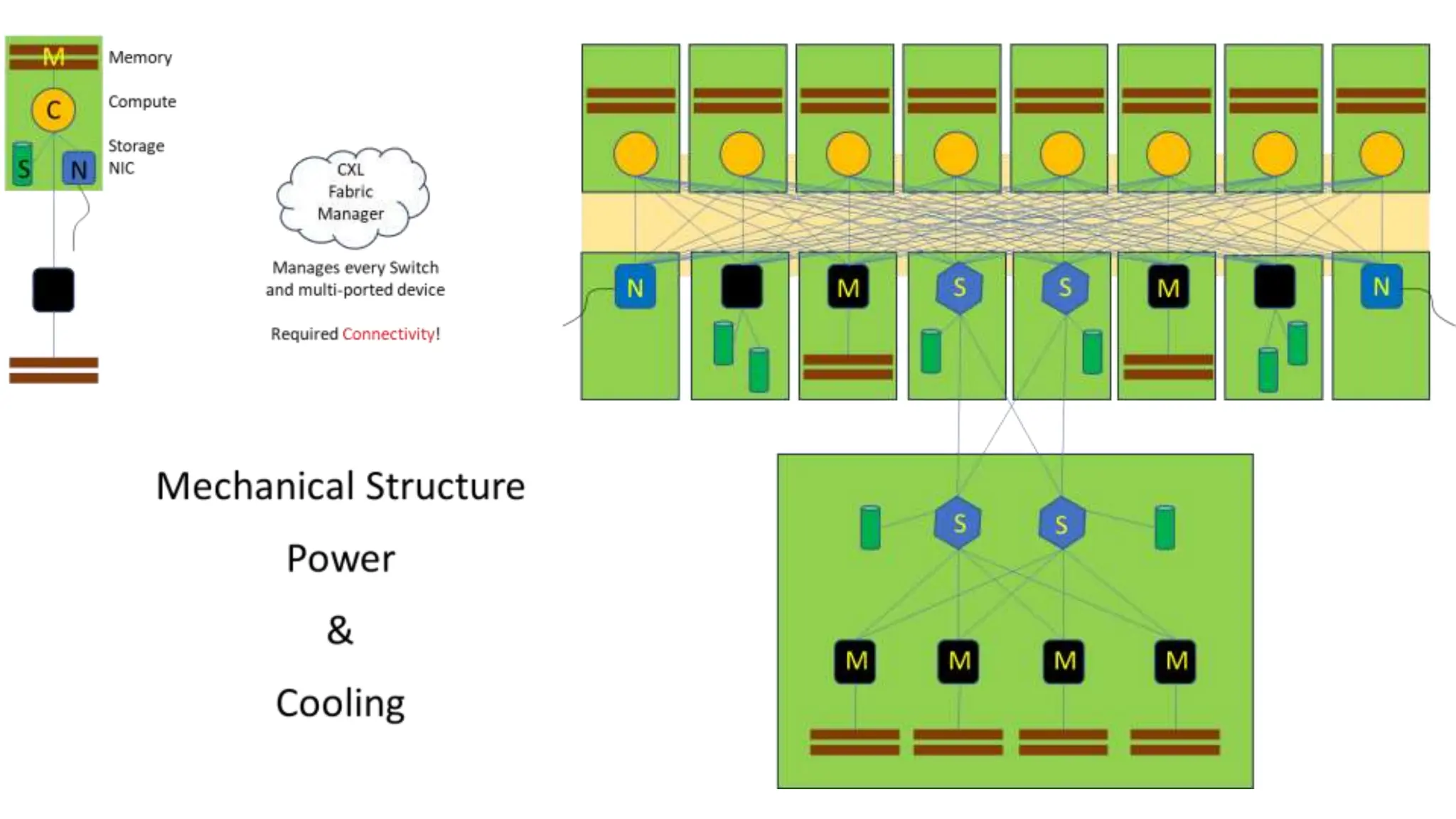

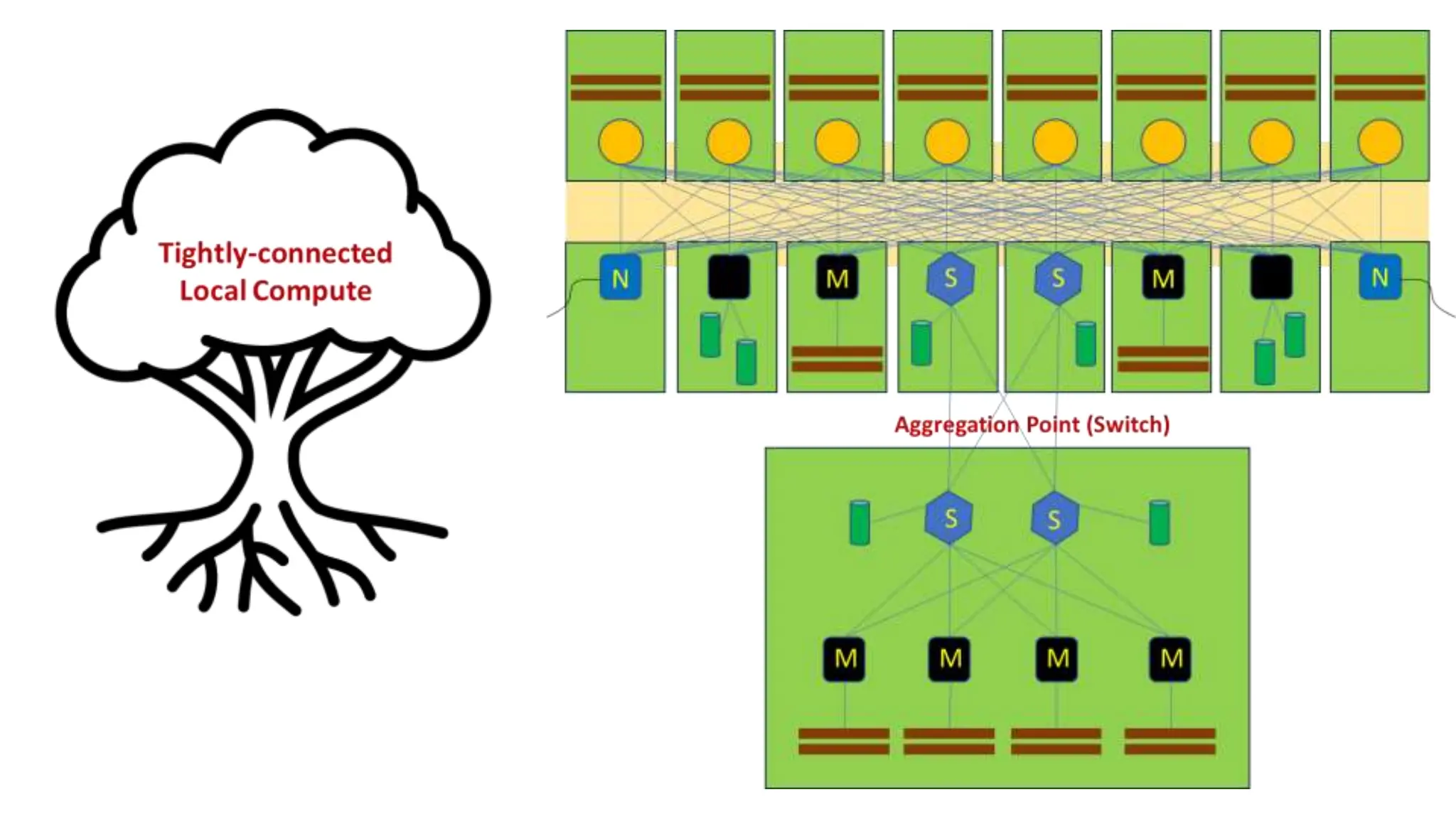

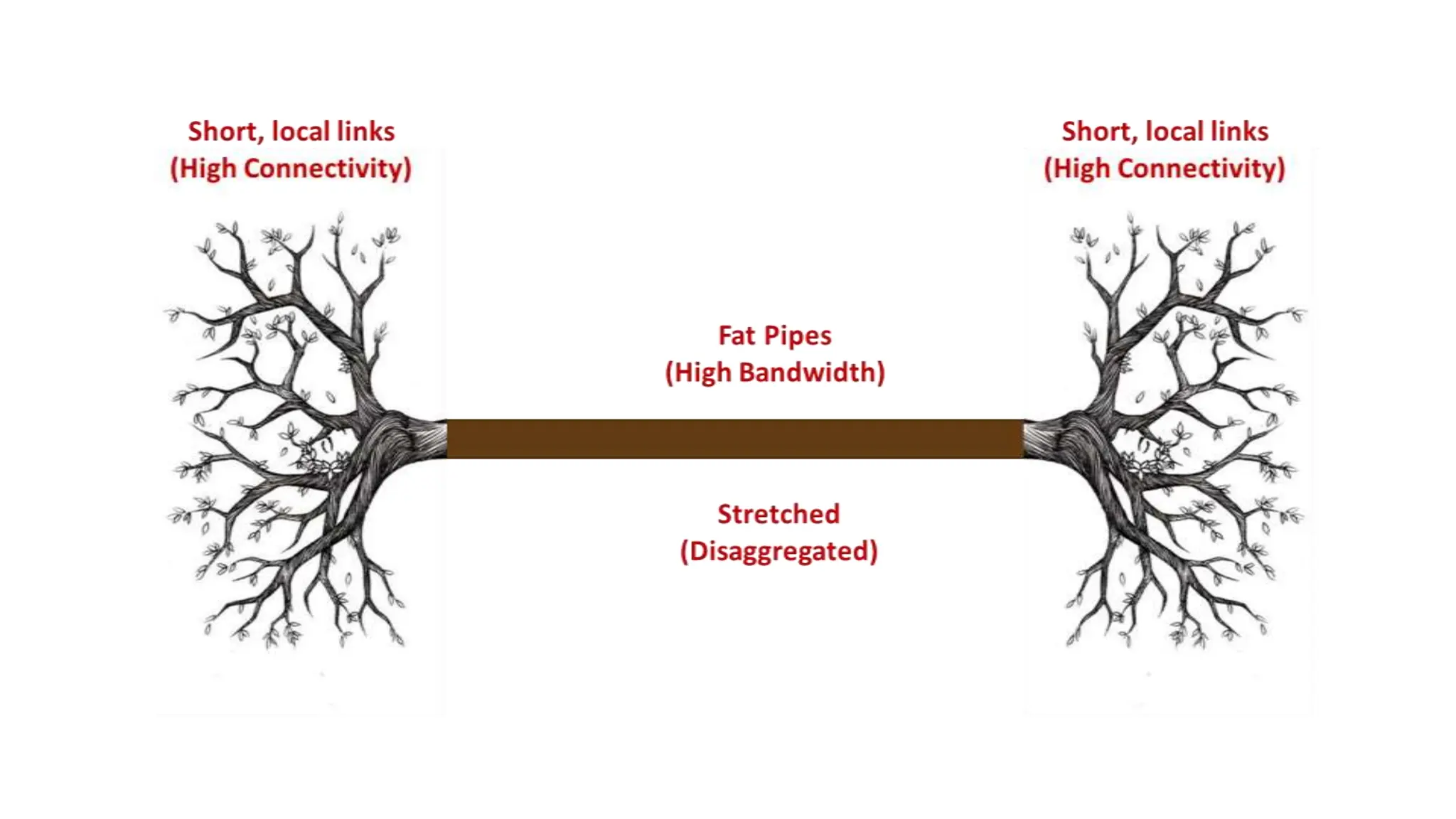

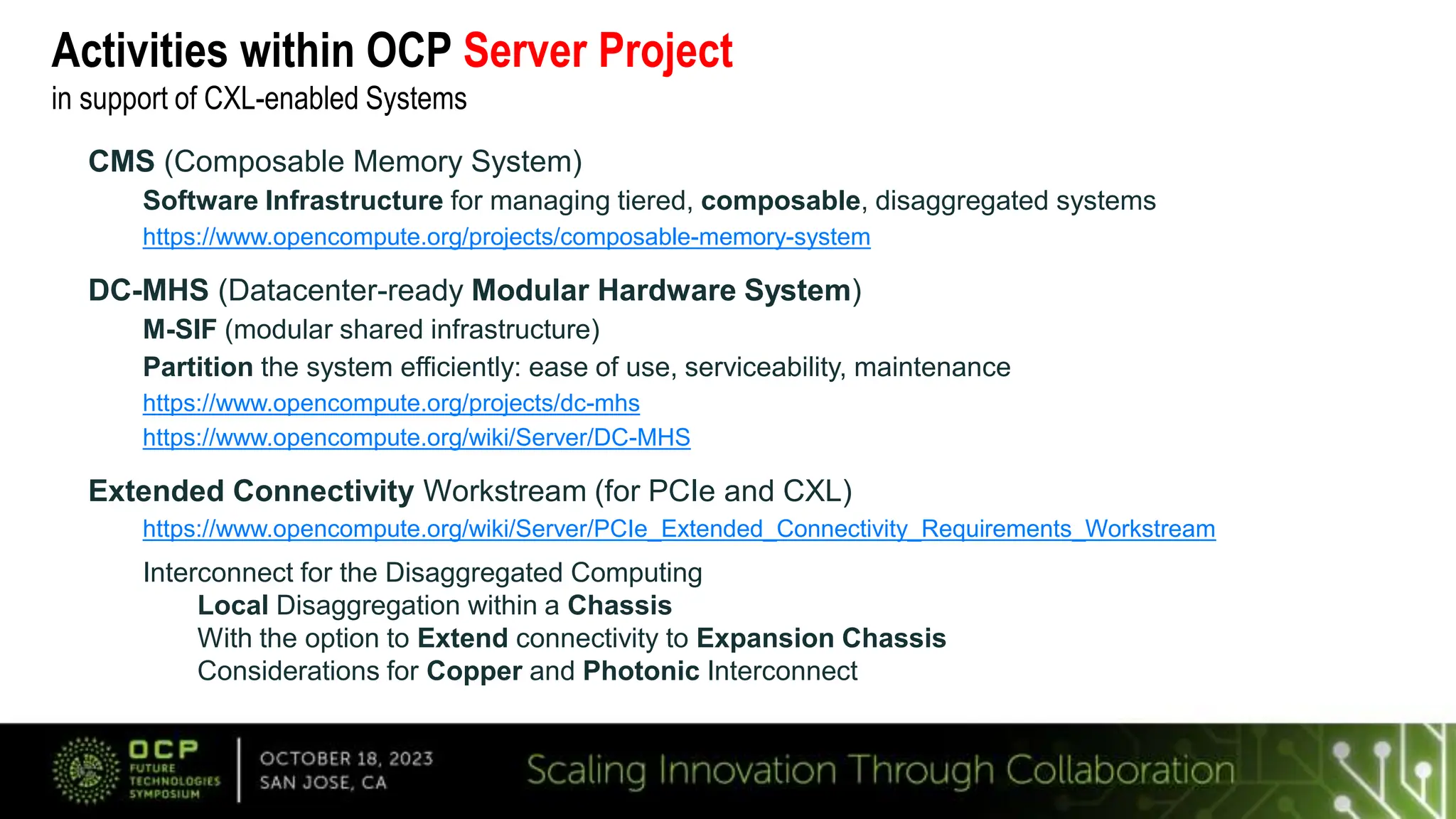

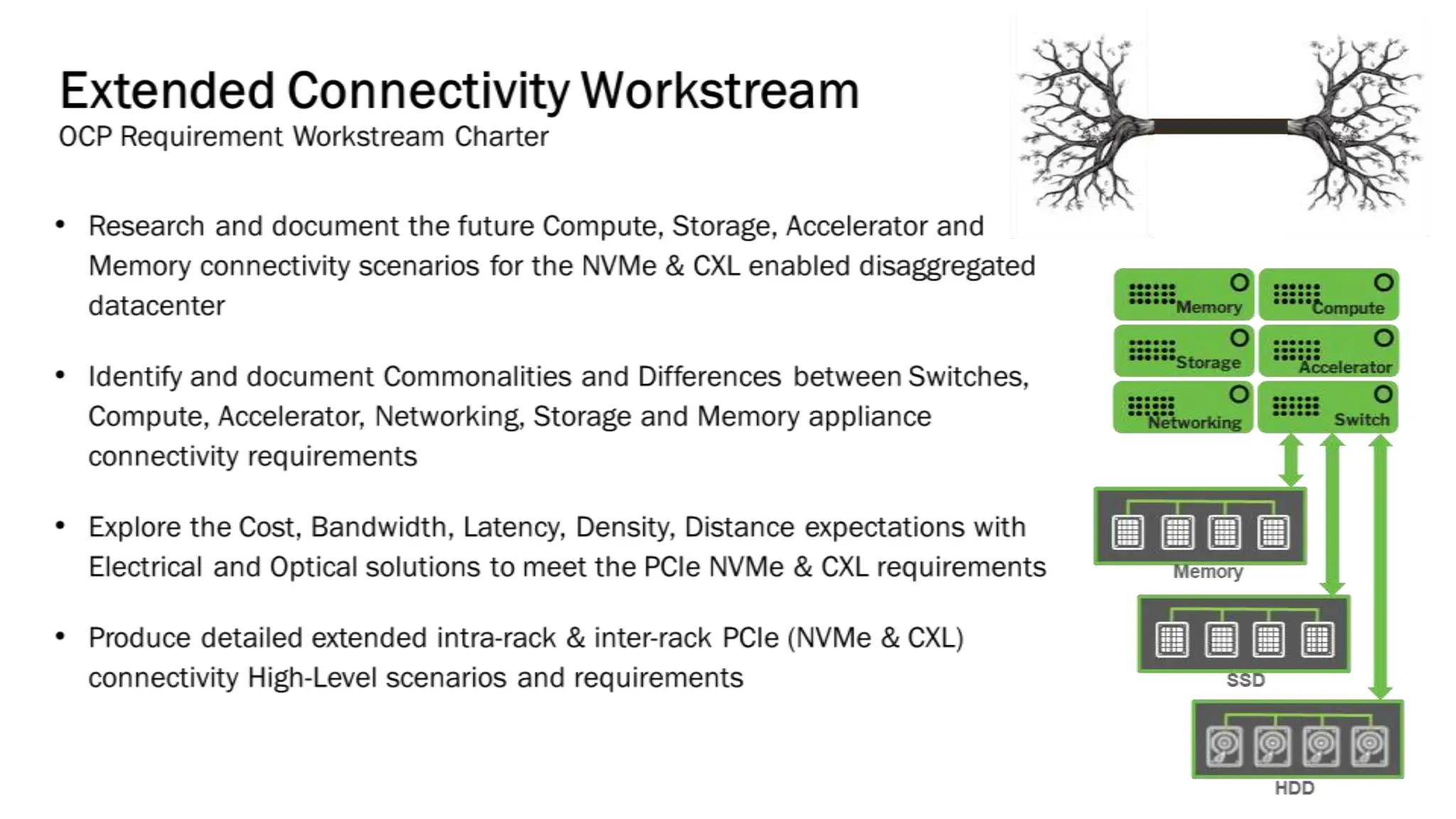

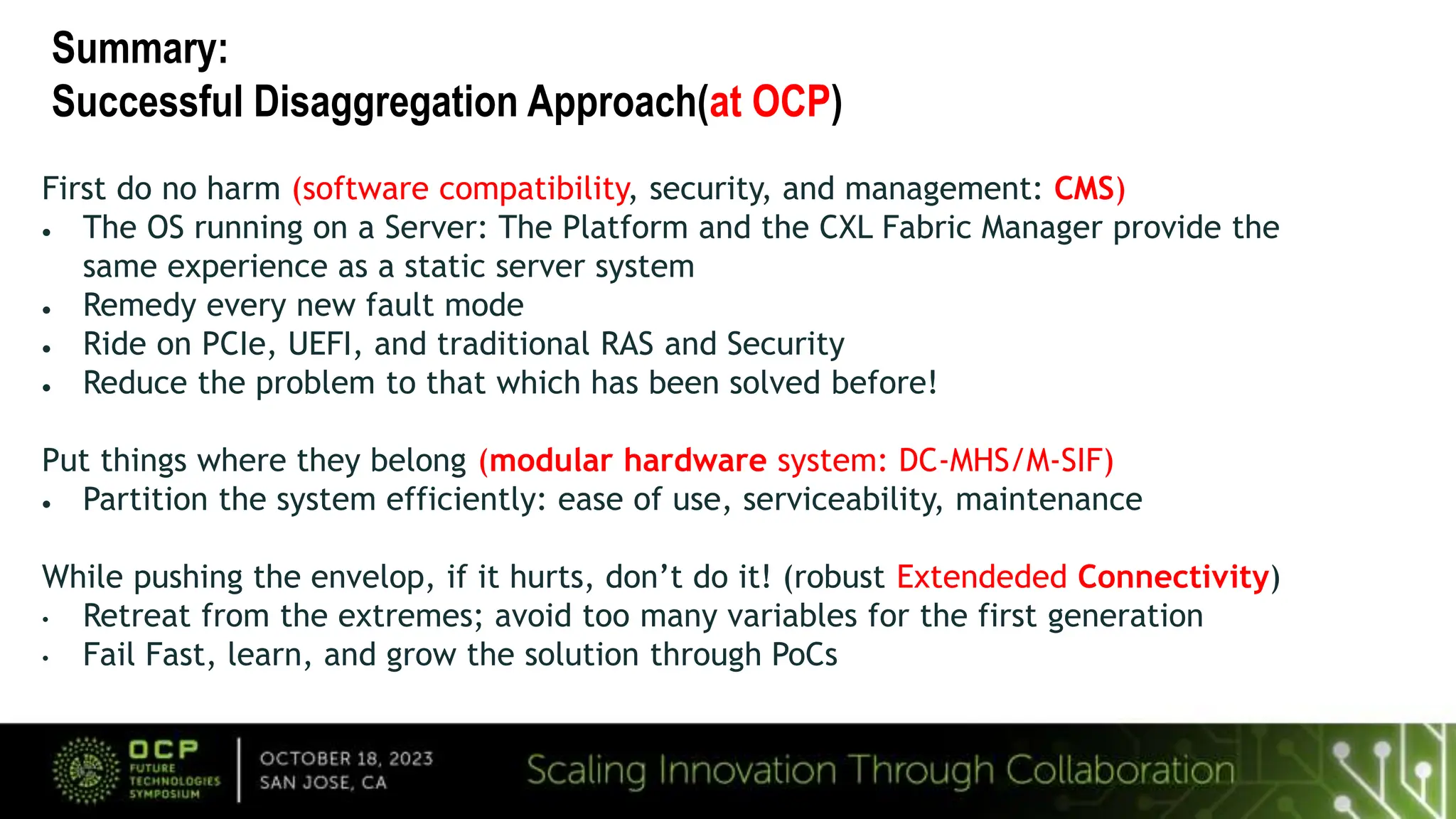

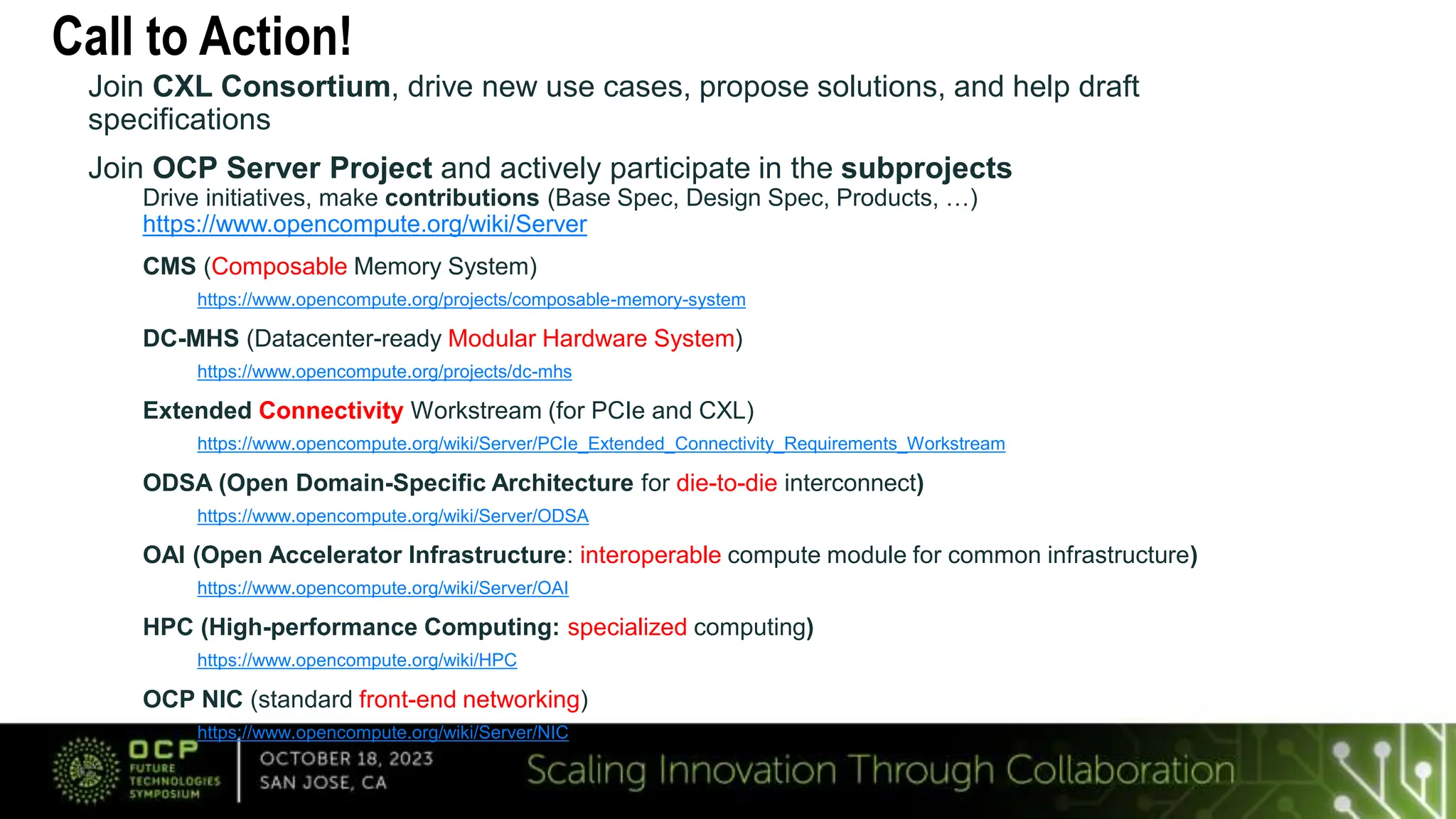

The document outlines the current state and activities of the CXL (Compute Express Link) and OCP (Open Compute Project) consortia. It highlights CXL's adoption by more than 250 companies as a standard for efficient data movement in modular computing systems. It discusses system design challenges, advancements in the CXL specifications, and the need for interoperability and software compatibility in a disaggregated computing environment. It also includes calls to action for participation in CXL and OCP initiatives to drive new use cases and specifications.