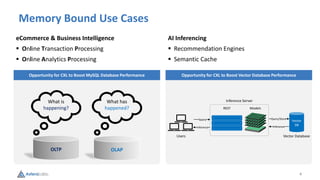

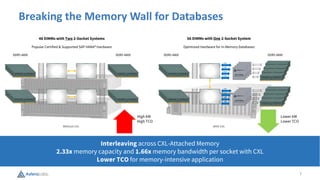

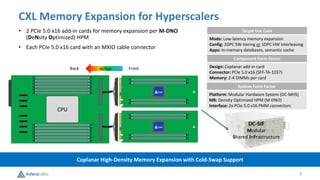

The document discusses advances in memory technology that use CXL (Compute Express Link) to address constraints on memory bandwidth and latency, as well as integration complexity. It highlights the potential of CXL to significantly improve server memory performance and scalability, particularly for e-commerce, business intelligence, and AI workloads. It closes by calling for collaboration and engagement to maximize the benefits of CXL in modern computing infrastructure.