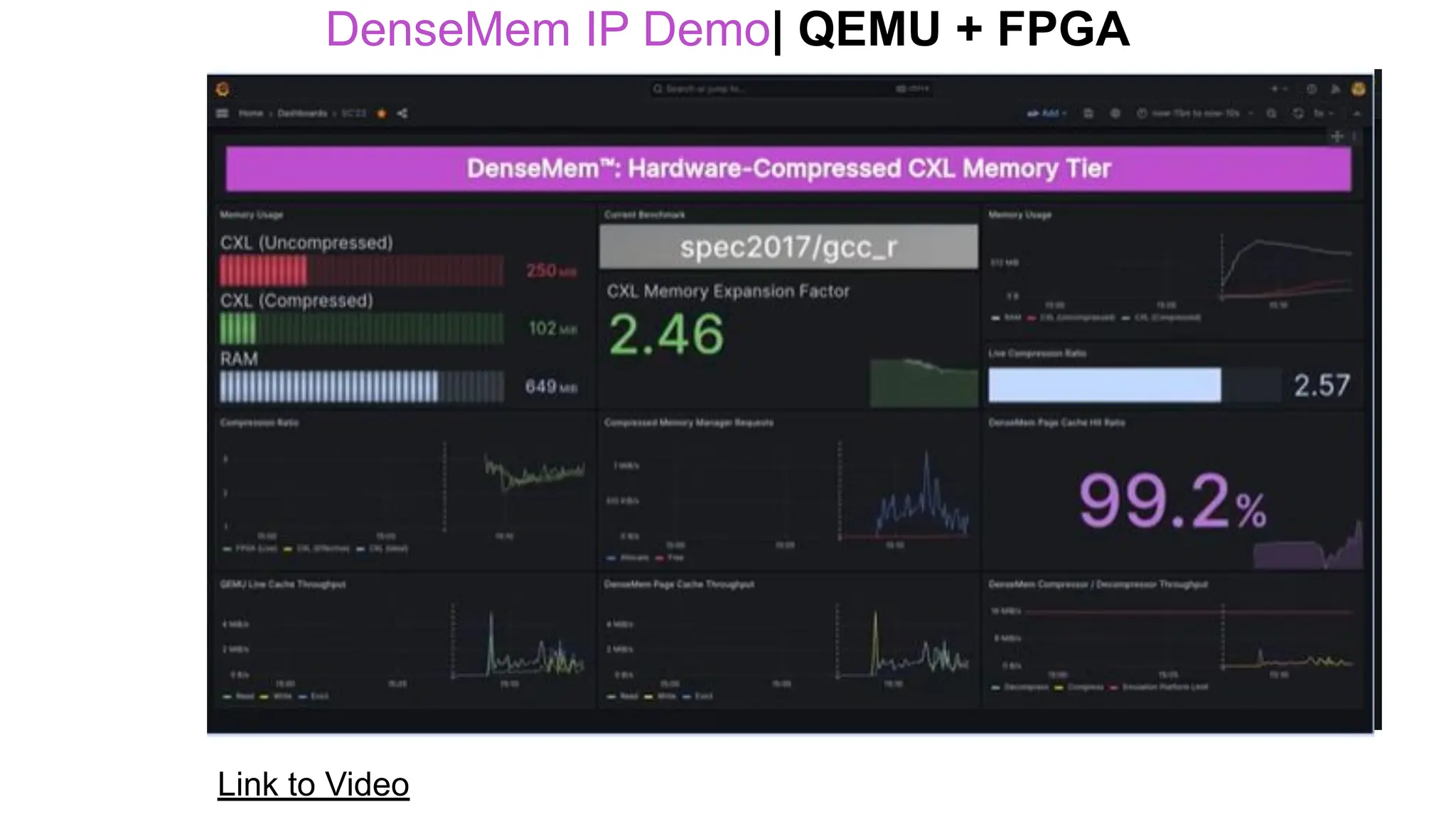

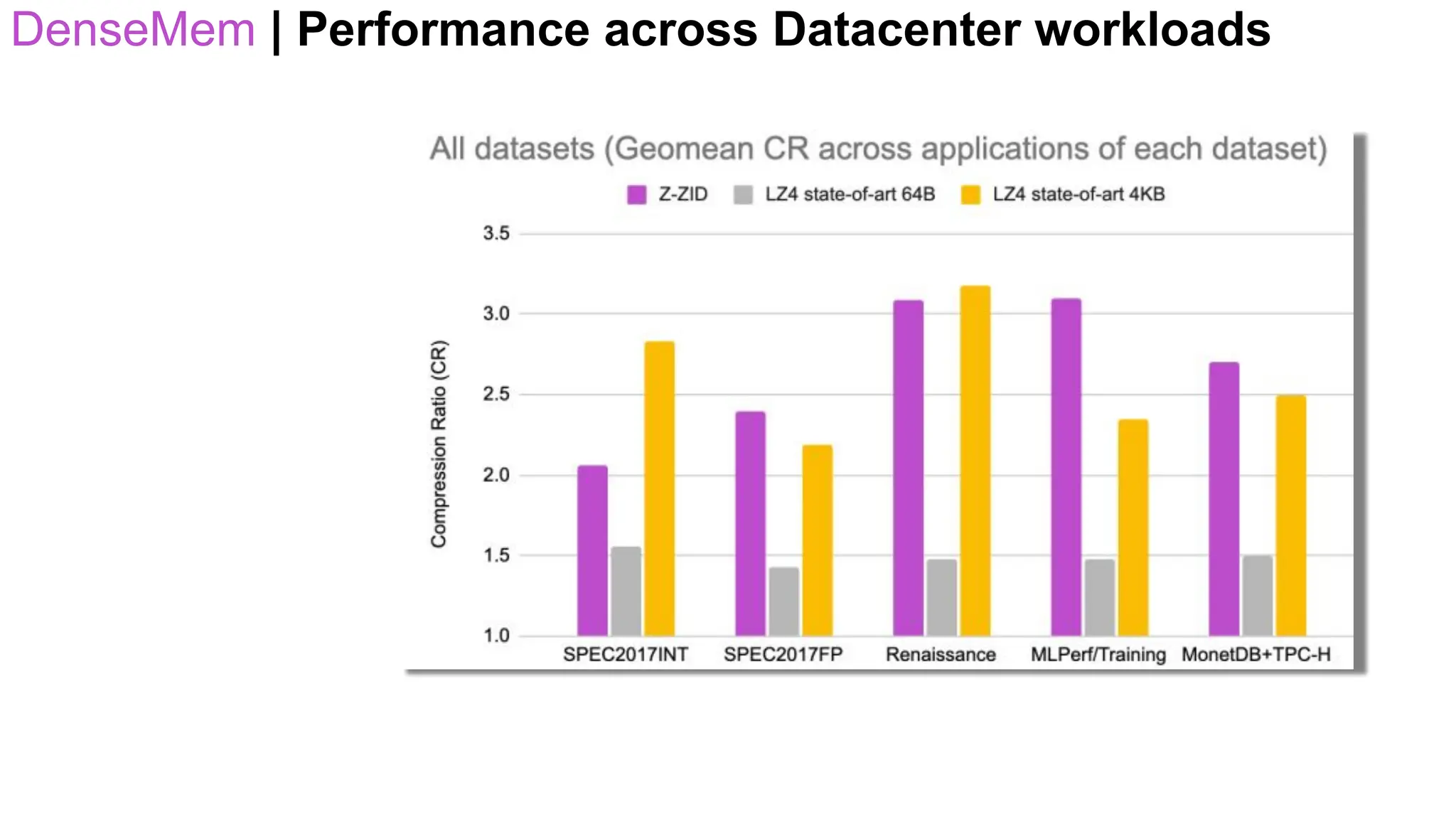

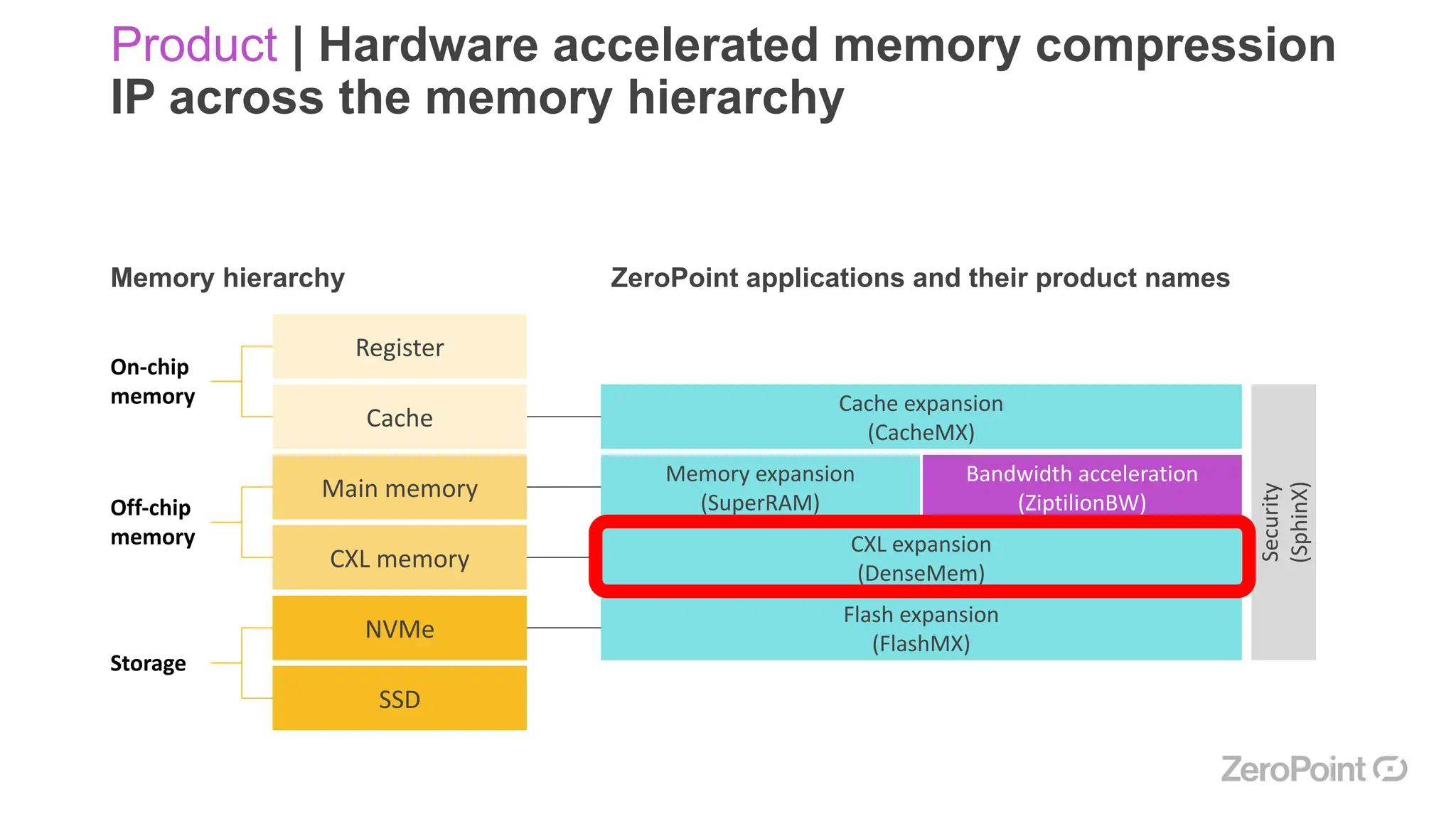

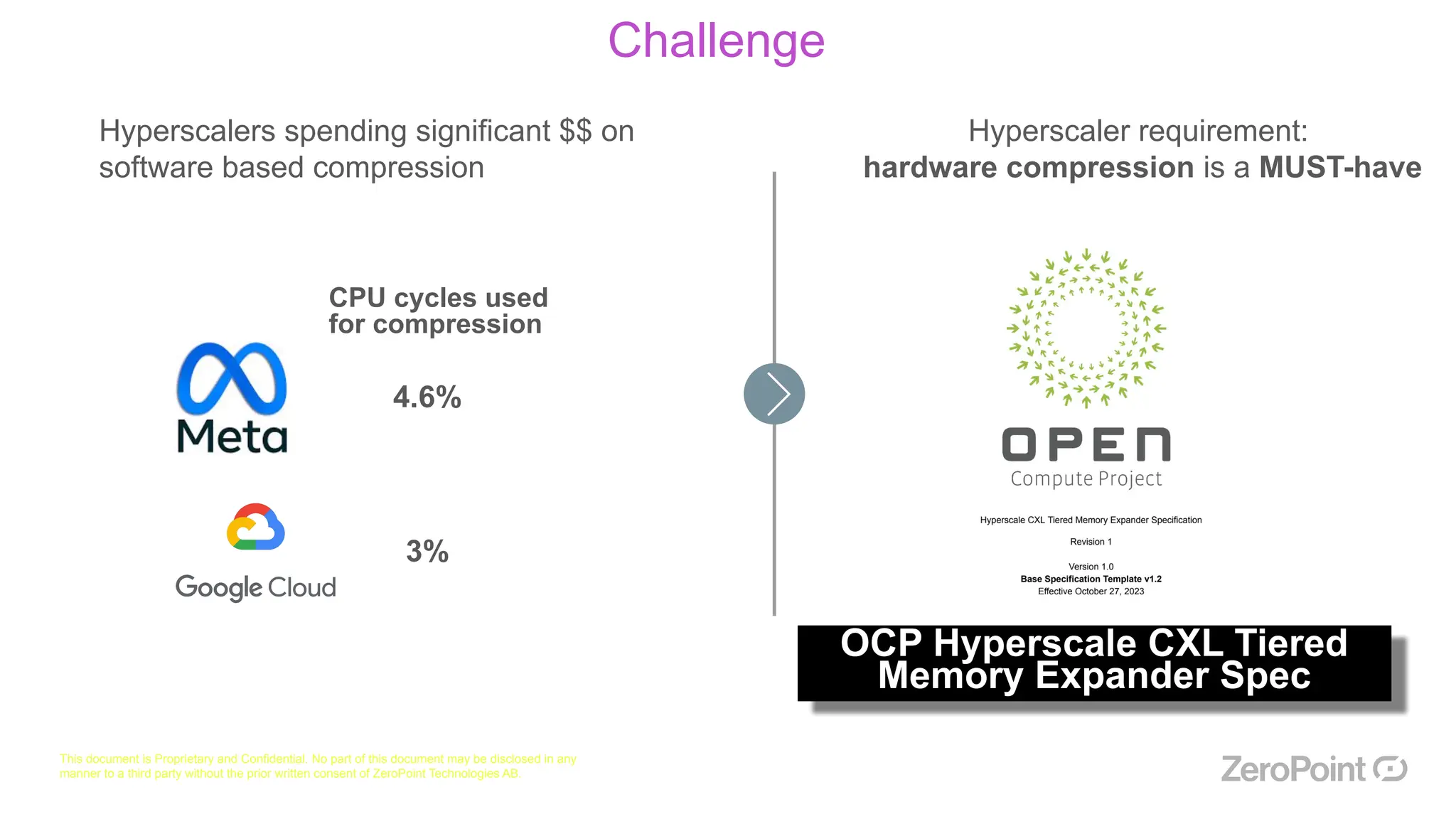

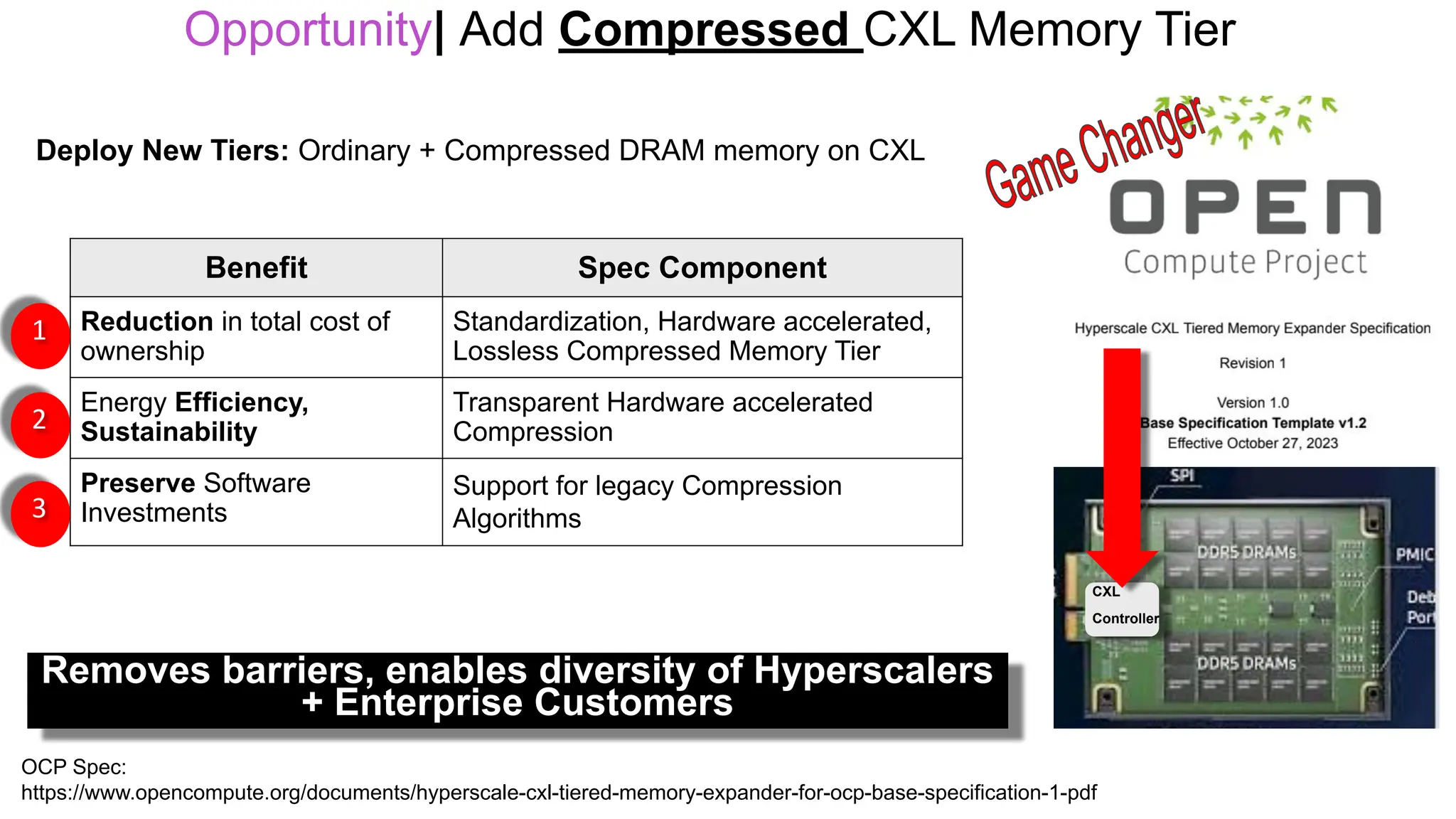

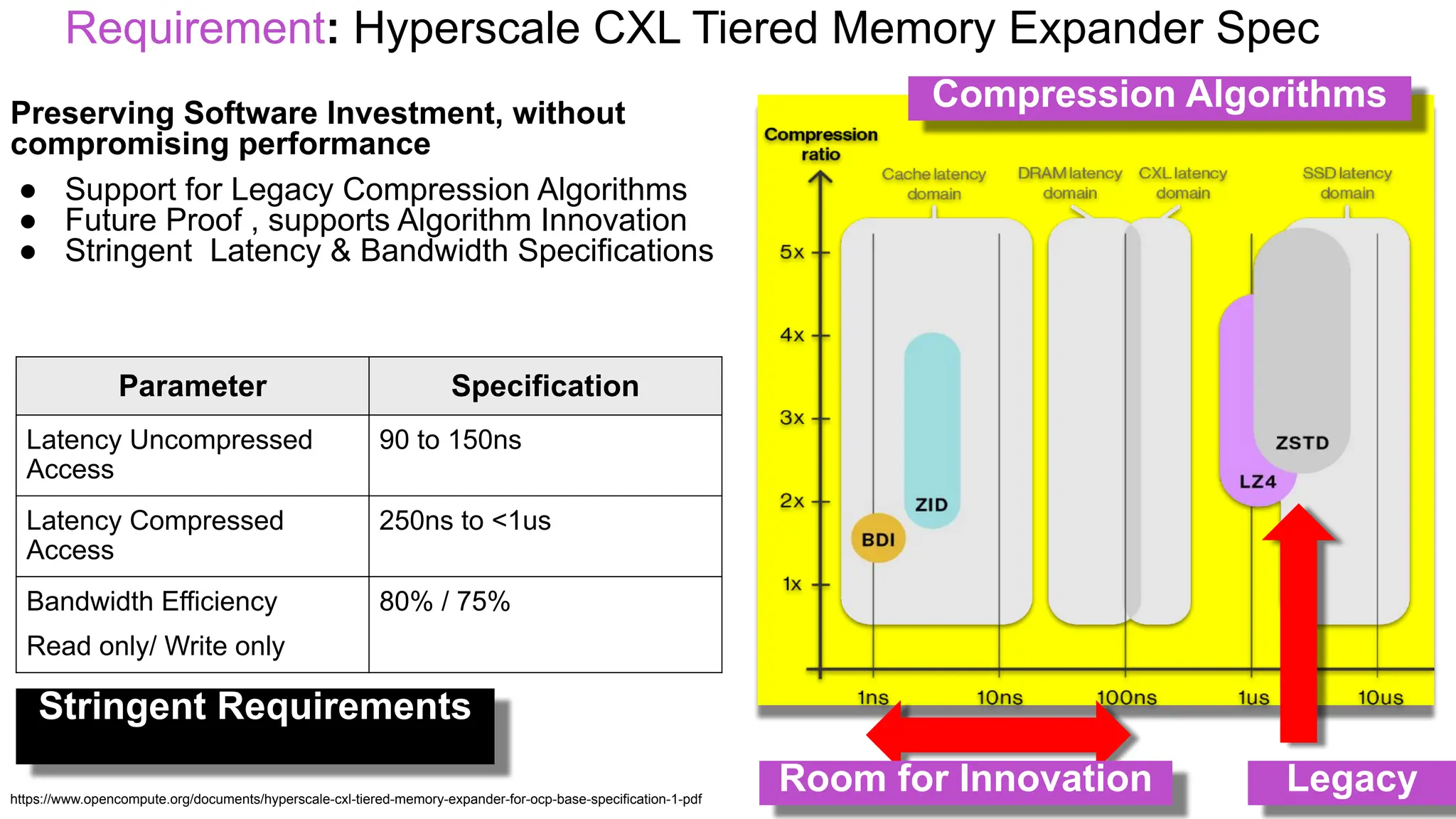

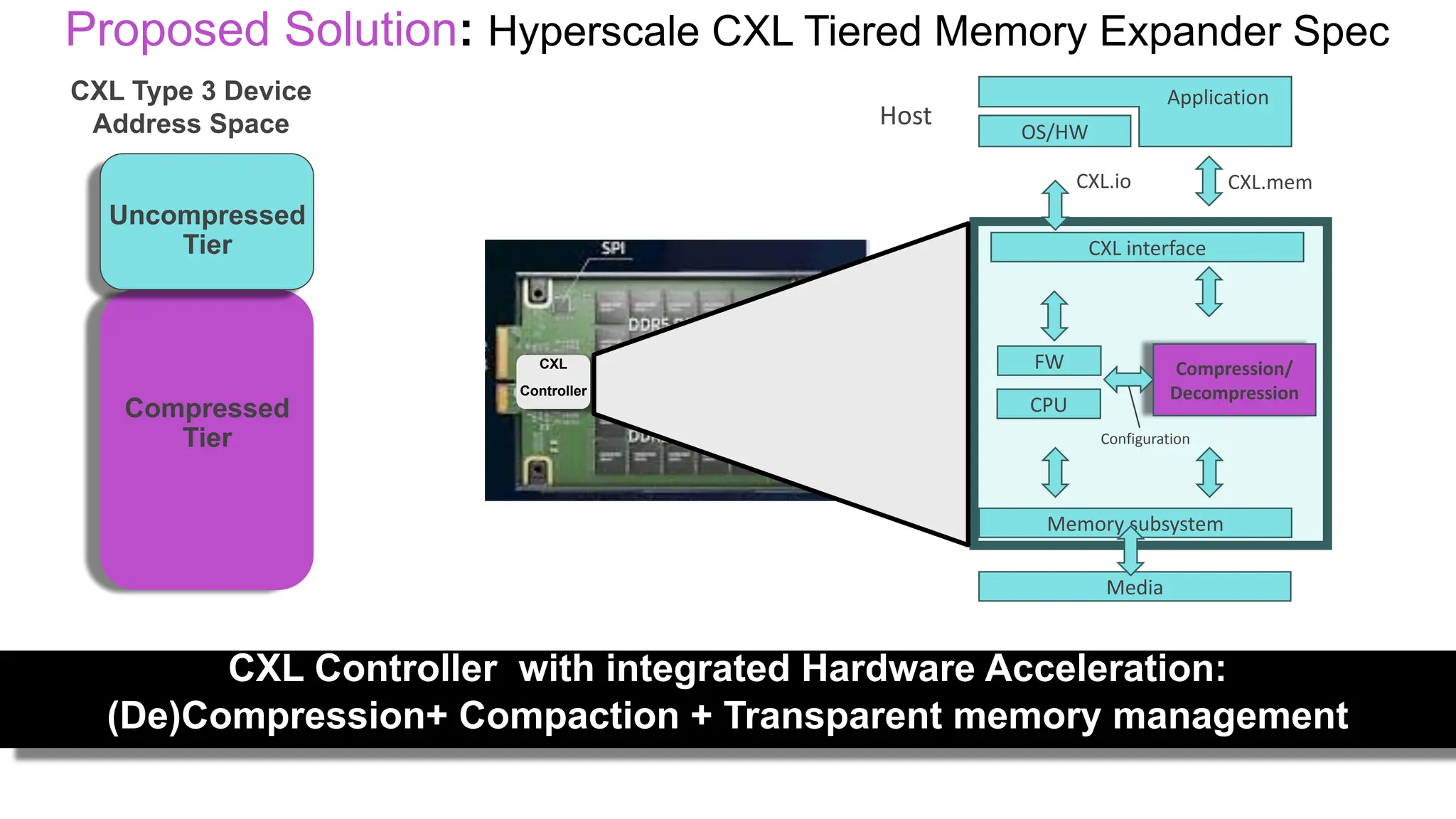

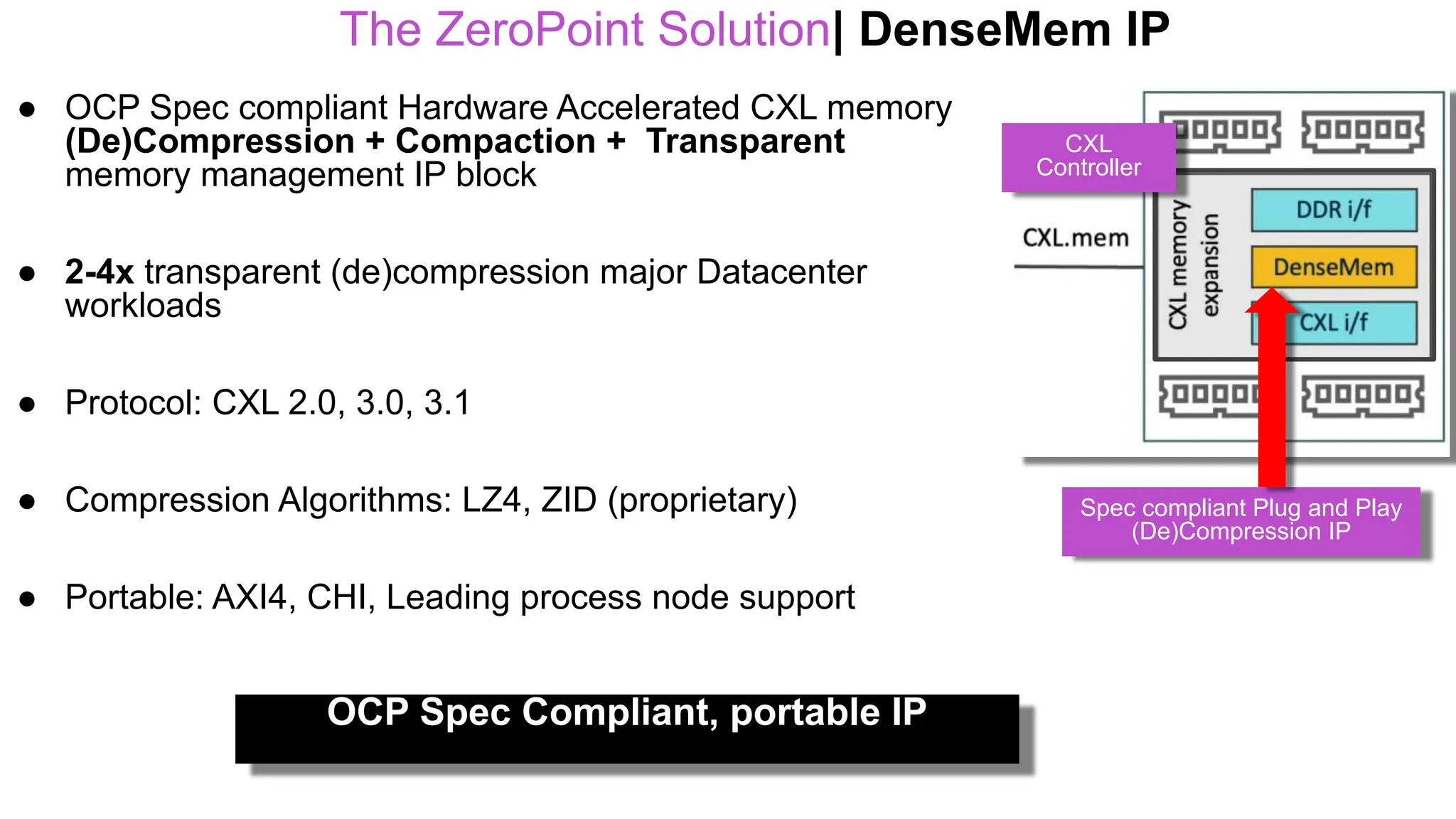

This document outlines a proprietary, portable hardware IP solution from ZeroPoint Technologies for lossless memory compression, aimed at reducing data center total cost of ownership (TCO) by 20-25%. It argues that hyperscalers need hardware-based compression to meet their performance and efficiency requirements, and it presents a compliant, scalable architecture. The product portfolio is designed to increase memory capacity, bandwidth, and performance while remaining compatible with existing software and legacy algorithms.

![ZeroPoint Solution | Reduce Data Center TCO 20-25%. Bar chart of total cost of ownership (TCO) for a 40-server rack over a 3-year lifetime, in kUSD: Today ~850; With CXL only ~780; With our compression only ~700; With CXL & our compression ~650 (-20-25%). Cost components: CAPEX: CPU, CAPEX: Memory, CAPEX: Other, OPEX: Power, OPEX: Cooling, OPEX: Other, Waste: Compression tax.](https://image.slidesharecdn.com/zptmemoryfabricforumfeb92024-240215165501-de6dd484/75/Q1-Memory-Fabric-Forum-ZeroPoint-Remove-the-waste-Release-the-power-9-2048.jpg)
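
The headline 20-25% figure can be sanity-checked against the approximate bar totals in the chart. The sketch below is illustrative only: it assumes the four totals (~850, ~780, ~700, ~650 kUSD) correspond to the four scenarios in the order they are labeled, and the names `tco_kusd` and `savings_vs_baseline` are hypothetical helpers, not part of any ZeroPoint material.

```python
# Approximate TCO per scenario in kUSD, as read off the chart.
# Assumption: the four values appear in the same order as the four scenario labels.
tco_kusd = {
    "Today": 850,
    "With CXL only": 780,
    "With our compression only": 700,
    "With CXL & our compression": 650,
}


def savings_vs_baseline(tco, baseline="Today"):
    """Return the percentage TCO reduction of each scenario relative to the baseline."""
    base = tco[baseline]
    return {
        name: 100.0 * (base - cost) / base
        for name, cost in tco.items()
        if name != baseline
    }


savings = savings_vs_baseline(tco_kusd)
for name, pct in savings.items():
    print(f"{name}: {pct:.1f}% lower than today")
```

Under this reading, the combined CXL-plus-compression scenario comes out at roughly 23.5% below today's TCO, which falls inside the 20-25% range the slide claims. Because the inputs are rounded chart values, the percentages are approximate.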