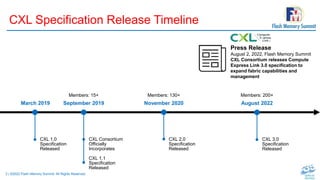

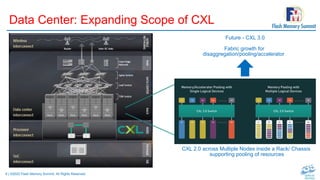

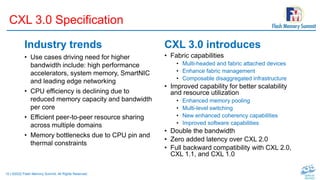

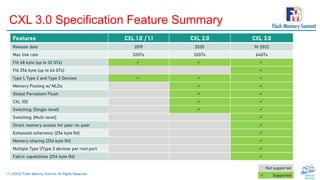

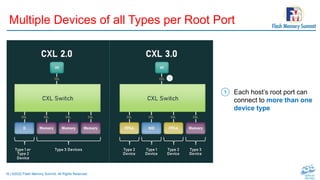

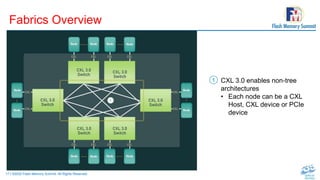

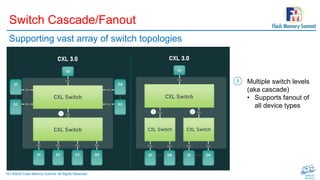

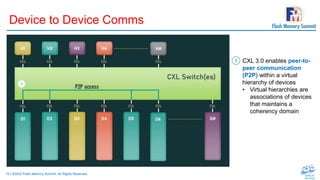

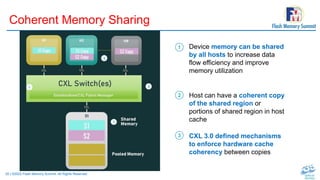

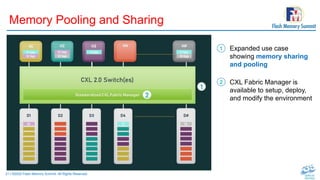

The CXL Consortium has introduced the CXL 3.0 specification, which enhances fabric capabilities and management and supports higher bandwidth and resource pooling across multi-node environments. With over 200 member companies, the consortium aims to address industry needs for coherent I/O and greater memory efficiency in response to trends in cloud computing and AI. The new specification adds improved memory pooling and enhanced scaling, and it is fully backward compatible with previous versions.