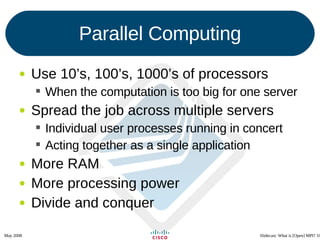

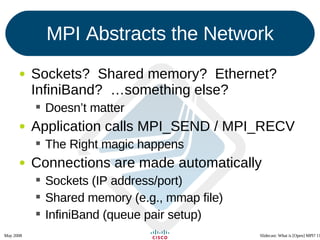

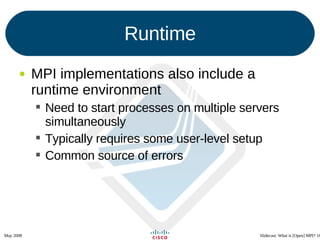

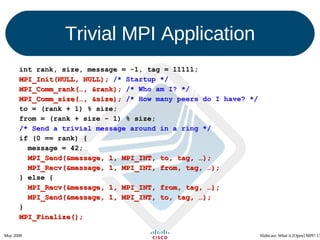

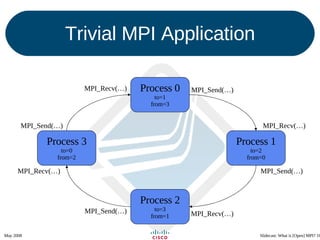

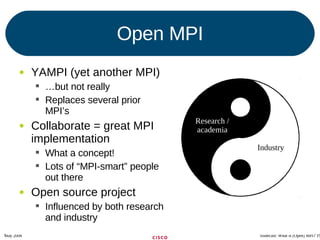

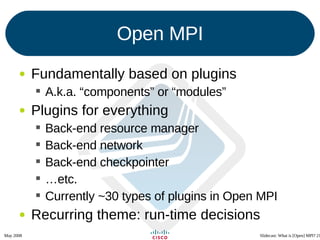

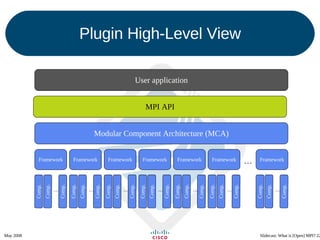

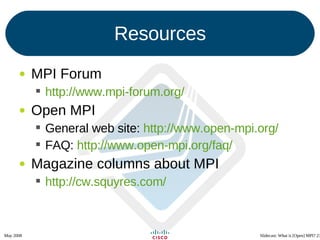

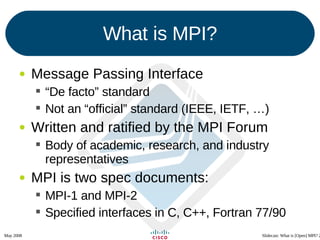

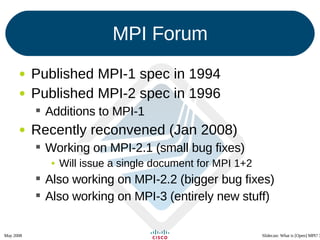

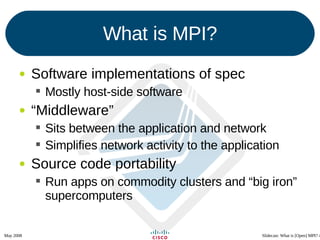

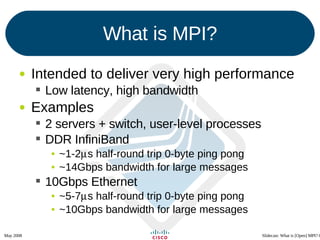

This slidecast gives an overview of the Message Passing Interface (MPI), a standard for communication between processes in parallel, high-performance computing programs. It covers MPI's history, its specification, the available implementations, and its intended audience, and stresses MPI's role in using many servers efficiently within a single program. Open MPI, an open-source implementation, is highlighted for its modular architecture and its support from both the research and industry sectors.

![Slidecast: What is [Open] MPI? Jeff Squyres May 2008](https://image.slidesharecdn.com/test-1230829557420508-1/75/What-is-Open-MPI-1-2048.jpg)

![MPI Implementations Many exist / are available for customers Vendors: HP MPI, Intel MPI, Scali MPI Have their own support channels Open source: Open MPI, MPICH[2], … Rely on open source community for support But also have some vendor support Various research-quality implementations Proof-of-concept Not usually intended for production usage](https://image.slidesharecdn.com/test-1230829557420508-1/85/What-is-Open-MPI-7-320.jpg)

![Target Audience Scientists and engineers Don’t know or care how network works Not computer scientists Sometimes not even [very good] programmers Parallel computing Using tens, hundreds, or thousands of servers in a single computational program Intended for high-performance computing](https://image.slidesharecdn.com/test-1230829557420508-1/85/What-is-Open-MPI-9-320.jpg)