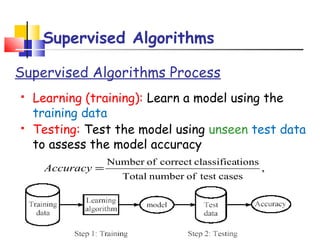

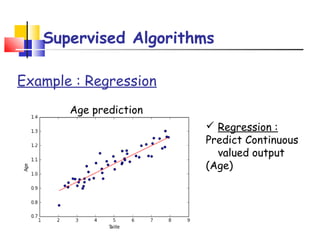

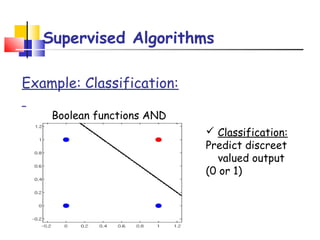

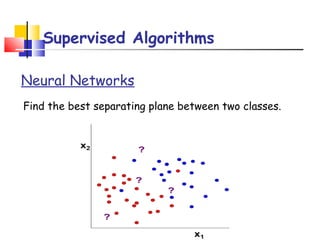

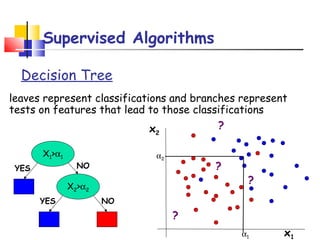

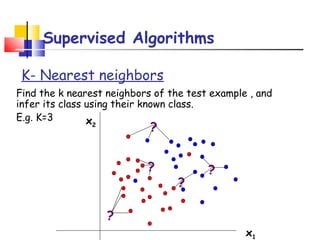

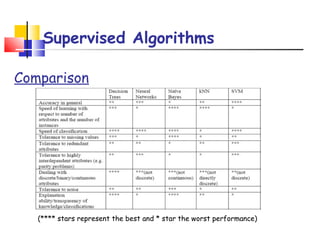

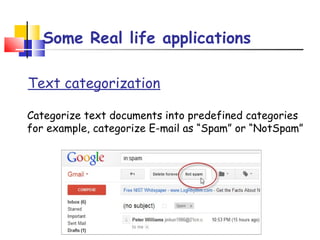

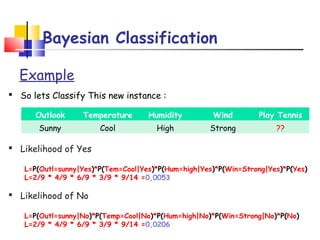

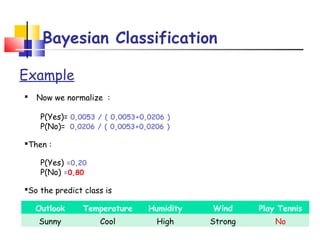

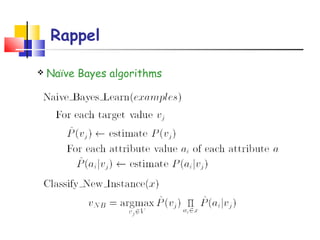

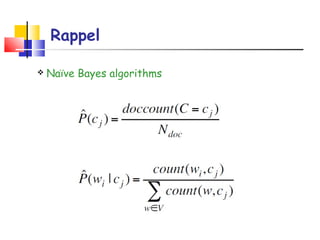

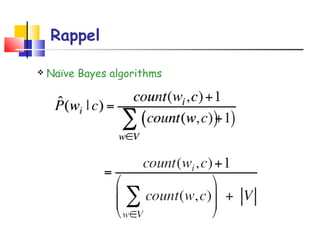

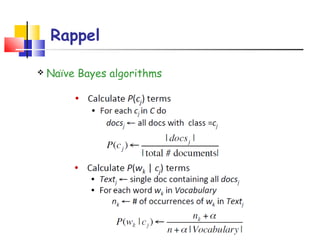

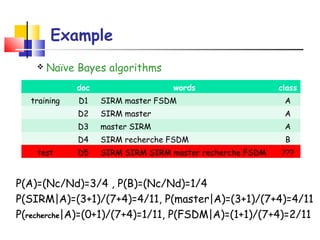

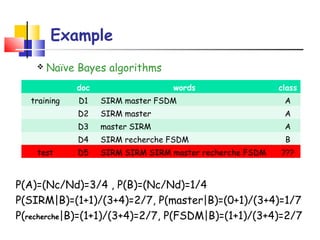

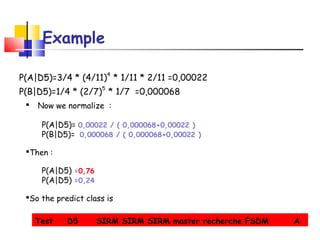

Machine learning algorithms are categorized as either supervised or unsupervised. Supervised algorithms learn from labeled examples to predict the labels of unseen data, while unsupervised algorithms find hidden patterns in unlabeled data. Specifically, a supervised algorithm is given labeled training data and learns a model that predicts the class labels of new test data. Common supervised algorithms include neural networks, decision trees, k-nearest neighbors, and Naive Bayes classifiers. Naive Bayes is an easy-to-implement algorithm that assumes the features are conditionally independent given the class. It has been successfully applied to problems such as spam filtering.
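
To make the independence assumption concrete, here is a minimal sketch of a multinomial Naive Bayes text classifier applied to a toy spam-filtering task. It is an illustration under stated assumptions, not a method taken from the source: the function names `train_naive_bayes` and `predict`, the four training messages, the whitespace tokenization, and the add-one (Laplace) smoothing are all hypothetical choices made for this example.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(documents, labels):
    """Count how often each class and each (class, word) pair occurs."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()  # assumption: simple whitespace tokens
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary


def predict(text, class_counts, word_counts, vocabulary):
    """Return the class with the highest log posterior score."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        # Log prior: fraction of training documents in this class.
        score = math.log(count / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Independence assumption: each word contributes its own
            # log likelihood, smoothed with add-one (Laplace) counts.
            likelihood = (word_counts[label][word] + 1) / (
                total_words + len(vocabulary)
            )
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy labeled training data (hypothetical, for illustration only).
train_docs = [
    "win money now",
    "cheap money offer",
    "meeting agenda for monday",
    "lunch on monday",
]
train_labels = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(train_docs, train_labels)
print(predict("cheap money", *model))
print(predict("monday meeting", *model))
```

Training only counts labels and words, and prediction sums one log-probability term per word. That per-word sum is exactly where the independence assumption enters, and it is why the algorithm is easy to implement.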