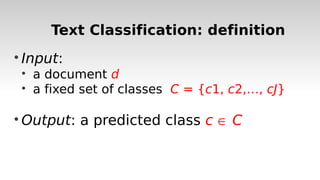

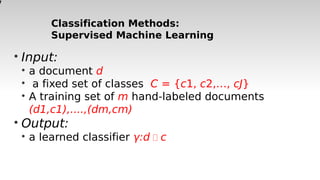

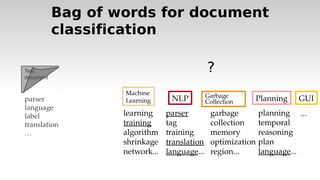

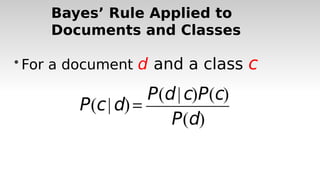

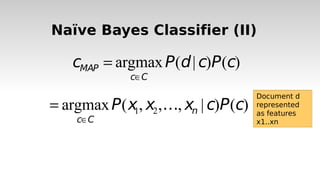

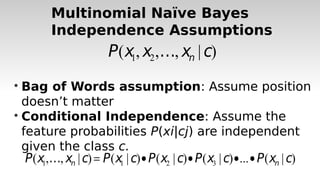

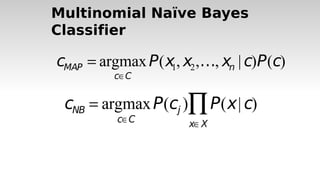

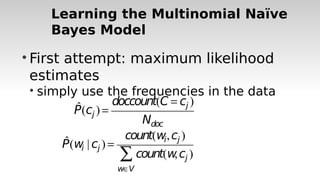

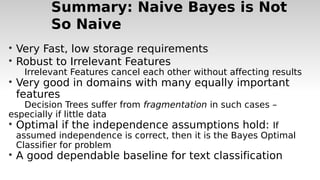

This document gives an overview of text classification and the Naive Bayes classification method. It defines text classification as assigning a category, topic, or genre to a document, and describes two families of classification methods: hand-coded rules and supervised machine learning. It explains the bag-of-words representation and shows how Naive Bayes classifies a document by using Bayes' rule and independence assumptions to compute the probability that the document belongs to each class. It closes with parameter estimation and the steps for building a multinomial Naive Bayes classifier for text classification tasks.
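The pipeline summarized above (bag-of-words counts, class priors, per-class word likelihoods, then Bayes' rule under the independence assumption) can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own code: the function names `train_multinomial_nb` and `predict`, the toy documents, and the use of add-one (Laplace) smoothing via the `alpha` parameter are all assumptions made here for the example. Log probabilities are used so that products of many small numbers do not underflow.

```python
import math
from collections import Counter, defaultdict


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Estimate log priors and log likelihoods from tokenized documents.

    docs   -- list of token lists (bag-of-words: order is ignored)
    labels -- list of class names, one per document
    alpha  -- additive smoothing constant (assumption: add-one by default)
    """
    vocab = {tok for doc in docs for tok in doc}
    class_doc_counts = Counter(labels)

    # Count how often each token occurs in the documents of each class.
    token_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        token_counts[label].update(doc)

    # Prior P(c): fraction of training documents labeled c.
    n_docs = len(docs)
    log_prior = {c: math.log(n / n_docs) for c, n in class_doc_counts.items()}

    # Likelihood P(w | c): smoothed relative frequency of w within class c.
    log_likelihood = {}
    for c in class_doc_counts:
        denom = sum(token_counts[c].values()) + alpha * len(vocab)
        log_likelihood[c] = {
            w: math.log((token_counts[c][w] + alpha) / denom) for w in vocab
        }
    return log_prior, log_likelihood, vocab


def predict(doc, log_prior, log_likelihood, vocab):
    """Return the class maximizing log P(c) + sum over tokens of log P(w | c)."""
    scores = {}
    for c in log_prior:
        # Tokens never seen in training are skipped.
        scores[c] = log_prior[c] + sum(
            log_likelihood[c][w] for w in doc if w in vocab
        )
    return max(scores, key=scores.get)


# Hypothetical toy training set, invented for this example.
train_docs = [
    ["great", "fun", "film"],
    ["boring", "dull", "film"],
    ["fun", "great", "great"],
    ["dull", "plot"],
]
train_labels = ["pos", "neg", "pos", "neg"]

model = train_multinomial_nb(train_docs, train_labels)
print(predict(["great", "fun"], *model))
print(predict(["dull", "plot"], *model))
```

Skipping out-of-vocabulary tokens at prediction time is one common choice; another is to reserve an explicit unknown-word token during training.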