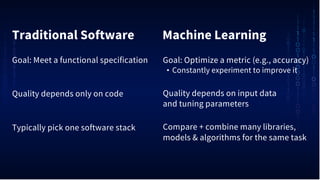

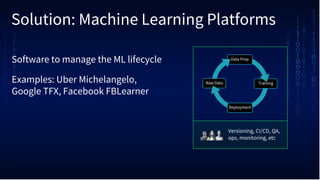

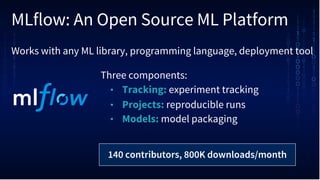

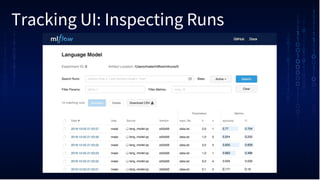

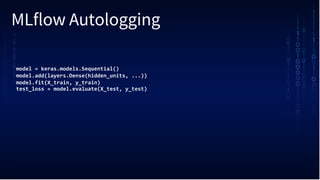

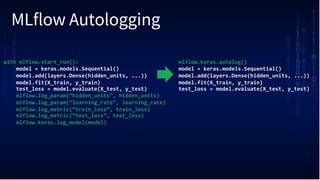

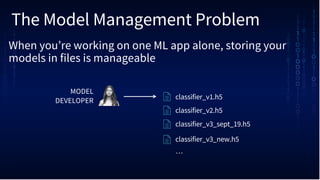

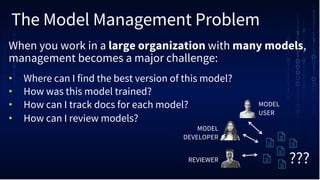

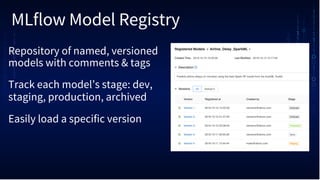

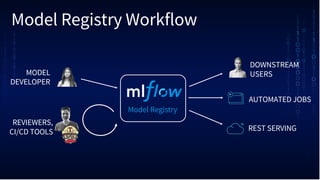

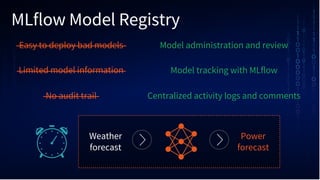

The document contrasts machine learning (ML) model management with traditional software development, covering the challenges of data preparation, training, and deployment. It presents MLflow, an open-source platform for tracking experiments, making runs reproducible, and packaging models, and highlights features such as automatic logging and the model registry. It also notes recent updates to MLflow and encourages readers to get started by installing the platform and consulting its resources.