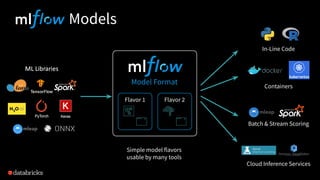

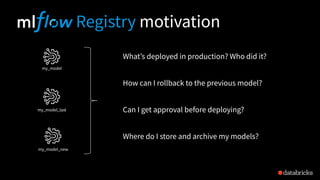

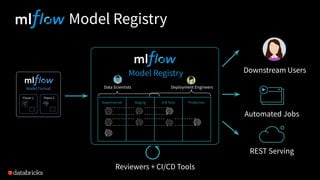

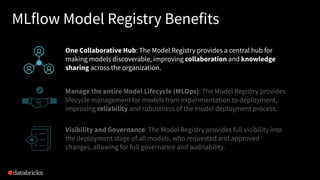

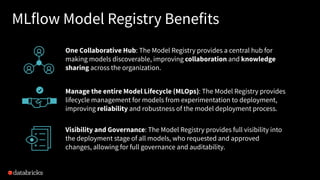

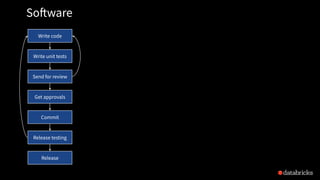

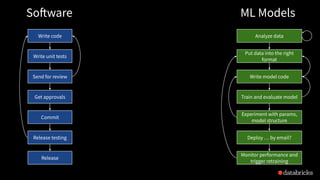

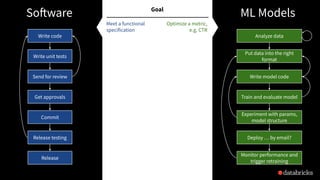

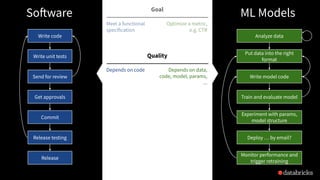

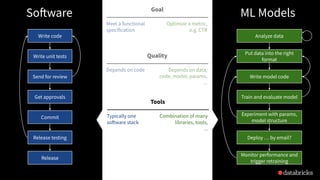

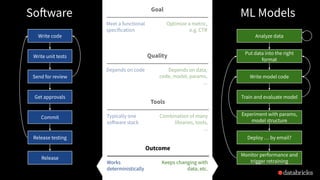

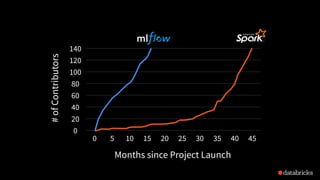

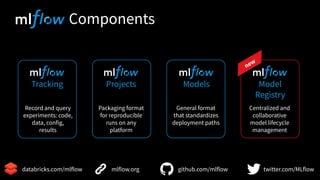

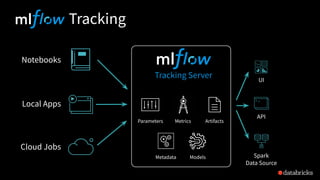

The document presents the agenda for a presentation on MLflow and its role in the deployment lifecycle of machine learning models. It explains why deploying ML models is more complex than deploying traditional software, emphasizing data management, model training, and monitoring. MLflow is introduced as an open-source platform for tracking experiments, managing the model lifecycle, and improving collaboration during model development.

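![Keras training code without MLflow](https://image.slidesharecdn.com/2020-01-16-managingthemachinelearninglifecyclewithmlflowmlopsparis-200128145349/85/Managing-the-Machine-Learning-Lifecycle-with-MLflow-23-320.jpg)

```python
from tensorflow import keras
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# get_training_data() and params are not shown on the slide
X, y = get_training_data()

opt = keras.optimizers.Adam(learning_rate=params["learning_rate"],
                            beta_1=params["beta_1"],
                            beta_2=params["beta_2"],
                            epsilon=params["epsilon"])

model = Sequential()
model.add(Dense(int(params["units"]), ...))  # remaining Dense arguments elided on the slide
model.add(Dense(1))
model.compile(loss="mse", optimizer=opt)

# fit() returns a History object holding per-epoch loss and val_loss
history = model.fit(X, y, epochs=50, batch_size=64, validation_split=0.2)
```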

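![Keras training code with MLflow autologging](https://image.slidesharecdn.com/2020-01-16-managingthemachinelearninglifecyclewithmlflowmlopsparis-200128145349/85/Managing-the-Machine-Learning-Lifecycle-with-MLflow-24-320.jpg)

```python
import mlflow
import mlflow.keras
from tensorflow import keras
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# Enable MLflow Autologging
mlflow.keras.autolog()

# get_training_data() and params are not shown on the slide
X, y = get_training_data()

opt = keras.optimizers.Adam(learning_rate=params["learning_rate"],
                            beta_1=params["beta_1"],
                            beta_2=params["beta_2"],
                            epsilon=params["epsilon"])

model = Sequential()
model.add(Dense(int(params["units"]), ...))  # remaining Dense arguments elided on the slide
model.add(Dense(1))
model.compile(loss="mse", optimizer=opt)

# With autologging enabled, this fit() call is recorded as an MLflow run
history = model.fit(X, y, epochs=50, batch_size=64, validation_split=0.2)
```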