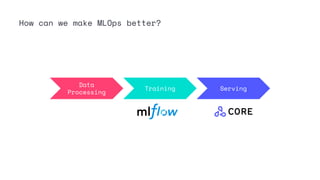

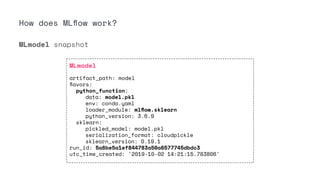

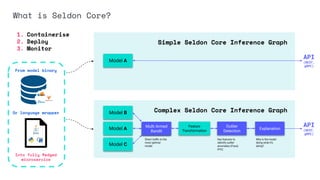

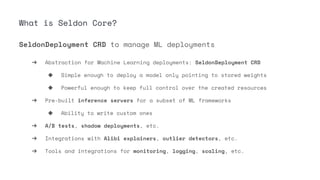

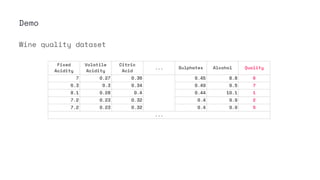

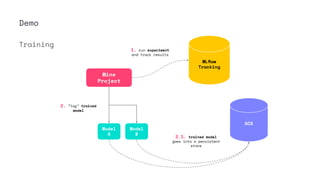

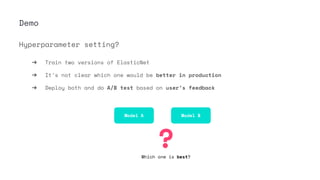

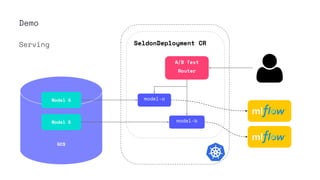

The document provides an overview of seamless MLOps using Seldon and MLflow. It discusses how MLOps is challenging due to the wide range of requirements across the ML lifecycle. MLflow helps with training by allowing experiment tracking and model versioning. Seldon Core helps with deployment by providing servers to containerize models and infrastructure for monitoring, A/B testing, and feedback. The demo shows training models with MLflow, deploying them to Seldon for A/B testing, and collecting feedback to optimize models.

![What do we mean by MLOps?

“MLOps (a compound of “machine learning” and “operations”)

is a practice for collaboration and communication between

data scientists and operations professionals to help manage

production ML lifecycle.” [1]

[1] https://en.wikipedia.org/wiki/MLOps](https://image.slidesharecdn.com/20adriangonzalez-martin-201124200310/85/Seamless-MLOps-with-Seldon-and-MLflow-9-320.jpg)

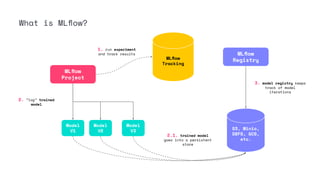

![What is MLflow?

➔ Open Source project initially started by Databricks

➔ Now part of the LFAI

“MLflow is an open source platform to manage the ML

lifecycle, including experimentation, reproducibility,

deployment, and a central model registry.” [2]

[2] https://mlflow.org/](https://image.slidesharecdn.com/20adriangonzalez-martin-201124200310/85/Seamless-MLOps-with-Seldon-and-MLflow-13-320.jpg)

![Deploying models is hard

Analysis Tools

Serving

Infrastructure

Monitoring

Machine

Resource

Management

Process

Management

Tools

Analysis Tools

Serving

Infrastructure

Monitoring

Machine

Resource

Management

Process

Management Tools

ML

Code

Data Verification

Data Collection

Feature Extraction

Configuration

Seldon can help with these...

➔ Serving infrastructure

➔ Scaling up (and down!)

➔ Monitoring models

➔ Updating models

[3] Hidden Technical Debt in Machine Learning Systems, NIPS 2015](https://image.slidesharecdn.com/20adriangonzalez-martin-201124200310/85/Seamless-MLOps-with-Seldon-and-MLflow-20-320.jpg)

![What is Seldon Core?

➔ Open Source project created by Seldon

“An MLOps framework to package, deploy, monitor and manage

thousands of production machine learning models” [3]

[3] https://github.com/SeldonIO/seldon-core](https://image.slidesharecdn.com/20adriangonzalez-martin-201124200310/85/Seamless-MLOps-with-Seldon-and-MLflow-21-320.jpg)

![How does Seldon Core work?

Advanced Deployment Models

https://docs.seldon.io/projects/seldon-core/en/latest/examples/mlflow_server_ab_test_ambassador.html

a-b-deployment.yaml

apiVersion: machinelearning.seldon.io/v1alpha2

kind: SeldonDeployment

metadata:

name: wines-classifier

spec:

name: wines-classifier

predictors:

- graph:

children: []

implementation: MLFLOW_SERVER

modelUri: gs://seldon-models/mlflow/model-a

name: wines-classifier

name: model-a

replicas: 1

traffic: 50

- graph:

children: []

implementation: MLFLOW_SERVER

modelUri: gs://seldon-models/mlflow/model-b

name: wines-classifier

name: model-b

replicas: 1

traffic: 50

➔ A/B Tests

◆ We’ll see this in the demo!

➔ Shadow Deployments](https://image.slidesharecdn.com/20adriangonzalez-martin-201124200310/85/Seamless-MLOps-with-Seldon-and-MLflow-31-320.jpg)

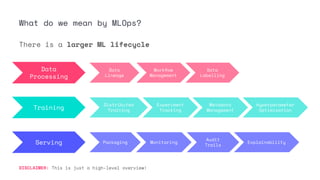

![Demo a-b-deployment.yaml

apiVersion: machinelearning.seldon.io/v1alpha2

kind: SeldonDeployment

metadata:

name: wines-classifier

spec:

name: wines-classifier

predictors:

- graph:

children: []

implementation: MLFLOW_SERVER

modelUri: gs://seldon-models/mlflow/model-a

name: wines-classifier

name: model-a

replicas: 1

traffic: 50

- graph:

children: []

implementation: MLFLOW_SERVER

modelUri: gs://seldon-models/mlflow/model-b

name: wines-classifier

name: model-b

replicas: 1

traffic: 50

Serving

Model A

Model B

{

{](https://image.slidesharecdn.com/20adriangonzalez-martin-201124200310/85/Seamless-MLOps-with-Seldon-and-MLflow-39-320.jpg)