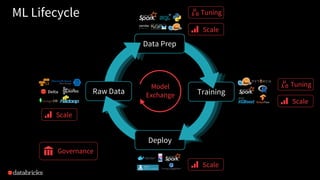

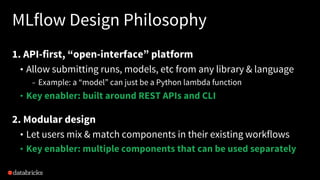

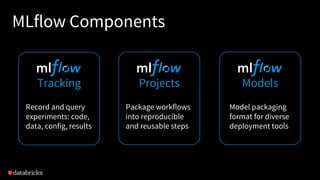

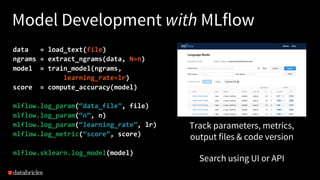

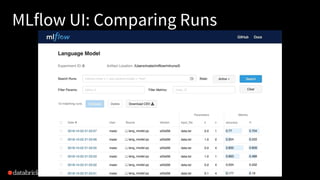

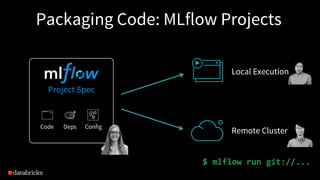

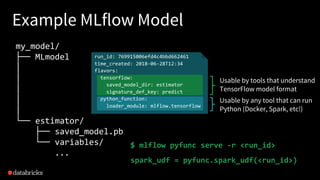

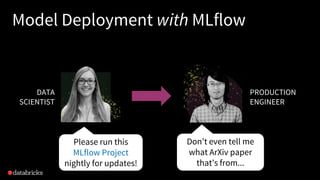

The document discusses Databricks and its MLflow platform, which aims to simplify the end-to-end machine learning (ML) lifecycle. MLflow is open source, works with any ML library, and lets users track experiments, package workflows, and deploy models, which improves collaboration and reproducibility. Various companies have adopted MLflow for a range of use cases, using it to streamline their ML development processes and work more efficiently.
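To make "tracking experiments" concrete, the sketch below shows the core idea without depending on MLflow itself. Each run records its hyperparameters and a history of metric values, so that runs can later be compared. The `Run` and `Tracker` classes and the `best_run` helper are hypothetical illustrations and are not MLflow's API. MLflow's own tracking entry points include functions such as `mlflow.start_run`, `mlflow.log_param`, and `mlflow.log_metric`, and it persists runs to a tracking store rather than keeping them in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One tracked training run (hypothetical): its parameters and metric history."""
    run_id: int
    params: dict[str, str] = field(default_factory=dict)
    metrics: dict[str, list[float]] = field(default_factory=dict)

    def log_param(self, key: str, value) -> None:
        # Parameters are stored as strings, one value per key per run.
        self.params[key] = str(value)

    def log_metric(self, key: str, value: float) -> None:
        # Metrics keep their full history (e.g. one value per epoch).
        self.metrics.setdefault(key, []).append(float(value))


@dataclass
class Tracker:
    """Hypothetical in-memory store holding all runs of one experiment."""
    runs: list[Run] = field(default_factory=list)

    def start_run(self) -> Run:
        run = Run(run_id=len(self.runs))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        # Compare runs on the most recent value logged for `metric`.
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            raise ValueError(f"no run has logged metric {metric!r}")

        def last_value(run: Run) -> float:
            return run.metrics[metric][-1]

        if higher_is_better:
            return max(scored, key=last_value)
        return min(scored, key=last_value)


tracker = Tracker()
for lr in (0.1, 0.01):
    run = tracker.start_run()
    run.log_param("learning_rate", lr)
    # Placeholder scores standing in for a real validation metric.
    run.log_metric("accuracy", 0.80 if lr == 0.1 else 0.85)

best = tracker.best_run("accuracy")
print(best.params)  # {'learning_rate': '0.01'}
```

Keeping parameters and metrics on the same run record is what makes a result reproducible: the winning score can always be traced back to the exact settings that produced it.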