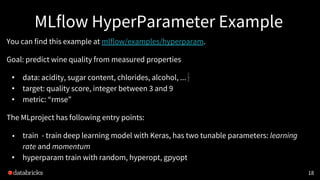

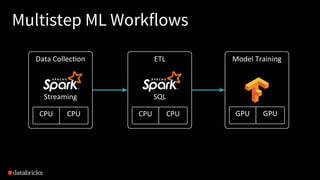

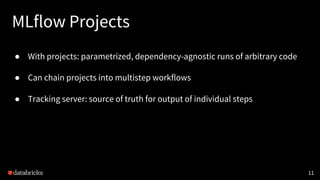

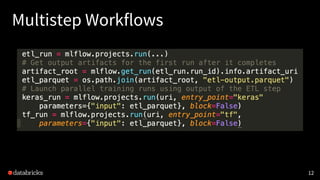

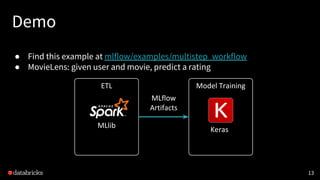

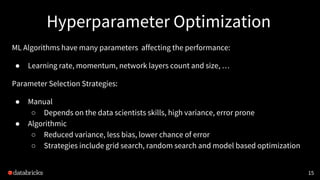

MLflow is an open-source platform designed to streamline the machine learning lifecycle. It provides tools for tracking experiments, packaging models, and working with a variety of deployment tools. The latest updates include a Java API, support for popular frameworks such as PyTorch and Keras, and improvements to usability and to the management of multi-step workflows and hyperparameter tuning. The document also points to resources for getting started with MLflow and to practical examples such as predicting wine quality.

![Model Based Hyperparam Optimization](https://image.slidesharecdn.com/finalmesospheremlflowmeetupslides-180924225236/85/Advanced-MLflow-Multi-Step-Workflows-Hyperparameter-Tuning-and-Integrating-Custom-Libraries-16-320.jpg)

Model-based hyperparameter optimization:

1. Select “best parameters” based on the current model: `Params[n+1] = Select(Model[n])`
2. Obtain new data points by training the model with the new parameters: `Metric[n+1] = Train(Params[n+1])`
3. Use the new data points to update the model: `Model[n+1] = Update(Model[n], Metric[n+1])`
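The Select / Train / Update loop on this slide can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the method used in the talk: the surrogate "model" is a plain list of observed `(params, metric)` pairs with inverse-distance-weighted interpolation, `train` is a hypothetical stand-in for a real training run with a single numeric hyperparameter, and the search range `LOW`..`HIGH` and the `explore` bonus are invented for the example.

```python
import random

# Assumed search range for a single numeric hyperparameter (illustrative only).
LOW, HIGH = 0.0, 10.0


def train(params):
    """Hypothetical stand-in for a training run; returns a loss to minimize."""
    return (params - 3.0) ** 2


def predict(model, x):
    """Surrogate prediction: inverse-distance-weighted mean of observed metrics."""
    num = den = 0.0
    for p, m in model:
        d = abs(x - p)
        if d == 0.0:
            return m  # exact match with an earlier observation
        w = 1.0 / d ** 2
        num += w * m
        den += w
    return num / den


def select(model, rng, n_candidates=200, explore=0.5):
    """Params[n+1] = Select(Model[n]).

    Scores random candidates by predicted loss minus a bonus for being far
    from anything already tried, and returns the lowest-scoring candidate.
    """
    best_x, best_score = None, float("inf")
    for _ in range(n_candidates):
        x = rng.uniform(LOW, HIGH)
        nearest = min(abs(x - p) for p, _ in model)
        score = predict(model, x) - explore * nearest
        if score < best_score:
            best_x, best_score = x, score
    return best_x


def update(model, params, metric):
    """Model[n+1] = Update(Model[n], Metric[n+1]): record the new data point."""
    return model + [(params, metric)]


def optimize(n_iters=30, seed=0):
    """Run the loop and return every (params, metric) observation collected."""
    rng = random.Random(seed)
    model = []
    # Seed the surrogate with two random observations so predict() has data.
    for _ in range(2):
        x = rng.uniform(LOW, HIGH)
        model = update(model, x, train(x))
    for _ in range(n_iters):
        params = select(model, rng)            # step 1
        metric = train(params)                 # step 2
        model = update(model, params, metric)  # step 3
    return model


if __name__ == "__main__":
    observations = optimize()
    best_params, best_metric = min(observations, key=lambda pm: pm[1])
    print(f"best params={best_params:.3f}, loss={best_metric:.4f}")
```

In an MLflow workflow, each call to `train` would typically be its own tracked run, with the chosen parameters and the resulting metric logged so that the surrogate can be rebuilt from the tracking data. A production setup would normally replace the toy surrogate here with a dedicated optimization library.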