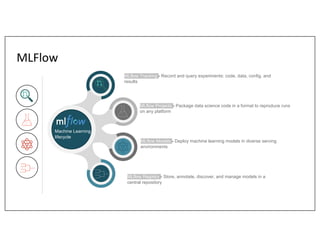

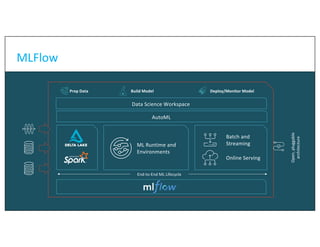

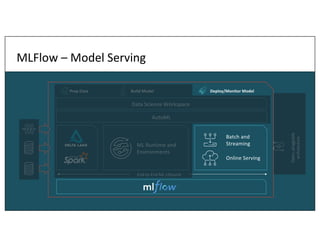

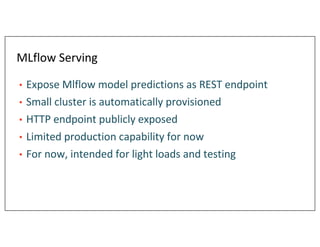

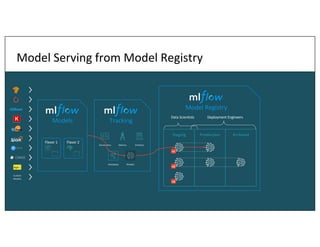

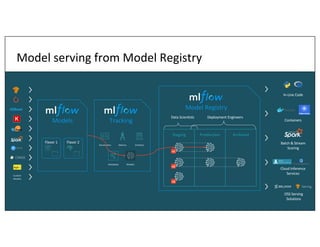

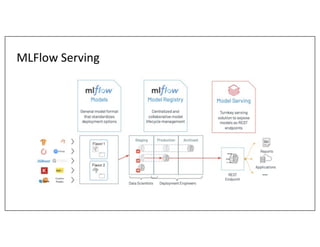

The document discusses productionizing ML models with MLflow, emphasizing its capabilities for managing, serving, and monitoring models across diverse environments. It gives an overview of MLflow's features, such as model tracking, Model Registry management, and online serving, and highlights an architecture that supports both batch and streaming data. It also covers customizing serving clusters and monitoring model performance, and provides links for further exploration.