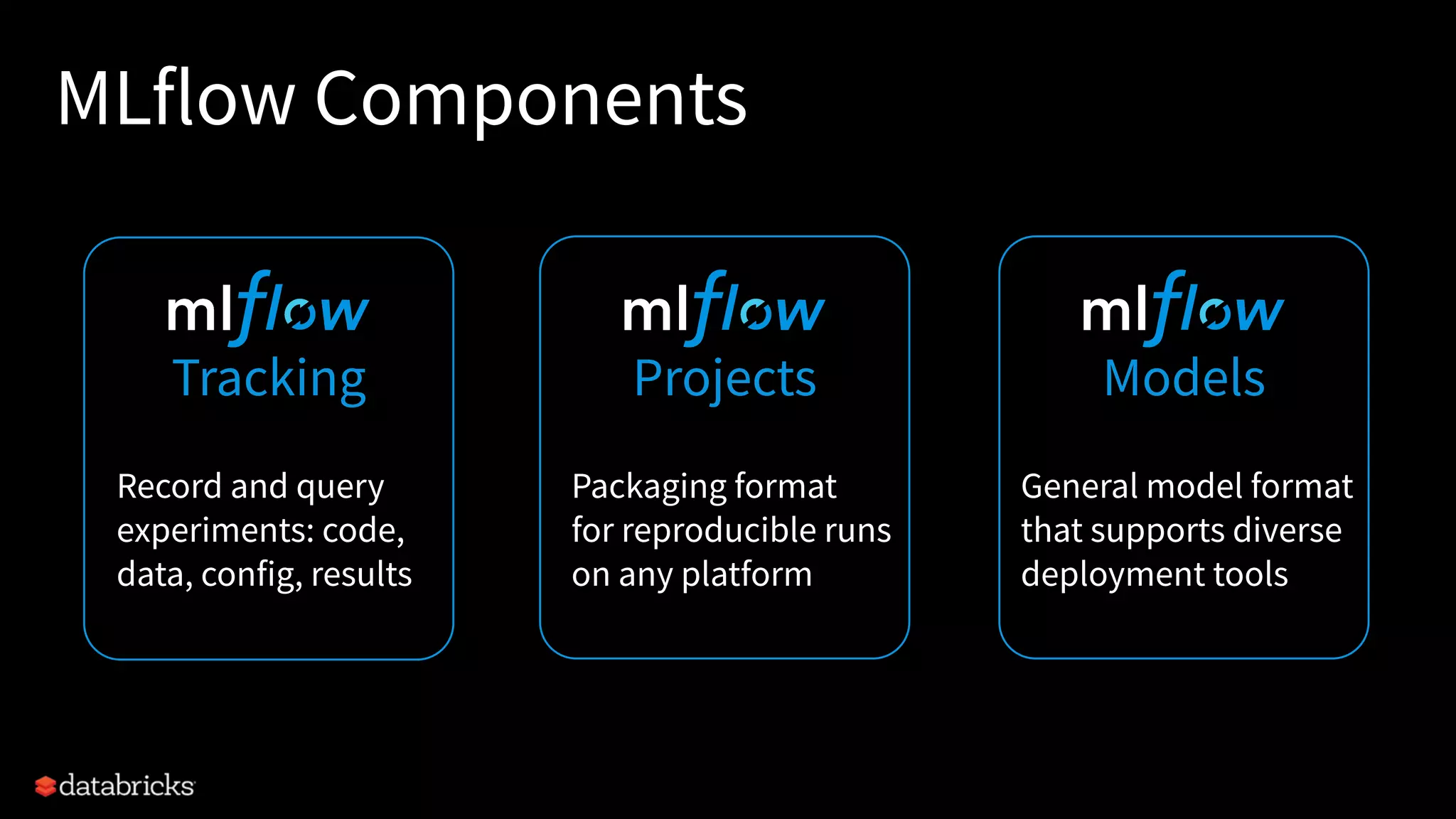

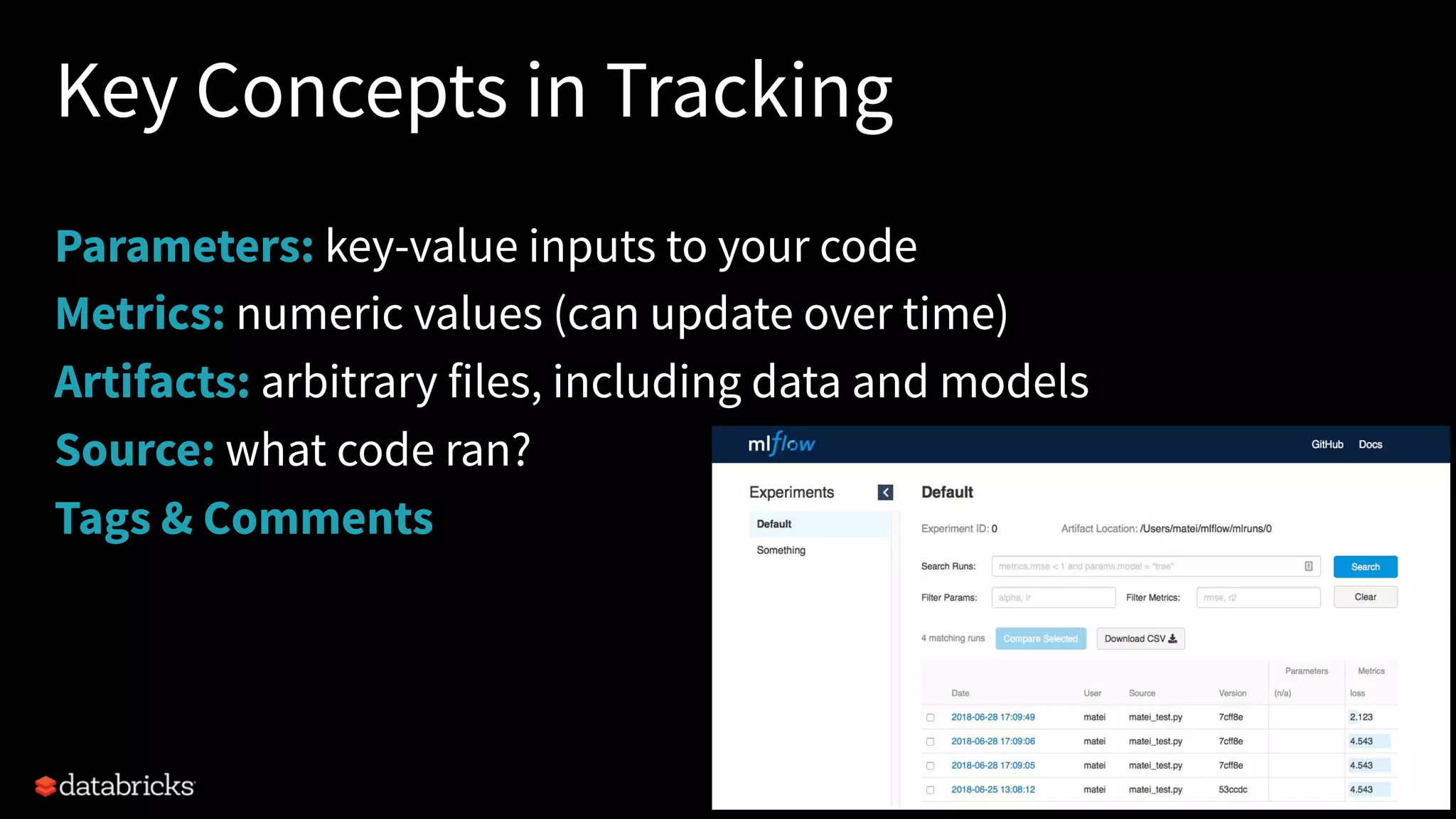

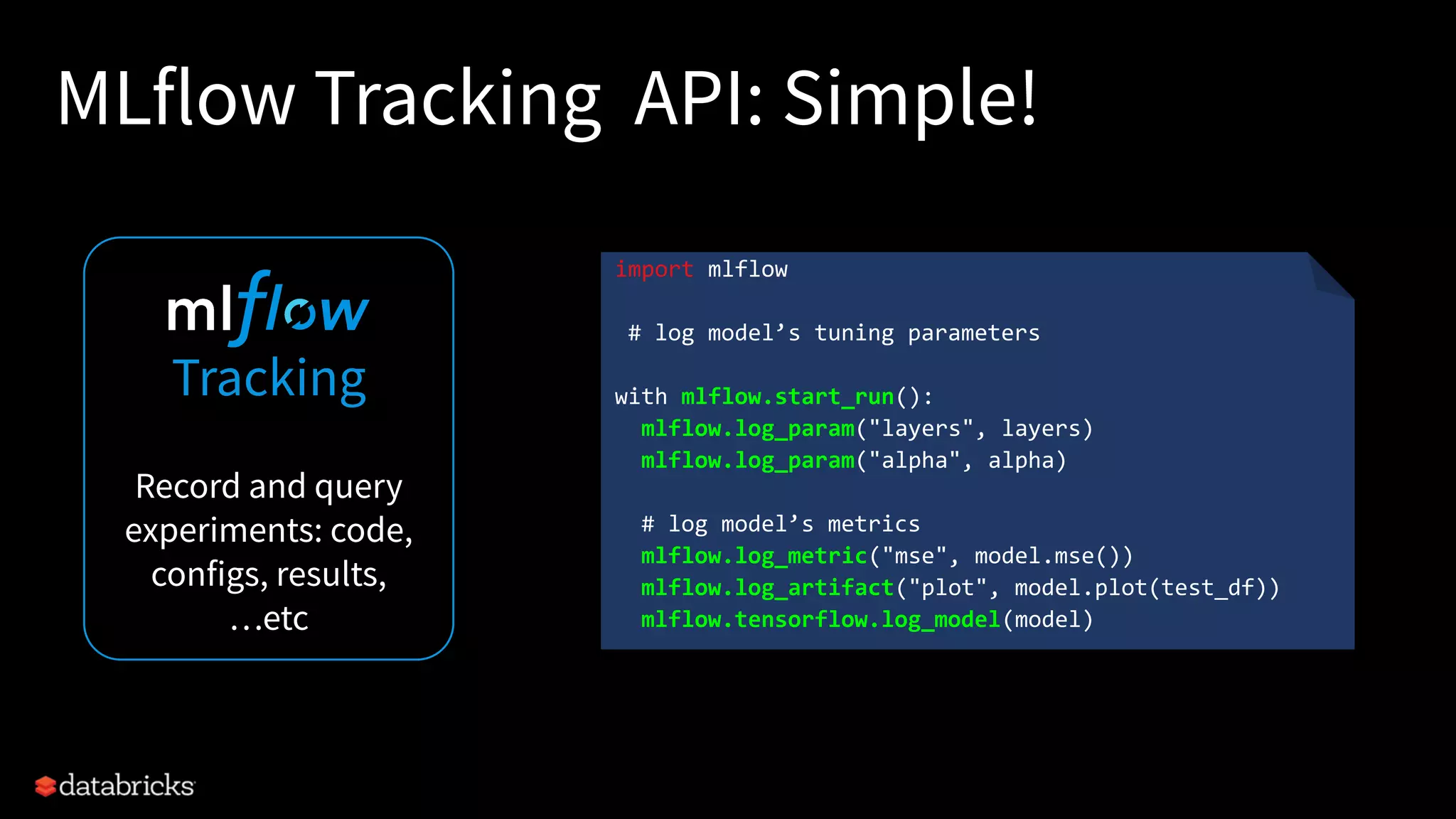

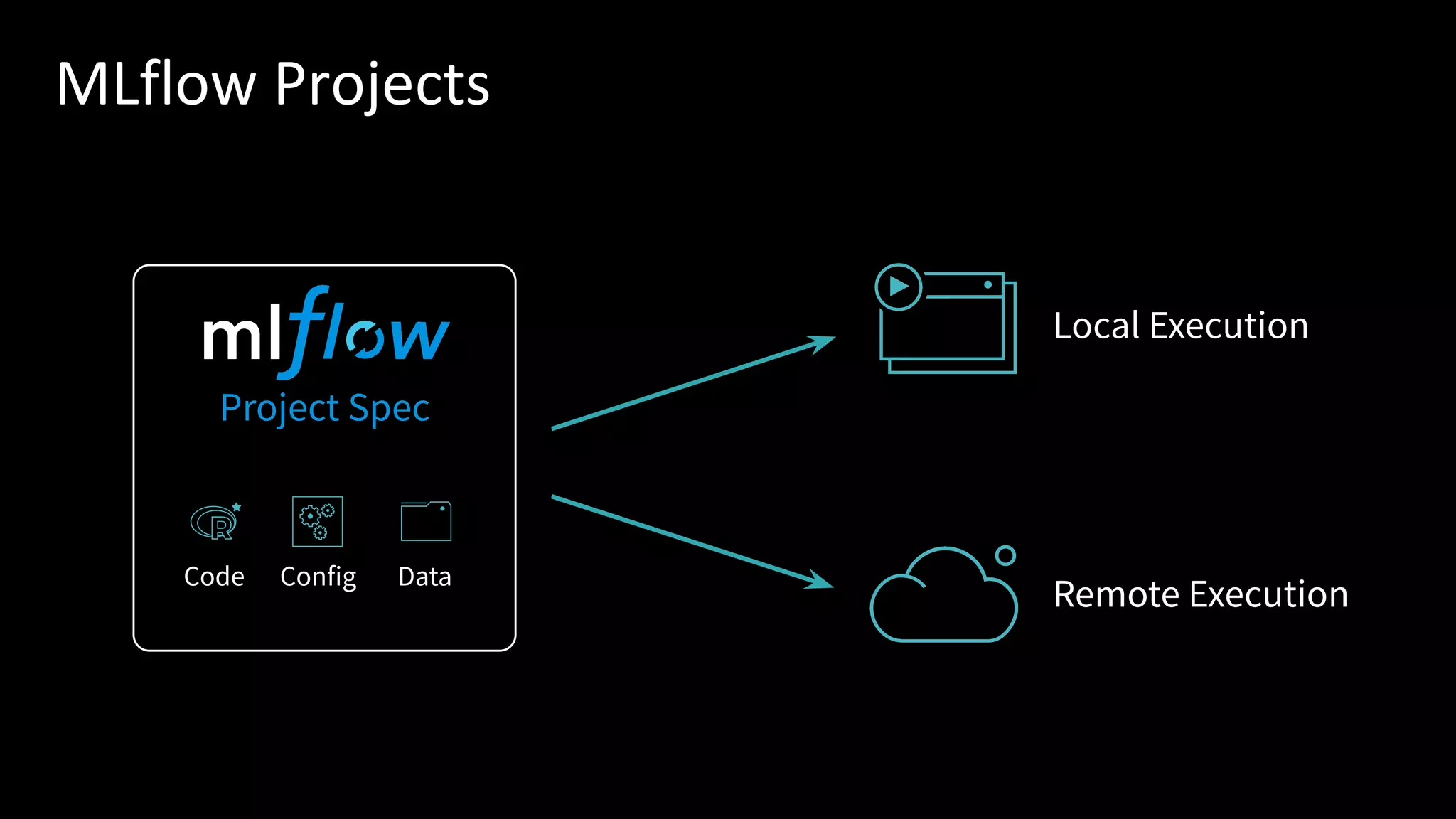

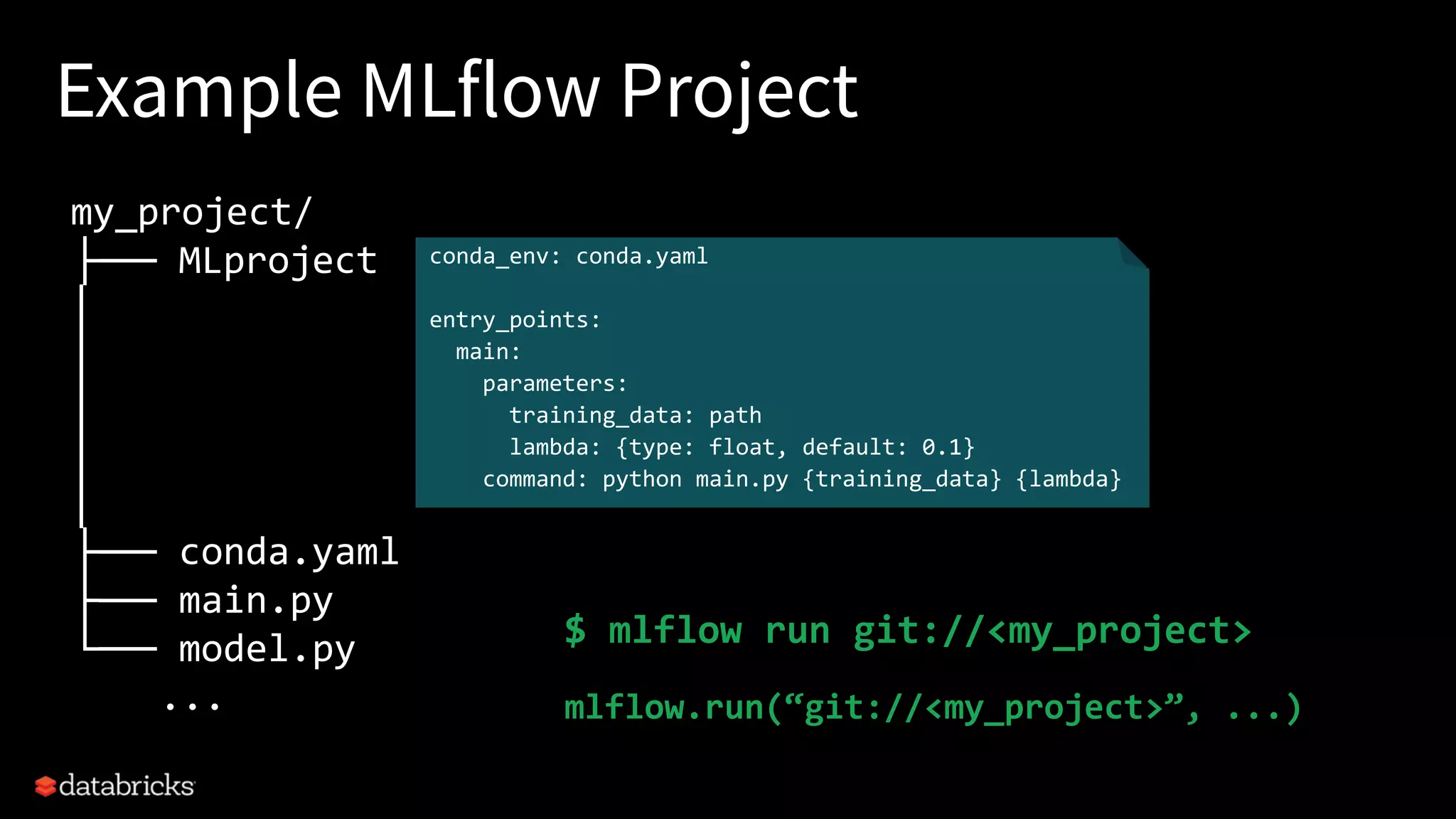

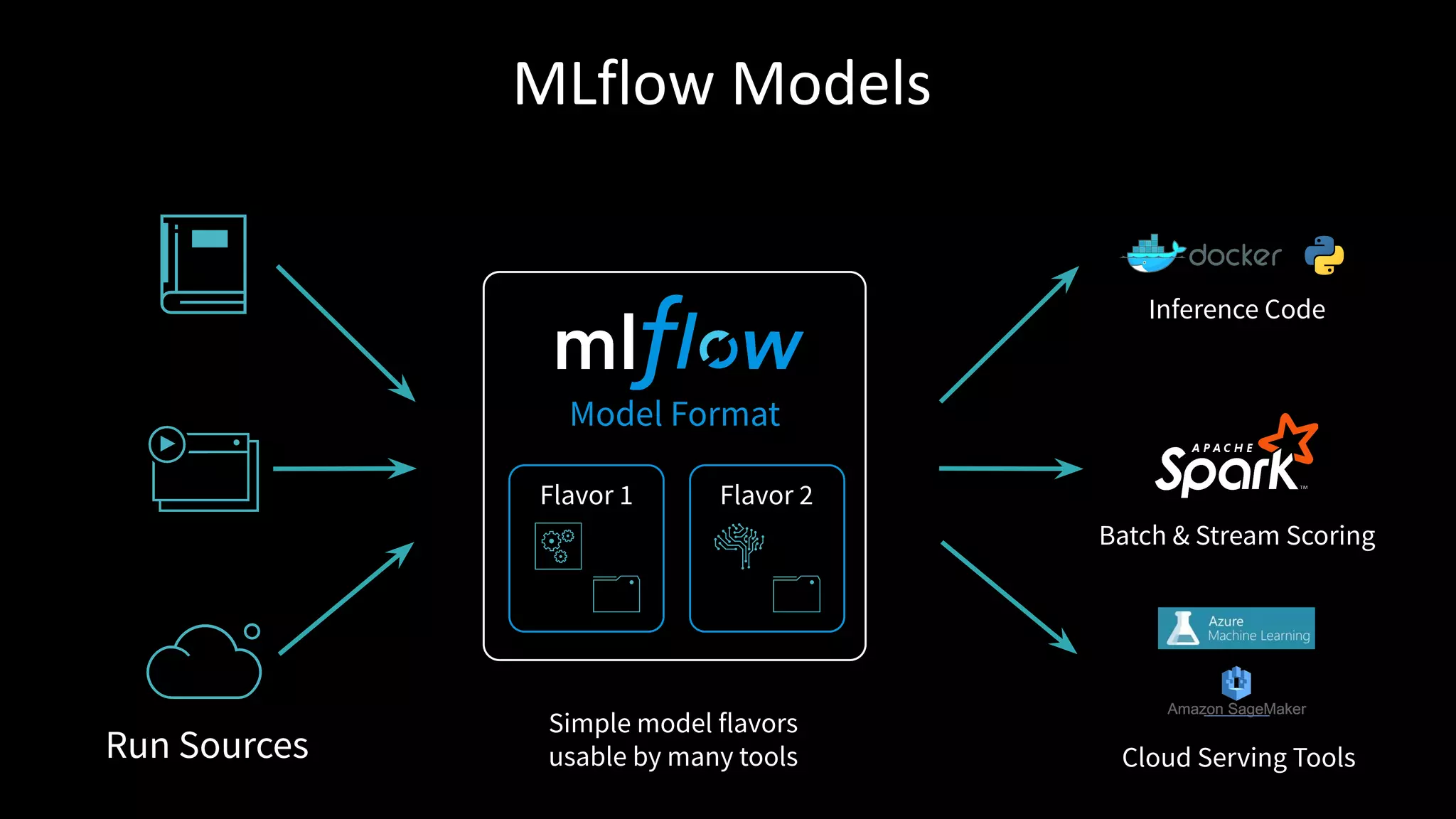

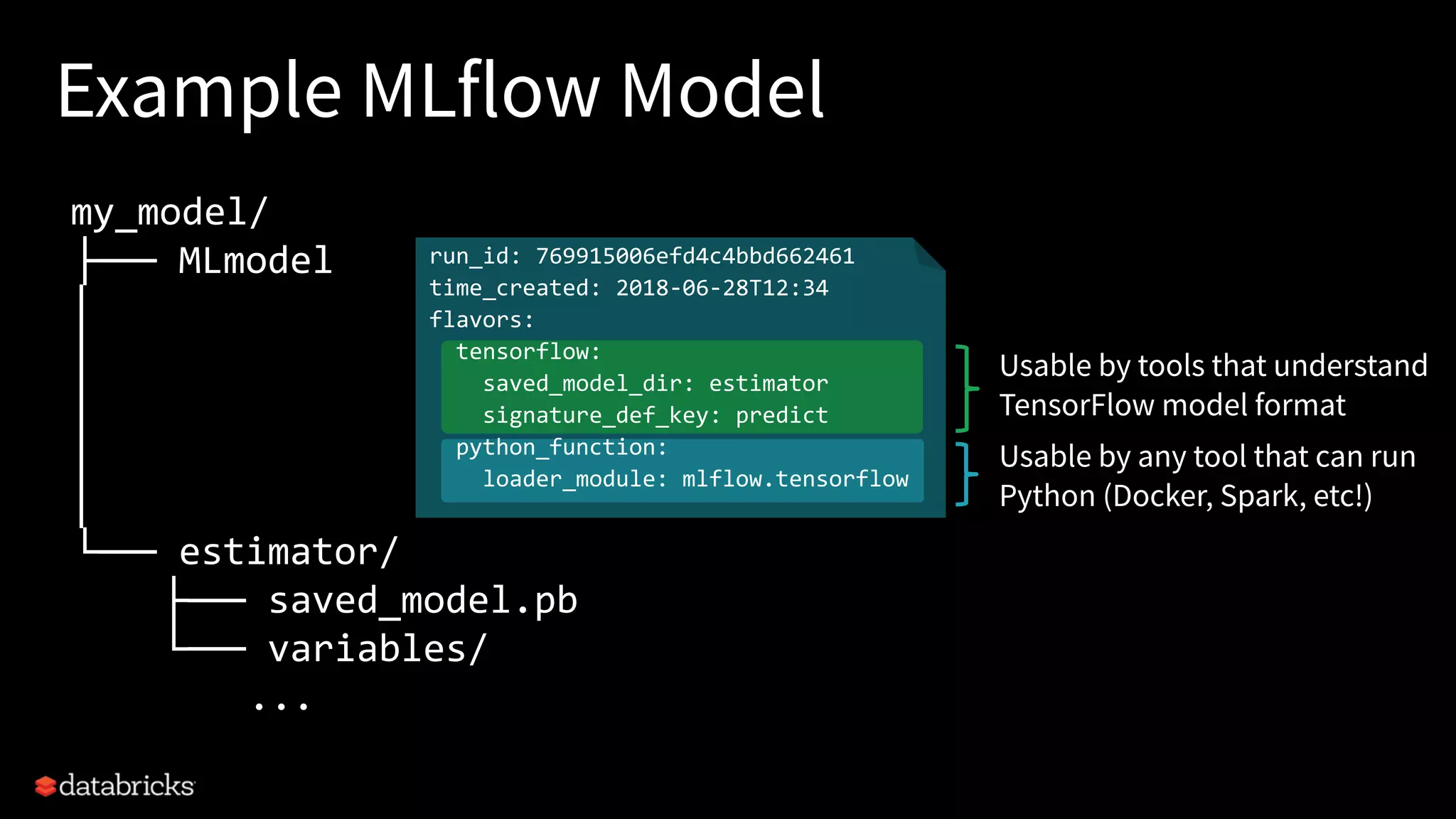

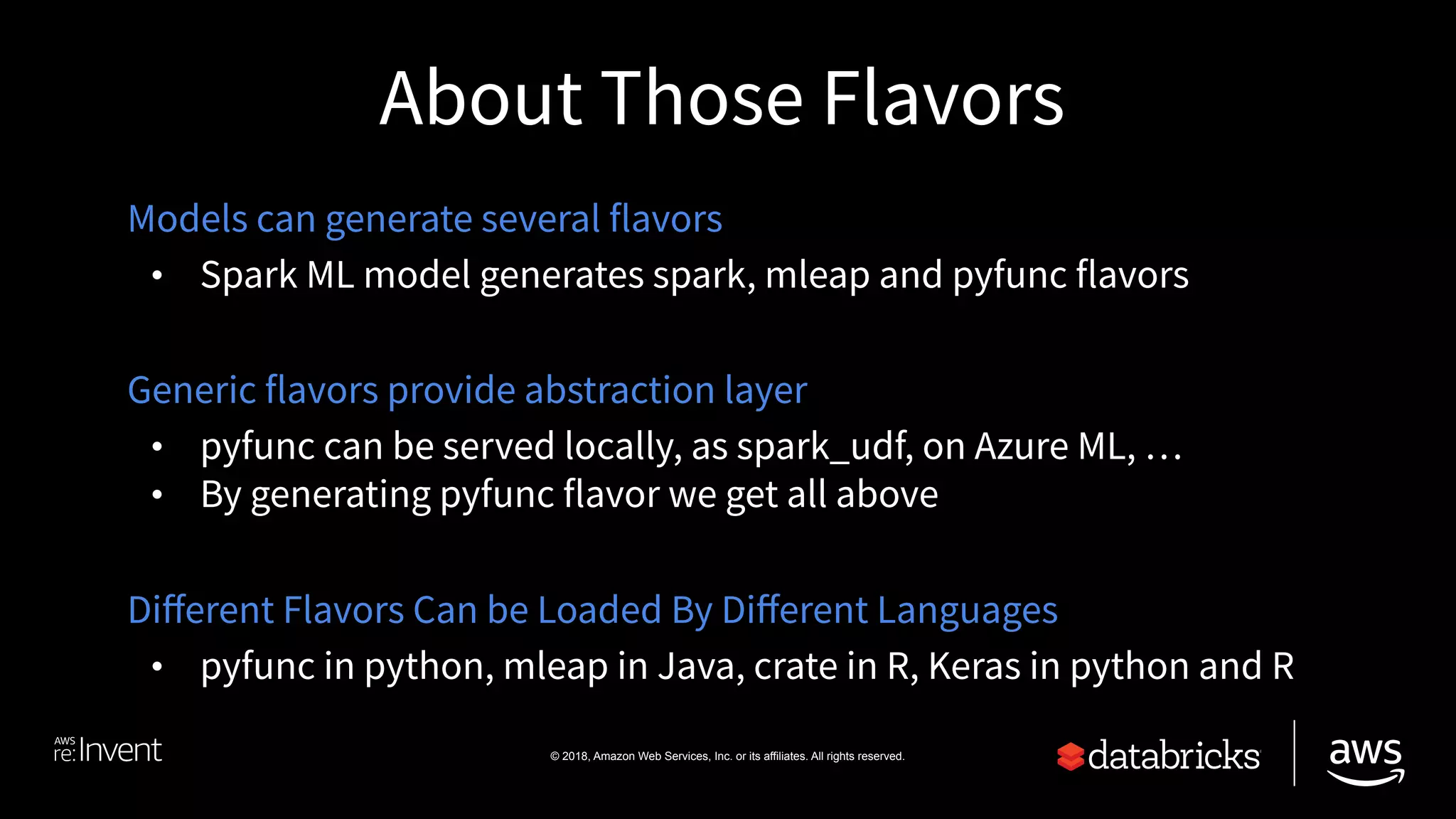

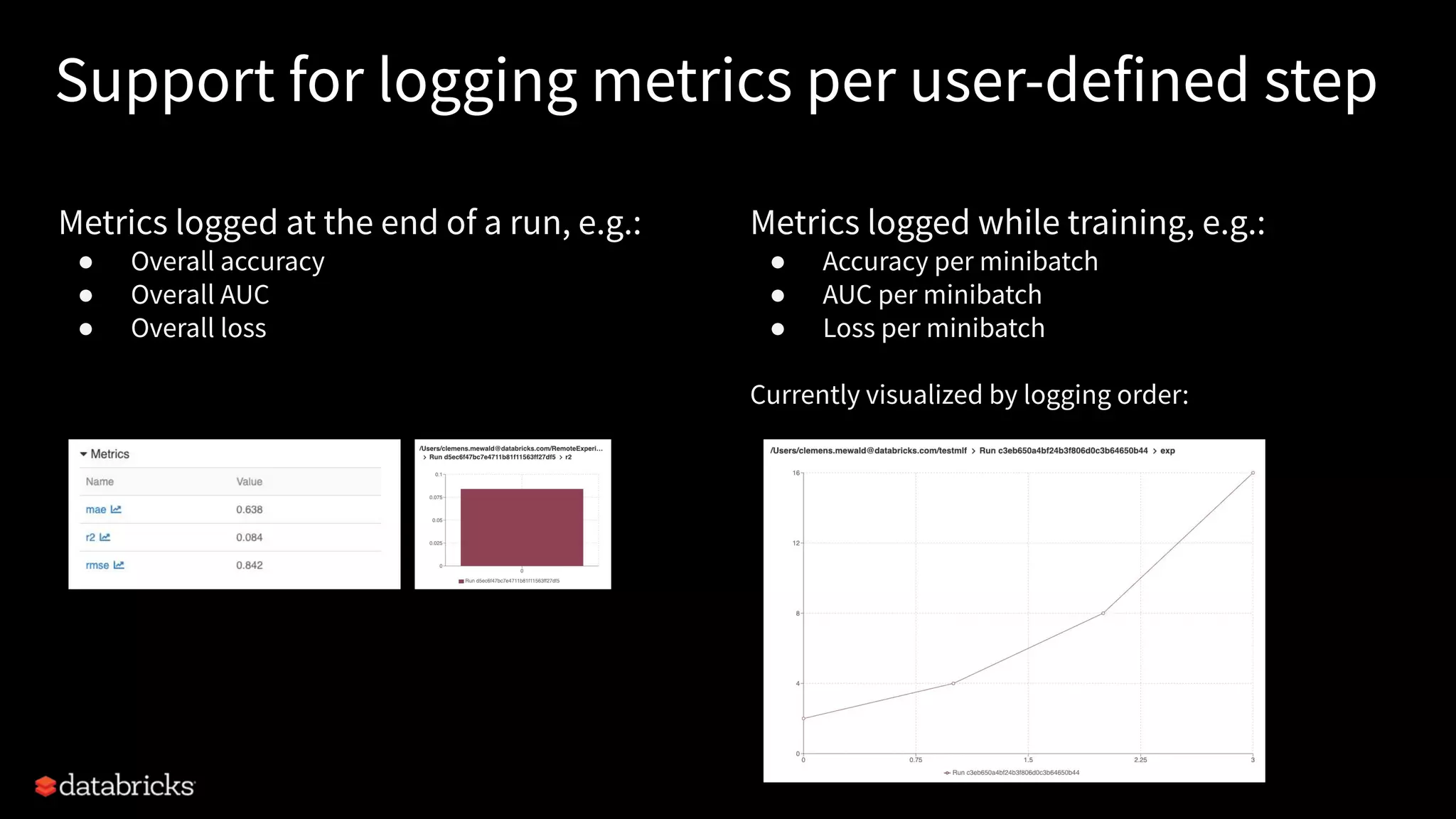

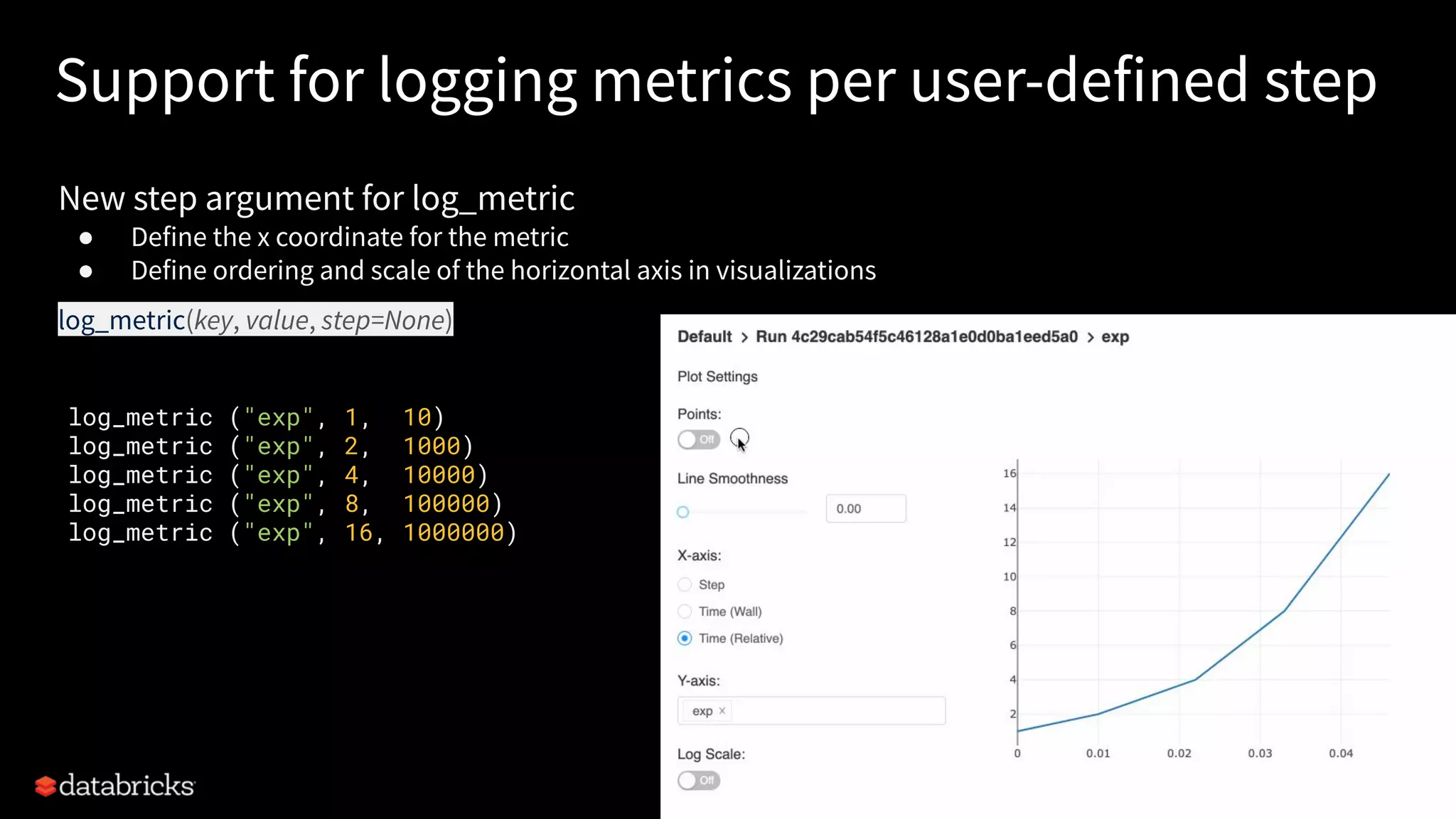

The document outlines the components of MLflow, an open-source platform for managing the machine learning lifecycle: experiment tracking, project packaging, and a model format that supports diverse deployment tools. It also highlights updates in version 1.0, including metric logging per user-defined step and improved search.

![Selected New Features in MLflow 1.0

• Support for logging metrics per user-defined step

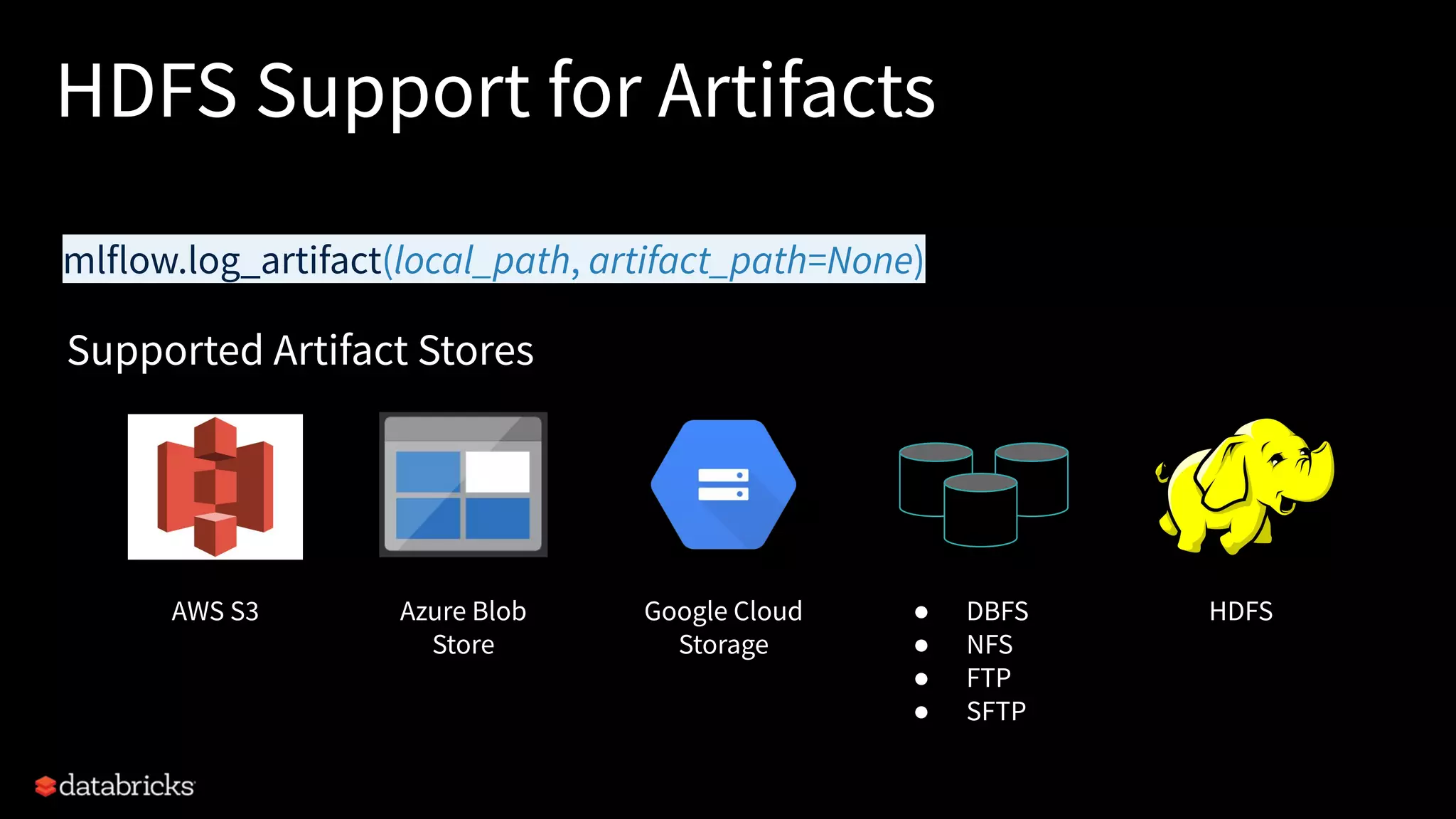

• HDFS support for artifacts

• ONNX Model Flavor [experimental]

• Deploying an MLflow Model as a Docker Image [experimental]](https://image.slidesharecdn.com/mlflowparisdataengmeetup-190729154707/75/Utilisation-de-MLflow-pour-le-cycle-de-vie-des-projet-Machine-learning-34-2048.jpg)
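Logging metrics per user-defined step, the first feature listed, can be sketched as follows. This is a minimal illustration, assuming `mlflow.log_metric(key, value, step=...)` as the logging call; the helper `log_curve`, the metric name `loss`, and the loss values are hypothetical. The logger is passed in as an argument so the sketch runs without MLflow or a tracking server.

```python
from typing import Callable, Iterable, List, Tuple


def log_curve(log_metric: Callable[..., None], key: str,
              values: Iterable[float], every: int = 1) -> None:
    """Log each value under `key`, tagged with an explicit step.

    `log_metric` must accept (key, value, step=...), which is the shape
    of mlflow.log_metric; any callable with that shape works.
    """
    for i, value in enumerate(values):
        log_metric(key, value, step=i * every)


# Stand-in recorder (hypothetical) so the sketch runs without MLflow.
recorded: List[Tuple[str, float, int]] = []


def fake_log_metric(key: str, value: float, step: int = 0) -> None:
    recorded.append((key, value, step))


# Hypothetical losses, one logged every 100 training iterations.
log_curve(fake_log_metric, "loss", [0.9, 0.5, 0.3], every=100)
```

With MLflow installed, passing `mlflow.log_metric` inside an active run in place of `fake_log_metric` should record the same points against the chosen step values rather than against wall-clock time alone.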

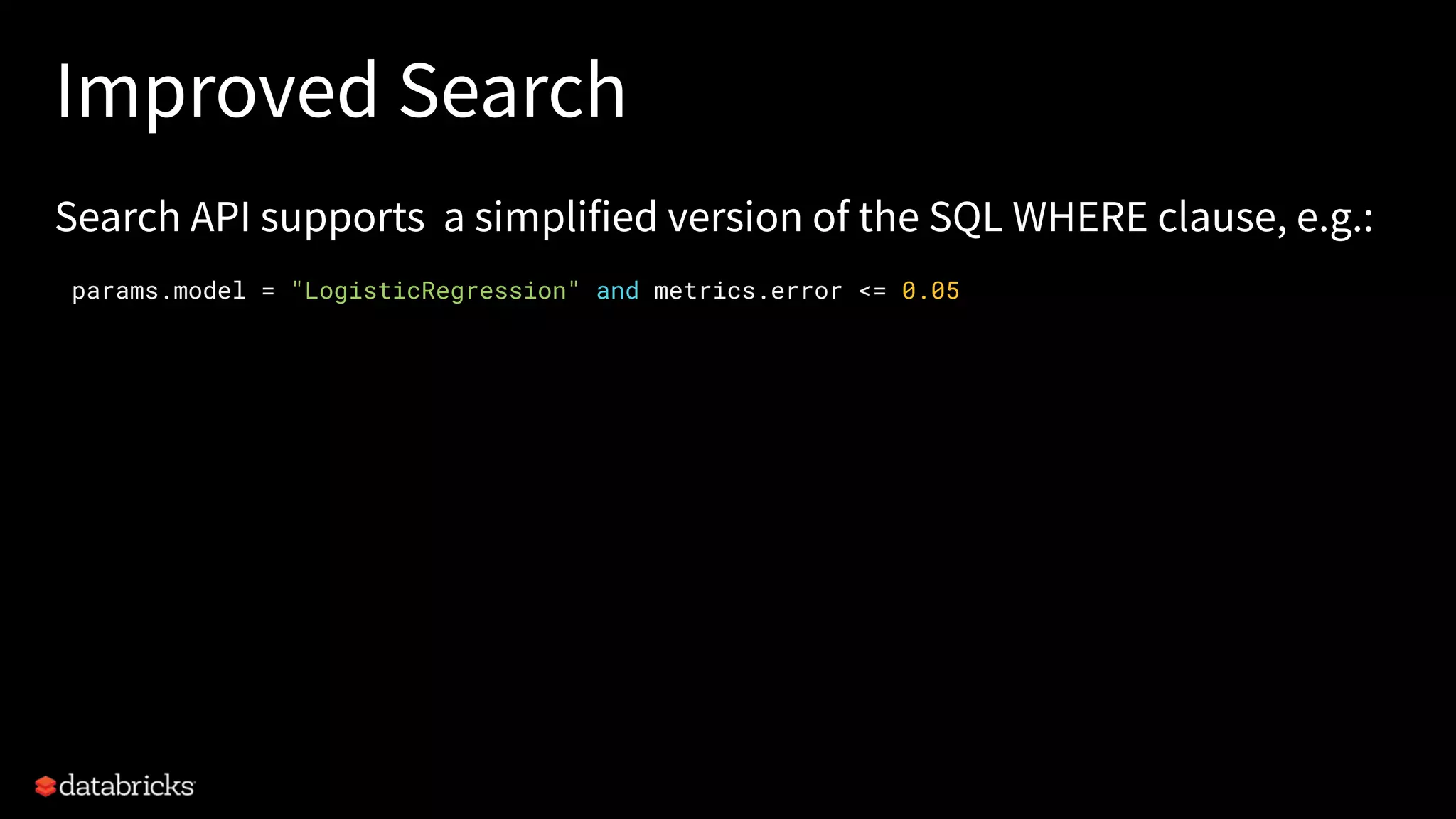

![Improved Search](https://image.slidesharecdn.com/mlflowparisdataengmeetup-190729154707/75/Utilisation-de-MLflow-pour-le-cycle-de-vie-des-projet-Machine-learning-38-2048.jpg)

The Search API supports a simplified version of the SQL WHERE clause.

UI example:

    params.model = "LogisticRegression" and metrics.error <= 0.05

Python API example:

    from mlflow.entities import ViewType
    from mlflow.tracking import MlflowClient

    client = MlflowClient()

    # Collect every experiment ID (list_experiments() is the MLflow 1.x call).
    all_experiments = [exp.experiment_id for exp in client.list_experiments()]

    # The two adjacent string literals are concatenated into one filter.
    runs = client.search_runs(
        all_experiments,
        "params.model='LogisticRegression'"
        " and metrics.error<=0.05",
        ViewType.ALL)
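To make the filter semantics concrete, here is a toy evaluator for this kind of expression. It is a sketch only, not MLflow's parser: the grammar (clauses joined by `and`, each comparing a `params.` or `metrics.` key to a quoted string or a number), the names `parse_filter` and `matches`, and the sample runs are all assumptions made for illustration.

```python
import operator
import re

# Comparison operators accepted by this toy evaluator.
OPS = {"=": operator.eq, "!=": operator.ne, "<": operator.lt,
       "<=": operator.le, ">": operator.gt, ">=": operator.ge}

# One clause: <params|metrics>.<key> <op> <quoted string | number>
CLAUSE = re.compile(
    r"""^\s*(params|metrics)\.(\w+)\s*(<=|>=|!=|=|<|>)\s*
        (?:'([^']*)'|"([^"]*)"|([-+]?\d+(?:\.\d+)?))\s*$""",
    re.VERBOSE)


def parse_filter(expr):
    """Split on 'and' and parse each clause into (kind, key, op, value).

    Limitation: a quoted value containing ' and ' would be split wrongly.
    """
    clauses = []
    for part in re.split(r"\s+and\s+", expr.strip(), flags=re.IGNORECASE):
        m = CLAUSE.match(part)
        if m is None:
            raise ValueError(f"cannot parse clause: {part!r}")
        kind, key, op, sq, dq, num = m.groups()
        value = float(num) if num is not None else (sq if sq is not None else dq)
        clauses.append((kind, key, OPS[op], value))
    return clauses


def matches(run, expr):
    """Return True if the run dict satisfies every clause of the filter."""
    for kind, key, op, value in parse_filter(expr):
        if key not in run[kind] or not op(run[kind][key], value):
            return False
    return True


# Hypothetical runs, represented as plain dicts.
runs = [
    {"params": {"model": "LogisticRegression"}, "metrics": {"error": 0.03}},
    {"params": {"model": "LogisticRegression"}, "metrics": {"error": 0.20}},
    {"params": {"model": "RandomForest"}, "metrics": {"error": 0.01}},
]
query = "params.model = 'LogisticRegression' and metrics.error <= 0.05"
selected = [r for r in runs if matches(r, query)]
```

Only the first run is selected: the second fails the error threshold and the third fails the model comparison. Every clause must hold, which is why `and` is the only connective this sketch needs.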

![ONNX Model Flavor (experimental)](https://image.slidesharecdn.com/mlflowparisdataengmeetup-190729154707/75/Utilisation-de-MLflow-pour-le-cycle-de-vie-des-projet-Machine-learning-41-2048.jpg)

ONNX models are exported in both:

• ONNX native format

• Pyfunc

    mlflow.onnx.load_model(model_uri)
    mlflow.onnx.log_model(onnx_model, artifact_path, conda_env=None)
    mlflow.onnx.save_model(onnx_model, path, conda_env=None,
                           mlflow_model=<mlflow.models.Model object>)

Supported Model Flavors: Scikit, TensorFlow, MLlib, H2O, PyTorch, Keras, MLeap, Python Function, R Function, ONNX

![Docker Build (experimental)](https://image.slidesharecdn.com/mlflowparisdataengmeetup-190729154707/75/Utilisation-de-MLflow-pour-le-cycle-de-vie-des-projet-Machine-learning-42-2048.jpg)

    $ mlflow models build-docker -m "runs:/some-run-uuid/my-model" -n "my-image-name"
    $ docker run -p 5001:8080 "my-image-name"

Builds a Docker image whose default entrypoint serves the specified MLflow model at port 8080 within the container.
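With the `docker run` mapping shown, port 5001 on the host forwards to port 8080 in the container. The sketch below builds, without sending, a scoring request for that server. It assumes the scoring server of this MLflow generation: a `/invocations` endpoint accepting a pandas "split"-oriented JSON payload. Both are assumptions to check against the documentation of the installed version, since later releases changed the payload format. The helper `build_scoring_request` and the feature names are hypothetical.

```python
import json
import urllib.request


def build_scoring_request(columns, rows, host="localhost", port=5001):
    """Build (but do not send) a POST request for the model server.

    Assumes predictions are served at /invocations and that the server
    accepts a pandas "split"-oriented JSON body.
    """
    payload = json.dumps({"columns": columns, "data": rows}).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}:{port}/invocations",
        data=payload,
        headers={"Content-Type": "application/json; format=pandas-split"},
        method="POST",
    )


# Hypothetical feature names and values, for illustration only.
request = build_scoring_request(["x1", "x2"], [[0.5, 1.0], [2.0, 3.5]])

# To actually score, with the container running:
# with urllib.request.urlopen(request) as response:
#     predictions = json.loads(response.read())
```

Building the request separately from sending it keeps the payload easy to inspect before any container is running.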