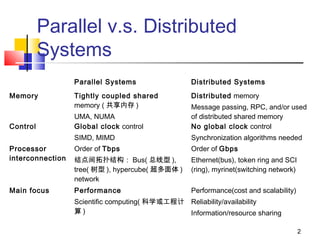

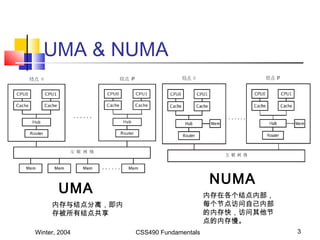

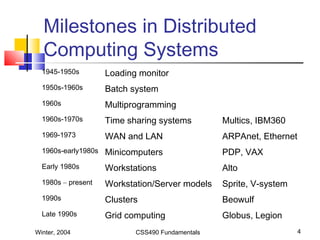

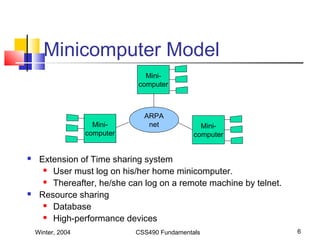

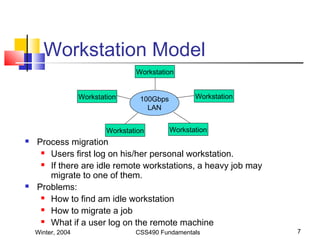

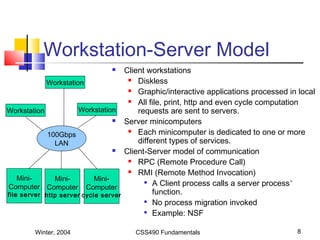

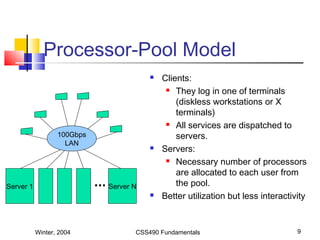

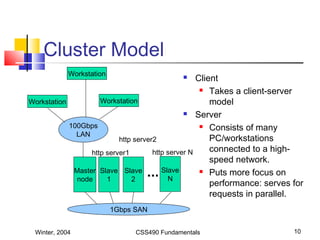

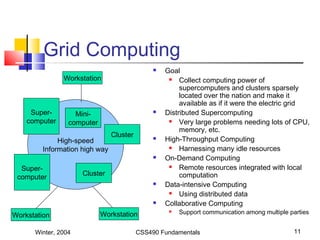

This document compares and contrasts parallel and distributed computing systems. Parallel systems are tightly coupled, with shared memory and control by a global clock, whereas distributed systems have distributed memory and no global clock. Milestones in distributed computing include time-sharing systems (1960s–1970s), computer networks (1960s–1970s), minicomputers (1960s–early 1980s), and cluster and grid computing (1990s–present). Common system models are the minicomputer, workstation, workstation-server, processor-pool, cluster, and grid computing models.
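The memory contrast above can be sketched as a rough single-machine analogy. This is not a real distributed system. In the first function, threads coordinate by updating one shared variable under a lock, in the style of a tightly coupled parallel system. In the second, each worker keeps only private state and reports its result as a message, in the style of a distributed system. The function names and the `queue.Queue` standing in for a network link are illustrative assumptions. The queue itself still lives in shared process memory, and the sketch models no clocks at all.

```python
import threading
import queue


def shared_memory_sum(chunks):
    """Parallel-style: workers update one shared variable guarded by a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)
        with lock:  # coordination happens through shared memory
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks):
    """Distributed-style (analogy): private state per worker, results sent as messages."""
    inbox = queue.Queue()  # stands in for a network link (assumption)

    def worker(node_id, chunk):
        local = sum(chunk)  # private to this worker; nothing else reads it
        inbox.put((node_id, local))  # "send" a message to the coordinator

    threads = [
        threading.Thread(target=worker, args=(i, c)) for i, c in enumerate(chunks)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # The coordinator only learns results from the messages it receives.
    return sum(inbox.get()[1] for _ in chunks)


data = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
print(shared_memory_sum(data))    # 45
print(message_passing_sum(data))  # 45
```

Both functions compute the same result. The difference lies in where the state lives and how the workers coordinate.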