This document introduces parallel computing, covering topics such as:

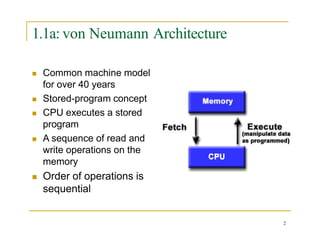

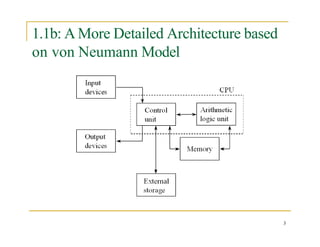

- The von Neumann architecture and its limitations for modern computing needs

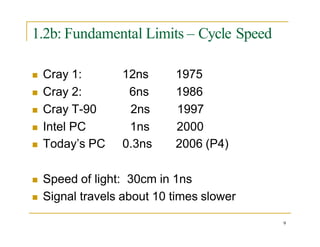

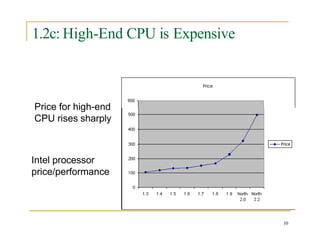

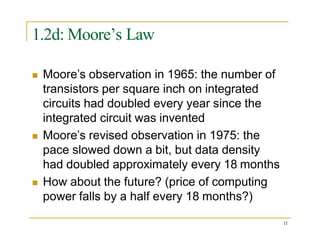

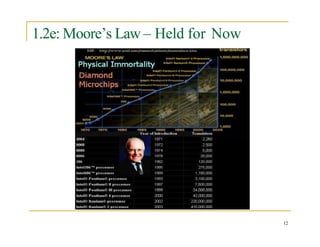

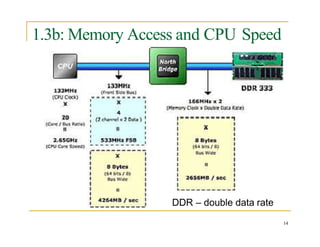

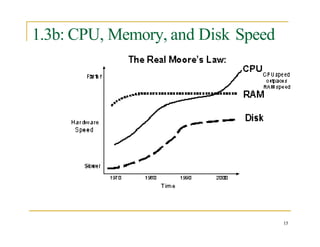

- Motivations for parallel computing, such as fundamental limits on CPU speed and the growing disparity between CPU and memory speeds

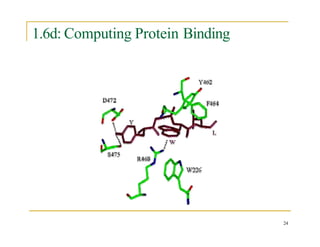

- Examples of applications that benefit greatly from large-scale parallel computing, such as semiconductor simulation, drug design, and weather forecasting

- Issues involved in parallel computing, such as designing efficient parallel algorithms and developing programming tools

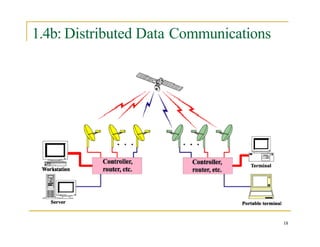

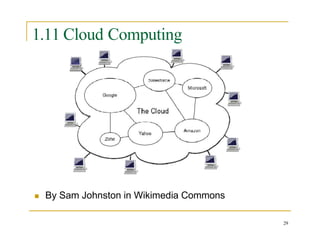

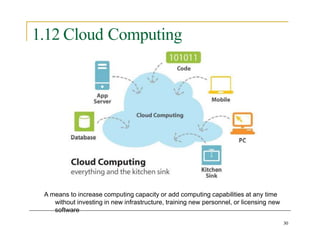

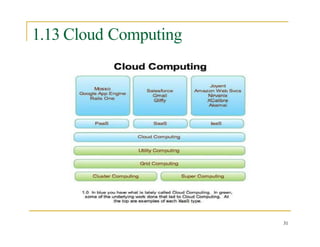

- Models of parallel and distributed computing, such as message passing with the Message Passing Interface (MPI), cloud computing, and utility computing