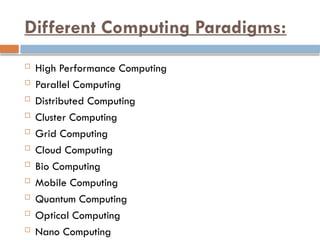

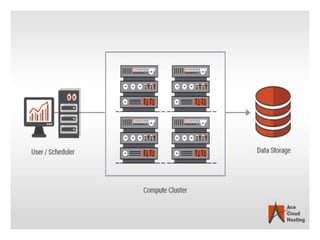

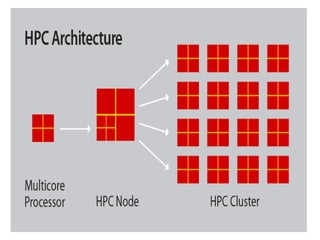

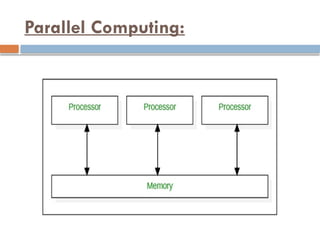

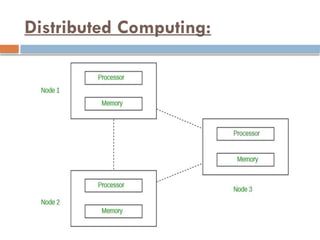

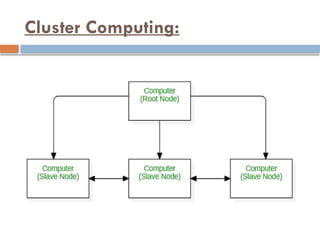

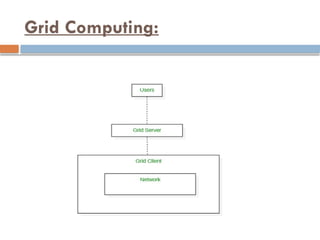

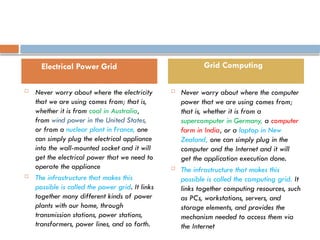

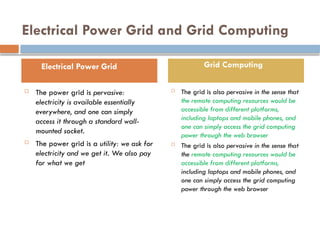

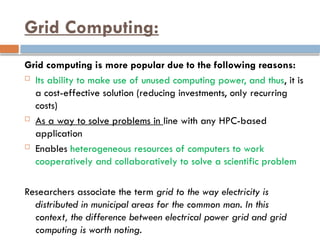

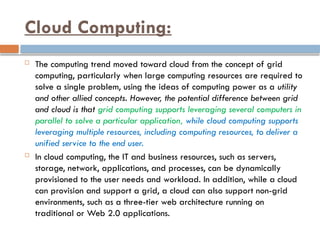

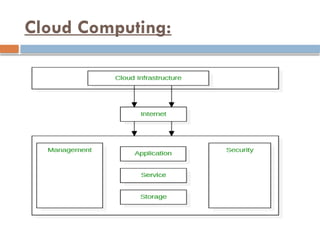

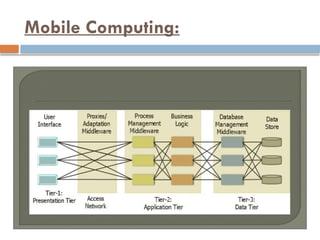

The document surveys a range of computing paradigms: high-performance computing (HPC), parallel computing, distributed computing, cluster computing, grid computing, cloud computing, bio computing, mobile computing, quantum computing, optical computing, and nano computing. It highlights the importance of HPC for scientific advances and complex problem-solving across fields, and explains how different computing systems can work independently or collaboratively. It also outlines the evolution from grid computing to cloud computing and contrasts their functions and uses.