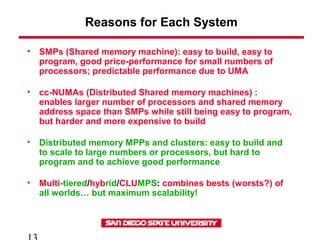

Uniform memory access (UMA) systems give every processor the same access time to all of memory. Non-uniform memory access (NUMA) systems do not: a processor reaches its local memory faster than memory attached to a remote node. Distributed shared memory systems with cache coherence (cc-NUMA) consist of processors that each have local memory but together present a single global address space. Because the same memory location can be cached on more than one node, cache coherence must be enforced across the interconnection network as well as within each node, so that every processor sees updated values.
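The interaction between local/remote latency and coherence can be illustrated with a toy model. This is a minimal sketch, not a description of any real machine: the class `ToyCcNuma`, the latency constants, the modulo home-node mapping, and the write-through, write-invalidate policy are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical latencies, chosen only to make local vs. remote visible.
LOCAL_NS = 100    # access to memory on the requesting node
REMOTE_NS = 300   # access to memory on another node, over the network


@dataclass
class Node:
    node_id: int
    memory: dict = field(default_factory=dict)  # addresses homed on this node
    cache: dict = field(default_factory=dict)   # cached copies of any address


class ToyCcNuma:
    """Toy global address space spread over several nodes (illustrative only)."""

    def __init__(self, num_nodes, coherent=True):
        self.nodes = [Node(i) for i in range(num_nodes)]
        self.coherent = coherent

    def home(self, addr):
        # Assumed mapping: each address is homed on node (addr mod num_nodes).
        return self.nodes[addr % len(self.nodes)]

    def read(self, node_id, addr):
        """Return (value, latency_ns) as seen by the requesting node."""
        node = self.nodes[node_id]
        if addr in node.cache:
            return node.cache[addr], 0  # cache hit; cache latency ignored
        home = self.home(addr)
        value = home.memory.get(addr, 0)  # unwritten memory reads as 0
        node.cache[addr] = value
        latency = LOCAL_NS if home is node else REMOTE_NS
        return value, latency

    def write(self, node_id, addr, value):
        """Write through to the home node and update the writer's cache."""
        node = self.nodes[node_id]
        self.home(addr).memory[addr] = value
        node.cache[addr] = value
        if self.coherent:
            # Write-invalidate: drop every other node's cached copy.
            for other in self.nodes:
                if other is not node:
                    other.cache.pop(addr, None)


def stale_read_demo(coherent):
    """Node 1 caches address 0, node 0 overwrites it, node 1 reads again."""
    system = ToyCcNuma(num_nodes=2, coherent=coherent)
    system.read(1, 0)         # node 1 caches the initial value (remote read)
    system.write(0, 0, 42)    # node 0 updates the location
    return system.read(1, 0)  # what node 1 observes afterwards
```

Under these assumptions, `stale_read_demo(coherent=True)` returns `(42, 300)`: the invalidation forces node 1 to fetch the new value from the remote home node. With `coherent=False` it returns `(0, 0)`, a fast but stale cache hit, which is the failure that coherence enforcement across the network prevents.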