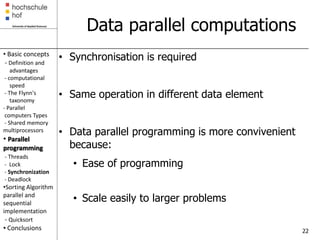

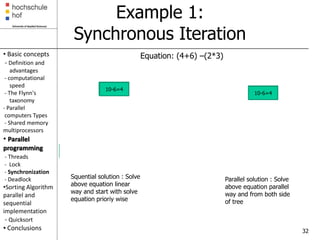

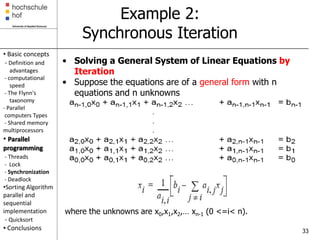

This document discusses parallel programming concepts, including threads, synchronization, and barriers. It defines parallel programming as carrying out many calculations simultaneously. The advantages include increased computational power and speedup. Key issues in parallel programming are sharing resources between threads and ensuring synchronization through locks and barriers. It also covers data parallel programming, in which the same operation is performed on different data elements simultaneously.

![Example

• Basic concepts

- Definition and •For(i=0;i<n;i++)

advantages

- computational •a[i]=a[i]+k

speed

- The Flynn's

taxonomy a[]=a[]+

- Parallel

computers Types k;

- Shared memory

multiprocessors

• Parallel

programming

- Threads

- Lock

- Synchronization a[n-1]=a[n-

a[0]=a[0]+k a[1]=a[1]+k

- Deadlock 1]+k

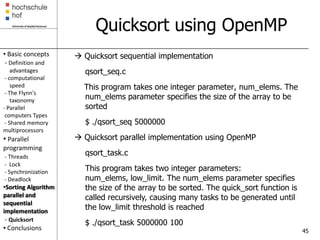

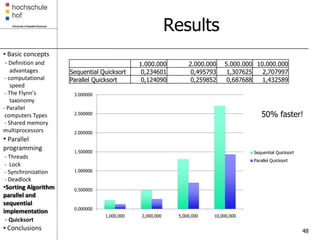

•Sorting Algorithm

parallel and

sequential

implementation

A[0]

A[1] A[n-1]

- Quicksort

• Conclusions 24](https://image.slidesharecdn.com/parallelprogramming-13452777741194-phpapp01-120818033618-phpapp01/85/Parallel-Programming-24-320.jpg)
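As a rough illustration of the loop on this slide, the sketch below writes `a[i] = a[i] + k` as a C function and marks the loop with an OpenMP `parallel for` directive. OpenMP is an assumption (the slides do not name a threading library), and the function name `add_scalar` is hypothetical.

```c
#include <assert.h>

/* Adds k to every element of a[0..n-1].
 * Each iteration touches only its own element, so the iterations are
 * independent and can be executed in any order or at the same time. */
void add_scalar(double *a, int n, double k)
{
    assert(n >= 0);

    /* Assumption: OpenMP. Built with -fopenmp (GCC/Clang) the iterations are
     * divided among threads; without that flag the pragma is ignored and the
     * loop simply runs sequentially, with the same result. */
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        a[i] = a[i] + k;
    }
}
```

Calling `add_scalar(a, 5, 10.0)` on an array holding 1, 2, 3, 4, 5 leaves 11, 12, 13, 14, 15 in `a`, whether or not the loop actually ran on several threads.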

![Barrier Requirement

• Basic concepts

- Definition and

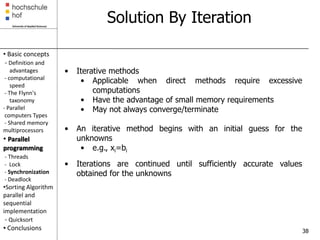

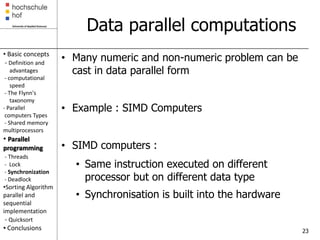

• Data parallel technique is applied to Multiprocessor

advantages or Multicomputer .

- computational

speed

- The Flynn's

taxonomy

- Parallel • The whole construct should not be completed before

computers Types

- Shared memory the instances thus a barrier is required .

multiprocessors

• Parallel

programming

- Threads

- Lock

• Forall(i=0; i<n;i++)

- Synchronization

- Deadlock a[i]=a[i] +k;

•Sorting Algorithm

parallel and

sequential

implementation

- Quicksort

• Conclusions 25](https://image.slidesharecdn.com/parallelprogramming-13452777741194-phpapp01-120818033618-phpapp01/85/Parallel-Programming-25-320.jpg)
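To make the barrier requirement concrete, here is a minimal sketch, again assuming OpenMP, which the slides do not prescribe. The second phase and the names `add_then_reverse` and `out` are hypothetical additions: they exist only to show a later step that reads elements written by other threads, which is exactly the situation in which the `forall` must be complete before execution continues.

```c
#include <assert.h>

/* Phase 1 adds k to every a[i] (the forall body).
 * Phase 2 writes the updated array, reversed, into out[].
 * Phase 2 reads elements that other threads wrote in phase 1, so no thread
 * may start phase 2 until every thread has finished phase 1. */
void add_then_reverse(double *a, double *out, int n, double k)
{
    assert(n >= 0);

    #pragma omp parallel
    {
        /* nowait drops the implicit barrier at the end of this loop, so the
         * explicit barrier below is the only synchronization point. */
        #pragma omp for nowait
        for (int i = 0; i < n; i++)
            a[i] = a[i] + k;

        /* Every thread waits here until all threads have finished phase 1. */
        #pragma omp barrier

        /* Safe only because of the barrier above. */
        #pragma omp for
        for (int i = 0; i < n; i++)
            out[i] = a[n - 1 - i];
    }
}
```

Removing the `#pragma omp barrier` line would allow a thread to read `a[n - 1 - i]` before another thread has added `k` to it. OpenMP normally places an implicit barrier at the end of a work-sharing loop; the sketch suppresses it with `nowait` only to make the synchronization point visible.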

![Sequential Code

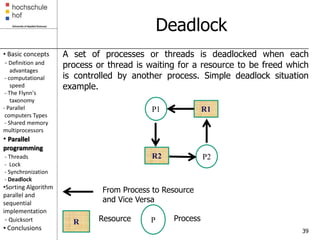

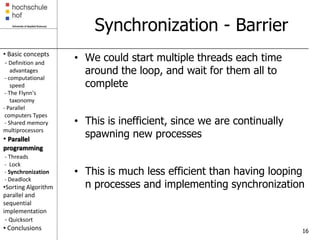

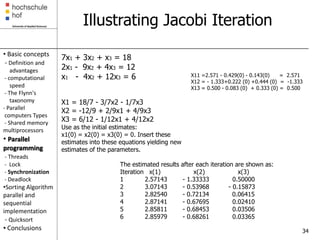

• Basic concepts 7x1 + 3x2 + x3 = 18

for (i=0; i<n; i++) 2x1 - 9x2 + 4x3 = 12

- Definition and

advantages x[i] = b[i]; x1 - 4x2 + 12x3 = 6

- computational for (iter = 0; iter < limit;

Use as the initial estimates:

speed

- The Flynn's

iter++){ x1(0) = x2(0) = x3(0) = 0. Insert these

taxonomy for (i=0; i<n; i++){ estimates into these equations yielding new

estimates of the parameters.

- Parallel sum = 0;

computers Types

- Shared memory

for (j=0; j<n; j++) Iteration 1:

newx[0] = (18 – 0)/7 = 2.571

multiprocessors if (i != j)

• Parallel sum = sum + newx[1] = - (12 – 0)/9 = -1.333

programming a[i][j]*x[j]; newx[2] = (6 – 0)/12 = 0.500

- Threads

- Lock x1(1) = 2.571 x2(1) = -1.333 x3(1) = 0.500

- Synchronization newx[i] = (b[i]- sum)/a[i][i]

- Deadlock Iteration 2:

•Sorting Algorithm } newx[0] = 2.571 +0.500357= 3.071

parallel and

sequential for (i=0; i<n; i++)

newx[1] = -1.333+0.792762 = -

implementation x[i] = newx[i]; 0.540

- Quicksort } newx[2] = 0.500 -0.657282 = - 0.158

• Conclusions 35](https://image.slidesharecdn.com/parallelprogramming-13452777741194-phpapp01-120818033618-phpapp01/85/Parallel-Programming-35-320.jpg)
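The two inner loops of the sequential code evaluate one update per unknown. Written as a formula, it reads as follows; the superscript iteration index follows the slide's $x_1^{(0)}$ notation, and $a_{ij}$, $b_i$ stand for the arrays `a` and `b`. This is the Jacobi iteration, a name the slide itself does not use.

$$
x_i^{(k+1)} = \frac{1}{a_{ii}} \Bigl( b_i - \sum_{j \ne i} a_{ij}\, x_j^{(k)} \Bigr), \qquad i = 1, \dots, n
$$

Applied to the first equation of the example:

$$
x_1^{(1)} = \frac{18 - 3 \cdot 0 - 1 \cdot 0}{7} \approx 2.571, \qquad
x_1^{(2)} = \frac{18 - 3 \cdot (-1.333) - 1 \cdot 0.500}{7} = \frac{21.5}{7} \approx 3.071
$$

Every $x_i^{(k+1)}$ depends only on values from iteration $k$, which is why the updates within one iteration can be computed independently of each other.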

![Parallel Code

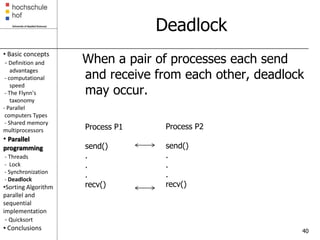

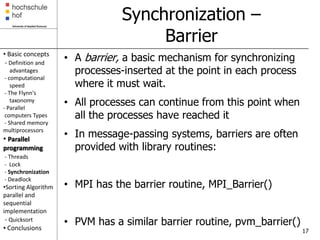

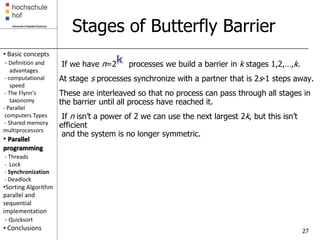

• Suppose we have a process Pi for each unknown xi; the code

for process Pi may be:

• Basic concepts x[i] = b[i]

- Definition and Iteration 1

for (iter = 0; iter < limit; iter++) Broadcast_Rv

advantages {

X1[0]=2.571 X1[0]=2.571

- computational sum = -a[i][i] * x[i];

speed for (j = 0; j < n; j++) X1[1]=-1.333 X1[1]=-

- The Flynn's sum = sum + a[i][j] * x[j]; 1.333

taxonomy new_x[i] = (b[i] - sum) /a[i][i]; X1[2]=0.5 X1[2]=0.5

- Parallel broadcast_receive(&new_x[i]); Iteration 2 X2[0] =

computers Types global_barrier(); 3.071

- Shared memory } X2[0] = 3.071 X2[1]= -

multiprocessors • broadcast receive() is used 0.540

X2[1]= - X2[2]=-

• Parallel here 0.540 0.158

programming (1) to send the newly computed

X2[2]=- X3[0] =

- Threads value of x[i] from process Pi to 2.825

0.158

every other process and (2) to X3[1]= -

- Lock Iteration 3

collect data broadcasted from any 0.721

- Synchronization

other processes to process Pi. X2[0] = X3[2]=

- Deadlock 2.825 0.064

•Sorting Algorithm X2[1]= -

parallel and 0.721

sequential X2[2]=-

implementation 0.064

- Quicksort

• Conclusions Recieve Send

36](https://image.slidesharecdn.com/parallelprogramming-13452777741194-phpapp01-120818033618-phpapp01/85/Parallel-Programming-36-320.jpg)
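The per-process code above relies on `broadcast_receive()` and `global_barrier()`, which the slide does not define. As a runnable stand-in, the sketch below swaps message passing for shared memory: an OpenMP `parallel for` (an assumption, not the slide's method) lets each loop iteration play the role of process Pi, the shared array `new_x` replaces the broadcast, and the implicit barrier at the end of the loop replaces `global_barrier()`. The name `jacobi_parallel` and the fixed size `N` are hypothetical. The caller supplies the initial estimates, since the slide's code starts from `x[i] = b[i]` while its worked numbers start from zero.

```c
#include <assert.h>

#define N 3  /* number of unknowns in the slide's example system */

/* Runs `limit` iterations on the system a x = b.
 * x holds the initial estimates on entry and the latest estimates on exit. */
void jacobi_parallel(double a[N][N], const double b[N], double x[N], int limit)
{
    double new_x[N];
    assert(limit >= 0);

    for (int iter = 0; iter < limit; iter++) {
        /* Each iteration i does the work of process Pi. Built without
         * -fopenmp the pragma is ignored and the loop runs sequentially. */
        #pragma omp parallel for
        for (int i = 0; i < N; i++) {
            /* Start at -a[i][i]*x[i] so the full sum over j below
             * effectively skips the diagonal term, as in the slide. */
            double sum = -a[i][i] * x[i];
            for (int j = 0; j < N; j++)
                sum = sum + a[i][j] * x[j];
            new_x[i] = (b[i] - sum) / a[i][i];
        }
        /* The implicit barrier at the end of the parallel loop guarantees
         * that every new_x[i] is written before any of them is copied. */
        for (int i = 0; i < N; i++)
            x[i] = new_x[i];
    }
}
```

With the example system and zero initial estimates, one iteration gives approximately 2.571, -1.333 and 0.5, and three iterations give approximately 2.825, -0.721 and 0.064, matching the values listed for the slide.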