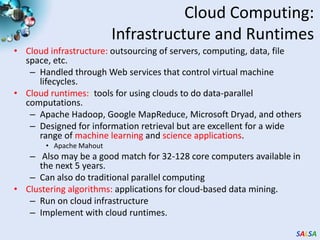

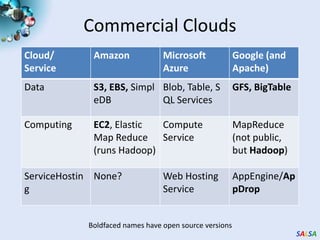

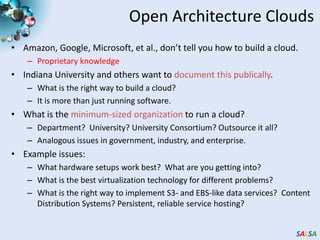

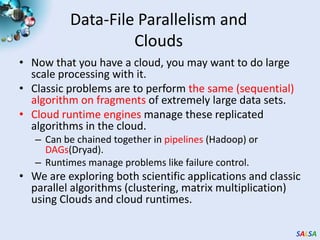

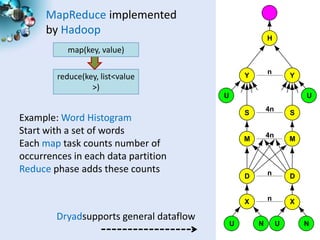

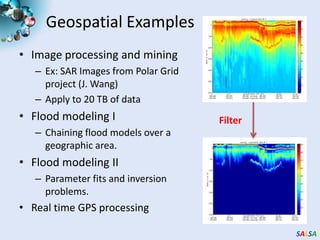

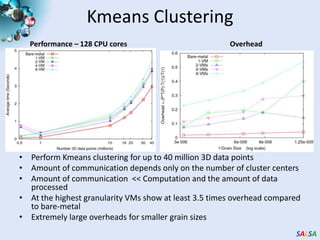

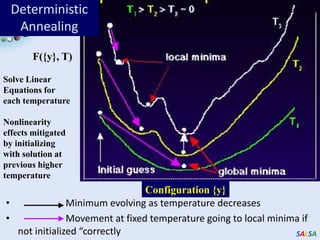

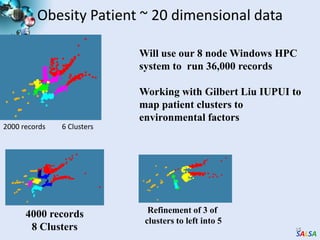

Cloud computing offers outsourced computing infrastructure together with data-parallel processing tools such as Hadoop and Dryad. Commercial clouds are proprietary, but open-source alternatives exist. Building an open-architecture cloud requires understanding best practices for hardware, virtualization, services, and runtimes. Cloud runtimes can run data-file-parallel and dataflow applications at large scale, for problems in areas such as biology, geospatial processing, and clustering. Deterministic annealing, a parallelizable algorithm for data clustering, has been run on clouds. Clouds may change scientific computing by providing controllable, sustainable infrastructure without the need for local clusters.
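
To make the clustering remark concrete, here is a minimal single-process sketch of deterministic annealing clustering in Python. It is an illustration under stated assumptions, not the implementation run on any cloud: the function names (`soft_assign`, `da_cluster`), the squared Euclidean distance, the geometric cooling schedule, and all default parameter values are hypothetical choices. The per-point loop is independent across points and the accumulation is a sum, which is the map-and-reduce shape that makes the algorithm a natural fit for runtimes such as Hadoop or Dryad.

```python
import math
import random


def soft_assign(point, centers, temperature):
    """Return p(k | point), proportional to exp(-squared_distance / temperature)."""
    d2 = [sum((p - c) ** 2 for p, c in zip(point, center)) for center in centers]
    d_min = min(d2)  # subtract the minimum so the largest weight is exactly 1
    weights = [math.exp(-(d - d_min) / temperature) for d in d2]
    total = sum(weights)
    return [w / total for w in weights]


def da_cluster(points, k, t_start=10.0, t_end=0.01, cooling=0.8,
               inner_iters=20, seed=0):
    """Anneal k cluster centers from a high temperature down to t_end.

    All defaults are illustrative assumptions, not tuned values.
    """
    rng = random.Random(seed)
    dim = len(points[0])
    mean = [sum(p[d] for p in points) / len(points) for d in range(dim)]
    # Start every center at the global mean, with a tiny perturbation so the
    # centers can separate once the temperature is low enough.
    centers = [[m + rng.uniform(-1e-3, 1e-3) for m in mean] for _ in range(k)]

    t = t_start
    while t > t_end:
        for _ in range(inner_iters):
            sums = [[0.0] * dim for _ in range(k)]
            mass = [0.0] * k
            # "Map" step: each point's soft assignment is independent of the others.
            for point in points:
                probs = soft_assign(point, centers, t)
                # "Reduce" step: accumulate weighted sums per cluster.
                for j, pj in enumerate(probs):
                    mass[j] += pj
                    for d in range(dim):
                        sums[j][d] += pj * point[d]
            # New center = probability-weighted mean; keep the old center if a
            # cluster received no mass.
            centers = [
                [s / mass[j] for s in sums[j]] if mass[j] > 0 else centers[j]
                for j in range(k)
            ]
        t *= cooling  # geometric cooling schedule (an assumption)
    return centers
```

Calling `da_cluster(points, 2)` on two well-separated groups of 2-D points should return one center near the mean of each group. In a distributed setting, the per-point loop would be partitioned across workers and only the `sums` and `mass` accumulators would need to be combined in each iteration.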