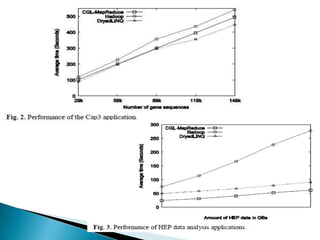

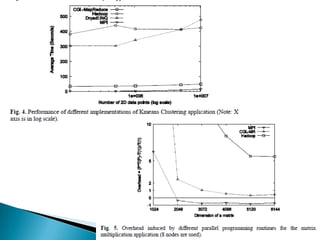

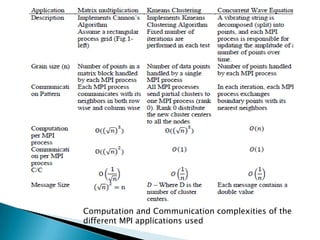

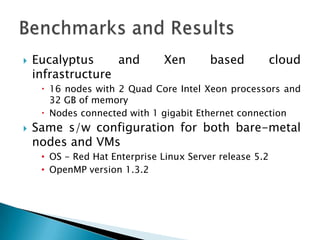

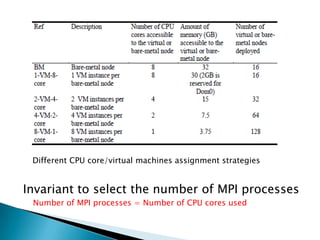

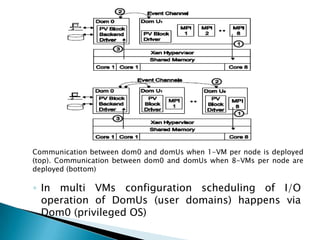

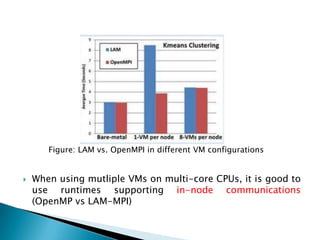

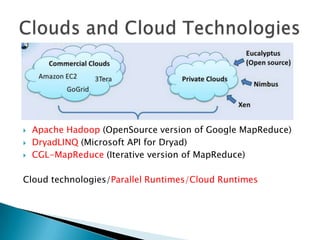

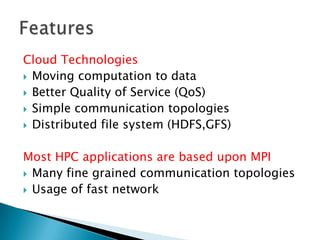

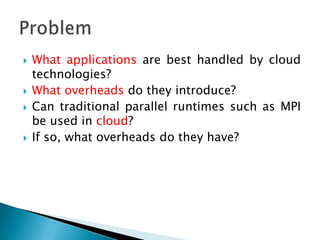

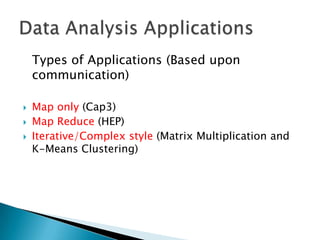

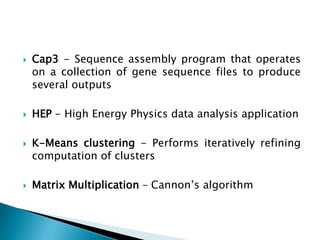

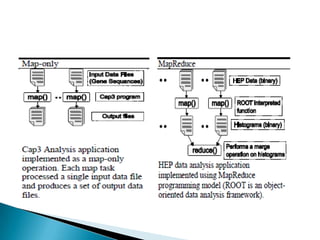

This document discusses using cloud computing technologies for data analysis applications. It presents cloud runtimes such as Hadoop, DryadLINQ, and CGL-MapReduce and compares their features with those of MPI. Applications such as Cap3 (DNA sequence assembly) and HEP (high-energy physics) data analysis are well suited to cloud runtimes, whereas iterative applications incur higher overhead. The results show that as the number of virtual machines (VMs) per node increases, MPI performance degrades by up to 50% compared with bare-metal nodes. Integrating MapReduce and MPI could help improve the performance of some applications on clouds.

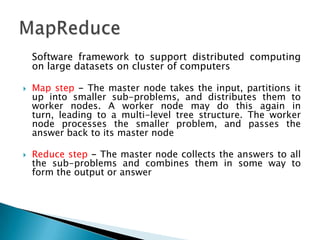

![MapReduce does not support iterative or complex-style applications, so [Fox] built CGL-MapReduce. CGL-MapReduce supports long-running tasks and retains static data in memory across invocations.](https://image.slidesharecdn.com/ppt-comp7850-111217023720-phpapp02/85/HPC-with-Clouds-and-Cloud-Technologies-14-320.jpg)
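To illustrate the idea on this slide, here is a minimal, single-process Python sketch of iterative MapReduce in which long-running map workers load their static data partition once and reuse it across iterations, while only the small variable data (here, cluster centroids) is passed in each round. The workload (1-D k-means clustering) and every name (`MapWorker`, `reduce_fn`, `iterative_mapreduce`) are hypothetical choices for illustration; this is not CGL-MapReduce's actual API or implementation.

```python
from collections import defaultdict


class MapWorker:
    """Hypothetical long-running map task that caches its static partition."""

    def __init__(self, points):
        # Static data: loaded once and kept in memory across all iterations.
        self.points = points

    def map(self, centroids):
        # Emit (index of nearest centroid, (point, 1)) for each cached point.
        for p in self.points:
            idx = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            yield idx, (p, 1)


def reduce_fn(pairs):
    # Sum points and counts for one key and return the new centroid.
    total = sum(p for p, _ in pairs)
    count = sum(c for _, c in pairs)
    return total / count


def iterative_mapreduce(partitions, centroids, max_iters=10):
    # Workers are created once (long-running), not once per iteration.
    workers = [MapWorker(part) for part in partitions]
    centroids = list(centroids)
    for _ in range(max_iters):
        grouped = defaultdict(list)
        # Only the small variable data (centroids) is sent each iteration.
        for w in workers:
            for key, value in w.map(centroids):
                grouped[key].append(value)
        new = list(centroids)
        for key, values in grouped.items():
            new[key] = reduce_fn(values)
        # Exact equality keeps the sketch short; a real system would use a tolerance.
        if new == centroids:
            break
        centroids = new
    return centroids
```

For example, `iterative_mapreduce([[1.0, 2.0], [9.0, 10.0]], [0.0, 5.0])` converges to `[1.5, 9.5]` after two iterations. By contrast, a runtime without cached state would typically reload each partition from storage at the start of every iteration, which is one source of the higher overhead iterative applications see on plain MapReduce.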

![Performance (average running time) of DryadLINQ, Hadoop, CGL-MapReduce, and MPI](https://image.slidesharecdn.com/ppt-comp7850-111217023720-phpapp02/85/HPC-with-Clouds-and-Cloud-Technologies-15-320.jpg)

$$\text{Overhead} = \frac{P \cdot T(P) - T(1)}{T(1)}$$

where $P$ is the number of processes and $T(P)$ is the average running time with $P$ processes.
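The overhead formula can be evaluated directly. The sketch below assumes that `t_p` and `t_1` are average running times measured in the same unit; the function name `parallel_overhead` is hypothetical. An overhead of 0 corresponds to perfect linear speedup ($T(P) = T(1)/P$), and larger values mean more aggregate processor time is spent beyond the sequential run.

```python
def parallel_overhead(p, t_p, t_1):
    """Return (P * T(P) - T(1)) / T(1).

    p   -- number of processes P
    t_p -- running time with P processes, T(P)
    t_1 -- running time with one process, T(1)
    """
    if p < 1 or t_1 <= 0:
        raise ValueError("p must be >= 1 and t_1 must be positive")
    return (p * t_p - t_1) / t_1
```

With hypothetical timings $T(1) = 100$ s and $T(8) = 15$ s, `parallel_overhead(8, 15.0, 100.0)` gives $(8 \cdot 15 - 100)/100 = 0.2$, whereas a perfectly scaling run with $T(8) = 12.5$ s gives 0.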