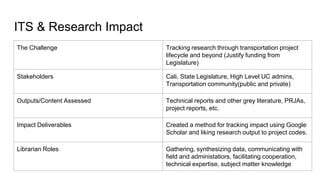

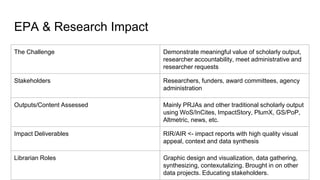

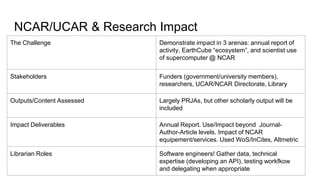

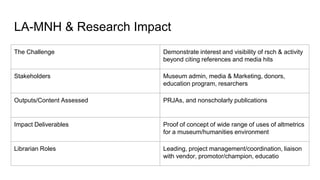

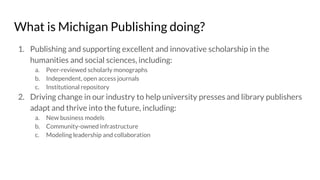

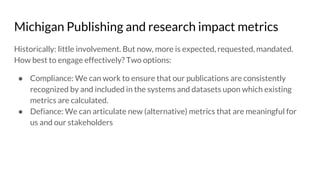

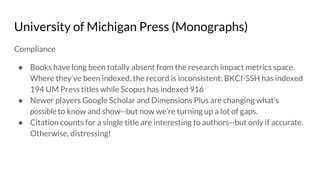

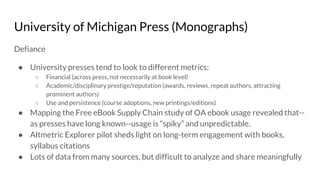

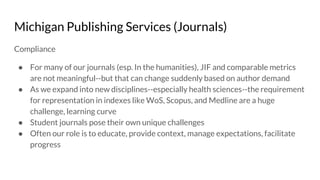

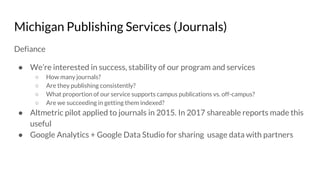

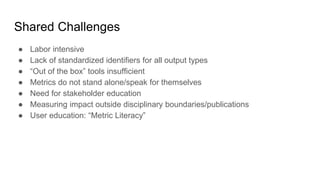

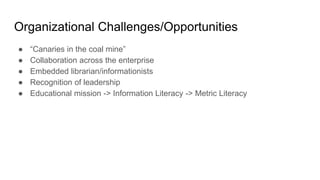

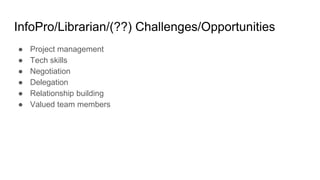

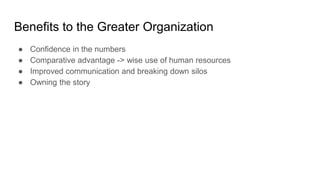

The document presents a series of case studies on the use of scholarly metrics in specialized contexts, addressing the challenges involved and the role of librarians in demonstrating research impact. It highlights the need for tailored metrics that extend beyond traditional academic outputs to serve diverse stakeholders and objectives. The discussion also emphasizes alternative approaches to compliance and defiance in the evolving landscape of research impact measurement, and calls for better data practices and education around metrics.