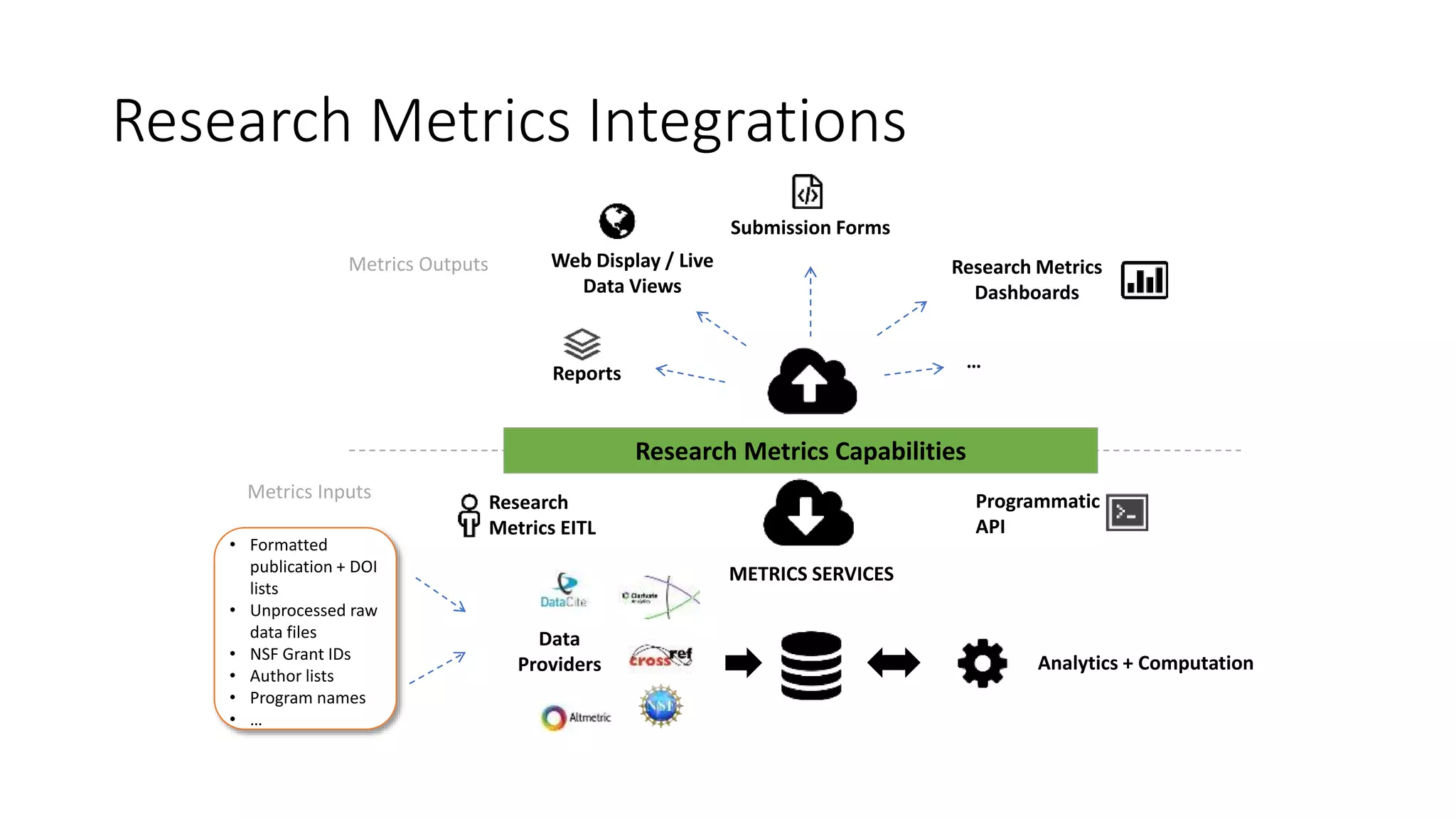

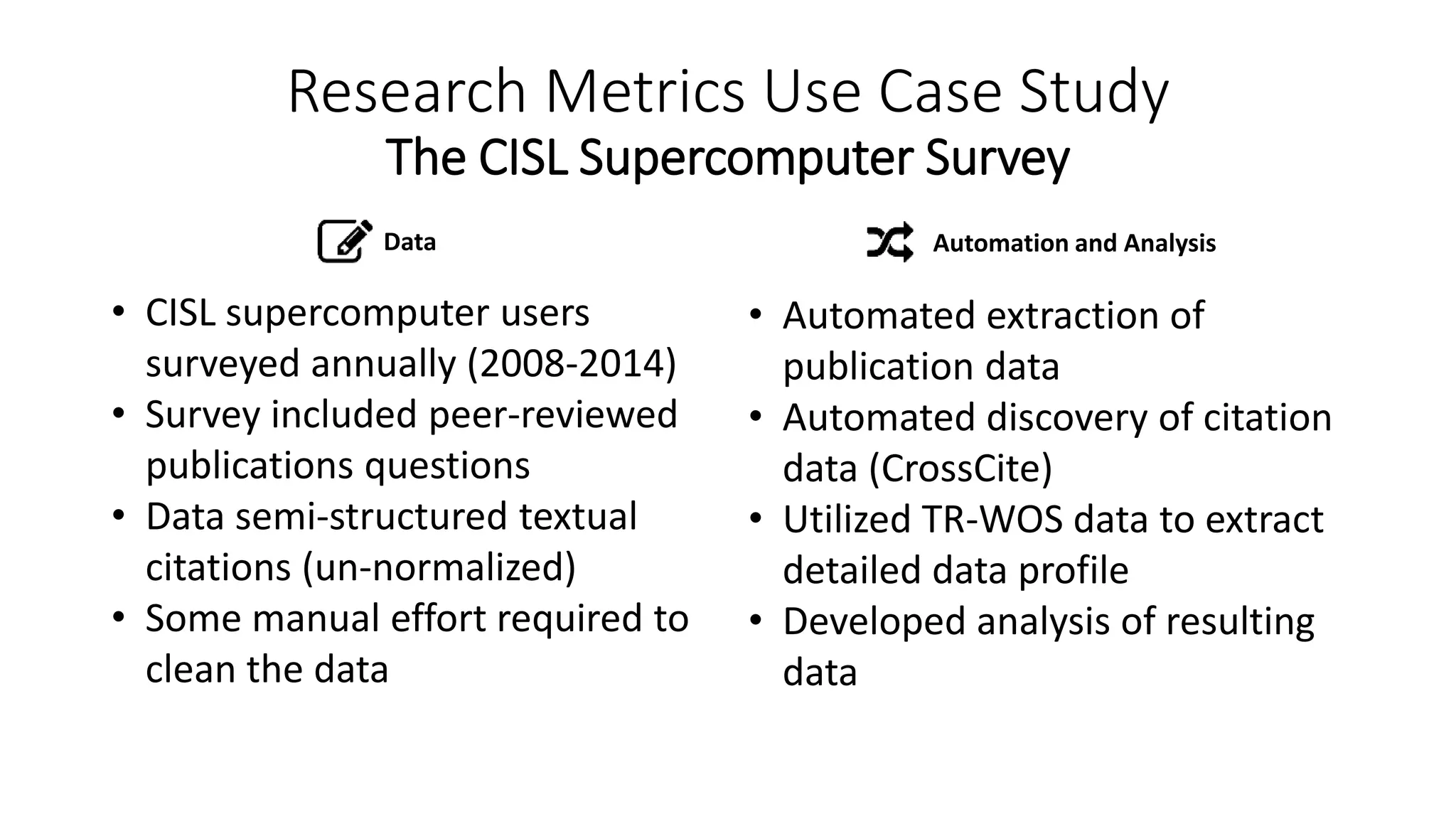

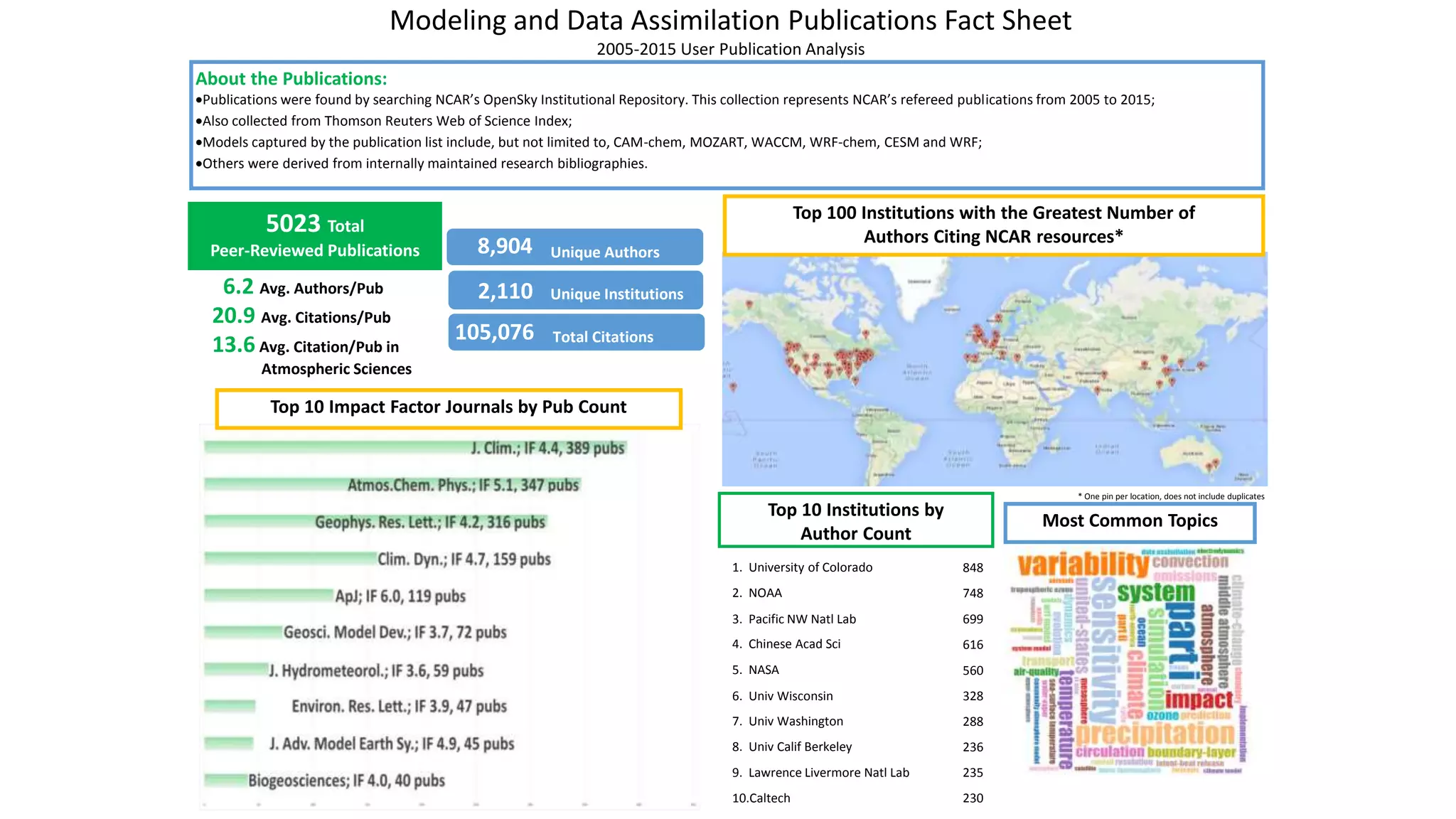

This document examines how research impact can be measured in specialized settings. Drawing on three case studies, it argues that metrics must be tailored to each institution's needs. It describes how research metrics services were developed at the National Center for Atmospheric Research and at the University of Michigan Press, with an emphasis on automation and on working with stakeholders to collect and interpret data. Key takeaways include the need to automate metrics work wherever possible and to extend "metrics" beyond traditional academic publications.