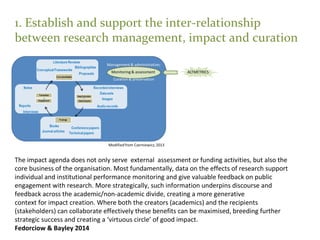

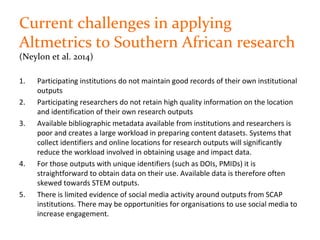

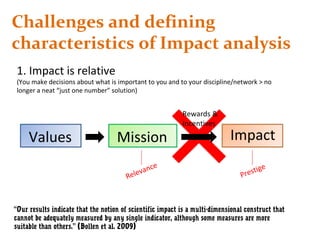

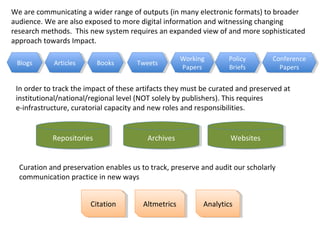

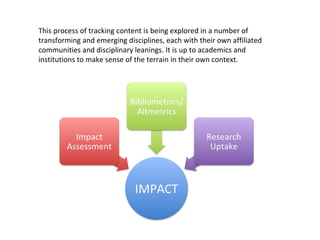

The document discusses the importance of impact analysis and scholarly communication in research. It emphasizes that impact extends beyond traditional citation metrics to include social, financial, and environmental effects. It highlights the difficulty of measuring impact accurately, the need for new approaches such as altmetrics, and the critical role of open access in scholarly communication. To improve impact tracking, institutions must establish supporting infrastructure, curate research outputs effectively, and promote dialogue about impact measurement in the context of Southern African research.

![Time is the central commodity](https://image.slidesharecdn.com/erpaltmetrics05-140613041330-phpapp02/85/Altmetrics-Impact-Analysis-and-Scholarly-Communication-11-320.jpg)

(2) Time is the central commodity

"According to Brody and Harnad (2005), it takes five years for a paper in physics to receive half of the cited-by references that the article will ever acquire. If you want to keep pace with your researchers, you cannot make collection decisions based on five-year old information. […] The biggest problem in using JIF and others is that in today’s research landscape they are lagging indicators." (Michalek et al. 2014)